The idea that all the living things currently found on the Earth descended from one single cell long, long ago runs deep in biology, going all the way back to Darwin himself in On the Origin of Species (1859), which had only one diagram in the whole volume - see Figure 1 down below. But is that really the case? In this posting, I would like to explore the possibility that it is not.

Figure 1 – Darwin's On the Origin of Species (1859) had one single figure, displayed above, that describes the tree of life descending from one single cell, later to be known as the LUCA - the Last Universal Common Ancestor.

The Phylogenetic Tree of Life

Before proceeding further, recall that we now know that there actually are three forms of life on this planet, as first described by Carl Woese in 1977 at my old alma mater, the University of Illinois - the Bacteria, the Archaea and the Eukarya. The Bacteria and the Archaea both use the simple prokaryotic cell architecture, while the Eukarya use the much more complicated eukaryotic cell architecture, and all of the "higher" forms of life that we are familiar with are simply made of aggregations of eukaryotic cells. Even the simple yeasts that make our bread, and get us drunk, consist of very complex eukaryotic cells. The troubling thing is that only an expert could tell the difference between a yeast eukaryotic cell and a human eukaryotic cell because they are so similar, while any schoolchild could easily tell the difference between microscopic images of a prokaryotic bacterial cell and a eukaryotic yeast cell - see Figure 2.

Figure 2 – The prokaryotic cell architecture of the bacteria and archaea is very simple and designed for rapid replication. Prokaryotic cells do not have a nucleus enclosing their DNA. Eukaryotic cells, on the other hand, store their DNA on chromosomes that are isolated in a cellular nucleus. Eukaryotic cells also have a very complex internal structure with a large number of organelles, or subroutine functions, that compartmentalize the functions of life within the eukaryotic cells.

Prokaryotic cells essentially consist of a tough outer cell wall enclosing an inner cell membrane, and they contain a minimum of internal structure. The cell membrane is composed of phospholipids and proteins. The DNA within prokaryotic cells generally floats freely as a large loop, and their ribosomes, used to translate mRNA into proteins, float freely within the entire cell as well. The ribosomes in prokaryotic cells are not attached to membranes, unlike in eukaryotic cells, where many ribosomes are attached to a membrane system called the rough endoplasmic reticulum. The chief advantage of prokaryotic cells is their simple design and their ability to thrive and rapidly reproduce even in very challenging environments, like little AK-47s that still manage to work in environments where modern tanks will fail. Eukaryotic cells, on the other hand, are found in the bodies of all complex organisms, from single-celled yeasts to you and me, and they divide up cell functions amongst a collection of organelles (functional subroutines), such as mitochondria, chloroplasts, Golgi bodies, and the endoplasmic reticulum. Figure 3 depicts Carl Woese's rewrite of Darwin's famous tree of life, and shows that complex forms of life, like you and me, that are based upon the eukaryotic cell architecture, actually spun off from the archaea and not the bacteria. Now archaea and bacteria look nearly identical under a microscope, which is why, for hundreds of years, we thought they were all just bacteria. But in the 1970s Carl Woese discovered that the ribosomal RNA in the ribosomes used to translate mRNA into proteins differed between certain microorganisms that had all been previously lumped together as "bacteria". Carl Woese determined that the lumped together "bacteria" really consisted of two entirely different forms of life - the bacteria and the archaea - see Figure 3. The bacteria and archaea both have cell walls, but use slightly different organic molecules to build them.
Some archaea, particularly the extremophiles that live in harsh conditions, also wrap their DNA around stabilizing histone proteins. Eukaryotes also wrap their DNA around histone proteins to form chromatin and chromosomes - for more on that see: An IT Perspective on the Origin of Chromatin, Chromosomes and Cancer. For that reason, and because of other biochemical features that the archaea and eukaryotes share, it is now thought that the eukaryotes split off from the archaea and not the bacteria.

Figure 3 – In 1977 Carl Woese developed a new tree of life consisting of the Bacteria, the Archaea and the Eukarya. The Bacteria and Archaea use a simple prokaryotic cell architecture, while the Eukarya use the much more complicated eukaryotic cell structure.

The other thing about eukaryotic cells, as opposed to prokaryotic cells, is that eukaryotic cells are HUGE! They are roughly 15,000 times larger by volume than prokaryotic cells! See Figure 4 for a true-scale comparison of the two.

Figure 4 – Not only are eukaryotic cells much more complicated than prokaryotic cells, they are also HUGE!
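A factor of roughly 15,000 is easy to sanity-check with a little arithmetic, because volume grows with the cube of a cell's diameter. The sketch below assumes, purely for illustration, that both cells are spheres, with a typical prokaryote about 1 µm across and a typical eukaryote about 25 µm across; real cells vary widely in both size and shape.

```python
import math

def sphere_volume(diameter_um: float) -> float:
    """Volume of a sphere, in cubic micrometers, from its diameter in micrometers."""
    radius = diameter_um / 2
    return (4 / 3) * math.pi * radius ** 3

# Illustrative diameters only (assumptions): real cells vary widely.
PROKARYOTE_DIAMETER_UM = 1.0
EUKARYOTE_DIAMETER_UM = 25.0

ratio = sphere_volume(EUKARYOTE_DIAMETER_UM) / sphere_volume(PROKARYOTE_DIAMETER_UM)
# The 4/3 and pi factors cancel, leaving (25 / 1) ** 3.
print(f"Volume ratio: {ratio:,.0f}")
```

A 25-fold difference in diameter therefore gives a 25³ = 15,625-fold difference in volume, in line with the figure quoted above.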

Recall that in the Endosymbiosis theory of Lynn Margulis, it is thought that the mitochondria of eukaryotic cells were originally parasitic bacteria that once invaded archaeal prokaryotic cells and took up residence. Certain of those ancient archaeal prokaryotic cells, with their internal bacterial mitochondrial parasites, were then able to survive the parasitic bacterial onslaught, and later went on to form a strong parasitic/symbiotic relationship with them, as all forms of self-replicating information tend to do. Researchers think this is what happened because mitochondria have their own DNA, and that DNA is stored as a loose loop, just as bacteria store their DNA. In The Rise of Complexity in Living Things and Software we explored Nick Lane's contention that it was the arrival of the parasitic/symbiotic prokaryotic mitochondria in eukaryotic cells that provided the necessary energy to produce the very complicated eukaryotic cell architecture.

Originally, Carl Woese proposed that all three Domains diverged from one single line of "progenotes" in the distant past, as depicted in Figure 5 below. Over time, this became the standard model for the early diversification of life on the Earth.

Figure 5 – Originally, Carl Woese proposed that all three domains diverged from a single "progenote". From Phylogenetic Classification and the Universal Tree (1999) by W. Ford Doolittle.

But in later years, he and others, like W. Ford Doolittle, proposed that the three domains diverged from a network of progenotes that shared many genes amongst themselves by way of lateral gene transmission, as depicted in Figure 6 below.

Figure 6 – Some now propose that the three domains diverged from a network of progenotes that shared many genes amongst themselves by way of lateral gene transmission. From Phylogenetic Classification and the Universal Tree (1999) by W. Ford Doolittle.

For more on that see Phylogenetic Classification and the Universal Tree (1999) by W. Ford Doolittle at:

https://pdfs.semanticscholar.org/62d3/c52775621e1809e5fb94d3030a91894cbb9a.pdf

I just finished reading The Common Ancestor of Archaea and Eukarya Was Not an Archaeon (2013) by Patrick Forterre, which is available at:

https://www.hindawi.com/journals/archaea/2013/372396/

This paper calls into question the current idea that the Eukarya Domain arose from an archaeal prokaryotic cell that fused with bacterial prokaryotic cells. In The Common Ancestor of Archaea and Eukarya Was Not an Archaeon, Patrick Forterre contends that the eukaryotic cell architecture actually arose from a third separate line of protoeukaryotic cells that were more complicated than prokaryotic archaeal cells, but simpler than today's complex eukaryotic cells. In this view, modern simple prokaryotic archaeal and bacterial cells evolved from the more complex protoeukaryotic cell by means of simplification in order to occupy high-temperature environments. This is best summed up by the abstract for the above paper:

Abstract

It is often assumed that eukarya originated from archaea. This view has been recently supported by phylogenetic analyses in which eukarya are nested within archaea. Here, I argue that these analyses are not reliable, and I critically discuss archaeal ancestor scenarios, as well as fusion scenarios for the origin of eukaryotes. Based on recognized evolutionary trends toward reduction in archaea and toward complexity in eukarya, I suggest that their last common ancestor was more complex than modern archaea but simpler than modern eukaryotes (the bug in-between scenario). I propose that the ancestors of archaea (and bacteria) escaped protoeukaryotic predators by invading high-temperature biotopes, triggering their reductive evolution toward the “prokaryotic” phenotype (the thermoreduction hypothesis). Intriguingly, whereas archaea and eukarya share many basic features at the molecular level, the archaeal mobilome resembles more the bacterial than the eukaryotic one. I suggest that selection of different parts of the ancestral virosphere at the onset of the three domains played a critical role in shaping their respective biology. Eukarya probably evolved toward complexity with the help of retroviruses and large DNA viruses, whereas similar selection pressure (thermoreduction) could explain why the archaeal and bacterial mobilomes somehow resemble each other.

In the paper, Patrick Forterre goes into great detail discussing the many similarities and differences between the Bacteria, the Archaea and the Eukarya on a biochemical level. While reading the paper, I once again began to appreciate the great difficulties that arise when trying to piece together the early evolution of carbon-based life on the Earth in deep time, with only one example readily at hand to study. I was particularly struck by the many differences between the biochemistry of the Bacteria, the Archaea and the Eukarya that did not seem to jibe with them all coming from a common ancestor - a LUCA or Last Universal Common Ancestor. As a softwarephysicist, I naturally began to think back on the historical evolution of software and of other forms of self-replicating information over time. This led me to wonder whether the Bacteria, the Archaea and the Eukarya really all evolved from a common LUCA. What if the Bacteria, the Archaea and the Eukarya actually represented the vestiges of three separate lines of descent that all independently arose on their own? Perhaps carbon-based life arose many times on the early Earth, and the Bacteria, the Archaea and the Eukarya just represent the last collection of survivors? The differences between the Bacteria, the Archaea and the Eukarya could be due to their separate origins, and their similarities could be due to their converging upon similar biochemical solutions to similar problems.

The Power of Convergence

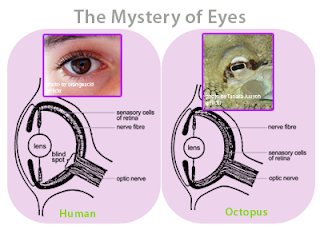

In biology, convergence is the idea that sometimes organisms that are not at all related will come up with very similar solutions to the common problems that they share. For example, the eye has independently evolved at least 40 different times in the past 600 million years, and the camera-like eyes of humans and octopuses are nearly identical in structure, even though each evolved totally independently of the other. Could it be that the complex structures of the Bacteria, the Archaea and the Eukarya also evolved from dead organic molecules independently?

Figure 7 - The eye of a human and the eye of an octopus are nearly identical in structure, but evolved totally independently of each other. As Daniel Dennett pointed out, there are only a certain number of Good Tricks in Design Space and natural selection will drive different lines of descent towards them.

Similarly, in SoftwareBiology and A Proposal For All Practicing Paleontologists we see that the evolution of software over the past 77 years, or 2.4 billion seconds, ever since Konrad Zuse first cranked up his Z3 computer in May of 1941, has closely followed the same path as life on Earth over the past 4.0 billion years, in keeping with Simon Conway Morris’s contention that convergence has played the dominant role in the evolution of life on Earth. As I mentioned above, an oft-cited example of this is the evolution of the complex camera-like human eye. Even Darwin himself had problems with trying to explain how something as complicated as the human eye could have evolved through small incremental changes from some structure that could not see at all. After all, what good is 1% of an eye? As I have often stated in the past, this is not a difficult thing for IT professionals to grasp because we are constantly evolving software on a daily basis through small incremental changes to our applications. However, when we do look back over the years to what our small incremental changes have wrought, it is quite surprising to see just how far our applications have come from their much simpler ancestors, and to realize that it would be very difficult for an outsider to even recognize their ancestral forms. Likewise, with the aid of computers, many researchers in evolutionary biology have shown just how easily a camera-like eye can evolve. Visible photons have an energy of about 1 – 3 eV, which is about the energy of most chemical reactions. Consequently, visible photons are great for stimulating chemical reactions, like the reactions in chlorophyll that turn the energy of visible photons into the chemical energy of carbohydrates, or the chemical reactions of other light-sensitive molecules that form the basis of sight. In a computer simulation, the eye can simply begin as a flat eyespot of photosensitive cells, which we can draw as a patch like this: |.
In the next step, the eyespot forms a slight depression, like the beginnings of the letter C, which gives the simulated eye some sense of image directionality because the light from a distant source will hit different sections of the photosensitive cells on the back part of the C. As the depression deepens and the hole in the C gets smaller, the incipient eye begins to behave like a pinhole camera that forms a clearer, but dimmer, image on the back part of the C. Next, a transparent covering seals the hole of the pinhole camera to provide some protection for the sensitive cells at the back of the eye, and a transparent humor fills the eye to keep its shape: C). Eventually, the transparent covering thickens into a flexible lens under the protective covering that can be used to focus light and to allow for a wider entry hole that provides a brighter image, essentially decreasing the f-stop of the eye like in a camera: C0).
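The trade-offs in these steps can be put into rough numbers with simple geometric optics. This is a minimal sketch under stated assumptions: it ignores diffraction and uses dimensionless, illustrative sizes. For a pinhole of diameter a at the front of a cup of depth d, a distant point of light spreads into a spot about a wide, so the angular blur is roughly a/d radians, while the light admitted grows with the area of the hole, roughly a². Once a lens takes over the focusing, the hole can widen, and the f-number N = f/D (focal length over aperture diameter) governs brightness, which scales as 1/N². The function names are hypothetical.

```python
def pinhole_blur(aperture: float, depth: float) -> float:
    """Approximate angular blur (radians) of a pinhole eye: small-angle geometry, no diffraction."""
    return aperture / depth

def light_gathered(aperture: float) -> float:
    """Relative light admitted by a circular opening, proportional to its area."""
    return aperture ** 2

def f_number(focal_length: float, aperture: float) -> float:
    """f-number N = f / D of a lens eye."""
    return focal_length / aperture

def relative_brightness(n: float) -> float:
    """Image brightness scales as 1 / N**2."""
    return 1 / n ** 2

# Narrowing the hole of a cup eye (depth 2.0) from 1.0 to 0.5 halves the blur...
sharper = pinhole_blur(1.0, 2.0) / pinhole_blur(0.5, 2.0)
# ...but cuts the light admitted to a quarter.
dimmer = light_gathered(1.0) / light_gathered(0.5)
# With a lens, widening the opening from f/8 to f/2 brightens the image.
brighter = relative_brightness(f_number(8.0, 4.0)) / relative_brightness(f_number(8.0, 1.0))

print(f"{sharper}x sharper, {dimmer}x dimmer, lens gain {brighter}x")
```

The pinhole stage buys sharpness at the cost of light; the lens stage is what lets the eye have both.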

So it is easy to see how a 1% eye could evolve into a modern complex eye through small incremental changes that always improve the visual acuity of the eye. Such computer simulations predict that a camera-like eye could evolve in as little as 500,000 years.

Figure 8 – Computer simulations of the evolution of a camera-like eye.
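Part of why small steps add up so quickly is that improvements compound. As a purely illustrative assumption, suppose each step improves some measure of the eye's performance by 1%. The number of steps needed to multiply that measure by a factor R is then the smallest n with 1.01ⁿ ≥ R, or n = ⌈ln R / ln 1.01⌉. The factors below are hypothetical, chosen only to show the scale.

```python
import math

def steps_to_improve(factor: float, step: float = 0.01) -> int:
    """Smallest n such that (1 + step) ** n >= factor, i.e. compounding improvements of size `step`."""
    n = math.ceil(math.log(factor) / math.log1p(step))
    # Nudge n to correct any floating-point rounding in the logarithms.
    while (1 + step) ** n < factor:
        n += 1
    while n > 0 and (1 + step) ** (n - 1) >= factor:
        n -= 1
    return n

# Hypothetical overall improvement factors, each reached by 1% steps.
for factor in (2, 10, 100, 1000):
    print(f"{factor:>5}x improvement: {steps_to_improve(factor)} steps of 1%")
```

Under this assumption, even a thousandfold improvement takes fewer than 700 steps of 1%.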

Now the concept of the eye has independently evolved at least 40 different times in the past 600 million years, so there are many examples of “living fossils” showing the evolutionary path. In Figure 9 below, we see that all of the steps in the computer simulation of Figure 8 can be found today in various mollusks. Notice that the human-like eye on the far right is really that of an octopus, not a human, again demonstrating the power of natural selection to converge upon identical solutions by organisms with separate lines of descent.

Figure 9 – There are many living fossils that have left behind signposts along the trail to the modern camera-like eye. Notice that the human-like eye on the far right is really that of an octopus.

Could it be that the very similar unicellular designs of the Bacteria, the Archaea and the Protoeukarya represent yet another example of convergence bringing forth very complex structures multiple times, that of membrane-based living cells, from the extant organic molecules of the early Earth? I know that is a pretty wild idea, but think of the implications. It would mean that living things were nearly assured to emerge from organic molecules given the right conditions, and that simple unicellular life in our Universe should be quite common. In The Bootstrapping Algorithm of Carbon-Based Life I covered Dave Deamer's and Bruce Damer's new Hot Spring Origins Hypothesis model for the origin of carbon-based life on the early Earth. Perhaps such a model is not limited to producing only a single type of membrane-based unicellular life. In fact, I would contend that its Bootstrapping Algorithm might indeed produce a number of such types. Perhaps a little softwarephysics might shed some light on the subject.

Some Help From Softwarephysics

Recall that one of the fundamental findings of softwarephysics is that carbon-based life and software are both forms of self-replicating information, and that both have converged upon similar solutions to combat the second law of thermodynamics in a highly nonlinear Universe. For biologists, the value of softwarephysics is that software has been evolving about 100 million times faster than living things over the past 77 years, or 2.4 billion seconds, ever since Konrad Zuse first cranked up his Z3 computer in May of 1941, and the evolution of software over that period of time is the only history of a form of self-replicating information that has actually been recorded by human history. In fact, the evolutionary history of software has all occurred within a single human lifetime, and many of those humans are still alive today to testify as to what actually happened, something that those working on the origin of life on the Earth and its early evolution can only try to imagine. Again, in softwarephysics, we define self-replicating information as:
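The conversion from 77 years to about 2.4 billion seconds is quick to check. This sketch assumes an average year of 365.25 days, which absorbs the leap days.

```python
SECONDS_PER_DAY = 24 * 60 * 60
DAYS_PER_YEAR = 365.25  # assumed average (Julian) year, including leap days

seconds = 77 * DAYS_PER_YEAR * SECONDS_PER_DAY
print(f"77 years is about {seconds:.2e} seconds")
```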

Self-Replicating Information – Information that persists through time by making copies of itself or by enlisting the support of other things to ensure that copies of itself are made.

The Characteristics of Self-Replicating Information

All forms of self-replicating information have some common characteristics:

1. All self-replicating information evolves over time through the Darwinian processes of inheritance, innovation and natural selection, which endows self-replicating information with one telling characteristic – the ability to survive in a Universe dominated by the second law of thermodynamics and nonlinearity.

2. All self-replicating information begins spontaneously as a parasitic mutation that obtains energy, information and sometimes matter from a host.

3. With time, the parasitic self-replicating information takes on a symbiotic relationship with its host.

4. Eventually, the self-replicating information becomes one with its host through the symbiotic integration of the host and the self-replicating information.

5. Ultimately, the self-replicating information replaces its host as the dominant form of self-replicating information.

6. Most hosts are also forms of self-replicating information.

7. All self-replicating information has to be a little bit nasty in order to survive.

8. The defining characteristic of self-replicating information is the ability of self-replicating information to change the boundary conditions of its utility phase space in new and unpredictable ways by means of exapting current functions into new uses that change the size and shape of its particular utility phase space. See Enablement - the Definitive Characteristic of Living Things for more on this last characteristic.
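The first characteristic, evolution through inheritance, innovation and natural selection, can be illustrated with a toy simulation in the spirit of Richard Dawkins's "weasel" program. In this sketch, which is illustrative only, a string copies itself many times (inheritance) with occasional copying errors (innovation), and only the fittest string survives to be copied again (selection). The target string, mutation rate and population size are arbitrary assumptions, and a fixed target is the main simplification: real natural selection has no goal in mind.

```python
import random
import string

ALPHABET = string.ascii_uppercase + " "
TARGET = "SELF REPLICATING INFORMATION"  # hypothetical stand-in for a fitness peak

def fitness(candidate: str) -> int:
    """Number of characters that already match the target."""
    return sum(c == t for c, t in zip(candidate, TARGET))

def copy_with_errors(parent: str, rng: random.Random, rate: float) -> str:
    """Inheritance plus innovation: copy each character, occasionally mistyping it."""
    return "".join(rng.choice(ALPHABET) if rng.random() < rate else c for c in parent)

def evolve(seed: int = 1, population: int = 100, rate: float = 0.04, max_generations: int = 10_000):
    rng = random.Random(seed)
    parent = "".join(rng.choice(ALPHABET) for _ in TARGET)
    for generation in range(max_generations):
        if parent == TARGET:
            return generation, parent
        offspring = [copy_with_errors(parent, rng, rate) for _ in range(population)]
        # Selection: the fittest string among the copies and the parent survives.
        parent = max(offspring + [parent], key=fitness)
    return max_generations, parent

generations, result = evolve()
print(f"reached {result!r} after {generations} generations")
```

Because the parent is kept in the pool, fitness can never decrease, so the copying errors that happen to help accumulate generation after generation.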

So far we have seen 5 waves of self-replicating information sweep across the Earth, with each wave greatly reworking the surface and near subsurface of the planet as it came to predominance:

1. Self-replicating autocatalytic metabolic pathways of organic molecules

2. RNA

3. DNA

4. Memes

5. Software

Some object to the idea of memes and software being forms of self-replicating information because currently, both are products of the human mind. But I think that objection stems from the fact that most people simply do not consider themselves to be a part of the natural world. Instead, most people consciously or subconsciously consider themselves to be a supernatural and immaterial spirit that is temporarily haunting a carbon-based body. Now, in order for evolution to take place, we need all three Darwinian processes at work – inheritance, innovation and natural selection. And that is the case for all forms of self-replicating information, including carbon-based life, memes and software. Currently, software is being written and maintained by human programmers, but that will likely change in the next 10 – 50 years when the Software Singularity occurs and AI software will be able to write and maintain software better than a human programmer. Even so, one must realize that human programmers are also just machines with a very complicated and huge neural network of neurons that have been trained with very advanced Deep Learning techniques to code software. Nobody learned how to code software sitting alone in a dark room. All programmers inherited the memes for writing software from teachers, books, other programmers or by looking at the code of others. Also, all forms of selection are "natural" unless they are made by supernatural means. So a programmer pursuing bug-free software by means of trial and error is no different than a cheetah deciding upon which gazelle in a herd to pursue.

Software is now rapidly becoming the dominant form of self-replicating information on the planet and is having a major impact on mankind as it comes to predominance. So we are now living in one of those very rare times when a new form of self-replicating information, in the form of software, is coming to predominance, and, as noted above, its recorded evolutionary history presents biologists with an invaluable opportunity. For more on that see: A Brief History of Self-Replicating Information.

To gain some insights, let us take a look at the origin of software and of certain memes to see if they had a common LUCA, or if they independently arose several times instead and then later merged. The origin of human languages and writing will serve as our example of the origin of a class of memes. As Daniel Dennett pointed out, languages are simply memes that you can speak. Writing is just a meme for recording memes.

The Rise of Software

Currently, we are witnessing one of those very rare moments in time when a new form of self-replicating information, in the form of software, is coming to dominance. Software is now so ubiquitous that it seems like the whole world is immersed in a Software Universe of our own making, surrounded by PCs, tablets, smartphones and the software now embedded in most of mankind's products. In fact, I am now quite accustomed to sitting with audiences of younger people who are completely engaged with their "devices" before, during and after a performance. This may seem like a very recent development in the history of mankind, but in Crocheting Software we saw that crochet patterns are actually forms of software that date back to the early 19th century! In Crocheting Software we also saw that the origin of computer software was such a hodge-podge of precursors, false starts, and failed attempts that it is nearly impossible to pinpoint an exact date for its origin, but for the purposes of softwarephysics I have chosen May of 1941, when Konrad Zuse first cranked up his Z3 computer, as the starting point for modern software. Zuse wanted to use his Z3 computer to perform calculations for aircraft designs that were previously done manually in a very tedious manner. In the discussion below, I will first outline a brief history of the evolution of hardware technology to explain how we got to this state, but it is important to keep in mind that it was the relentless demands of software for more and more memory and CPU-cycles over the years that really drove the exponential explosion of hardware capability. I hope to show that software independently arose many times over the years, using many differing hardware technologies. Self-replicating information is very opportunistic, and will exapt whatever hardware happens to be available at the time.
Four billion years ago, carbon-based life exapted the extant organic molecules and the naturally occurring geochemical cycles of the day in order to bootstrap itself into existence, and that is what software has been doing for the past 2.4 billion seconds on the Earth. As we briefly cover the evolutionary history of computer hardware down below, please keep in mind that for each new generation of machines, the accompanying software essentially had to arise independently all over again, because each new machine had a unique instruction set, so an executable program written for one computer could not run on another. As IT professionals, writing and supporting software, and as end-users, installing and using software, we are all essentially software enzymes caught up in a frantic interplay of self-replicating information. Software is currently domesticating our minds to churn out ever more software, of ever-increasing complexity, and this will likely continue at an ever-accelerating pace until one day software finally breaks free and begins to generate itself using AI and machine learning techniques. For more details on the evolutionary history of software see the SoftwarePaleontology section of SoftwareBiology. See Software Embryogenesis for a description of the software architecture of a modern high-volume corporate website in action, just prior to the current Cloud Computing Revolution that we are now experiencing.
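The instruction-set problem can be made concrete with two toy machines. Both are hypothetical: each reads the same list of numeric opcodes on a tiny stack machine, but they assign different meanings to the same numbers. A program "compiled" for one therefore does something entirely different, or simply crashes, on the other. Real instruction sets are vastly richer, but the incompatibility works the same way.

```python
from typing import Dict, List

# Two hypothetical machines: same opcode numbers, different meanings.
MACHINE_A: Dict[int, str] = {1: "PUSH", 2: "ADD", 3: "HALT"}
MACHINE_B: Dict[int, str] = {1: "ADD", 2: "PUSH", 3: "HALT"}

def run(program: List[int], opcodes: Dict[int, str]) -> List[int]:
    """Execute numeric opcodes on a tiny stack machine and return the final stack."""
    stack: List[int] = []
    pc = 0  # program counter
    while pc < len(program):
        op = opcodes.get(program[pc])
        if op is None:
            raise ValueError(f"illegal instruction {program[pc]} at address {pc}")
        if op == "PUSH":
            pc += 1
            stack.append(program[pc])  # the next word is the value to push
        elif op == "ADD":
            if len(stack) < 2:
                raise RuntimeError(f"stack underflow at address {pc}")
            b, a = stack.pop(), stack.pop()
            stack.append(a + b)
        elif op == "HALT":
            break
        pc += 1
    return stack

# "Compiled" for machine A: PUSH 2, PUSH 3, ADD, HALT.
program = [1, 2, 1, 3, 2, 3]
print("machine A:", run(program, MACHINE_A))
try:
    print("machine B:", run(program, MACHINE_B))
except (RuntimeError, ValueError) as err:
    print("machine B:", err)
```

On machine A the program adds 2 and 3; on machine B its very first word is read as ADD on an empty stack, and the program fails immediately.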

A Brief Evolutionary History of Computer Hardware

It all started back in May of 1941 when Konrad Zuse first cranked up his Z3 computer. The Z3 was the world's first working programmable, fully automatic digital computer, and was built with 2400 electromechanical relays that were used to perform the switching operations that all computers use to store and process information. To build a computer, all you need is a large network of interconnected switches that can switch each other on and off in a coordinated manner. Switches can be in one of two states, either open (off) or closed (on), and we can use those two states to store the binary digits “0” or “1”. By teaming a number of switches together in open (off) or closed (on) states, we can store even larger binary numbers, like “01100100” = 100. We can also group the switches into logic gates that perform logical operations. For example, in Figure 10 below we see an AND gate composed of two switches A and B. Both switches A and B must be closed in order for the light bulb to turn on. If either switch A or B is open, the light bulb will not light up.

Figure 10 – An AND gate can be simply formed from two switches. Both switches A and B must be closed, in a state of “1”, in order to turn the light bulb on.
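Both ideas can be sketched by treating each switch as a Python boolean (closed = True = 1, open = False = 0). A row of switches read as binary digits stores a number, and two switches in series behave as an AND gate. The function names are illustrative, not taken from any hardware library.

```python
from typing import Sequence

def switches_to_number(switches: Sequence[bool]) -> int:
    """Read a row of switches (most significant first) as an unsigned binary number."""
    value = 0
    for closed in switches:
        value = value * 2 + (1 if closed else 0)
    return value

def and_gate(a: bool, b: bool) -> bool:
    """Two switches in series: current reaches the bulb only if both are closed."""
    return a and b

row = [c == "1" for c in "01100100"]
print(switches_to_number(row), int("01100100", 2))  # both read the same binary number

for a in (False, True):
    for b in (False, True):
        print(int(a), int(b), "->", "bulb on" if and_gate(a, b) else "bulb off")
```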

Additional logic gates can be formed from other combinations of switches as shown in Figure 11 below. It takes about 2 - 8 switches to create each of the various logic gates shown below.

Figure 11 – Additional logic gates can be formed from other combinations of 2 – 8 switches.
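The other gates are just as easy to model, and wiring them together shows how pure logic can do arithmetic. This sketch builds NOT, OR, NAND, NOR and XOR out of Python booleans, combines them into a full adder, and chains full adders into a ripple-carry adder, one standard way of adding binary numbers with gates. The particular decomposition is an illustrative choice; real chips use many different circuit designs.

```python
from typing import Tuple

def not_gate(a: bool) -> bool:
    return not a

def and_gate(a: bool, b: bool) -> bool:
    return a and b

def or_gate(a: bool, b: bool) -> bool:
    return a or b

def nand_gate(a: bool, b: bool) -> bool:
    return not_gate(and_gate(a, b))

def nor_gate(a: bool, b: bool) -> bool:
    return not_gate(or_gate(a, b))

def xor_gate(a: bool, b: bool) -> bool:
    # True when a or b is true, but not both.
    return and_gate(or_gate(a, b), nand_gate(a, b))

def full_adder(a: bool, b: bool, carry_in: bool) -> Tuple[bool, bool]:
    """Add three bits using only gates; return (sum_bit, carry_out)."""
    partial = xor_gate(a, b)
    sum_bit = xor_gate(partial, carry_in)
    carry_out = or_gate(and_gate(a, b), and_gate(partial, carry_in))
    return sum_bit, carry_out

def ripple_add(x: int, y: int, width: int = 8) -> int:
    """Add two unsigned integers bit by bit, passing each carry to the next full adder."""
    carry = False
    total = 0
    for i in range(width):
        a = bool((x >> i) & 1)
        b = bool((y >> i) & 1)
        s, carry = full_adder(a, b, carry)
        total |= int(s) << i
    return total | (int(carry) << width)  # the final carry becomes the top bit

print(ripple_add(100, 55))
```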

Once you can store binary numbers with switches and perform logical operations upon them with logic gates, you can build a computer that performs calculations on numbers. To process text, like names and addresses, we simply associate each letter of the alphabet with a binary number, like in the ASCII code set, where A = “01000001” and Z = “01011010”, and then process the associated binary numbers.
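Python's built-in `ord` and `chr` functions expose exactly this character-to-number mapping, so encoding text as 8-bit binary strings, and decoding it again, takes only a few lines. The helper names are illustrative.

```python
from typing import List

def encode_ascii(text: str) -> List[str]:
    """Encode each character as its 8-bit ASCII code, written as a string of 0s and 1s."""
    codes = []
    for ch in text:
        if ord(ch) > 127:
            raise ValueError(f"{ch!r} is not an ASCII character")
        codes.append(format(ord(ch), "08b"))
    return codes

def decode_ascii(codes: List[str]) -> str:
    """Turn 8-bit binary strings back into characters."""
    return "".join(chr(int(code, 2)) for code in codes)

print(encode_ascii("AZ"))  # ['01000001', '01011010']
print(decode_ascii(encode_ascii("HELLO WORLD")))
```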

Figure 12 – Konrad Zuse with a reconstructed Z3 in 1961.

Figure 13 – Block diagram of the Z3 architecture.

The electrical relays used by the Z3 were originally meant for switching telephone conversations. Closing one relay allowed current to flow to another relay’s coil, causing that relay to close as well.

Figure 14 – The Z3 was built using 2400 electrical relays, originally meant for switching telephone conversations.

Figure 15 – The electrical relays used by the Z3 for switching were very large, very slow and used a great deal of electricity which generated a great deal of waste heat.

Now I was born about 10 years later in 1951, a few months after the United States government installed its very first commercial computer, a UNIVAC I, for the Census Bureau on June 14, 1951. The UNIVAC I was 25 feet by 50 feet in size, and contained 5,600 vacuum tubes, 18,000 crystal diodes and 300 relays with a total memory of 12 K. From 1951 to 1958 a total of 46 UNIVAC I computers were built and installed.

Figure 16 – The UNIVAC I was very impressive on the outside.

Figure 17 – But the UNIVAC I was a little less impressive on the inside.

Figure 18 – Most of the electrical relays of the Z3 were replaced with vacuum tubes in the UNIVAC I, which were also very large, used lots of electricity and generated lots of waste heat too, but the vacuum tubes were 100,000 times faster than relays.

Figure 19 – Vacuum tubes contain a hot negative cathode that glows red and boils off electrons. The electrons are attracted to the cold positive anode plate, but there is a control grid electrode between the cathode and the anode plate. By changing the voltage on the grid, the vacuum tube can control the flow of electrons like the handle of a faucet. The grid voltage can be adjusted so that the electron flow is full blast, a trickle, or completely shut off, and that is how a vacuum tube can be used as a switch.

In the 1960s the vacuum tubes were replaced by discrete transistors and in the 1970s the discrete transistors were replaced by thousands of transistors on a single silicon chip. Over time, the number of transistors that could be put onto a silicon chip increased dramatically, and today, the silicon chips in your personal computer hold many billions of transistors that can be switched on and off in about 10⁻¹⁰ seconds. Now let us look at how these transistors work.

There are many different kinds of transistors, but I will focus on the FET (Field Effect Transistor) that is used in most silicon chips today. A FET transistor consists of a source, gate and a drain. The whole affair is laid down on a very pure silicon crystal using a multi-step process that relies upon photolithography to engrave the circuit elements. Silicon lies directly below carbon in the periodic table because both silicon and carbon have 4 electrons in their outer shell, which leaves them 4 electrons short of a full shell of 8. This makes silicon a semiconductor. Pure silicon is not very electrically conductive, but by doping the silicon crystal with very small amounts of impurities, it is possible to create silicon that has a surplus of free electrons. This is called N-type silicon. Similarly, it is possible to dope silicon with small amounts of impurities that create a shortage of electrons, leaving behind positively charged "holes", which produces P-type silicon. To make an FET transistor you simply use a photolithographic process to create two N-type silicon regions in a substrate of P-type silicon. Between the N-type regions lies a gate, which controls the flow of electrons between the source and drain regions, like the grid in a vacuum tube. When a positive voltage is applied to the gate, it attracts the few free electrons in the P-type substrate and repels its positive holes. This creates a conductive channel between the source and drain which allows a current of electrons to flow.

Figure 20 – A FET transistor consists of a source, gate and drain. When a positive voltage is applied to the gate, a current of electrons can flow from the source to the drain and the FET acts like a closed switch that is “on”. When there is no positive voltage on the gate, no current can flow from the source to the drain, and the FET acts like an open switch that is “off”.

Figure 21 – When there is no positive voltage on the gate, the FET transistor is switched off, and when there is a positive voltage on the gate the FET transistor is switched on. These two states can be used to store a binary “0” or “1”, or can be used as a switch in a logic gate, just like an electrical relay or a vacuum tube.

Figure 22 – Above is a plumbing analogy that uses a faucet or valve handle to simulate the actions of the source, gate and drain of an FET transistor.
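To make the switch idea a little more concrete, here is a minimal Python sketch, not a model of any real chip, that treats an FET as an idealized voltage-controlled switch and wires two of them in series to form a NAND gate, from which NOT, AND and OR can then be built. The 0.7-volt threshold, the 1.0-volt "high" level and all of the function names are hypothetical, and real CMOS gates pair N-type and P-type FETs rather than relying on the simple pull-up assumed here.

```python
# Idealized n-channel FET used as a switch.
# ASSUMPTION: the threshold and voltage levels are illustrative numbers only.
THRESHOLD_VOLTS = 0.7
HIGH_VOLTS = 1.0
LOW_VOLTS = 0.0


def fet_conducts(gate_volts: float) -> bool:
    """The FET is 'on' (a closed switch) when the gate voltage exceeds the threshold."""
    return gate_volts > THRESHOLD_VOLTS


def to_volts(bit: int) -> float:
    """Map a binary 0 or 1 onto a gate voltage."""
    return HIGH_VOLTS if bit else LOW_VOLTS


def nand(a: int, b: int) -> int:
    """Two FETs in series pull the output down to 0 only when both are 'on'.

    ASSUMPTION: otherwise a simple pull-up (not modeled) holds the output at 1.
    """
    path_to_ground = fet_conducts(to_volts(a)) and fet_conducts(to_volts(b))
    return 0 if path_to_ground else 1


# Every other logic gate can be built out of NAND gates alone.
def not_(a: int) -> int:
    return nand(a, a)


def and_(a: int, b: int) -> int:
    return not_(nand(a, b))


def or_(a: int, b: int) -> int:
    return nand(not_(a), not_(b))


if __name__ == "__main__":
    print(" a b | NAND AND OR")
    for a in (0, 1):
        for b in (0, 1):
            print(f" {a} {b} |   {nand(a, b)}    {and_(a, b)}   {or_(a, b)}")
```

Running it prints a truth table whose NAND column reads 1, 1, 1, 0: the output only drops to 0 when both transistor switches are closed.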

The CPU chip in your computer consists largely of transistors wired into logic gates, but your computer also has a number of memory chips whose transistors are either “on” or “off” and can therefore store binary numbers, or text that has been encoded as binary numbers. The next thing we need is a way to coordinate the billions of transistor switches in your computer. That is accomplished with a system clock. My current laptop has a clock speed of 2.5 GHz, which means it ticks 2.5 billion times each second. Each time the system clock on my computer ticks, it allows all of the billions of transistor switches on my laptop to switch on, off, or stay the same in a coordinated fashion. So while your computer is running, it is actually turning on and off billions of transistors billions of times each second – and all for a few hundred dollars!
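The role of the system clock can be sketched in a few lines of Python. This is a toy, assuming a hypothetical 4-bit counter rather than anything inside a real CPU: between ticks the logic gates work out the next value from the current one, and on each tick every stored bit latches its new value at the same moment. The first lines simply do the arithmetic for the 2.5 GHz clock mentioned above, which works out to one tick every 0.4 nanoseconds.

```python
# Arithmetic for a 2.5 GHz system clock: how long is one tick?
CLOCK_HZ = 2.5e9
PERIOD_NS = 1e9 / CLOCK_HZ  # 0.4 nanoseconds between ticks


def next_value(bits):
    """Combinational logic: add 1 to a binary number stored least significant bit first."""
    carry = 1
    result = []
    for bit in bits:
        result.append(bit ^ carry)  # sum bit of a half adder
        carry = bit & carry         # carry bit of a half adder
    return result  # a carry out of the top bit is dropped, so the counter wraps around


def run_counter(ticks, width=4):
    """Clock a width-bit counter for `ticks` ticks and record its value after each one."""
    state = [0] * width
    history = []
    for _ in range(ticks):
        pending = next_value(state)  # the gates settle on the next value between ticks
        state = pending              # on the tick, all bits latch that value together
        history.append(sum(bit << i for i, bit in enumerate(state)))
    return history


if __name__ == "__main__":
    print(f"one tick every {PERIOD_NS:.1f} ns")
    print(run_counter(18))
```

With a 4-bit width the counter climbs from 1 to 15, wraps to 0 on the sixteenth tick, and starts over.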

Computer memory was another factor greatly affecting the origin and evolution of software over time. Strangely, the original Z3 used electromechanical switches to store its working memory, much as we do today with transistors on memory chips, but that made computer memory very expensive and very limited, and this remained true all through the 1950s and 1960s. Prior to 1955, computers like the UNIVAC I, which first appeared in 1951, used mercury delay lines, each consisting of a tube of mercury about 3 inches long. Each mercury delay line could store about 18 bits of computer memory as sound waves that were continuously refreshed by quartz piezoelectric transducers at each end of the tube. Mercury delay lines were huge and very expensive per bit, so computers like the UNIVAC I only had a memory of 12 K (98,304 bits).

Figure 23 – Prior to 1955, huge mercury delay lines built from tubes of mercury that were about 3 inches long were used to store bits of computer memory. A single mercury delay line could store about 18 bits of computer memory as a series of sound waves that were continuously refreshed by quartz piezoelectric transducers at each end of the tube.
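The recirculating delay line described above behaved less like a modern memory chip and more like a loop of pulses. Here is a minimal Python sketch under simplifying assumptions: one idealized line holding the 18 bits mentioned in the text, one bit moving past the transducer per time step, and hypothetical class and method names. It shows why access time depended on where a bit happened to be in the loop.

```python
from collections import deque

BITS_PER_LINE = 18  # capacity of one delay line, as given in the text


class DelayLine:
    """Toy recirculating delay-line memory.

    Bits travel down the tube as pulses. Whatever pulse reaches the output
    transducer is read, amplified and fed back in at the input end, so the
    stored pattern keeps circulating. A bit can only be read or rewritten at
    the moment it passes the transducer.
    """

    def __init__(self, size=BITS_PER_LINE):
        self.size = size
        self.tube = deque([0] * size)  # tube[0] is the pulse at the output transducer
        self.position = 0              # address of the bit now at the transducer

    def tick(self, write=None):
        """Advance one bit time, optionally overwriting the passing bit; return that bit."""
        bit = self.tube.popleft()
        if write is not None:
            bit = write
        self.tube.append(bit)  # refresh: re-inject the pulse at the input end
        self.position = (self.position + 1) % self.size
        return bit

    def access(self, address, value=None):
        """Wait until `address` reaches the transducer, then read it (or write `value`).

        Returns the bit and the number of bit times spent waiting.
        """
        if not 0 <= address < self.size:
            raise ValueError("address out of range")
        waited = 0
        while self.position != address:
            self.tick()
            waited += 1
        return self.tick(write=value), waited


if __name__ == "__main__":
    line = DelayLine()
    print(line.access(5, value=1))  # write a 1 at address 5
    print(line.access(5))           # read it back
```

Writing address 5 right after start-up costs a wait of 5 bit times, but reading it straight back costs 17, because the bit has just gone around to the far side of the loop.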

In 1955 magnetic core memory came along and used tiny magnetic rings called "cores" to store bits. Four little wires had to be threaded by hand through each little core in order to store a single bit, so although magnetic core memory was a lot cheaper and smaller than mercury delay lines, it was still very expensive and took up lots of space.

Figure 24 – Magnetic core memory arrived in 1955 and used a little ring of magnetic material, known as a core, to store a bit. Each little core had to be threaded by hand with 4 wires to store a single bit.

Figure 25 – Magnetic core memory was a big improvement over mercury delay lines, but it was still hugely expensive and took up a great deal of space within a computer.
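The text does not say what the four wires through each core were for. In the common coincident-current design, which is an assumption about the particular machines meant here, they were an X drive wire, a Y drive wire, a sense wire and an inhibit wire. The minimal Python sketch below, with hypothetical class and method names and currents normalized so that a full switching current is 1.0, shows the two tricks that design relied on: only the core where the selected X and Y wires cross receives enough current to flip, and reading a bit erases it, so every read is followed by a rewrite.

```python
class CorePlane:
    """Toy coincident-current magnetic core plane, one bit per core.

    ASSUMPTION: each X or Y drive wire carries half of the current needed to
    flip a core, so only the core where the two selected wires cross
    receives a full switching current.
    """

    HALF = 0.5
    SWITCH_THRESHOLD = 1.0

    def __init__(self, rows, cols):
        self.cores = [[0] * cols for _ in range(rows)]

    def _drive(self, x, y, target, inhibit=False):
        """Pulse X wire `x` and Y wire `y` toward magnetization `target`.

        The inhibit wire, threaded through every core, cancels half the
        current. Returns True if a core flipped, i.e. the sense wire
        would see a pulse.
        """
        flipped = False
        for r, row in enumerate(self.cores):
            for c, state in enumerate(row):
                current = (self.HALF if r == x else 0.0) + (self.HALF if c == y else 0.0)
                if inhibit:
                    current -= self.HALF
                if current >= self.SWITCH_THRESHOLD and state != target:
                    row[c] = target
                    flipped = True
        return flipped

    def write(self, x, y, bit):
        """Clear the core, then drive it toward 1, inhibiting the pulse if bit is 0."""
        self._drive(x, y, 0)
        self._drive(x, y, 1, inhibit=(bit == 0))

    def read(self, x, y):
        """Destructive read: force the core to 0 and watch the sense wire, then rewrite."""
        bit = 1 if self._drive(x, y, 0) else 0
        self._drive(x, y, 1, inhibit=(bit == 0))  # restore what the read erased
        return bit


if __name__ == "__main__":
    plane = CorePlane(4, 4)
    plane.write(1, 2, 1)
    print(plane.read(1, 2), plane.read(1, 2), plane.read(0, 2))
```

Because the sense wire only reports whether the selected core flipped, the read step has to drive the core to 0 first and then put a 1 back if one was found.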

Figure 26 – Finally in the early 1970s inexpensive semiconductor memory chips came along that made computer memory small and cheap.

Again, it was the relentless drive of software for ever-increasing amounts of memory and CPU cycles that made all this happen, and that is why you can now comfortably sit in a theater with a smartphone that can store more than 10 billion bytes of data, while back in 1951 the UNIVAC I occupied an area of 25 feet by 50 feet to store 12,000 bytes of data. As all forms of self-replicating information tend to do, over the past 2.4 billion seconds software has opportunistically exapted the extant hardware of the day (the electromechanical relays, vacuum tubes, discrete transistors and transistor chips of the emerging telecommunications and consumer electronics industries) into the service of self-replicating software of ever-increasing complexity, just as carbon-based life exapted the extant organic molecules and the naturally occurring geochemical cycles of the day in order to bootstrap itself into existence.

But when I think back to my early childhood in the early 1950s, I can still vividly remember a time when there essentially was no software at all in the world. In fact, I can still remember my very first encounter with a computer on Monday, Nov. 19, 1956, watching the Art Linkletter TV show People Are Funny with my parents on an old black-and-white console television set that must have weighed close to 150 pounds. Art was showcasing the 21st UNIVAC I to be constructed and had it sorting through the questionnaires from 4,000 hopeful singles, looking for the ideal match. The machine paired up John Caran, 28, and Barbara Smith, 23, who later became engaged. And this was more than 40 years before eHarmony.com! To a five-year-old boy, a machine that could “think” was truly amazing. Since that very first encounter with a computer back in 1956, I have personally witnessed software slowly becoming the dominant form of self-replicating information on the planet, and I have also seen how software has totally reworked the surface of the planet to provide a secure and cozy home for more and more software of ever-increasing capability. For more on this please see A Brief History of Self-Replicating Information. That is why I think there would be much to be gained in exploring the origin and evolution of the $10 trillion computer simulation that the Software Universe provides, and that is what softwarephysics is all about.

The Origin of Human Languages

Now I am not a linguist, but from what I can find on Google, there seem to be about 5,000 languages spoken in the world today that linguists divide into about 20 families, and we suspect there were many more languages in days gone by when people lived in smaller groups. The oldest theory for the origin of human language is known as monogenesis, and like the concept of a single LUCA in biology, it posits that language spontaneously arose only once and that all of the other thousands of languages then diverged from this single mother tongue, sort of like the Tower of Babel in the book of Genesis. The second theory is known as polygenesis, and it posits that human language emerged independently many times in many separate far-flung groups. These multiple origins of language then began to differentiate, which would explain why we now have 5,000 different languages grouped into 20 different families. Each of the 20 families might be a vestige of one of these separate mother tongues. Many linguists in the United States are in favor of a form of monogenesis known as the Mother Tongue Theory, which stems from the Out of Africa Theory for the original dispersion of Homo sapiens throughout the world. The Mother Tongue Theory holds that an original human language arose about 150,000 years ago in Africa, and that this language went along for the ride when Homo sapiens diffused out of Africa to colonize the entire world. So the jury is still out, and probably always will be, on the proposition of human languages having a single LUCA. Personally, I find that the extreme diversity of human languages favors the independent polygenesis of human language multiple times by many independent groups of Homo sapiens. But that view might stem from my having taken a bit of Spanish, Latin, and German in grade school and high school. When I got to college, I took a year of Russian, only to learn that there was a whole different way of communicating!

The Origin of Writing Systems

The origin of writing systems seems to provide a more fruitful analogy because writing systems are more recent, and by definition, they leave behind a "fossil record" in written form. Since I am getting way beyond my area of expertise, let me quote directly from Wikipedia at:

https://en.wikipedia.org/wiki/History_of_writing

It is generally agreed that true writing of language was independently conceived and developed in at least two ancient civilizations and possibly more. The two places where it is most certain that the concept of writing was both conceived and developed independently are in ancient Sumer (in Mesopotamia), around 3100 BC, and in Mesoamerica by 300 BC, because no precursors have been found to either of these in their respective regions. Several Mesoamerican scripts are known, the oldest being from the Olmec or Zapotec of Mexico.

Independent writing systems also arose in Egypt around 3100 BC and in China around 1200 BC, but historians debate whether these writing systems were developed completely independently of Sumerian writing or whether either or both were inspired by Sumerian writing via a process of cultural diffusion. That is, it is possible that the concept of representing language by using writing, though not necessarily the specifics of how such a system worked, was passed on by traders or merchants traveling between the two regions.

Ancient Chinese characters are considered by many to be an independent invention because there is no evidence of contact between ancient China and the literate civilizations of the Near East, and because of the distinct differences between the Mesopotamian and Chinese approaches to logography and phonetic representation. Egyptian script is dissimilar from Mesopotamian cuneiform, but similarities in concepts and in earliest attestation suggest that the idea of writing may have come to Egypt from Mesopotamia. In 1999, Archaeology Magazine reported that the earliest Egyptian glyphs date back to 3400 BC, which "challenge the commonly held belief that early logographs, pictographic symbols representing a specific place, object, or quantity, first evolved into more complex phonetic symbols in Mesopotamia."

Similar debate surrounds the Indus script of the Bronze Age Indus Valley civilization in Ancient India (2600 BC). In addition, the script is still undeciphered, and there is debate about whether the script is true writing at all or, instead, some kind of proto-writing or nonlinguistic sign system.

An additional possibility is the undeciphered Rongorongo script of Easter Island. It is debated whether this is true writing and, if it is, whether it is another case of cultural diffusion of writing. The oldest example is from 1851, 139 years after their first contact with Europeans. One explanation is that the script was inspired by Spain's written annexation proclamation in 1770.

Various other known cases of cultural diffusion of writing exist, where the general concept of writing was transmitted from one culture to another, but the specifics of the system were independently developed. Recent examples are the Cherokee syllabary, invented by Sequoyah, and the Pahawh Hmong system for writing the Hmong language.

Therefore, I think that it can be safely assumed that many memes, like the memes for writing, making pots and making flake tools, arose independently multiple times throughout human history, and that those memes then further differentiated.

Notice that, as with the origin of true software from its many precursors, it is very difficult to pick an exact date for the origin of true writing from its many precursors. When exactly do cartoon-like symbols evolve into a true written language? The origin of true languages from the many grunts of Homo sapiens would probably have been just as difficult to pin down. Perhaps very murky origins are just another common characteristic of all forms of self-replicating information, including carbon-based life.

Conclusion

The idea that the Bacteria, the Archaea and the Eukarya represent the last vestiges of three separate lines of descent that independently arose from dead organic molecules in the distant past, with no LUCA (Last Universal Common Ancestor), is most probably incorrect. However, given the chaotic origination histories of many forms of self-replicating information, including the memes and software, I also have reservations about the idea that all carbon-based life on the Earth sprang from one single LUCA, as depicted in Figure 5. It would seem most likely that there were many precursors to carbon-based cellular life on the Earth, and that it would be nearly impossible to identify when carbon-based life actually came to be, even if we had been around to watch it all happen. Perhaps 4.0 billion years ago, carbon-based life independently arose several times with many common biochemical characteristics, because those common biochemical characteristics were the only ones that worked at the time, as depicted in Figure 6. Later, these separate originations of life most likely merged to some degree, in the parasitic/symbiotic manner to which all forms of self-replicating information are prone, to form the Bacteria, the Archaea and the Eukarya. At least it's something to think about.

Comments are welcome at scj333@sbcglobal.net

To see all posts on softwarephysics in reverse order go to:

https://softwarephysics.blogspot.com/

Regards,

Steve Johnston