In the Western World, there is an oft-cited ancient Chinese curse, "May You Live In Interesting Times", that is frequently cast upon foes, much like the ancient Sicilian Malocchio or "Evil Eye". However, like many other memes, this ancient Chinese curse is nowhere to be found amongst the Chinese themselves. The closest thing that the Chinese have is a phrase from Volume 3 of Feng Menglong's 1627 short story collection, Stories to Awaken the World: “Better to be a dog in times of tranquility than a human in times of chaos.” Research indicates that the phrase likely originated in the mind of Austen Chamberlain’s father, Joseph Chamberlain, around the late 19th and early 20th centuries.

Joseph Chamberlain used a similar statement during a speech in 1898, emphasizing the interesting yet challenging times they were living in. Over time, the Chamberlain family may have come to believe that the elder Chamberlain had not used his own phrase but had repeated a phrase from Chinese culture.

Softwarephysics maintains that we all are now living in very interesting times as software is now becoming the dominant form of self-replicating information on the planet. For more on that please see A Brief History of Self-Replicating Information, Welcome To The First Galactic Singularity and The Singularity Has Arrived and So Now Nothing Else Matters. The amazing thing is that all of this happened within the span of a single human lifetime. Having been born in 1951, I can easily remember a time from my early childhood when there essentially was no software or hardware at all in the world for the most part. So I had the pleasure of seeing much of our current vast global infrastructure of software and hardware slowly unfold in real time before my very eyes. To share a similar experience, I suggest watching some of the very interesting historical videos available at the Computer History Archives Project. The Computer History Archives Project currently has 234 historical videos covering the evolutionary history of hardware and software.

Computer History Archives Project ("CHAP")

https://www.youtube.com/@ComputerHistoryArchivesProject

Figure 1 – A screen shot from the Computer History Archives Project.

Figure 2 – Scrolling down in the Computer History Archives Project.

Figure 3 – Scrolling down in the Computer History Archives Project.

As an aid, here is a brief recap of hardware history.

A Brief History of the Evolution of Computing Hardware

To build a digital computer, all you need is a large network of interconnected switches that can switch each other on and off in a coordinated manner. Switches can be in one of two states, either open (off) or closed (on), and we can use those two states to store the binary numbers “0” or “1”. By using several switches teamed together in open (off) or closed (on) states, we can store even larger binary numbers, like “01100100” = 100. We can also group the switches into logic gates that perform logical operations. For example, in Figure 4 below we see an AND gate composed of two switches A and B. Both switch A and B must be closed for the light bulb to turn on. If either switch A or B is open, the light bulb will not light up.
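As a small illustration of the idea, here is a Python sketch that treats a row of switches as a binary number and models the AND gate as two switches in series. The function names are my own, chosen only for this example.

```python
def switches_to_number(switches):
    """Read a row of switches (True = closed/on, False = open/off) as a binary number."""
    value = 0
    for closed in switches:
        value = value * 2 + (1 if closed else 0)
    return value


def and_gate(a, b):
    """Two switches in series: the bulb lights only if both switches are closed."""
    return a and b


# Eight switches set to the pattern 01100100.
pattern = [False, True, True, False, False, True, False, False]
print(switches_to_number(pattern))  # 100

# Truth table for the AND gate.
for a in (False, True):
    for b in (False, True):
        print(a, b, and_gate(a, b))
```

The pattern 01100100 has switches closed in the 64, 32 and 4 positions, which is why it reads as 100.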

Figure 4 – An AND gate can be simply formed from two switches. Both switches A and B must be closed, in a state of “1”, to turn the light bulb on.

Additional logic gates can be formed from other combinations of switches as shown in Figure 5 below. It takes about 2 - 8 switches to create each of the various logic gates shown below.

Figure 5 – Additional logic gates can be formed from other combinations of 2 – 8 switches.
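To make the step from switches to gates to arithmetic concrete, here is a hedged Python sketch. It assumes three building blocks: switches in series (AND), switches in parallel (OR), and a normally closed contact that opens when energized (NOT). These are modeling assumptions of mine and are not taken from the figure. The half adder at the end is a standard small circuit that adds two one-bit numbers.

```python
def series(a, b):
    """Switches in series conduct only if both are closed (AND)."""
    return a and b


def parallel(a, b):
    """Switches in parallel conduct if either one is closed (OR)."""
    return a or b


def inverted(a):
    """A normally closed contact conducts only when its control is off (NOT)."""
    return not a


def nand(a, b):
    return inverted(series(a, b))


def xor(a, b):
    # Exactly one of the two inputs is on.
    return parallel(series(a, inverted(b)), series(inverted(a), b))


def half_adder(a, b):
    """Add two one-bit numbers; returns (sum_bit, carry_bit)."""
    return xor(a, b), series(a, b)


for a in (False, True):
    for b in (False, True):
        print(a, b, "NAND:", nand(a, b), "XOR:", xor(a, b), "half adder:", half_adder(a, b))
```

Chaining adders like this one bit at a time is one way a network of switches ends up performing calculations on whole numbers.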

Once you can store binary numbers with switches and perform logical operations upon them with logic gates, you can build a computer that performs calculations on numbers. To process text, like names and addresses, we simply associate each letter of the alphabet with a binary number, like in the ASCII code set where A = “01000001” and Z = “01011010”, and then process the associated binary numbers.
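The mapping between letters and binary numbers is easy to try out. The short Python sketch below uses the built-in ord() and chr() functions to convert text to 8-bit ASCII patterns and back; the helper names to_bits and from_bits are mine.

```python
def to_bits(text):
    """Return the 8-bit ASCII pattern for each character in the text."""
    return [format(ord(ch), "08b") for ch in text]


def from_bits(bits):
    """Turn a list of 8-bit patterns back into text."""
    return "".join(chr(int(pattern, 2)) for pattern in bits)


print(to_bits("AZ"))                          # ['01000001', '01011010']
print(from_bits(["01000001", "01011010"]))    # AZ
```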

The early computers of the 1940s used electrical relays for switches. Closing one relay allowed current to flow to another relay’s coil, causing that relay to close as well.

Figure 6 – The Z3 digital computer first became operational in May of 1941 when Konrad Zuse first cranked it up in his parents' bathroom in Berlin. The Z3 consisted of 2400 electro-mechanical relays that were designed for switching telephone conversations.

Figure 7 – The electrical relays used by early computers for switching were very large, very slow and used a great deal of electricity which generated a great deal of waste heat.

Let’s begin where it all started in the spring of 1941 when Konrad Zuse built the Z3 with 2400 electromechanical telephone relays. The Z3 was the world’s first full-fledged computer. You don’t hear much about Konrad Zuse because he was working in Germany during World War II. Instead, most refer to the ENIAC I as the world's first full-fledged computer. But work on the ENIAC I did not start until July of 1943 at the University of Pennsylvania's Moore School of Electrical Engineering under the code name "Project PX" and the ENIAC I was not fully operational until 1946. The lack of recognition of Konrad Zuse's Z3 computer probably has something to do with Germany losing World War II.

Figure 8 – The ENIAC I became functional in 1946 consisting of 18,000 vacuum tubes, 7,200 crystal diodes, 1,500 relays, 70,000 resistors, 10,000 capacitors and approximately 5,000,000 hand-soldered joints. The original ENIAC I did not have any memory. It had accumulators that acted like registers in a modern computer and relied on punch cards for external memory.

The Z3 had a clock speed of 5.33 Hz and could multiply two very large numbers together in 3 seconds. It used a 22-bit word and had a total memory of 64 words. It only had two registers, but it could read in and store programs via a punched tape. In 1945, while Berlin was being bombed by over 800 bombers each day, Zuse worked on the Z4 and developed Plankalkuel, the first high-level computer language more than 10 years before the appearance of FORTRAN in 1956. Zuse was able to write the world’s first chess program with Plankalkuel. And in 1950 his startup company Zuse-Ingenieurbüro Hopferau began to sell the world’s first commercial computer, the Z4, 10 months before the sale of the first UNIVAC.

Figure 9 – Konrad Zuse with a reconstructed Z3 in 1961 (click to enlarge)

Figure 10 – Block diagram of the Z3 architecture (click to enlarge)

Now over the past 83 years, or 2.6 billion seconds, hardware has improved by a factor of over a billion. You can now buy a $400 machine that is approximately a billion times faster than the Z3 with nearly a billion times as much memory. To learn more about how Konrad Zuse built the world’s very first real computers - the Z1, Z2 and Z3 in the 1930s and early 1940s, see the following article that was written in his own words:

http://ei.cs.vt.edu/~history/Zuse.html

Figure 11 – In the 1950s, the electrical relays were replaced by vacuum tubes that were 100,000 times faster than the relays but were still quite large, used large amounts of electricity and also generated a great deal of waste heat.

The United States government installed its very first commercial digital computer, a UNIVAC I, for the Census Bureau on June 14, 1951. The UNIVAC I required an area of 25 feet by 50 feet and contained 5,600 vacuum tubes, 18,000 crystal diodes and 300 relays with a total memory of 12 KB. From 1951 to 1958 a total of 46 UNIVAC I computers were built and installed.

Figure 12 – Vacuum tubes contain a hot negative cathode that glows red and boils off electrons. The electrons are attracted to the cold positive anode plate, but there is a grid electrode between the cathode and anode plate. By changing the voltage on the grid, the vacuum tube can control the flow of electrons like the handle of a faucet. The grid voltage can be adjusted so that the electron flow is full blast, a trickle, or completely shut off, and that is how a vacuum tube can be used as a switch.

Figure 13 – In 1951, the UNIVAC I digital computer was very impressive on the outside.

Figure 14 – But the UNIVAC I was a little less impressive on the inside.

In the 1960s the vacuum tubes were replaced by discrete transistors and in the 1970s the discrete transistors were replaced by thousands of transistors on a single silicon chip. Over time, the number of transistors that could be put onto a silicon chip increased dramatically, and today, the silicon chips in your personal computer hold many billions of transistors that can be switched on and off in about 10^-10 seconds. Now let us look at how these transistors work.

There are many different kinds of transistors, but I will focus on the FET (Field Effect Transistor) that is used in most silicon chips today. A FET transistor consists of a source, gate and a drain. The whole affair is laid down on a very pure silicon crystal using a multi-step process that relies upon photolithographic processes to engrave circuit elements upon the very pure silicon crystal. Silicon lies directly below carbon in the periodic table because both silicon and carbon have 4 electrons in their outer shell and are also missing 4 electrons. This makes silicon a semiconductor. Pure silicon is not very electrically conductive in its pure state, but by doping the silicon crystal with very small amounts of impurities, it is possible to create silicon that has a surplus of free electrons. This is called N-type silicon. Similarly, it is possible to dope silicon with small amounts of impurities that decrease the amount of free electrons, creating a positive or P-type silicon. To make an FET transistor you simply use a photolithographic process to create two N-type silicon regions onto a substrate of P-type silicon. Between the N-type regions is found a gate that controls the flow of electrons between the source and drain regions, like the grid in a vacuum tube. When a positive voltage is applied to the gate, it attracts the remaining free electrons in the P-type substrate and repels its positive holes. This creates a conductive channel between the source and drain which allows a current of electrons to flow.

Figure 15 – A FET transistor consists of a source, gate and drain. When a positive voltage is applied to the gate, a current of electrons can flow from the source to the drain and the FET acts like a closed switch that is “on”. When there is no positive voltage on the gate, no current can flow from the source to the drain, and the FET acts like an open switch that is “off”.

Figure 16 – When there is no positive voltage on the gate, the FET transistor is switched off, and when there is a positive voltage on the gate the FET transistor is switched on. These two states can be used to store a binary “0” or “1”, or can be used as a switch in a logic gate, just like an electrical relay or a vacuum tube.

Figure 17 – Above is a plumbing analogy that uses a faucet or valve handle to simulate the actions of the source, gate and drain of an FET transistor.

The CPU chip in your computer consists largely of transistors in logic gates, but your computer also has a number of memory chips that use transistors that are “on” or “off” and can be used to store binary numbers or text that is encoded using binary numbers. The next thing we need is a way to coordinate the billions of transistor switches in your computer. That is accomplished with a system clock. My current laptop has a clock speed of 2.5 GHz which means it ticks 2.5 billion times each second. Each time the system clock on my computer ticks, it allows all of the billions of transistor switches on my laptop to switch on, off, or stay the same in a coordinated fashion. So while your computer is running, it is actually turning on and off billions of transistors billions of times each second – and all for a few hundred dollars!

A Brief History of Computer Memory

Computer memory was another factor greatly affecting the origin and evolution of software over time. Strangely, the original Z3 used electromechanical switches to store working memory, much as we do today with transistors on memory chips, but that made computer memory very expensive and very limited, and this remained true all during the 1950s and 1960s. Prior to 1955, computers like the UNIVAC I, which first appeared in 1951, used mercury delay lines that consisted of a tube of mercury that was about 3 inches long. Each mercury delay line could store about 18 bits of computer memory as sound waves that were continuously refreshed by quartz piezoelectric transducers on each end of the tube. Mercury delay lines were huge and very expensive per bit, so computers like the UNIVAC I only had a memory of 12 K (98,304 bits).

Figure 18 – Prior to 1955, huge mercury delay lines built from tubes of mercury that were about 3 inches long were used to store bits of computer memory. A single mercury delay line could store about 18 bits of computer memory as a series of sound waves that were continuously refreshed by quartz piezoelectric transducers at each end of the tube.

In 1955 magnetic core memory came along, and used tiny magnetic rings called "cores" to store bits. Four little wires had to be threaded by hand through each little core in order to store a single bit, so although magnetic core memory was a lot cheaper and smaller than mercury delay lines, it was still very expensive and took up lots of space.

Figure 19 – Magnetic core memory arrived in 1955 and used a little ring of magnetic material, known as a core, to store a bit. Each little core had to be threaded by hand with 4 wires to store a single bit.

Figure 20 – Magnetic core memory was a big improvement over mercury delay lines, but it was still hugely expensive and took up a great deal of space within a computer.

Figure 21 – Finally in the early 1970s inexpensive semiconductor memory chips came along that made computer memory small and cheap.

Again, it was the relentless drive of software for ever-increasing amounts of memory and CPU-cycles that made all this happen, and that is why you can now comfortably sit in a theater with a smartphone that can store more than 64 billion bytes of data, while back in 1951 the UNIVAC I occupied an area of 25 feet by 50 feet to store 12,000 bytes of data. Like all forms of self-replicating information tend to do, over the past 2.6 billion seconds, software has opportunistically exapted the extant hardware of the day - the electromechanical relays, vacuum tubes, discrete transistors and transistor chips of the emerging telecommunications and consumer electronics industries, into the service of self-replicating software of ever-increasing complexity, as did carbon-based life exapt the extant organic molecules and the naturally occurring geochemical cycles of the day in order to bootstrap itself into existence.

But when I think back to my early childhood in the early 1950s, I can still vividly remember a time when there essentially was no software at all in the world. In fact, I can still remember my very first encounter with a computer on Monday, Nov. 19, 1956, watching the Art Linkletter TV show People Are Funny with my parents on an old black and white console television set that must have weighed close to 150 pounds. Art was showcasing the 21st UNIVAC I to be constructed and had it sorting through the questionnaires from 4,000 hopeful singles, looking for the ideal match. The machine paired up John Caran, 28, and Barbara Smith, 23, who later became engaged. And this was more than 40 years before eHarmony.com! To a five-year-old boy, a machine that could “think” was truly amazing. Since that very first encounter with a computer back in 1956, I have personally witnessed software slowly becoming the dominant form of self-replicating information on the planet, and I have also seen how software has totally reworked the surface of the planet to provide a secure and cozy home for more and more software of ever-increasing capability. For more on this please see A Brief History of Self-Replicating Information.

A Brief History of External Storage

The external storage of data was first handled by storing characters as holes in punch cards.

Figure 22 - Each card could hold a maximum of 80 bytes. Normally, one line of code was punched onto each card.

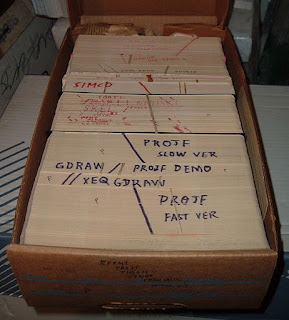

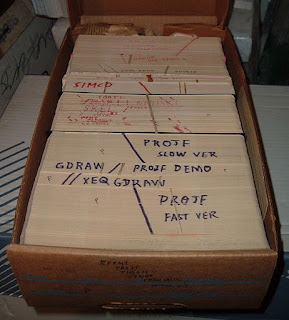

Figure 23 - The cards for a program were held together into a deck with a rubber band, or for very large programs, the deck was held in a special cardboard box that originally housed blank cards. Many times the data cards for a run followed the cards containing the source code for a program. The program was compiled and linked in two steps of the run and then the generated executable file processed the data cards that followed in the deck.

Figure 24 - To run a job, the cards in a deck were fed into a card reader, as shown on the left above, to be compiled, linked, and executed by a million-dollar mainframe computer with a clock speed of about 750 kHz and about 1 MB of memory.

Figure 25 - The cards were cut on an IBM 029 keypunch machine, like the one I learned to program Fortran on back in 1972 at the University of Illinois. The IBM 029 keypunch machine did have a way of duplicating a card by feeding the card to be duplicated into the machine, while at the same time, a new blank card was registered in the machine ready for punching, and the information on the first card was then punched on to the second card. This was a very slow and time-consuming way to copy reusable code from one program to another. But there was another machine that could do the same thing for a whole deck of cards all at once, and that machine was much more useful in duplicating existing cards that could then be spliced into the card deck that you were working on.

Take note that the IBM 029 keypunch machine that was used to punch cards did allow you to copy one card at a time, but that was a rather slow way to copy cards for reuse. But there was another machine that could read an entire card deck all at once, and punch out a duplicate card deck of the original in one shot. That machine made it much easier to punch out the cards from an old card deck and splice the copied cards into the card deck that you were working on. Even so, that was a fairly clumsy way of using reusable code, so trying to use reusable code back during the unstructured prokaryotic days of IT prior to 1972 was difficult at best. Also, the chaotic nature of the unstructured prokaryotic "spaghetti code" of the 1950s and 1960s made it difficult to splice in reusable code on cards.

This all changed in the early 1970s with the rise of structured eukaryotic source code that divided functions up amongst a set of subroutines, or organelles, and the arrival of the IBM 3278 terminal and the IBM ISPF screen editor on TSO in the late 1970s that eliminated the need to program on punch cards. One of the chief characteristics of structured programming was the use of "top-down" programming, where programs began execution in a mainline routine that then called many other subroutines. The purpose of the mainline routine was to perform simple high-level logic that called the subordinate subroutines in a fashion that was easy to follow. This structured programming technique made it much easier to maintain and enhance software by simply calling subroutines from the mainline routine in the logical manner required to perform the needed tasks, like assembling Lego building blocks into different patterns that produced an overall new structure. The structured approach to software also made it much easier to reuse software. All that was needed was to create a subroutine library of reusable source code that was already compiled. A mainline program that made calls to the subroutines of the subroutine library was compiled as before. The machine code for the previously compiled subroutines was then added to the resulting executable file by a linkage editor. This made it much easier for structured eukaryotic programs to use reusable code by simply putting the software "conserved core processes" into already compiled subroutine libraries. The ISPF screen editor running under TSO on IBM 3278 terminals also made it much easier to reuse source code because now many lines of source code could be simply copied from one program file to another, with the files stored on disk drives, rather than on punch cards or magnetic tape.
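Here is a minimal sketch of the top-down style in Python rather than in the Fortran or COBOL of the day. The task (totaling order amounts per customer) and all of the function names are invented purely for illustration. The point is only the shape: a short mainline routine that reads like an outline, and subroutines that could just as well live in a shared library.

```python
def read_orders(lines):
    """Subroutine: parse 'customer, amount' lines into (customer, amount) pairs."""
    orders = []
    for line in lines:
        customer, amount = line.split(",")
        orders.append((customer.strip(), float(amount)))
    return orders


def total_by_customer(orders):
    """Subroutine: add up the order amounts for each customer."""
    totals = {}
    for customer, amount in orders:
        totals[customer] = totals.get(customer, 0.0) + amount
    return totals


def format_report(totals):
    """Subroutine: produce one report line per customer, sorted by name."""
    return [f"{customer}: {total:.2f}" for customer, total in sorted(totals.items())]


def main(lines):
    """Mainline routine: simple high-level logic that calls the subroutines in order."""
    orders = read_orders(lines)
    totals = total_by_customer(orders)
    return format_report(totals)


if __name__ == "__main__":
    for row in main(["Smith, 10.50", "Jones, 4.25", "Smith, 2.00"]):
        print(row)
```

Any other program that needs to parse the same order lines can call read_orders() without copying its source, which is the reuse that a subroutine library and a linkage editor provided.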

Figure 26 - The IBM ISPF full screen editor ran on IBM 3278 terminals connected to IBM mainframes in the late 1970s. ISPF was also a screen-based interface to TSO (Time Sharing Option) that allowed programmers to do things like copy files and submit batch jobs. ISPF and TSO running on IBM mainframes allowed programmers to easily reuse source code by doing copy/paste operations with the screen editor from one source code file to another. By the way, ISPF and TSO are still used today on IBM mainframe computers and are fine examples of the many "conserved core processes" to be found in software.

In IT we call the way data is stored and accessed an “access method”, and we have primarily evolved two ways of storing and accessing data, either sequentially or via indexes. With a sequential access method data is stored in records, with one record following another, like a deck of index cards with the names and addresses of your friends and associates on them. In fact, the very first sequential files were indeed large decks of punch cards. Later, the large “tub files” of huge decks of punch cards were replaced by storing records sequentially on magnetic tape.

Figure 27 - Each card was composed of an 80-byte record that could hold 80 characters of data with the names and addresses of customers.

Figure 28 – The very first sequential files were large decks of punch cards composed of one record after another.

An indexed access method works like the index at the end of a book and essentially tells you where to find a particular record in a deck of index or punch cards. In the discussion below we will see how moving from sequential access methods, running on magnetic tape, to indexed access methods, running on disk drives, prompted a dramatic revolution in commercial software architecture, allowing commercial software to move from the batch processing of the 1950s and 1960s to the interactive processing of the 1970s and beyond and which is the most prominent form of processing today. However, even today commercial software still uses both sequential and indexed access methods because in some cases the batch processing of sequential data makes more sense than the interactive processing of indexed data.

Sequential Access Methods

One of the simplest and oldest sequential access methods is called QSAM - Queued Sequential Access Method:

Queued Sequential Access Method

http://en.wikipedia.org/wiki/Queued_Sequential_Access_Method

I did a lot of magnetic tape processing in the 1970s and early 1980s using QSAM. At the time we used 9 track tapes that were 1/2 inch wide and 2400 feet long on a reel with a 10.5 inch diameter. The tape had 8 data tracks and one parity track across the 1/2 inch tape width. That way we could store one byte across the 8 1-bit data tracks in a frame, and we used the parity track to check for errors. We used odd parity: if the 8 bits on the 8 data tracks in a frame added up to an even number of 1s, we put a 1 in the parity track to make the total number of 1s an odd number. If the 8 bits added up to an odd number of 1s, we put a 0 in the parity track to keep the total number of 1s an odd number. Originally, 9 track tapes had a density of 1600 bytes/inch of tape, with a data transfer rate of 15,000 bytes/second. Remember, a byte is 8 bits and can store one character, like the letter “A” which we encode in the ASCII code set as A = “01000001”.
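The odd parity rule is simple enough to sketch in a few lines of Python. The function names here are mine, and a real tape drive did this in hardware, so treat it only as an illustration of the rule described above.

```python
def odd_parity_bit(byte):
    """Return the parity bit that makes the total number of 1s in the frame odd."""
    ones = bin(byte & 0xFF).count("1")
    return 1 if ones % 2 == 0 else 0


def frame_is_valid(byte, parity):
    """A 9-bit frame is valid under odd parity if it holds an odd number of 1s."""
    return (bin(byte & 0xFF).count("1") + parity) % 2 == 1


letter_a = 0b01000001                    # two 1 bits, so the parity bit must be 1
parity = odd_parity_bit(letter_a)
print(parity)                            # 1
print(frame_is_valid(letter_a, parity))  # True

damaged = letter_a ^ 0b00000100          # flip one data bit to simulate a bad spot
print(frame_is_valid(damaged, parity))   # False
```

A single flipped bit is always caught, but two flipped bits in the same frame cancel out and go unnoticed, which is the known limit of a single parity track.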

Figure 29 – A 1/2 inch wide 9 track magnetic tape on a 2400 foot reel with a diameter of 10.5 inches

Figure 30 – 9 track magnetic tape had 8 data tracks and one parity track using odd parity which allowed for the detection of bad bytes with parity errors on the tape.

Later, 6250 bytes/inch tape drives became available, and I will use that density for the calculations that follow. Now suppose you had 50 million customers and the current account balance for each customer was stored on an 80-byte customer record. A record was like a row in a spreadsheet. The first field of the record was usually a CustomerID field that contained a unique customer ID like a social security number and was essentially the equivalent of a promoter region on the front end of a gene in DNA. The remainder of the 80-byte customer record contained fields for the customer’s name and billing address, along with the customer’s current account information. Between each block of data on the tape, there was a 0.5 inch gap of “junk” tape. This “junk” tape allowed for the acceleration and deceleration of the tape reel as it spun past the read/write head of a tape drive and perhaps occasionally reversed direction. Since an 80-byte record only came to 80/6250 = 0.0128 inches of tape, which is quite short compared to the overhead of the 0.5 inch gap of “junk” tape between records, it made sense to block many records together into a single block of data that could be read by the tape drive in a single I/O operation. For example, blocking 100 80-byte records increased the block size to 8000/6250 = 1.28 inches and between each 1.28 inch block of data on the tape, there was the 0.5 inch gap of “junk” tape. This greatly reduced the amount of wasted “junk” tape on a 2400 foot reel of tape. So each 100 record block of data took up a total of 1.78 inches of tape and we could get 16,180 blocks on a 2400 foot tape or the data for 1,618,000 customers per tape. The advantage of QSAM, over an earlier sequential access method known as BSAM, was that you could read and write an entire block of records at a time via an I/O buffer. In our example, a program could read one record at a time from an I/O buffer which contained the 100 records from a single block of data on the tape. 
When the I/O buffer was depleted of records, the next 100 records were read in from the next block of records on the tape. Similarly, programs could write one record at a time to the I/O buffer, and when the I/O buffer was filled with 100 records, the entire I/O buffer with 100 records in it was written as the next block of data on an output tape.
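The blocking and deblocking idea can be sketched in Python. This is not the QSAM programming interface, which was used from languages like COBOL and assembler. It is only an illustration, with an in-memory byte stream standing in for the tape and with function names I made up.

```python
import io

RECORD_LENGTH = 80      # bytes per logical record
BLOCKING_FACTOR = 100   # logical records per physical block


def write_records(stream, records):
    """Collect records in a buffer and write one whole block at a time."""
    buffer = []
    blocks_written = 0
    for record in records:
        # Pad or trim every record to the fixed record length.
        buffer.append(record.ljust(RECORD_LENGTH)[:RECORD_LENGTH])
        if len(buffer) == BLOCKING_FACTOR:
            stream.write(b"".join(buffer))
            buffer.clear()
            blocks_written += 1
    if buffer:  # final short block
        stream.write(b"".join(buffer))
        blocks_written += 1
    return blocks_written


def read_records(stream):
    """Yield one record at a time while doing one physical read per block."""
    block_size = RECORD_LENGTH * BLOCKING_FACTOR
    while True:
        block = stream.read(block_size)  # one "I/O operation" refills the buffer
        if not block:
            break
        for offset in range(0, len(block), RECORD_LENGTH):
            yield block[offset:offset + RECORD_LENGTH]


tape = io.BytesIO()
customers = [f"{i:09d} CUSTOMER {i}".encode("ascii") for i in range(250)]
print(write_records(tape, customers))        # 3 blocks: 100 + 100 + 50 records
tape.seek(0)
print(sum(1 for _ in read_records(tape)))    # 250
```

The calling program only ever sees single 80-byte records, even though the stream is read and written 8,000 bytes at a time.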

The use of a blocked I/O buffer provided a significant distinction between the way data was physically stored on tape and the way programs logically processed the data. The difference between the way things are physically implemented and the way things are logically viewed by software is a really big deal in IT. The history of IT over the past 70 years has really been a history of logically abstracting physical things through the increasing use of layers of abstraction, to the point where today, IT professionals rarely think of physical things at all. Everything just resides in a logical “Cloud”. I think that taking more of a logical view of things, rather than taking a physical view of things, would greatly help biologists at this point in the history of biology. Biologists should not get so hung up about where the information for biological software is physically located. Rather, biologists should take a cue from IT professionals, and start thinking more of biological software in logical terms, rather than physical terms.

Figure 31 – Between each record, or block of records, on a magnetic tape, there was a 0.5 inch gap of “junk” tape. The “junk” tape allowed for the acceleration and deceleration of the tape reel as it spun past the read/write head on a tape drive. Since an 80-byte record only came to 80/6250 = 0.0128 inches, it made sense to block many records together into a single block that could be read by the tape drive in a single I/O operation. For example, blocking 100 80-byte records increased the block size to 8000/6250 = 1.28 inches, and between each 1.28 inch block of data on the tape, there was a 0.5 inch gap of “junk” tape for a total of 1.78 inches per block.
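The tape arithmetic can be checked with a short Python sketch. The constants come from the numbers quoted above; the function names are mine. Note that counting only whole blocks gives 16,179 blocks per reel, which the text rounds to about 16,180.

```python
import math

DENSITY = 6250             # bytes per inch of tape
GAP_INCHES = 0.5           # "junk" tape between blocks
TAPE_INCHES = 2400 * 12    # a 2400 foot reel
RECORD_BYTES = 80


def records_per_tape(blocking_factor):
    """Number of whole records that fit on one reel for a given blocking factor."""
    block_inches = blocking_factor * RECORD_BYTES / DENSITY + GAP_INCHES
    whole_blocks = int(TAPE_INCHES // block_inches)
    return whole_blocks * blocking_factor


def tapes_needed(customers, blocking_factor):
    """Number of reels needed to hold one record per customer."""
    return math.ceil(customers / records_per_tape(blocking_factor))


for factor in (1, 10, 100):
    print(factor, records_per_tape(factor), tapes_needed(50_000_000, factor))
```

Running it shows why blocking mattered: with one record per block, almost all of the tape is gap, and the same 50 million customers would need hundreds of reels instead of 31.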

Figure 32 – Blocking records on tape allowed data to be stored more efficiently.

So it took 31 tapes to just store the rudimentary account data for 50 million customers. The problem was that each tape could only store 123 MB of data. Not too good, considering that today you can buy a 1 TB PC disk drive that can hold 8525 times as much data for about $40! Today, you could also store about 2,100 times as much data on a $25.00 256 GB thumb drive. So how could you find the data for a particular customer on 74,000 feet (14 miles) of tape? Well, you really could not do that reading one block of data at a time with the read/write head of a tape drive, so we processed data with batch jobs using lots of input and output tapes. Generally, we had a Master Customer File on 31 tapes and a large number of Transaction tapes with insert, update and delete records for customers. All the tapes were sorted by the CustomerID field, and our programs would read a Master tape and a Transaction tape at the same time and apply the inserts, updates and deletes on the Transaction tape to a new Master tape. So your batch job would read a Master and Transaction input tape at the same time and would then write to a single new Master output tape. These batch jobs would run for many hours, with lots of mounting and unmounting of dozens of tapes.
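The heart of such a batch job was a sequential merge of two sorted files. Here is a minimal Python sketch of that logic using lists in place of tapes. The record layouts and the function name are hypothetical, and the sketch assumes at most one transaction per CustomerID, which a real job would not have been able to count on.

```python
def apply_transactions(master, transactions):
    """Merge a sorted Master list with a sorted Transaction list into a new Master.

    master:        list of (customer_id, balance), sorted by customer_id
    transactions:  list of (customer_id, action, balance), sorted by customer_id,
                   where action is "insert", "update" or "delete"
    """
    new_master = []
    m = 0  # position of the "read head" on the old Master
    for customer_id, action, balance in transactions:
        # Copy the unchanged Master records that sort before this transaction.
        while m < len(master) and master[m][0] < customer_id:
            new_master.append(master[m])
            m += 1
        matched = m < len(master) and master[m][0] == customer_id
        if action == "insert" and not matched:
            new_master.append((customer_id, balance))
        elif action == "update" and matched:
            new_master.append((customer_id, balance))
            m += 1
        elif action == "delete" and matched:
            m += 1  # skip the old record so it never reaches the new Master
        else:
            raise ValueError(f"cannot {action} customer {customer_id}")
    # Copy whatever is left on the old Master.
    new_master.extend(master[m:])
    return new_master


old_master = [(101, 50.0), (105, 20.0), (110, 75.0)]
changes = [(103, "insert", 10.0), (105, "update", 25.0), (110, "delete", None)]
print(apply_transactions(old_master, changes))
# [(101, 50.0), (103, 10.0), (105, 25.0)]
```

Each input is read exactly once from front to back, which is the only access pattern a tape drive handles well.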

Figure 33 – Batch processing of 50 million customers took a lot of tapes and tape drives.

Clearly, this technology would not work for a customer calling in and wanting to know his current account status at this very moment. The solution was to use multiple transcription sites along the 14 miles of tape. This was accomplished by moving the customer data to disk drives. A disk drive is like a stack of old phonograph records on a rapidly rotating spindle. Each platter has its own access arm, like the tone arm on an old turntable that has a read/write head. To quickly get to the data on a disk drive IT invented new access methods that used indexes, like ISAM and VSAM. These hierarchical indexes work like this. Suppose you want to find one customer out of 50 million via their CustomerID. You first look up the CustomerID in a book that only contains an index of other books. The index entry for the particular CustomerID tells you which book to look in next. The next book also just consists of an index of other books too. Finally, after maybe 4 or 5 reads, you get to a book that has an index of books with “leaf” pages. This index tells you what book to get next and on what “leaf page” you can find the customer record for the CustomerID that you are interested in. So instead of spending many hours reading through perhaps 14 miles of tape on 31 tapes, you can find the customer record in a few milliseconds and put it on a webpage. For example, suppose you have 200 customers instead of 50 million and you would like to find the information on customer 190. If the customer data were stored as a sequential file on magnetic tape, you would have to read through the first 189 customer records before you finally got to customer 190. However, if the customer data were stored on a disk drive, using an indexed access method like ISAM or VSAM, you could get to the customer after 3 reads that get you to the leaf page containing records 176 – 200, and you would only have to read 14 records on the leaf page before you got to record 190.
For more on these indexed access methods see:

ISAM Indexed Sequential Access Method

http://en.wikipedia.org/wiki/ISAM

VSAM Virtual Storage Access Method

http://en.wikipedia.org/wiki/VSAM

Figure 34 – Disk drives allowed for indexed access methods like ISAM and VSAM to quickly access an individual record.

Figure 35 – To find customer 190 out of 200 on a magnetic tape would require sequentially reading 189 customer records. Using the above hierarchical index would only require 3 reads to get to the leaf page containing records 176 – 200. Then an additional 14 reads would get you to customer record 190.
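The 200-customer example can be reproduced with a toy hierarchical index in Python. I am assuming leaf pages of 25 records and an index fan-out of 2, chosen only because that combination yields the 3 index reads described above; the actual layout in the figure may differ, and real ISAM or VSAM indexes are far more elaborate. The function names are mine.

```python
def build_leaves(first_id, last_id, page_size):
    """Split the CustomerIDs into leaf pages of page_size records each."""
    ids = list(range(first_id, last_id + 1))
    return [ids[i:i + page_size] for i in range(0, len(ids), page_size)]


def build_index(pages, fan_out):
    """Stack index levels on the leaf pages until a single root node remains.

    An index node is a list of (highest_key_in_child, child) entries.
    """
    level = [(page[-1], page) for page in pages]
    while len(level) > 1:
        groups = [level[i:i + fan_out] for i in range(0, len(level), fan_out)]
        level = [(group[-1][0], group) for group in groups]
    return level[0][1]


def find(root, customer_id):
    """Return (record, index_reads, leaf_records_read_before_the_hit)."""
    node, index_reads = root, 0
    while node and isinstance(node[0], tuple):   # still in an index node
        index_reads += 1
        for high_key, child in node:
            if customer_id <= high_key:
                node = child
                break
        else:
            return None, index_reads, 0          # key is beyond the last entry
    scanned = 0
    for record_id in node:                       # scan the leaf page in order
        if record_id == customer_id:
            return record_id, index_reads, scanned
        scanned += 1
    return None, index_reads, scanned


root = build_index(build_leaves(1, 200, page_size=25), fan_out=2)
print(find(root, 190))   # (190, 3, 14)
```

Three index reads plus a short scan of one leaf page replace the 189 records that a sequential tape read would have to pass over first.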

The key advance that came with the ISAM and VSAM access methods over QSAM was that they allowed commercial software to move from batch processing to interactive processing in the 1970s and 1980s. That was a major revolution in IT.

Today we store most commercial data on relational databases, like IBM’s DB2 or Oracle’s database software, but these relational databases still use hierarchical indexing like VSAM under the hood. Relational databases logically store data on tables. A table is much like a spreadsheet and contains many rows of data that are formatted into a number of well-defined columns. A large number of indexes are then formed using combinations of data columns to get to a particular row in the table. Tables can also be logically joined together into composite tables with logical rows of data that contain all of the data on several tables merged together, and indexes can be created on the joined tables to allow programs to quickly access the data. For large-scale commercial software, these relational databases can become quite huge and incredibly complicated, with huge numbers of tables and indexes, forming a very complicated nonlinear network of components, and the database design of these huge networks of tables and indexes is crucial to processing speed and throughput. A large-scale relational database may contain several thousand tables and indexes, and a poorly designed relational database can be just as harmful to the performance of a high-volume corporate website as buggy software. A single corrupted index can easily bring a high-volume corporate website crashing down, resulting in the loss of thousands of dollars for each second of downtime.
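To see tables, indexes and joins in miniature, here is a sketch using SQLite through Python's built-in sqlite3 module. SQLite is standing in for DB2 or Oracle only because it needs no installation. The table names, column names and sample rows are all invented for this example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")   # a throwaway in-memory database
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT, city TEXT);
    CREATE TABLE accounts  (account_id  INTEGER PRIMARY KEY, customer_id INTEGER, balance REAL);
    CREATE INDEX idx_accounts_customer ON accounts (customer_id);
""")
conn.executemany("INSERT INTO customers VALUES (?, ?, ?)",
                 [(190, "Smith", "Chicago"), (191, "Jones", "Peoria")])
conn.executemany("INSERT INTO accounts VALUES (?, ?, ?)",
                 [(1, 190, 125.50), (2, 191, 40.00)])

# Join the two tables to build one logical row for customer 190.
row = conn.execute("""
    SELECT c.name, a.balance
    FROM customers AS c
    JOIN accounts AS a ON a.customer_id = c.customer_id
    WHERE c.customer_id = ?
""", (190,)).fetchone()
print(row)   # ('Smith', 125.5)

# Ask SQLite how it would locate the account rows for one customer.
plan = conn.execute(
    "EXPLAIN QUERY PLAN SELECT balance FROM accounts WHERE customer_id = ?", (190,)
).fetchall()
for step in plan:
    print(step[-1])
```

The query plan output should name idx_accounts_customer, showing that the lookup goes through the index rather than scanning every row of the table.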

Figure 36 – Modern relational databases store data on a large number of tables and use many indexes to quickly access the data in a particular row of a table or a row in a combination of joined tables. Large-scale commercial applications frequently have databases with several thousand tables and several thousand indexes.

But remember, under the hood, these relational databases are all based upon indexed access methods like VSAM, and VSAM itself is just a logical view of what is actually going on in the software controlling the disk drives themselves, so essentially we have a lengthy series of logical indexes of logical indexes, of logical indexes, of logical indexes…. The point is that in modern commercial software there is a great deal of information stored in the network of components that are used to determine how information is read and written. If you dig down deep into the files running a relational database, you can actually see things like the names and addresses of customers, but you will also find huge amounts of control information that lets programs get to those names and addresses efficiently, and if any of that control information gets messed up your website comes crashing down.

Comments are welcome at

scj333@sbcglobal.net

To see all posts on softwarephysics in reverse order go to:

https://softwarephysics.blogspot.com/

Regards,

Steve Johnston