I just finished reading The Plausibility of Life (2005) by Marc W. Kirschner and John C. Gerhart, which presents their theory of facilitated variation. The theory of facilitated variation maintains that, although the concepts and mechanisms of Darwin's natural selection are well understood, classical Darwinian thought is a bit wanting when it comes to the mechanisms that brought forth viable biological innovations in the past. In classical Darwinian thought, it is proposed that random genetic changes, brought on by random mutations to DNA sequences, can very infrequently cause small incremental enhancements to the survivability of the individual, and thus provide natural selection with something of value to promote in the general gene pool of a species. However, as is frequently noted, most random genetic mutations are either totally inconsequential or totally fatal, and consequently are either irrelevant to the gene pool of a species or are quickly removed from it.

The theory of facilitated variation, like classical Darwinian thought, maintains that the phenotype of an individual is key, and not so much its genotype, since natural selection can only operate upon phenotypes. The theory explains that the phenotype of an individual is determined by a number of 'constrained' and 'deconstrained' elements. The constrained elements are called the "conserved core processes" of living things. They have remained essentially unchanged for billions of years and are used by all living things to sustain the fundamental functions of carbon-based life, like the generation of proteins by processing the information found in DNA sequences with mRNA, tRNA and ribosomes, or the metabolism of carbohydrates via the Krebs cycle.

The deconstrained elements are weakly-linked regulatory processes that can change the amount, location and timing of gene expression within a body, and which, therefore, can easily control which conserved core processes are run by a cell and when they are run. The theory of facilitated variation maintains that most favorable biological innovations arise from minor mutations to the deconstrained weakly-linked regulatory processes that control the conserved core processes of life, rather than from random mutations of the genotype in general that would change the phenotype of an individual in a purely random direction. That is because the most likely outcome for an individual undergoing a purely random change to its phenotype is death.

Marc W. Kirschner and John C. Gerhart begin by presenting the fact that simple prokaryotic bacteria, like E. coli, require a full 4,600 genes just to sustain the most rudimentary form of bacterial life, while much more complex multicellular organisms, like human beings, consisting of tens of trillions of cells differentiated into hundreds of differing cell types in the numerous complex organs of a body, require a mere 22,500 genes to construct. The baffling question is, how is it possible to construct a human being with just under five times the number of genes as a simple single-celled E. coli bacterium? The authors contend that carbon-based life can only do so by relying heavily upon reusable code in the genomes of complex organisms.

Figure 1 – A simple single-celled E. coli bacterium is constructed using a full 4,600 genes.

Figure 2 – However, a human being, consisting of tens of trillions of cells that are differentiated into the hundreds of differing cell types used to form the organs of the human body, uses a mere 22,500 genes to construct a very complex body, which is just slightly under five times the number of genes used by simple E. coli bacteria to construct a single cell. How is it possible to explain this huge dynamic range of carbon-based life? Marc W. Kirschner and John C. Gerhart maintain that, like complex software, carbon-based life must heavily rely upon reusable code.

For a nice synopsis of the theory of facilitated variation see the authors' paper The theory of facilitated variation at:

http://www.pnas.org/content/104/suppl_1/8582.long

The theory of facilitated variation is an important idea, but since it does not seem to have garnered the attention in the biological community that it justly deserves, I would like to focus on it in this posting. Part of the problem is that it is a relatively new theory that only appeared in 2005, so it has a very long way to go in the normal lifecycle of all new theories:

1. First it is ignored

2. Then it is wrong

3. Then it is obvious

4. Then somebody else thought of it 20 years earlier!

The other problem that the theory of facilitated variation faces is not limited to the theory itself. Rather, it stems from the problem that all such theories in biology must face, namely, that we only have one form of carbon-based life on this planet at our disposal, and we were not around to watch it evolve. That is where softwarephysics can offer some help. From the perspective of softwarephysics, the theory of facilitated variation is most certainly true because we have seen the very same thing arise in the evolution of software over the past 76 years, or 2.4 billion seconds, ever since Konrad Zuse first cranked up his Z3 computer in May of 1941. Again, the value of softwarephysics is that software has been evolving about 100 million times faster than living things for the past 2.4 billion seconds, and all of that evolutionary history occurred within a single human lifetime, with many of the participants still alive today to testify as to what actually had happened, something that those working on the evolution of carbon-based life can only try to imagine. Recall that one of the fundamental findings of softwarephysics is that living things and software are both forms of self-replicating information and that both have converged upon similar solutions to combat the second law of thermodynamics in a highly nonlinear Universe.

For more on that see: A Brief History of Self-Replicating Information.

Using the Evolution of Software as a Guide

On the Earth, we have seen carbon-based life go through three major architectural advances over the past 4 billion years, heavily using the reusable code techniques described by the theory of facilitated variation along the way.

1. The origin of life about 4 billion years ago, probably on land in the early hydrothermal fields of the early Earth, producing the prokaryotic cell architecture. See The Bootstrapping Algorithm of Carbon-Based Life.

2. The rise of the complex eukaryotic cell architecture about 2 billion years ago. See The Rise of Complexity in Living Things and Software.

3. The rise of multicellular organisms consisting of millions, or billions, of eukaryotic cells all working together in the Ediacaran about 635 million years ago. See Software Embryogenesis.

The evolutionary history of software on the Earth has converged upon a very similar historical path through Design Space because software also had to battle with the second law of thermodynamics in a highly nonlinear Universe - see The Fundamental Problem of Software for more on that. Software progressed through these similar architectures:

1. The origin of simple unstructured prokaryotic software on Konrad Zuse's Z3 computer in May of 1941 - 2.4 billion seconds ago.

2. The rise of structured eukaryotic software in 1972 - 1.4 billion seconds ago.

3. The rise of object-oriented software (software using multicellular organization) in 1995 - 694 million seconds ago.

For more details on the above evolutionary history of software see the SoftwarePaleontology section of SoftwareBiology.
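The "seconds ago" figures in the list above are easy to check. The short Python sketch below does the arithmetic, assuming that "now" is 2017 (which is what 76 years after 1941 implies) and using a year of 365.25 days. The function and variable names are my own and are only for illustration.

```python
# Back-of-the-envelope check of the "seconds ago" figures.
# Assumption: the posting counts from 2017 and a year is 365.25 days long.
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # 31,557,600 seconds


def seconds_since(year, now=2017):
    """Approximate number of seconds that elapsed between `year` and `now`."""
    return (now - year) * SECONDS_PER_YEAR


MILESTONES = {
    "unstructured prokaryotic software": 1941,
    "structured eukaryotic software": 1972,
    "object-oriented multicellular software": 1995,
}

for name, year in MILESTONES.items():
    print(f"{name}: about {seconds_since(year) / 1e9:.2f} billion seconds ago")
```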

Genetic Material is the Source Code of Carbon-Based Life

Before seeing how the historical evolution of reusable code in software lends credence to the theory of facilitated variation for carbon-based life, we need to cover a few basics. We first need to determine the software equivalent of genetic material. The genetic material of software is called source code. Like genes strung out along the DNA of chromosomes, source code is a set of instructions that really cannot do anything on its own. The source code has to be first compiled into an executable file, containing the primitive machine instructions for a computer to execute, before it can be run by a computer to do useful things. Once the executable file is loaded into a computer and begins to run, it finally begins to do things and displays its true phenotype. For example, when you double-click on an icon on your desktop, like Microsoft Word, you are loading the Microsoft Word winword.exe executable file into the memory of your computer where it begins to execute under a PID (process ID). You can then use CTRL-ALT-DEL to launch the Windows Task Manager, and click on the Processes tab to find winword.exe running under a specific PID. This compilation process is very similar to the transcription and translation process that living things use to form proteins by stringing together amino acids in the proper sequence. The output of that process is an executable protein that can begin processing organic molecules the moment it folds up into its usable form, and it is similar to the executable file that results from compiling the source code of a program.

For living things, of course, the equivalent of source code is the genes stored on stretches of DNA. In order to do something useful, the information in a gene, or stretch of DNA, has to be first transcribed into mRNA and then translated into a protein because nearly all of the biological functions of carbon-based life are performed by proteins. This process is accomplished with the help of a number of enzymes, proteins that have a catalytic ability to speed up biochemical reactions. The sequence of operations aided by enzymes goes like this:

DNA → mRNA → tRNA → Amino Acid chain → Protein

More specifically, a protein is formed by combining 20 different amino acids into different sequences, and on average it takes about 400 amino acids strung together to form a functional protein. The information to do that is encoded in base pairs running along a strand of DNA. Each base can be in one of four states – A, C, G, or T, and an A will always be found to pair with a T, while a C will always pair with a G. So DNA is really a 2 track tape with one data track and one parity track. For example, if there is an A on the DNA data track, you will find a T on the DNA parity track. This allows not only for the detection of parity errors but also for the correction of parity errors in DNA by enzymes that run up and down the DNA tape looking for parity errors and correcting them.

Figure 3 – DNA is a two-track tape, with one data track and one parity track. This allows not only for the detection of parity errors but also for the correction of parity errors in DNA by enzymes that run up and down the DNA tape looking for parity errors and correcting them.
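For IT readers, the data track and parity track idea can be sketched in a few lines of Python. This is only a toy model under some loud assumptions: it ignores the fact that the two strands of real DNA run in opposite directions, and it simply assumes that the data track is the good copy, whereas real repair enzymes have to work out which strand was damaged. The function names are hypothetical.

```python
# Pairing rules from the text: A pairs with T, and C pairs with G.
PAIR = {"A": "T", "T": "A", "C": "G", "G": "C"}


def parity_track(data_track):
    """Build the complementary "parity" track for a given data track."""
    return "".join(PAIR[base] for base in data_track)


def find_parity_errors(data_track, parity):
    """Return the positions where the two tracks are no longer complementary."""
    return [
        position
        for position, (data_base, parity_base) in enumerate(zip(data_track, parity))
        if PAIR[data_base] != parity_base
    ]


def repair(data_track, parity):
    """Correct the parity track. Assumption: the data track is the undamaged copy."""
    repaired = list(parity)
    for position in find_parity_errors(data_track, parity):
        repaired[position] = PAIR[data_track[position]]
    return "".join(repaired)


data = "ATGCCGTA"
good_parity = parity_track(data)
damaged_parity = good_parity[:3] + "A" + good_parity[4:]  # one corrupted base

print(find_parity_errors(data, damaged_parity))
print(repair(data, damaged_parity) == good_parity)
```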

Now a single base pair can code for 4 different amino acids because a single base pair can be in one of 4 states. Two base pairs can code for 4 x 4 = 16 different amino acids, which is not enough. Three base pairs can code for 4 x 4 x 4 = 64 amino acids, which is more than enough to code for 20 different amino acids. So it takes a minimum of three bases to fully encode the 20 different amino acids, leaving 44 combinations left over for redundancy. Biologists call these three base pair combinations a “codon”, but a codon really is just a biological byte composed of three biological bits or base pairs that code for an amino acid. Actually, three of the base pair combinations, or codons, are used as STOP codons – TAA, TAG and TGA, which are essentially end-of-file markers designating the end of a gene along the sequential file of DNA. As with magnetic tape, there is a section of “junk” DNA between genes along the DNA 2 track tape. According to Shannon’s equation, a DNA base contains 2 bits of information, so a codon can store 6 bits. For more on this see Some More Information About Information.
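The counting argument above can be checked with a small Python sketch. It assumes nothing beyond the four bases and the 20 amino acids mentioned in the text, and the names used are mine.

```python
import math
from itertools import product

BASES = "ACGT"
AMINO_ACIDS = 20


def codewords(length):
    """Number of distinct base sequences of the given length."""
    return len(BASES) ** length


for length in (1, 2, 3):
    enough = "enough" if codewords(length) >= AMINO_ACIDS else "not enough"
    print(f"{length} base(s): {codewords(length)} combinations, {enough}")

# Shortest codon length that can distinguish all 20 amino acids.
min_length = next(n for n in range(1, 10) if codewords(n) >= AMINO_ACIDS)

# Shannon information: log2 of the number of equally likely states.
bits_per_base = math.log2(len(BASES))
bits_per_codon = min_length * bits_per_base

# Enumerate every possible codon to confirm the count.
all_codons = ["".join(triplet) for triplet in product(BASES, repeat=min_length)]
spare = len(all_codons) - AMINO_ACIDS

print(min_length, bits_per_base, bits_per_codon, spare)
```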

Figure 4 – Three bases combine to form a codon, or a biological byte, composed of three biological bits, and encodes the information for one amino acid along the chain of amino acids that form a protein.

The beginning of a gene is denoted by a section of promoter DNA that identifies the beginning of the gene, like the CustomerID field on a record, and the gene is terminated by a STOP codon of TAA, TAG or TGA. Just as there was a 0.50 inch gap of “junk” tape between blocks of records on a magnetic computer tape, there is a section of “junk” DNA between each gene along the 6 feet of DNA tape found within human cells.

Figure 5 - On average, each gene is about 400 codons long and ends in a STOP codon TAA, TAG or TGA which are essentially end-of-file markers designating the end of a gene along the sequential file of DNA. As with magnetic tape, there is a section of “junk” DNA between genes which is shown in grey above.

In order to build a protein, genes are first transcribed to an I/O buffer called mRNA. The 2 track DNA file for a gene is first opened near the promoter of a gene and an enzyme called RNA polymerase then begins to copy the codons or biological bytes along the data track of the DNA tape to an mRNA I/O buffer. The mRNA I/O buffer is then read by a ribosome read/write head as it travels along the mRNA I/O buffer. The ribosome read/write head reads each codon or biological byte of data along the mRNA I/O buffer and writes out a chain of amino acids as tRNA brings in one amino acid after another in the sequence specified by the mRNA I/O buffer.

Figure 6 - In order to build a protein, genes are first transcribed to an I/O buffer called mRNA. The 2 track DNA file for a gene is first opened near the promoter of a gene and an enzyme called RNA polymerase then begins to copy the codons or biological bytes along the data track of the DNA tape to the mRNA I/O buffer. The mRNA I/O buffer is then read by a ribosome read/write head as it travels along the mRNA I/O buffer. The ribosome read/write head reads each codon or biological byte of data along the mRNA I/O buffer and writes out a chain of amino acids as tRNA brings in one amino acid after another in the sequence specified by the mRNA I/O buffer.
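The read and write steps described above can be sketched in Python. Please treat this as a cartoon rather than real molecular biology: the codon table is a tiny excerpt of the standard genetic code that I picked for the example, transcription is modeled as simply copying the data track with U written in place of T, and the function names are hypothetical.

```python
# A small excerpt of the standard genetic code, spelled with RNA bases.
# None marks a STOP codon (the end-of-file marker).
CODON_TABLE = {
    "AUG": "Met", "UUA": "Leu", "CUG": "Leu", "GGC": "Gly", "AAA": "Lys",
    "UAA": None, "UAG": None, "UGA": None,
}


def transcribe(gene):
    """Copy the DNA data track into an mRNA I/O buffer (T is written as U)."""
    return gene.replace("T", "U")


def translate(mrna):
    """Read the mRNA buffer one codon at a time and write out an amino acid chain."""
    chain = []
    for start in range(0, len(mrna) - 2, 3):
        amino_acid = CODON_TABLE[mrna[start:start + 3]]
        if amino_acid is None:  # STOP codon reached
            break
        chain.append(amino_acid)
    return chain


gene = "ATGTTAGGCAAATAA"
mrna = transcribe(gene)
print(mrna)
print(translate(mrna))
```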

The above is a brief synopsis of how simple prokaryotic bacteria and archaea build proteins from the information stored in DNA. The process for eukaryotes is a bit more complex because eukaryotes have genes containing exons and introns. The exons code for the amino acid sequence of a protein, while the introns do not. For more on that and a more detailed comparison of the processing of genes on 2 track DNA and the processing of computer data on 9 track magnetic tapes back in the 1970s and 1980s see: An IT Perspective on the Origin of Chromatin, Chromosomes and Cancer.

Once an amino acid chain has folded up into a 3-D protein molecule, it can then perform one of the functions of life. The total set of genes used by a particular species is known as the genome of the species, and the specific variations of those genes used by an individual are the genotype of the individual. The specific physical characteristics that those particular genes produce are called the phenotype of the individual. For example, there is a certain variation of a human gene that produces blue eyes and a certain variation that produces brown eyes. If you have two copies of the blue-eyed gene, one from your father and one from your mother, you end up as a phenotype with blue eyes. With any other combination of genes, you will end up as a brown-eyed phenotype.

The Imperative Need for the Use of Reusable Code in the Evolution of Software

It is time to look at some source code. Below are three examples of the source code to compute the average of some numbers that you enter from your keyboard when prompted. The programs are written in the C, C++, and Java programming languages. Please note that modern applications now consist of many thousands to many millions of lines of code. The simple examples below are just for the benefit of our non-IT readers to give them a sense of what is being discussed when I describe the compilation of source code into executable files.

Figure 7 – Source code for a C program that calculates an average of several numbers entered at the keyboard.

Figure 8 – Source code for a C++ program that calculates an average of several numbers entered at the keyboard.

Figure 9 – Source code for a Java program that calculates an average of several numbers entered at the keyboard.

The strange thing is that, although the source code for each of the three small programs above looks somewhat similar, closer inspection shows that they are not identical, yet all three produce exactly the same phenotype when compiled and run. You would not be able to tell which was which by simply running the executable files for the programs because they all have the same phenotype behavior. This is especially strange because the programs are written in three different programming languages! Similarly, you could change the names of the variables "sum" and "count" to "total" and "num_values" in each program, and they would all compile into executables that produced exactly the same phenotype when run. The same is true for the genes of carbon-based life. Figure 10 down below reveals that the translation table of carbon-based life is highly redundant, so that the codons TTA, TTG, CTT, CTC, CTA and CTG marked in green all code for the same amino acid leucine, and therefore, any one of these six codons could be replaced in a gene by any of the others without changing the resulting protein at all. Thus there potentially are a huge number of genes that could code for exactly the same protein.

Figure 10 – A closer look at Figure 4 reveals that the translation table of carbon-based life is highly redundant, so that the codons TTA, TTG, CTT, CTC, CTA and CTG marked in green all code for the same amino acid leucine, and therefore, any one of these six codons could be replaced in a gene by any of the others without changing the resulting protein at all.
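To make the redundancy concrete, here is a minimal Python sketch showing two differently spelled genes that yield the same amino acid chain. The six leucine codons and the three STOP codons come from the text above. The Met and Gly entries and the two example genes are my own additions for illustration.

```python
LEUCINE_CODONS = {"TTA", "TTG", "CTT", "CTC", "CTA", "CTG"}
STOP_CODONS = {"TAA", "TAG", "TGA"}

# Partial translation table: just enough entries for this example.
CODON_TABLE = {codon: "Leu" for codon in LEUCINE_CODONS}
CODON_TABLE.update({"ATG": "Met", "GGC": "Gly"})


def protein(gene):
    """Translate a gene codon by codon until a STOP codon is reached."""
    chain = []
    for start in range(0, len(gene) - 2, 3):
        codon = gene[start:start + 3]
        if codon in STOP_CODONS:
            break
        chain.append(CODON_TABLE[codon])
    return chain


# Two different "source code" spellings of the same protein.
gene_a = "ATG" + "TTA" + "GGC" + "CTG" + "TAA"
gene_b = "ATG" + "CTC" + "GGC" + "TTG" + "TGA"

print(gene_a != gene_b, protein(gene_a) == protein(gene_b))

# With two leucines in the chain there are already 6 x 6 synonymous spellings,
# before even counting the alternative STOP codons.
synonymous_spellings = len(LEUCINE_CODONS) ** 2
print(synonymous_spellings)
```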

Based upon the above analysis, it would seem that producing useful software source code, or useful DNA genes, should be nearly a slam dunk because there appears to be a nearly infinite number of ways to produce the same useful executable file or the same useful protein from a phenotypic perspective. But for anybody who has ever tried to write software, that is clearly not the case, and by inference, it cannot be the case for genes either. The real problem with both genes and source code comes when you try to make some random substitutions, additions or deletions to an already functioning gene or piece of source code. For example, if you take the source code file for an apparently bug-free program and scramble it with some random substitutions, additions or deletions, the odds are that you will end up with something that no longer works. You end up with a low-information high-entropy mess. That is because the odds of creating an even better version of the program by inserting random substitutions, additions or deletions are quite low, while the odds of turning it into a mess are quite high. There are simply too many ways of messing up the program to win at that game, and the same goes for making random substitutions, additions or deletions to genes as well. This is simply the effect of the second law of thermodynamics in action. The second law of thermodynamics constantly degrades low-entropy useful information into high-entropy useless information - see: The Demon of Software for more on that.
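This claim can be tried out on a very small scale. The Python sketch below takes a one-line "program" that computes an average, applies every possible single-character substitution from a small alphabet, and counts how many mutants still return the right answers. Everything here is an assumption chosen for illustration: the one-line expression, the alphabet, and the test inputs are mine, and real mutations also include additions and deletions that are not modeled. The mutants are evaluated with only sum, len and xs visible so that a stray mutant cannot call anything else.

```python
import string

SOURCE = "sum(xs) / len(xs)"  # a working "gene" that computes an average
ALPHABET = string.ascii_lowercase + "+-*/() "
TEST_INPUTS = [[1, 2, 4], [10, 20], [3, 3, 3, 4]]


def works(source):
    """True if the expression still returns the correct average for every test input."""
    for xs in TEST_INPUTS:
        expected = sum(xs) / len(xs)
        try:
            result = eval(source, {"__builtins__": {}, "sum": sum, "len": len}, {"xs": xs})
            if result != expected:
                return False
        except Exception:  # syntax errors, unknown names, type errors, ...
            return False
    return True


def single_substitutions(source):
    """Yield every variant of `source` that differs in exactly one character."""
    for position, original in enumerate(source):
        for replacement in ALPHABET:
            if replacement != original:
                yield source[:position] + replacement + source[position + 1:]


mutants = list(single_substitutions(SOURCE))
survivors = [mutant for mutant in mutants if works(mutant)]

print(f"{len(mutants)} mutants, {len(survivors)} still compute the average")
```

The few survivors are the software equivalent of neutral mutations. None of them is a better program than the original.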

For example, I first started programming software back in 1972 as a physics major at the University of Illinois in Urbana, and this reminds me of an incident from 1973, when I and two other geophysics graduate students at the University of Wisconsin in Madison tried to convert a 100-line Fortran program for an FFT (Fast Fourier Transform), which we had found listed in a textbook, into the Basic programming language that ran on our DEC PDP-8/e minicomputer. Since Fortran and Basic were so similar, we figured that converting the program would be a cinch, and we confidently started to punch the program into the teletype machine connected to the minicomputer, using the line editor that came with it. We were all novice programmers at the time, each with just one class in Fortran programming under our belts, but back in those days that made us all instant experts in the new field of computer science in the view of our elderly professors. Being rather naïve, we thought that all we had to do was translate each line of Fortran source code into a corresponding line of Basic source code, so we eagerly plunged into programming off the top of our heads. However, much to our surprise, our very first Basic FFT program abended on its very first run! I mean, all we had to do was make some slight syntax changes, like asking an Englishman for the location of the nearest petrol station while motoring through the English countryside. After about 6 hours of intense and very frustrating labor, we finally had our Basic FFT program spitting out the correct answers. That early adventure with computers actually made me long for my old trusty slide rule!
You see, that was the very first time I had ever actually tried to get a computer to do something that I really wanted it to do, rather than just completing one of those pesky assignments in my Fortran class at the University of Illinois. I guess that I had mistakenly figured that the programs assigned in my Fortran class were especially tricky because the professor was just trying to winnow out the real losers so that they would not continue on in computer science! I was pretty young back then.

As outlined in The Fundamental Problem of Software, it turns out that we young graduate students, like all IT professionals throughout history, had been fighting the second law of thermodynamics in a highly nonlinear Universe. The second law guarantees that for any valid FFT program, there is a nearly infinite number of matching invalid FFT programs, so the odds are that whenever you make a small change to some functioning software source code, in hopes of improving it, you will instead end up with some software that no longer works at all! That is because the Universe is highly nonlinear in nature, so small erroneous changes in computer source code usually produce catastrophic behaviors in the phenotypes of the executable files that they generate - see Software Chaos for more on that. The same goes for genes that produce functioning proteins. Small changes to those genes are not likely to produce proteins that are even better at what they do. Instead, small changes to genes will likely produce proteins that are totally nonfunctional, and since the Universe is highly nonlinear in nature, those nonfunctional proteins are likely to be lethal.

Figure 11 – Some graduate students huddled around a DEC PDP-8/e minicomputer entering program source code into the machine. Notice the teletype machines in the foreground on the left. Our machine cost about $30,000 in 1973 dollars ($166,000 in 2017 dollars) and was about the size of a large side-by-side refrigerator, with 32K of magnetic core memory. I just checked, and you can now buy a laptop online with 4 GB of memory (131,072 times as much memory) for $179.99.

So how do you deal with such a quandary when trying to produce innovative computer software or genes? Well, back in 1973 when we finally had a functioning Basic FFT program, we quickly turned it into a Basic subroutine that could be reused by all. It turned out that nearly all of the graduate students in the Geophysics Department at the University of Wisconsin at Madison who were using the DEC PDP-8/e minicomputer for their thesis work needed to run FFTs, so this Basic FFT subroutine was soon reused by them all. Of course, we were not the first to discover the IT trick of using reusable code. Anybody who has ever done some serious coding soon discovers the value of building up a personal library of reusable code to draw upon when writing new software. The easiest way to reuse computer source code is to simply "copy/paste" sections of code into the source code that you are working on, but that was not so easy back in the 1950s and 1960s because people were programming on punch cards.

Figure 12 - Each card could hold a maximum of 80 bytes. Normally, one line of code was punched onto each card.

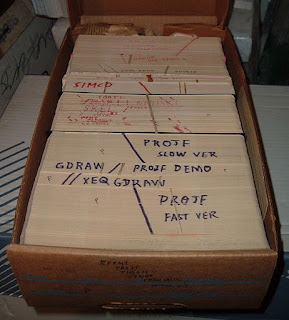

Figure 13 - The cards for a program were held together into a deck with a rubber band, or for very large programs, the deck was held in a special cardboard box that originally housed blank cards. Many times the data cards for a run followed the cards containing the source code for a program. The program was compiled and linked in two steps of the run and then the generated executable file processed the data cards that followed in the deck.

Figure 14 - To run a job, the cards in a deck were fed into a card reader, as shown on the left above, to be compiled, linked, and executed by a million dollar mainframe computer with a clock speed of about 750 KHz and about 1 MB of memory.

Figure 15 - The cards were cut on an IBM 029 keypunch machine, like the one I learned to program Fortran on back in 1972 at the University of Illinois. The IBM 029 keypunch machine did have a way of duplicating a card by feeding the card to be duplicated into the machine, while at the same time, a new blank card was registered in the machine ready for punching, and the information on the first card was then punched on to the second card. This was a very slow and time-consuming way to copy reusable code from one program to another. But there was another machine that could do the same thing for a whole deck of cards all at once, and that machine was much more useful in duplicating existing cards that could then be spliced into the card deck that you were working on.

As noted above, the IBM 029 keypunch machine did allow you to copy one card at a time, but that was a rather slow way to copy cards for reuse. The machine that could read an entire card deck and punch out a duplicate deck in one shot made it much easier to copy the cards from an old deck and splice them into the card deck that you were working on. Even so, that was a fairly clumsy way of using reusable code, so trying to use reusable code back during the unstructured prokaryotic days of IT prior to 1972 was difficult at best. Also, the chaotic nature of the unstructured prokaryotic "spaghetti code" of the 1950s and 1960s made it difficult to splice in reusable code on cards.

This all changed in the early 1970s with the rise of structured eukaryotic source code that divided functions up amongst a set of subroutines, or organelles, and the arrival of the IBM 3278 terminal and the IBM ISPF screen editor on TSO in the late 1970s that eliminated the need to program on punch cards. One of the chief characteristics of structured programming was the use of "top-down" programming, where programs began execution in a mainline routine that then called many other subroutines. The purpose of the mainline routine was to perform simple high-level logic that called the subordinate subroutines in a fashion that was easy to follow. This structured programming technique made it much easier to maintain and enhance software by simply calling subroutines from the mainline routine in the logical manner required to perform the needed tasks, like assembling Lego building blocks into different patterns that produced an overall new structure. The structured approach to software also made it much easier to reuse software. All that was needed was to create a subroutine library of reusable source code that was already compiled. A mainline program that made calls to the subroutines of the subroutine library was compiled as before. The machine code for the previously compiled subroutines was then added to the resulting executable file by a linkage editor. This made it much easier for structured eukaryotic programs to use reusable code by simply putting the software "conserved core processes" into already compiled subroutine libraries. The ISPF screen editor running under TSO on IBM 3278 terminals also made it much easier to reuse source code because now many lines of source code could be simply copied from one program file to another, with the files stored on disk drives, rather than on punch cards or magnetic tape.
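For readers who have never seen structured code, here is a minimal Python sketch of the idea, not a reconstruction of any 1970s program. A small library of subroutines plays the role of the "conserved core processes", and two different mainline routines reuse them by doing nothing more than calling them in a different order. All of the names are hypothetical.

```python
# --- A reusable subroutine library: the software "conserved core processes" ---
def read_values(text):
    """Parse whitespace-separated numbers from a line of input text."""
    return [float(token) for token in text.split()]


def mean(values):
    """Average of a list of numbers."""
    return sum(values) / len(values)


def spread(values):
    """Difference between the largest and smallest value."""
    return max(values) - min(values)


def report(label, value):
    """Format one line of output."""
    return f"{label}: {value:.2f}"


# --- Mainline routines: simple high-level logic that only calls subroutines ---
def summarize(text):
    values = read_values(text)
    return [report("mean", mean(values)), report("range", spread(values))]


def center(text):
    values = read_values(text)
    average = mean(values)
    return [value - average for value in values]


print(summarize("1 2 3 6"))
print(center("1 2 3"))
```

Neither mainline routine had to touch the insides of the subroutines, which is the point. New behavior comes from rearranging calls to code that already works.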

Figure 16 - The IBM ISPF full screen editor ran on IBM 3278 terminals connected to IBM mainframes in the late 1970s. ISPF was also a screen-based interface to TSO (Time Sharing Option) that allowed programmers to do things like copy files and submit batch jobs. ISPF and TSO running on IBM mainframes allowed programmers to easily reuse source code by doing copy/paste operations with the screen editor from one source code file to another. By the way, ISPF and TSO are still used today on IBM mainframe computers and are fine examples of the many "conserved core processes" to be found in software.

The strange thing is that most of the core fundamental processes of computer data processing, such as sort and merge algorithms, were developed back in the 1950s during the unstructured prokaryotic period, but as we have seen, they could not be easily reused because of technical difficulties that were not alleviated until the structured eukaryotic period of the early 1970s. Similarly, many of the fundamental "conserved core processes" of carbon-based life were also developed during the first 2.5 billion years of the early Earth by the prokaryotic bacteria and archaea. It became much easier for these "conserved core processes" to be reused when the eukaryotic cell architecture arose about 2 billion years ago.

Figure 17 – The Quicksort algorithm was developed by Tony Hoare in 1959 and applies a prescribed sequence of actions to an input list of unsorted numbers. It very efficiently outputs a sorted list of numbers, no matter what unsorted input it operates upon.
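For the curious, here is a minimal sketch of Quicksort in Python. It is the simple textbook variant that builds new lists around a pivot, not Hoare's original in-place partitioning scheme, and picking the middle element as the pivot is just one common choice.

```python
def quicksort(values):
    """Return a new sorted list using the divide-and-conquer Quicksort idea."""
    if len(values) <= 1:
        return list(values)  # a list of 0 or 1 items is already sorted

    pivot = values[len(values) // 2]
    smaller = [value for value in values if value < pivot]
    equal = [value for value in values if value == pivot]
    larger = [value for value in values if value > pivot]

    # Sort the two sides independently and glue the pieces back together.
    return quicksort(smaller) + equal + quicksort(larger)


print(quicksort([33, 4, 15, 8, 15, 42, 1]))
```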

Multicellular Organization and the Major Impact of Reusable Code

But as outlined in the theory of facilitated variation the real impact of reusable code was not fully felt until carbon-based life stumbled upon the revolutionary architecture of multicellular organization, and the same was true in the evolutionary history of software. In software, multicellular organization is obtained through the use of object-oriented programming languages. Some investigation reveals that object-oriented programming has actually been around since 1962, but that it did not catch on at first. In the late 1980s, the use of the very first significant object-oriented programming language, known as C++, started to appear in corporate IT, but object-oriented programming really did not become significant in IT until 1995 when both Java and the Internet Revolution arrived at the same time. The key idea in object-oriented programming is naturally the concept of an object. An object is simply a cell. Object-oriented languages use the concept of a Class, which is a set of instructions for building an object (cell) of a particular cell type in the memory of a computer. Depending upon whom you cite, there are several hundred different cell types in the human body, but in IT we generally use many thousands of cell types or Classes in commercial software. For a brief overview of these concepts go to the webpage below and follow the links by clicking on them.

Lesson: Object-Oriented Programming Concepts

http://docs.oracle.com/javase/tutorial/java/concepts/index.html

A Class defines the data that an object stores in memory and also the methods that operate upon the object data. Remember, an object is simply a cell. Methods are like biochemical pathways that consist of many steps or lines of code. A public method is a biochemical pathway that can be invoked by sending a message to a particular object, like using a ligand molecule secreted from one object to bind to the membrane receptors on another object. This binding of a ligand to a public method of an object can then trigger a cascade of private internal methods within an object or cell.

Figure 18 – A Class contains the instructions for building an object in the memory of a computer and basically defines the cell type of an object. The Class defines the data that an object stores in memory and also the methods that can operate upon the object data.

Figure 19 – Above is an example of a Bicycle object. The Bicycle object has three private data elements - speed in mph, cadence in rpm, and a gear number. These data elements define the state of a Bicycle object. The Bicycle object also has three public methods – changeGears, applyBrakes, and changeCadence that can be used to change the values of the Bicycle object’s internal data elements. Notice that the code in the object methods is highly structured and uses code indentation to clarify the logic.

Figure 20 – Above is some very simple Java code for a Bicycle Class. Real Class files have many data elements and methods and are usually hundreds of lines of code in length.
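
For readers who cannot see the figure, a Bicycle Class along the lines described in Figure 19 might look something like the sketch below. The three private data elements and the three public method names come from the description above. The constructor, the getter methods, the describe method and the rule that braking cannot push the speed below zero are my own assumptions, added only so that the example can be run.

```java
class Bicycle {

    // Private data elements: the internal state of the object (cell).
    private int speed;    // mph
    private int cadence;  // rpm
    private int gear;

    // Hypothetical constructor that sets the initial state.
    Bicycle(int speed, int cadence, int gear) {
        this.speed = speed;
        this.cadence = cadence;
        this.gear = gear;
    }

    // Public methods: the only way other objects can change the state.
    public void changeGears(int newGear) {
        gear = newGear;
    }

    public void changeCadence(int newCadence) {
        cadence = newCadence;
    }

    public void applyBrakes(int decrement) {
        // Assumption: the speed never drops below zero.
        speed = Math.max(0, speed - decrement);
    }

    // Hypothetical read-only accessors.
    public int getSpeed() { return speed; }
    public int getCadence() { return cadence; }
    public int getGear() { return gear; }

    public String describe() {
        return "speed=" + speed + " mph, cadence=" + cadence + " rpm, gear=" + gear;
    }

    public static void main(String[] args) {
        Bicycle bike = new Bicycle(12, 60, 2);
        bike.changeCadence(75);
        bike.changeGears(3);
        bike.applyBrakes(5);
        System.out.println(bike.describe()); // speed=7 mph, cadence=75 rpm, gear=3
    }
}
```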

Figure 21 – Many different objects can be created from a single Class just as many cells can be created from a single cell type. The above List objects are created by instantiating the List Class three times and each List object contains a unique list of numbers. The individual List objects have public methods to insert or remove numbers from the objects and also a private internal sort method that could be called whenever the public insert or remove methods are called. The private internal sort method automatically sorts the numbers in the List object whenever a number is added or removed from the object.
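
The idea in Figure 21 can be sketched as follows. This is a rough illustration only: the Class is called NumberList here (a hypothetical name, chosen to avoid clashing with Java's own java.util.List), and the contents method is an assumption added so that the state of each object can be inspected.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

class NumberList {

    // Each object created from this Class gets its own private list.
    private final List<Integer> numbers = new ArrayList<>();

    // Public method: add a number, then re-sort internally.
    public void insert(int n) {
        numbers.add(n);
        sort();
    }

    // Public method: remove one occurrence of a number, then re-sort.
    public boolean remove(int n) {
        boolean removed = numbers.remove(Integer.valueOf(n));
        sort();
        return removed;
    }

    // Hypothetical accessor returning a read-only copy of the numbers.
    public List<Integer> contents() {
        return Collections.unmodifiableList(new ArrayList<>(numbers));
    }

    // Private internal method: callers never invoke this directly.
    private void sort() {
        Collections.sort(numbers);
    }

    public static void main(String[] args) {
        // Three objects instantiated from one Class, like three cells
        // of the same cell type, each holding its own data.
        NumberList first = new NumberList();
        NumberList second = new NumberList();
        NumberList third = new NumberList();

        first.insert(3);
        first.insert(1);
        first.insert(2);
        second.insert(42);
        third.insert(9);
        third.insert(7);
        third.remove(9);

        System.out.println(first.contents());  // [1, 2, 3]
        System.out.println(second.contents()); // [42]
        System.out.println(third.contents());  // [7]
    }
}
```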

Figure 22 – Objects communicate with each other by sending messages. Really one object calls the exposed public methods of another object and passes some data to the object it calls, like one cell secreting a ligand molecule that then plugs into a membrane receptor on another cell.

Figure 23 – In a growing embryo, the cells communicate with each other by sending out ligand molecules called morphogens, or paracrine factors, that bind to the membrane receptors on other cells.

Figure 24 – Calling a public method of an object can initiate the execution of a cascade of private internal methods within the object. Similarly, when a paracrine factor molecule plugs into a receptor on the surface of a cell, it can initiate a cascade of internal biochemical pathways. In the above figure, an Ag protein plugs into a BCR receptor and initiates a cascade of biochemical pathways or methods within a cell.
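
The message passing of Figure 22 and the internal cascade of Figure 24 can be sketched together in one small Class. Everything named here (Cell, receive, secrete, startPathway, finishPathway, events) is hypothetical and chosen only to mirror the biological analogy. It is not meant to model any real signaling pathway.

```java
import java.util.ArrayList;
import java.util.List;

class Cell {

    private final String name;
    private final List<String> events = new ArrayList<>();

    Cell(String name) {
        this.name = name;
    }

    // Public method: the "membrane receptor" that other objects may call.
    public void receive(String ligand) {
        events.add(name + " received " + ligand);
        startPathway(ligand); // kicks off the private cascade
    }

    // Sending a message is simply calling another object's public method.
    public void secrete(String ligand, Cell target) {
        target.receive(ligand);
    }

    // Private methods: the internal cascade, invisible to other objects.
    private void startPathway(String ligand) {
        events.add("pathway started by " + ligand);
        finishPathway();
    }

    private void finishPathway() {
        events.add("pathway finished");
    }

    // Returns a copy of the recorded events for inspection.
    public List<String> events() {
        return new ArrayList<>(events);
    }

    public static void main(String[] args) {
        Cell sender = new Cell("cell A");
        Cell receiver = new Cell("cell B");
        sender.secrete("ligand", receiver);
        for (String event : receiver.events()) {
            System.out.println(event);
        }
    }
}
```

The sender only knows about the public receive method. The two private steps could be rewritten or rearranged without the sender ever noticing, which is the kind of weak linkage the analogy relies on.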

When a high-volume corporate website, consisting of many millions of lines of code running on hundreds of servers, starts up and begins taking traffic, billions of objects (cells) begin to be instantiated in the memory of the servers in a matter of minutes and then begin to exchange messages with each other in order to perform the functions of the website. Essentially, when the website boots up, it quickly grows to a mature adult through a period of very rapid embryonic growth and differentiation, as billions of objects are created and differentiated to form the tissues of the website organism. These objects then begin exchanging messages with each other by calling public methods on other objects to invoke cascades of private internal methods which are then executed within the called objects - for more on that see Software Embryogenesis.

Object-Oriented Class Libraries

In addition to implementing a form of multicellular organization in software, the object-oriented programming languages, like C++ and Java, brought with them the concept of a class library. A class library consists of the source code for a large number of reusable Classes for a multitude of objects. For example, for a complete list of the reusable objects in the Java programming language see:

Java Platform, Standard Edition 7

API Specification

https://docs.oracle.com/javase/7/docs/api/

The "All Classes" pane in the lower left of the webpage lists all of the built-in classes of the Java programming language.

Figure 25 – All of the built-in Classes of the Java programming language inherit the data elements and methods of the undifferentiated stem cell Object.

The reusable classes in a class library all inherit the characteristics of the fundamental class Object. An Object object is like a stem cell that can differentiate into a large number of other kinds of objects. Below is a link to the Object Class and all of its reusable methods:

Class Object

https://docs.oracle.com/javase/7/docs/api/java/lang/Object.html

For example, a String object is a particular object type that inherits all of the methods of a general Object stem cell and can perform many operations on the string of characters it contains via the methods of a String object.

Figure 26 – A String object contains a number of characters in a string. A String object inherits all of the general methods of the Object Class, and contains many additional methods, like finding a substring in the characters stored in the String object.
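
A short sketch can make the stem cell relationship concrete. It uses only standard methods of java.lang.Object and java.lang.String; the class StringInheritanceSketch and its helper parentClassOf are hypothetical names introduced for this illustration.

```java
class StringInheritanceSketch {

    // Returns the name of the direct parent Class of an object's Class.
    // Assumes the argument is not a plain Object instance, which has no parent.
    static String parentClassOf(Object o) {
        return o.getClass().getSuperclass().getName();
    }

    public static void main(String[] args) {
        String s = "facilitated variation";

        // Every String is also an Object, so it can be handled as one.
        Object o = s;
        System.out.println(parentClassOf(s));                   // java.lang.Object

        // Methods declared on Object are available on the String.
        System.out.println(o.equals("facilitated variation"));  // true
        System.out.println(o.hashCode() == s.hashCode());       // true

        // Methods that String adds on top of what it inherits.
        System.out.println(s.indexOf("variation"));             // 12
        System.out.println(s.substring(0, 11));                 // facilitated
    }
}
```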

The specifics of a String object can be found at:

Class String

https://docs.oracle.com/javase/7/docs/api/java/lang/String.html

Take a look at the Method Summary of the String class. It contains about 50 reusable code methods that can perform operations on the string of characters in a String object. Back in the unstructured prokaryotic times of the 1950s and 1960s, the source code to perform those operations was reprogrammed over and over because there was no easy way to reuse source code in those days. But in the multicellular object-oriented period, the use of extensive class libraries allowed programmers to easily assemble the "conserved core processes" that had been developed back in the unstructured prokaryotic source code of the 1950s and 1960s into complex software by simply instantiating objects and making calls to the methods of the objects that they needed. Thus, the new multicellular object-oriented source code that created and extended the classes found within the already existing class library took on more of a regulatory nature than the source code of old. This new multicellular object-oriented source code carried out its required logical operations by simply instantiating certain objects in the class library of reusable code, and then choosing which methods needed to be performed on those objects. Similarly, in the theory of facilitated variation, we find that most of the "conserved core processes" of carbon-based life come from pre-Cambrian times before complex multicellular organisms came to be. For example, the authors point out that 79% of the genes for a mouse come from pre-Cambrian times. So only 21% of the mouse genes, primarily those genes that regulate the expression of the other 79% of the genes from pre-Cambrian times, manage to build a mouse from a finite number of "conserved core processes".
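
To see what such regulatory source code looks like in practice, here is a small sketch that counts the words in a piece of text. Notice that it implements no string handling or sorting of its own. It only instantiates objects from the Java class library (String and TreeMap) and decides which of their methods to call and in what order. The class WordCountSketch and its countWords method are hypothetical names used for illustration.

```java
import java.util.Map;
import java.util.TreeMap;

class WordCountSketch {

    // Counts how often each word occurs, ignoring case.
    static Map<String, Integer> countWords(String text) {
        // TreeMap keeps its keys in sorted order without any sort code here.
        Map<String, Integer> counts = new TreeMap<>();

        // String supplies the lower-casing, trimming and splitting.
        for (String word : text.toLowerCase().trim().split("\\s+")) {
            if (word.isEmpty()) {
                continue; // blank input yields one empty token
            }
            Integer old = counts.get(word);
            counts.put(word, old == null ? 1 : old + 1);
        }
        return counts;
    }

    public static void main(String[] args) {
        System.out.println(countWords("the cell the object the class"));
        // {cell=1, class=1, object=1, the=3}
    }
}
```

All of the heavy lifting is done by library methods written long ago. The new code is little more than a set of switches that turns those methods on in the right sequence.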

Facilitated Variation is Not a Form of Intelligent Design

While preparing this posting, I noticed on the Internet that some see the theory of facilitated variation as a vindication of the concept of intelligent design, but that is hardly the case. The same misunderstanding can arise from the biological findings of softwarephysics. Some tend to dismiss the biological findings of softwarephysics because software is currently a product of the human mind, while biological life is not a product of intelligent design. Granted, biological life is not a product of intelligent design, but neither is the human mind. The human mind and biological life are both the result of natural processes at work over very long periods of time. This objection simply stems from the fact that we are all still, for the most part, self-deluded Cartesian dualists at heart, with seemingly a little “Me” running around within our heads that just happens to have the ability to write software and to do other challenging things. Thus, most human beings do not think of themselves as part of the natural world. Instead, they think of themselves, and others, as immaterial spirits temporarily haunting a body, and when that body dies the immaterial spirit lives on. In this view, human beings are not part of the natural world. Instead, they are part of the supernatural. But in softwarephysics, we maintain that the human mind is a product of natural processes in action, and so is the software that it produces. For more on that see The Ghost in the Machine the Grand Illusion of Consciousness.

Still, I realize that there might be some hesitation to pursue this line of thought because it might be construed by some as an advocacy of intelligent design, but that is hardly the case. The evolution of software over the past 76 years has essentially been a matter of Darwinian inheritance, innovation and natural selection converging upon similar solutions to that of biological life. For example, it took the IT community about 60 years of trial and error to finally stumble upon an architecture similar to that of complex multicellular life that we call SOA – Service Oriented Architecture. The IT community could have easily discovered SOA back in the 1960s if it had adopted a biological approach to software and intelligently designed software architecture to match that of the biosphere. Instead, the worldwide IT architecture we see today essentially evolved on its own because nobody really sat back and designed this very complex worldwide software architecture; it just sort of evolved on its own through small incremental changes brought on by many millions of independently acting programmers through a process of trial and error. When programmers write code, they always take some old existing code first and then modify it slightly by making a few changes. Then they add a few additional new lines of code and test the modified code to see how far they have come. Usually, the code does not work on the first attempt because of the second law of thermodynamics, so they then try to fix the code and try again. This happens over and over until the programmer finally has a good snippet of new code. Thus, new code comes into existence through the Darwinian mechanisms of inheritance coupled with innovation and natural selection - for more on that see How Software Evolves.

Some might object that this coding process of software is actually a form of intelligent design, but that is not the case. It is important to differentiate between intelligent selection and intelligent design. In softwarephysics we extend the concept of natural selection to include all selection processes that are not supernatural in nature, so for me, intelligent selection is just another form of natural selection. This is really nothing new. Predators and prey constantly make “intelligent” decisions about what to pursue and what to evade, even if those “intelligent” decisions are only made with the benefit of a few interconnected neurons or molecules. So in this view, the selection decisions that a programmer makes after each iteration of working on some new code really are a form of natural selection. After all, programmers are just DNA survival machines with minds infected with memes for writing software, and the selection processes that the human mind undergoes while writing software are just as natural as the Sun drying out worms on a sidewalk or a cheetah deciding upon which gazelle in a herd to pursue.

For example, when IT professionals slowly evolved our current $10 trillion worldwide IT architecture over the past 2.4 billion seconds, they certainly did not do so with the teleological intent of creating a simulation of the evolution of the biosphere. Instead, like most organisms in the biosphere, these IT professionals were simply trying to survive just one more day in the frantic world of corporate IT. It is hard to convey the daily mayhem and turmoil of corporate IT to outsiders. When I first hit the floor of Amoco’s IT department back in 1979, I was in total shock, but I quickly realized that all IT jobs essentially boiled down to simply pushing buttons. All you had to do was to push the right buttons, in the right sequence, at the right time, and with zero errors. How hard could that be? Well, it turned out to be very difficult indeed, and in response, I began to subconsciously work on softwarephysics to try to figure out why this job was so hard, and how I could dig myself out of the mess that I had gotten myself into. After a while, it dawned on me that the fundamental problem was the second law of thermodynamics operating in a nonlinear simulated universe. The second law made it very difficult to push the right buttons in the right sequence and at the right time because there were so many erroneous combinations of button pushes. Writing and maintaining software was like looking for a needle in a huge utility phase space. There were nearly an infinite number of ways of pushing the buttons “wrong”. The other problem was that we were working in a very nonlinear utility phase space, meaning that pushing just one button incorrectly usually brought everything crashing down. Next, I slowly began to think of pushing the correct buttons in the correct sequence as stringing together the correct atoms into the correct sequence to make molecules in chemical reactions that could do things. I also knew that living things were really great at doing that.
Living things apparently overcame the second law of thermodynamics by dumping entropy into heat as they built low entropy complex molecules from high entropy simple molecules and atoms. I then began to think of each line of code that I wrote as a step in a biochemical pathway. The variables were like organic molecules composed of characters or “atoms” and the operators were like chemical reactions between the molecules in the line of code. The logic in several lines of code was the same thing as the logic found in several steps of a biochemical pathway, and a complete function was the equivalent of a full-fledged biochemical pathway in itself. For more on that see Some Thoughts on the Origin of Softwarephysics and Its Application Beyond IT and SoftwareChemistry.

Conclusion

The key finding of softwarephysics is that it is all about self-replicating information:

Self-Replicating Information – Information that persists through time by making copies of itself or by enlisting the support of other things to ensure that copies of itself are made.

Basically, we have seen five waves of self-replicating information come to dominate the Earth over the past four billion years:

1. Self-replicating autocatalytic metabolic pathways of organic molecules

2. RNA

3. DNA

4. Memes

5. Software

Software is now rapidly becoming the dominant form of self-replicating information on the planet and is having a major impact on mankind as it comes to predominance. For more on that see: A Brief History of Self-Replicating Information.

So like carbon-based life and software, memes are also forms of self-replicating information that heavily use the concept of reusable code found in the theory of facilitated variation. Many memes are just composites of a number of "conserved core memes" that are patched together by some regulatory memes into something that appears to be unique and can stand on its own. Just watch any romantic comedy, or listen to any political stump speech. For example, about 90% of this posting on reusable code is simply reusable text that I pulled from other softwarephysics postings and patched together in a new sequence using a small amount of new regulatory text. It is just a facilitated variation on a theme, like Johannes Brahms' Variations on a Theme of Paganini (1863):

https://www.youtube.com/watch?v=1EIE78D0m1g&t=1s

or Rachmaninoff's Rhapsody on a Theme of Paganini (1934):

https://www.youtube.com/watch?v=HvKTPDg0IW0&t=1s

reusing Paganini's original Caprice no.24 (1817):

https://www.youtube.com/watch?v=PZ307sM0t-0&t=1s.

Comments are welcome at scj333@sbcglobal.net

To see all posts on softwarephysics in reverse order go to:

https://softwarephysics.blogspot.com/

Regards,

Steve Johnston
