About four years ago, I sensed that a dramatic environmental change was occurring in IT, so on March 9, 2016, I posted Cloud Computing and the Coming Software Mass Extinction in anticipation of another IT mass extinction that would likely make my current IT job go extinct again. At the time, I was 64 years old and working in the Middleware Operations group of Discover Financial Services, the credit card company, supporting all of their externally-facing websites and the internal applications used to run the business. Having successfully survived the Mainframe ⟶ Distributed Computing mass extinction of the early 1990s, I decided that it was a good time to depart IT rather than deal with the turmoil of another software mass extinction. So I retired from my very last IT position in December of 2016 at the age of 65. Now that about four years have gone by since my last posting on Cloud Computing, I thought that it might be a good time to take another look to see what has happened. In many of my previous posts on softwarephysics, I have noted that software has been evolving about 100 million times faster than carbon-based life on the Earth and also that software has been following a very similar path through Design Space as did carbon-based life while racing through this amazingly rapid evolution. Over the years, I have found that, roughly speaking, about one second of software evolution is equivalent to about two years of evolution for carbon-based life forms. So four years of software evolution comes to about 250 million years of evolution for carbon-based life. Carbon-based life has evolved quite a bit over the past 250 million years, so I expected to see some very dramatic changes in the way that IT is practiced today compared to the way it looked when I departed in 2016.
To do that, I took several overview online courses on Cloud Computing at Coursera to see just how much Cloud Computing had changed IT over the past 250 million years. Surprisingly, after taking these Cloud Computing courses, I found myself quite at home with the way that IT is practiced today! That is because modern IT has actually returned to the way that IT was practiced back in the 1980s when I first became an IT professional!
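The exchange rate quoted above is simple arithmetic. Here is a minimal Python sketch of it. It assumes a 365.25-day year and the rough rate of two years of carbon-based evolution per second of software evolution. The function name is purely illustrative.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # assumed Julian year, about 31.6 million seconds
LIFE_YEARS_PER_SOFTWARE_SECOND = 2.0       # the rough exchange rate quoted in the text

def software_to_life_years(software_years: float) -> float:
    """Convert years of software evolution into equivalent years of carbon-based evolution."""
    return software_years * SECONDS_PER_YEAR * LIFE_YEARS_PER_SOFTWARE_SECOND

for years in (4, 48):
    life = software_to_life_years(years)
    print(f"{years} years of software -> {life / 1e6:,.0f} million years of life")
```

The same function covers the 48 years of computing discussed later, which come to roughly three billion years.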

In this posting, I would like to examine the origin and evolution of Cloud Computing by retelling my own professional experiences working with computers over the past 48 years. That comes to about three billion years of evolution for carbon-based life! But before doing that, let's review how carbon-based life evolved on this planet, because that will help to highlight the many similarities between the evolution of carbon-based life and software. Carbon-based life probably first evolved on this planet about 4 billion years ago on land in warm hydrothermal pools; for details, see The Bootstrapping Algorithm of Carbon-Based Life. Carbon-based life then moved to the sea as the simple prokaryotic cells of bacteria and archaea. About two billion years later, complex eukaryotic cells arrived in a dramatic evolutionary step; for more on that, see The Rise of Complexity in Living Things and Software. The rise of multicellular organisms, consisting of millions or billions of eukaryotic cells all working together, came next in the Ediacaran about 635 million years ago. The Cambrian explosion then followed 541 million years ago with the rise of very complex carbon-based life that could move about in the water; for more on that, see the SoftwarePaleontology section of SoftwareBiology. The first plants appeared on land around 470 million years ago, during the Ordovician period. Animals then left the sea for the land during the Devonian period around 360 million years ago, as shown in Figure 1.

Figure 1 - Mobile carbon-based life first left the sea for the land during the Devonian period around 360 million years ago. Carbon-based life had finally returned to the land from which it first emerged 4 billion years ago.

Being mobile on land had the advantage of not having to compete with the entire biosphere for the communally shared oxygen dissolved in seawater. That can become a serious problem for mobile complex carbon-based life because it cannot make its own oxygen when the atmospheric level of oxygen declines. In fact, there is currently a school of thought that maintains that mobile carbon-based animal life left the sea for the land for that very reason. But being mobile on land does have its drawbacks too, especially when you find yourself amongst a diverse group of friends and neighbors all competing for the very same resources and eyeing you as a tasty course for their next family outing. Of course, the same challenges are also found when living in the sea, but while living in the sea, you never have to worry about dying of thirst, freezing to death, dying of heat exhaustion, burning up in a forest fire, getting stepped on, falling off of a mountain, drowning in a flash flood, getting hit by lightning, dying in an earthquake, drowning in a tsunami, getting impaled by a tree limb thrown by a tornado or drowning in a hurricane. So there might be some real advantages to be gained by mobile carbon-based life forms that chose to return to the sea and take all of the advances that were made on land along with them. And, indeed, that is exactly what happened for several types of land-based mammals. The classic example is the return of the whales to the sea. The whales took with them all of the advances that had been gained on land, like breathing air directly for oxygen, being warm-blooded, and giving birth to live young and nursing them; see Figure 2.

Figure 2 - Some mobile carbon-based life forms returned to the sea and took the advances that were made on land with them back to the sea.

Cloud Computing Software Followed a Very Similar Evolutionary Path Through Design Space

To begin our comparison of the origin and early evolution of Cloud Computing with the origin and evolution of carbon-based life, let's review the basics of Cloud Computing. The two key components of Cloud Computing are the virtualization of hardware and software and the pay-as-you-go timesharing of that virtual hardware and software. The aim of Cloud Computing is to turn computing into a public utility, like the electrical power grid, the public water supply, natural gas and the public sewer system found in a modern city. In a rural area, you would have to worry about your own electrical generator, water well and septic field. In a city, you get all of these necessities as services from public utilities and pay them monthly fees based on your consumption of resources.
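The pay-as-you-go idea can be sketched as a metered bill. Each resource has a meter and a unit price, and the monthly charge is just the sum of usage times price, much like an electric or water bill. The resource names and rates below are hypothetical and are not any real provider's price list.

```python
from dataclasses import dataclass

@dataclass
class Meter:
    resource: str
    units_used: float
    price_per_unit: float  # hypothetical rate, for illustration only

    def charge(self) -> float:
        return self.units_used * self.price_per_unit

def monthly_bill(meters: list[Meter]) -> float:
    """Pay-as-you-go: the bill is the sum of metered consumption times unit price."""
    return round(sum(m.charge() for m in meters), 2)

usage = [
    Meter("vCPU-hours", 720, 0.04),
    Meter("GB-months of storage", 500, 0.02),
    Meter("GB of network egress", 100, 0.09),
]
print(monthly_bill(usage))  # 47.8
```

There is no fixed cost in this model. A month with no usage produces a bill of zero, which is the essential difference from owning the equipment.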

Figure 3 – Cloud Computing returns us to the timesharing days of the 1960s and 1970s by viewing everything as a service.

For example, a business might use SaaS (Software as a Service), where the Cloud provider supplies all of the hardware and software necessary to perform an IT function, such as Gmail for email, Zoom for online meetings, and Microsoft 365 or Google Apps for documents and spreadsheets. Similarly, a Cloud provider can provide all of the hardware and infrastructure software in a PaaS (Platform as a Service) layer to run the proprietary applications developed by the IT department of a business. The proprietary business applications run on virtual machines or in virtual containers that allow for easy application maintenance. This is where the Middleware from pre-Cloud days now runs. It is the type of software that I supported for Discover back in 2016, and it was very much in danger of extinction. Finally, Cloud providers offer an IaaS (Infrastructure as a Service) layer consisting of virtual machines, virtual networks, and virtual data storage on virtual disk drives.
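One common way to picture the three service layers is as a single stack. The service model decides how far up the stack the Cloud provider takes responsibility. This Python sketch uses a simplified, assumed five-layer stack. Real providers draw these boundaries in more detail, for example around data, identity and networking.

```python
# A simplified stack, from the physical hardware up to the business application.
STACK = ["hardware", "virtualization", "operating system", "middleware/runtime", "application"]

# How many layers, counting from the bottom, the Cloud provider manages in each model.
PROVIDER_MANAGED_LAYERS = {"IaaS": 2, "PaaS": 4, "SaaS": 5}

def split_responsibility(model: str) -> tuple[list[str], list[str]]:
    """Return (layers run by the provider, layers run by the customer)."""
    n = PROVIDER_MANAGED_LAYERS[model]
    return STACK[:n], STACK[n:]

for model in ("IaaS", "PaaS", "SaaS"):
    provider, customer = split_responsibility(model)
    print(f"{model}: provider runs {provider}; customer runs {customer}")
```

In this picture, PaaS is where the provider takes over the middleware layer, leaving the customer responsible only for its own proprietary applications.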

Figure 4 – Prior to Cloud Computing, applications were run on a very complicated infrastructure of physical servers that made the installation and maintenance of software nearly impossible. The Distributed Computing Platform of the 1990s became unsustainable when many hundreds of physical servers were needed to run complex high-volume web-based applications.

As I outlined in The Limitations of Darwinian Systems, the pre-Cloud Distributed Computing Platform that I left in 2016 consisted of many hundreds of physical servers and was so complicated that we could barely maintain it. Clearly, the Distributed Computing Platform of the 1990s was no longer sustainable. Something had to change to allow IT to advance. That's when IT decided to return to the sea by heading for the Clouds. Now let's see how that happened.

Pay-As-You-Go Timesharing is Quite Old

Let's begin with the pay-as-you-go characteristic of Cloud Computing. This characteristic of Cloud Computing is actually quite old. For example, back in 1968, my high school ran a line to the Illinois Institute of Technology to connect a local card reader and printer to the Institute's mainframe computer. We had our own keypunch machine to punch up the cards for Fortran programs that we could then submit to the distant mainframe, which printed the results of our program runs back on our local printer. The Illinois Institute of Technology did the same for several other high schools in my area. So, essentially, the Illinois Institute of Technology became a very early Cloud provider. This allowed high school students in the area to gain some experience with computers even though their high schools clearly could not afford to maintain a mainframe infrastructure.

Figure 5 - An IBM 029 keypunch machine like the one installed in my high school in 1968.

Figure 6 - Each card could hold a maximum of 80 bytes. Normally, one line of code was punched onto each card.

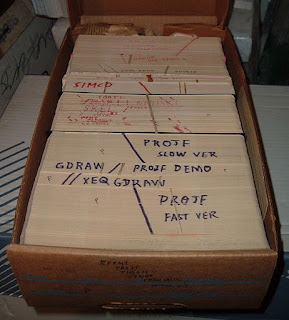

Figure 7 - The cards for a program were held together into a deck with a rubber band, or for very large programs, the deck was held in a special cardboard box that originally housed blank cards. Many times the data cards for a run followed the cards containing the source code for a program. The program was compiled and linked in two steps of the run and then the generated executable file processed the data cards that followed in the deck.

Figure 8 - To run a job, the cards in a deck were fed into a card reader, as shown on the left above, to be compiled, linked, and executed by a million-dollar mainframe computer back at the Illinois Institute of Technology. In the above figure, the mainframe is located directly behind the card reader.

Figure 9 - The output of Fortran programs run at the Illinois Institute of Technology was printed locally at my high school on a line printer.

The Slow Evolution of Hardware and Software Virtualization

Unfortunately, I did not take that early computer science class at my high school, but I did learn to write Fortran code at the University of Illinois at Urbana in 1972. At the time, I was also punching out Fortran programs on an old IBM 029 keypunch machine, and I soon discovered that writing code on an IBM 029 keypunch machine was even worse than writing out term papers on a manual typewriter! At least when you submitted a term paper with a few typos, your professor was usually kind enough not to abend your term paper right on the spot and give you a grade of zero. Sadly, I learned that Fortran compilers were not so forgiving. The first thing you did was to write out your code on a piece of paper as best you could back at the dorm. The back of a large stack of fanfold printer paper output was ideal for such purposes. In fact, as a physics major, I first got hooked by software while digging through the wastebaskets of DCL, the Digital Computing Lab, at the University of Illinois looking for fanfold listings of computer dumps that were about a foot thick. I had found that the backs of thick computer dumps were ideal for working on lengthy problems in my quantum mechanics classes.

It paid to do a lot of desk-checking of your code back at the dorm before heading out to the DCL. Once you got to the DCL, you had to wait your turn for the next available IBM 029 keypunch machine. This was very much like waiting for the next available washing machine on a crowded Saturday morning at a laundromat. When you finally got to your IBM 029 keypunch machine, you would load it up with a deck of blank punch cards and then start punching out your program. You would first press the feed button to have the machine pull the first card from the deck of blank cards and register it in the machine. Fortran compilers required code to begin in column 7 of the punch card, so the first thing you did was to press the spacebar 6 times to get to column 7 of the card. Then you would try to punch in the first line of your code. If you goofed and hit the wrong key by accident while punching the card, you had to eject the bad card and start all over again with a new card. Structured programming had not caught on yet, so nobody indented code at the time. Besides, trying to remember how many times to press the spacebar for each new card in a block of indented code was just not practical. Pressing the spacebar 6 times for each new card was hard enough! Also, we usually proofread our card decks by flipping through them before we submitted them. Trying to proofread indented code in a card deck would have been rather disorienting, so nobody even thought of indenting code. Punching up lots of comment cards was also a pain, so most people got by with a minimum of comment cards in their program deck.
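The column rules for punching Fortran can be sketched in a few lines of Python that lay out 80-column card images. The text above gives the column-7 rule. Beyond that, this sketch assumes the standard fixed-form layout:

- a statement label in columns 1-5
- a continuation mark in column 6
- the statement in columns 7-72
- an optional sequence number in columns 73-80
- a C in column 1 for a comment card

The helper names are hypothetical.

```python
def fortran_card(statement: str, label: str = "", continuation: bool = False,
                 sequence: str = "") -> str:
    """Lay out one fixed-form Fortran statement as an 80-column card image."""
    if len(label) > 5 or len(statement) > 66 or len(sequence) > 8:
        raise ValueError("field too long for its columns")
    return (label.rjust(5)                       # columns 1-5: statement label
            + ("1" if continuation else " ")     # column 6: continuation mark
            + statement.ljust(66)                # columns 7-72: the statement
            + sequence.rjust(8))                 # columns 73-80: sequence number

def comment_card(text: str) -> str:
    """A 'C' in column 1 turns the whole card into a comment."""
    return ("C" + text)[:80].ljust(80)

deck = [
    comment_card(" SUM THE SQUARES OF 1 THROUGH 10"),
    fortran_card("ISUM = 0", sequence="00000010"),
    fortran_card("DO 10 I = 1, 10", sequence="00000020"),
    fortran_card("ISUM = ISUM + I*I", label="10", sequence="00000030"),
    fortran_card("WRITE (6, 20) ISUM", sequence="00000040"),
    fortran_card("FORMAT (1X, I6)", label="20", sequence="00000050"),
    fortran_card("STOP", sequence="00000060"),
    fortran_card("END", sequence="00000070"),
]
for card in deck:
    print(card.rstrip())
```

Every statement starts in the same fixed column, six spacebar presses in from the left edge. The sequence numbers in columns 73-80 were commonly punched so that a dropped deck could be put back in order.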

After you punched up your program on a card deck, you would then punch up your data cards. Disk drives and tape drives did exist in those days, but disk drive storage was incredibly expensive and tapes were only used for huge amounts of data. If you had a huge amount of data, it made sense to put it on tape because, if you had several feet of data on cards, there was a good chance that the operator might drop your data card deck while feeding it into the card reader. But usually, you ended up with a card deck that held both the source code for your program and the cards for the data to be processed. You also punched up the JCL (Job Control Language) cards that instructed the IBM mainframe to compile, link and then run your program, all in one run. You then dropped your finalized card deck into the input bin so that the mainframe operator could load it into the card reader for the IBM mainframe. After a few hours, you would return to the output room of the DCL and go to the alphabetically sorted output bins that held all the jobs that had recently run. If you were lucky, you found your card deck and the fan-folded computer printout of your last run in your output bin. Unfortunately, you usually found that something had gone wrong with your job. Most likely, you had a typo in your code that had to be fixed. If it was nighttime and the mistake in your code was an obvious typo, you probably still had time for another run, so you would get back in line for an IBM 029 keypunch machine and start all over again. You could then hang around the DCL working on the latest round of problems in your quantum mechanics course. However, machine time was incredibly expensive in those days, and you had a very limited budget for machine charges. So if there was some kind of logical error in your code, you often had to head back to the dorm for some more desk-checking before giving it another shot the next day.
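The compile, link and run sequence that the JCL cards requested can be sketched as a pipeline of job steps. A bad return code from one step causes the later steps to be skipped. This is a loose Python model, not real JCL. It assumes the common convention that condition codes above 4 signal errors, while actual JCL COND handling is more flexible than this single threshold.

```python
from typing import Callable

# A job step is a name plus a callable that returns the step's condition code.
Step = tuple[str, Callable[[], int]]

def run_job(steps: list[Step], max_ok_code: int = 4) -> list[tuple[str, str]]:
    """Run steps in order; once a step ends with a condition code above
    max_ok_code, bypass every later step, loosely like a compile-link-go job."""
    log: list[tuple[str, str]] = []
    failed = False
    for name, step in steps:
        if failed:
            log.append((name, "BYPASSED"))
            continue
        code = step()
        log.append((name, f"RC={code}"))
        failed = code > max_ok_code
    return log

# A deck with a typo: the compile step fails, so the link and go steps never run.
typo_deck = [("COMPILE", lambda: 8), ("LINK", lambda: 0), ("GO", lambda: 0)]
for name, status in run_job(typo_deck):
    print(name, status)
```

A single typo therefore costs a whole run: the printout shows only the compiler's complaints, and the program itself never executes.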

My First Experiences with Interactive Computing

I finished up my B.S. in Physics at the University of Illinois at Urbana in 1973 and headed up north to complete an M.S. in Geophysics at the University of Wisconsin at Madison. I was working with a team of graduate students who were collecting electromagnetic data in the field on a DEC PDP-8/e minicomputer. The machine cost about $30,000 in 1973 (about $176,000 in 2020 dollars) and was about the size of a large side-by-side refrigerator. The machine had 32 KB of magnetic core memory, about 2 million times less memory than a modern 64 GB smartphone. This was my first experience with interactive computing. Previously, I had only written Fortran programs for batch processing on IBM mainframes. I wrote BASIC programs on the DEC PDP-8/e minicomputer using a teletype machine and a built-in line editor. The teletype machine was also used to print out program runs. My programs were saved to tape, and I could read and write data on tape as well. The neat thing about the DEC PDP-8/e minicomputer was that there were no computer charges and I did not have to wait hours to see the output of a run. None of the department professors knew how to program computers, and only about four graduate students did, so there was plenty of free machine time. I also learned the time-saving trick of interactive programming. Originally, I would write a BASIC program, hard-code the data values for a run directly in the code and then run the program as a batch job. After the run, I would edit the code to change the hard-coded data values and then run the program again. Then one of my fellow graduate students showed me how to add a very primitive interactive user interface to my BASIC programs. Instead of hard-coding data values, my BASIC code would now prompt me for values that I could enter on the fly on the teletype machine. This allowed me to create a library of "canned" BASIC programs on tape that I never had to change. I could just run my "canned" programs with new input data as needed.
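The difference between hard-coding data values and prompting for them can be sketched in Python. In BASIC, the prompt would have come from an INPUT statement. The modeling calculation here is a hypothetical stand-in: the conductance of a layer, which is its conductivity times its thickness. The `ask` parameter is only there so the canned program can be driven by scripted answers instead of a teletype.

```python
from typing import Callable

def model_response(conductivity: float, thickness: float) -> float:
    """Hypothetical stand-in for a modeling calculation: layer conductance in siemens."""
    return conductivity * thickness

# Old way: the data values live in the code, so every new run means editing the program.
def hard_coded_run() -> float:
    conductivity = 0.01   # S/m
    thickness = 2000.0    # m
    return model_response(conductivity, thickness)

# "Canned" way: the program never changes; it asks for its inputs at run time.
def canned_run(ask: Callable[[str], str] = input) -> float:
    conductivity = float(ask("CONDUCTIVITY (S/M)? "))
    thickness = float(ask("THICKNESS (M)? "))
    return model_response(conductivity, thickness)

# Drive the canned program with scripted answers, as if typed at the teletype.
answers = iter(["0.01", "2000"])
print(canned_run(lambda prompt: next(answers)))
```

The canned version can be stored once and rerun with any new data, which is exactly what made a library of unchanging programs possible.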

We actually hauled this machine through the dirt-road lumber trails of the Chequamegon National Forest in Wisconsin and powered it with an old diesel generator to digitally record electromagnetic data in the field. For my thesis, I worked with a group of graduate students who were shooting electromagnetic waves into the ground to model the conductivity structure of the Earth’s upper crust. We were using the Wisconsin Test Facility (WTF) of Project Sanguine to send very low-frequency electromagnetic waves, with a bandwidth of about 1 – 20 Hz into the ground, and then we measured the reflected electromagnetic waves in cow pastures up to 60 miles away. All this information has been declassified and is available on the Internet, so any retired KGB agents can stop taking notes now and take a look at:

http://www.fas.org/nuke/guide/usa/c3i/fs_clam_lake_elf2003.pdf

Project Sanguine built an ELF (Extremely Low Frequency) transmitter in northern Wisconsin and another transmitter in northern Michigan in the 1970s and 1980s. The purpose of these ELF transmitters was to send messages to our nuclear submarine force at a frequency of 76 Hz. These very low-frequency electromagnetic waves can penetrate the highly conductive seawater of the oceans to a depth of several hundred feet, allowing the submarines to remain at depth rather than coming close to the surface for radio communications. You see, normal radio waves in the Very Low Frequency (VLF) band, at frequencies of about 20,000 Hz, only penetrate seawater to a depth of 10 – 20 feet. This ELF communications system became fully operational on October 1, 1989, when the two transmitter sites began synchronized transmissions of ELF broadcasts to our submarine fleet.
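The difference in penetration follows from the electromagnetic skin depth of a good conductor, δ = sqrt(2 / (ω μ σ)) with ω = 2πf. The skin depth is the depth over which a wave's amplitude falls by a factor of e. Because δ scales as 1/sqrt(f), dropping from about 20,000 Hz to 76 Hz increases it by sqrt(20000/76), or about 16 times. Here is a minimal sketch. It assumes a typical seawater conductivity of 4 S/m and treats seawater as non-magnetic.

```python
import math

MU_0 = 4e-7 * math.pi    # permeability of free space (H/m); seawater is treated as non-magnetic
SIGMA_SEAWATER = 4.0     # S/m, a typical value assumed for illustration

def skin_depth_m(frequency_hz: float, sigma: float = SIGMA_SEAWATER) -> float:
    """Depth at which a plane wave's amplitude falls to 1/e in a good conductor."""
    omega = 2 * math.pi * frequency_hz
    return math.sqrt(2 / (omega * MU_0 * sigma))

for f in (76, 20_000):
    d = skin_depth_m(f)
    print(f"{f:>6} Hz: skin depth {d:5.1f} m ({d * 3.281:5.1f} ft)")
```

With these assumptions, the skin depth is roughly 29 m (about 95 ft) at 76 Hz and about 1.8 m (about 6 ft) at 20,000 Hz. A usable signal survives for roughly a few skin depths, which is broadly consistent with the several hundred feet and 10 – 20 feet quoted above.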

I did all of my preliminary modeling work in BASIC on the DEC PDP-8/e without a hitch while the machine was sitting peacefully in an air-conditioned lab, so I did not have to worry about the underlying hardware at all. For me, the machine was just a big black box that processed my software as directed. However, when we dragged this poor machine through the bumpy lumber trails of the Chequamegon National Forest, all sorts of "software" problems arose that were really due to the hardware. For example, we learned that each time we stopped and made camp for the day, we had to reseat all of the circuit boards in the DEC PDP-8/e. We also learned that the transistors in the machine did not like it when the air temperature in our recording truck rose above 90 °F, because we started getting parity errors. We also found that we had to let the old diesel generator warm up a bit before we turned on the DEC PDP-8/e to give the generator enough time to settle down into a nice, more-or-less stable, 60 Hz alternating voltage.
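A parity error means that the hardware read back a word whose count of 1 bits no longer matched the extra parity bit stored with it. Here is a minimal Python sketch of the check. It assumes 12-bit words, the PDP-8 word size, and odd parity, which is an assumption made for illustration. The PDP-8/e's actual parity hardware is not modeled.

```python
def parity_bit(word: int, bits: int = 12, odd: bool = True) -> int:
    """Extra bit stored with a word so that its total count of 1 bits is odd (or even)."""
    ones = bin(word & ((1 << bits) - 1)).count("1")
    if odd:
        return 0 if ones % 2 == 1 else 1
    return ones % 2

def parity_ok(word: int, stored_bit: int, bits: int = 12, odd: bool = True) -> bool:
    """Recompute the parity when the word is read back and compare it with the stored bit."""
    return parity_bit(word, bits, odd) == stored_bit

word = 0o1234                             # a 12-bit word, written in octal as PDP-8 programmers did
stored = parity_bit(word)                 # computed and stored when the word is written
print(parity_ok(word, stored))            # True: the word was read back intact
print(parity_ok(word ^ 0o0001, stored))   # False: a single flipped bit is caught
print(parity_ok(word ^ 0o0003, stored))   # True: two flipped bits slip through
```

A single parity bit can detect, but not correct, any single-bit error, which is why an overheated machine reported errors rather than silently fixing them.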

Figure 10 – Some graduate students huddled around a DEC PDP-8/e minicomputer. Notice the teletype machines in the foreground on the left that were used to input code and data into the machine and to print out results as well.

Back to Batch Programming on IBM Mainframes

Then from 1975 – 1979, I was an exploration geophysicist exploring for oil, first with Shell and then with Amoco. I kept coding Fortran the whole time on IBM mainframes. In 1979, I made a career change into IT when I became an IT professional in Amoco's IT department. Structured programming had arrived by then, so we were now indenting code and adding comment statements to the code, but we were still programming on cards. We were now using IBM 129 keypunch machines that were a little more sophisticated than the old IBM 029 keypunch machines. However, the coding process was still very much the same. I worked on code at my desk and still spent a lot of time desk-checking the code. When I was ready for my next run, I would take an elevator down to the basement of the Amoco Building where the IBM mainframes were located. Then I would punch my cards on one of the many IBM 129 keypunch machines, but this time with no waiting for a machine. After I submitted my deck, I would travel up 30 floors to my cubicle to work on something else. After a couple of hours, I would head down to the basement again to collect my job. On a good day, I could manage to get 4 runs in. But machine time was still incredibly expensive. If I had a $100,000 project, $25,000 went for programming time, $25,000 went to IT overhead like integration management and data management services, and a full $50,000 went to machine costs for compiles and test runs!

This may all sound very inefficient and tedious today, but it could be even worse. I used to talk to the old-timers about the good old days of IT. They told me that when the operators began their shift on an old-time 1950s vacuum tube computer, the first thing they did was to crank up the voltage on the vacuum tubes to burn out the tubes that were on their last legs. Then they would replace the burned-out tubes to start the day with a fresh machine.

Figure 11 – In the 1950s, the electrical relays of the very ancient computers were replaced with vacuum tubes that were also very large, used lots of electricity and generated lots of waste heat too, but the vacuum tubes were 100,000 times faster than relays.

They also explained that the machines were so slow that they spent all day processing production jobs. Emergency maintenance work to fix production bugs was allowed at night, but new development was limited to one compile and test run per week! They also told me about programming the plugboards of electromechanical Unit Record Processing machines back in the 1950s by physically rewiring the plugboards. The Unit Record Processing machines would then process hundreds of punch cards per minute by routing the punch cards from machine to machine in processing streams.

Figure 12 – In the 1950s Unit Record Processing machines like this card sorter were programmed by physically rewiring a plugboard.

Figure 13 – The plugboard for a Unit Record Processing machine.

The House of Cards Finally Falls

But all of this was soon to change. In the early 1980s, the IT department of Amoco switched to using dumb IBM 3278 terminals to run TSO on IBM MVS mainframes. Note that the IBM MVS operating system for mainframes is now called z/OS. We now used a full-screen editor called ISPF, running under TSO on the IBM 3278 terminals, to write code and submit jobs, and our development jobs usually ran in less than an hour. The source code for our software was now on disk in partitioned datasets for easy access and updating. The data had moved to tapes, and it was the physical process of mounting and dismounting tapes that now slowed down testing. For more on tape processing, see An IT Perspective on the Origin of Chromatin, Chromosomes and Cancer. Now I could run maybe 10 jobs in one day to test my code! However, machine costs were still incredibly high and still accounted for about 50% of project costs, so we still had to do a lot of desk-checking to save on machine costs. At first, the IBM 3278 terminals appeared on the IT floor in "tube rows" like the IBM 029 keypunch machines of yore. But after a few years, each IT professional was given their own IBM 3278 terminal on their own desk. Finally, there was no more waiting in line for an input device!

Figure 14 - The IBM ISPF full-screen editor ran on IBM 3278 terminals connected to IBM mainframes in the late 1970s. ISPF was also a screen-based interface to TSO (Time Sharing Option) that allowed programmers to do things like copy files and submit batch jobs. ISPF and TSO running on IBM mainframes allowed programmers to easily reuse source code by doing copy/paste operations with the screen editor from one source code file to another. By the way, ISPF and TSO are still used today on IBM mainframe computers to support writing and maintaining software.

I found that using software to write and maintain software through ISPF dramatically improved software development and maintenance productivity. It was like moving from typing term papers on manual typewriters to writing term papers on word processors. It probably improved productivity by a factor of 10 or more. In the early 1980s, I was very impressed by this dramatic increase in productivity that was brought on by using software to write and maintain software.

But I was still writing batch applications for my end-users that ran on a scheduled basis like once each day, week or month. My end-users would then pick up thick line-printer reports that were delivered from the basement printers to local output bins that were located on each floor of the building. They would then put the thick line-printer reports into special binders that were designed for thick line-printer reports. The reports were then stored in large locked filing cabinets that lined all of the halls of each floor. When an end-user needed some business information they would locate the appropriate report in one of the locked filing cabinets and leaf through the sorted report.

Figure 15 - Daily, weekly and monthly batch jobs were run on a scheduled basis to create thick line-printer reports that were then placed into special binders and stored in locked filing cabinets that lined all of the hallway walls.

This was clearly not the best way to use computers to support business processes. My end-users really needed interactive applications like I had on my DEC PDP-8/e minicomputer. But the personal computers of the day, like the Apple II, were only being used by hobbyists at the time and did not have enough power to process massive amounts of corporate data either. Buying everybody a very expensive minicomputer would certainly not do!

Virtual Machines Arrive at Amoco with IBM VM/CMS in the 1970s

Back in the late 1970s, the computing research group of the Amoco Tulsa Research Center was busily working with the IBM VM/CMS operating system. VM was actually a very early form of Cloud hypervisor software that ran on IBM mainframe computers. Under VM, a large number of virtual machines could be generated that ran different operating systems. IBM had created VM in the 1970s so that they could work on several versions of a mainframe operating system at the same time on one computer. The CMS (Conversational Monitor System) operating system was a command-based operating system, similar to Unix, that ran on VM virtual machines. It was found to be so useful that IBM began selling VM/CMS to customers as an interactive computing environment. In fact, IBM still sells these products today as CMS running under z/VM. During the 1970s, the Amoco Tulsa Research Center began using VM/CMS as a platform for an office automation research program. The purpose of that research program was to explore creative ways of using computers to conduct business interactively. They developed an office automation product called PROFS (Professional Office System) that was the killer app for VM/CMS. PROFS had email, calendaring, and document creation and sharing capabilities that were hugely popular with end-users. In fact, many years later, IBM sold PROFS as a product called OfficeVision. The Tulsa Research Center decided to use IBM DCF-Script for word processing on virtual machines running VM/CMS. The computing research group of the Amoco Tulsa Research Center also developed many other useful office automation products for VM/CMS. VM/CMS was then rolled out to the entire Amoco Research Center to conduct its business as a beta site for the VM/CMS office automation project.

Meanwhile, some of the other Amoco business units began to use VM/CMS on GE Timeshare for interactive programming purposes. I had also used VM/CMS on GE Timeshare while working for Shell in Houston as a geophysicist. GE Timeshare was running VM/CMS on IBM mainframes at GE datacenters. External users could connect to the GE Timeshare mainframes using a 300 baud acoustic coupler with a standard telephone. You would first dial GE Timeshare with your phone. When you heard the strange "BOING - BOING - SHHHHH" sounds from the mainframe modem, you would jam the telephone receiver into the acoustic coupler so that you could log in to the mainframe from a "glass teletype" dumb terminal.
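To get a feel for 300 baud: on these modems, each symbol carried one bit, so 300 baud meant about 300 bits per second. This quick Python calculation rests on two assumptions. It uses 10 bits per character, for example a start bit, 8 data bits and a stop bit. It also assumes a 24-line by 80-column screen. The result shows how long a full screen of text took to arrive.

```python
BITS_PER_CHARACTER = 10  # assumed framing: 1 start bit + 8 data bits + 1 stop bit

def transfer_seconds(characters: int, baud: int = 300) -> float:
    """Time to send text over a serial line where each symbol carries one bit."""
    return characters * BITS_PER_CHARACTER / baud

screen = 24 * 80                 # an assumed 24-line, 80-column screen of text
print(transfer_seconds(screen))  # 64.0
```

That works out to about 30 characters per second, so a full screen of text took about a minute to arrive.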

Figure 16 – To timeshare, we connected to GE Timeshare over a 300 baud acoustic coupler modem.

Figure 17 – Then we did interactive computing on VM/CMS using a dumb terminal.

Amoco Builds an Internal Cloud in the 1980s with an IBM VM/CMS Hypervisor Running on IBM Mainframes

But timesharing largely went out of style in the 1980s when many organizations began to create their own datacenters and ran their own mainframes within them. They did so because with timesharing you paid the timesharing provider by the CPU-second and by the byte for disk space, and that became expensive as timesharing consumption rose. Timesharing also limited flexibility because businesses were bound by the constraints of their timesharing providers. So in the early 1980s, Amoco decided to create its own private Cloud with 31 VM/CMS nodes running in a large number of datacenters around the world. All of these VM/CMS nodes were connected into a complex network via SNA. This network of 31 VM/CMS nodes was called the Corporate Timeshare System (CTS) and supported about 40,000 virtual machines, one for each employee.
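The economics behind leaving timesharing can be sketched as a break-even calculation. Owning a datacenter has a large fixed monthly cost but a lower cost per CPU-hour, while timesharing charges only for use, at a higher rate. All figures below are hypothetical and purely illustrative.

```python
import math

def breakeven_cpu_hours(own_fixed_monthly: float, own_cost_per_hour: float,
                        timeshare_price_per_hour: float) -> float:
    """Monthly CPU-hours above which owning the machine is cheaper than timesharing.

    Owning costs own_fixed_monthly + own_cost_per_hour * h per month, while
    timesharing costs timeshare_price_per_hour * h, so the two are equal at
    h = own_fixed_monthly / (timeshare_price_per_hour - own_cost_per_hour).
    """
    margin = timeshare_price_per_hour - own_cost_per_hour
    if margin <= 0:
        return math.inf  # timesharing is never dearer per hour, so owning never pays off
    return own_fixed_monthly / margin

# Hypothetical figures: $50,000/month to own a datacenter and $100/CPU-hour to run it,
# versus $600/CPU-hour charged by a timesharing provider.
print(breakeven_cpu_hours(50_000, 100, 600))  # 100.0
```

Below the break-even point, pay-as-you-go timesharing is cheaper. Above it, owning wins, which is the situation many organizations found themselves in as their consumption grew.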

When new employees arrived at Amoco, they were given their own VM/CMS virtual machine with 1 MB of memory and some virtual disk on their local CTS VM/CMS node. IT employees were given larger machines with 3 MB of memory and more disk. That might not sound like much, but recall that million-dollar mainframe computers in the 1970s only had about 1 MB of memory too. Similarly, I got my first PC at work in 1986. It was an IBM PC/AT with a 6 MHz Intel 80-286 processor and a 20 MB hard disk, with a total of 640 KB of memory. It cost about $1600 at the time - about $3,700 in 2020 dollars! So I had plenty of virtual memory and virtual disk space on my VM/CMS virtual machine to develop the text-based green-screen software of the day. My personal virtual machine was ZSCJ03 on the CTSVMD node in the Chicago Data Center. Most VM/CMS nodes had about 1,500 virtual machines running. For example, the Chicago Data Center at Amoco's corporate headquarters had VM/CMS nodes CTSVMA, CTSVMB, CTSVMC and CTSVMD to handle the 6,000 employees at headquarters. Your virtual disk was called your A-disk and your virtual machine had read and write access to the files on your A-disk. When I logged into my ZSCJ03 virtual machine there was a PROFILE EXEC A file that ran to connect me to all of the resources I needed on the CTSVMD node. The corporate utilities, like PROFS, were all stored on a shared Z-disk for all the virtual machines on CTSVMD. For example, there was a corporate utility called SHARE that would give me read access to the A-disk of any other virtual machine on CTSVMD. So if I typed in SHARE ZRJL01, my ZSCJ03 virtual machine would then have read access to the A-disk of machine ZRJL01 by running the corporate utility SHARE EXEC Z. My ZSCJ03 virtual machine also had a virtual card punch and a virtual card reader. This allowed me to PUNCH files to any of the other virtual machines on the 31 VM/CMS nodes. 
The PUNCHED files would then end up in the virtual card READER of the virtual machine that I sent them to, where its owner could read them in. Amoco provided a huge amount of Amoco-developed corporate software on the Z-disk to enable office automation and to make it easier for IT Applications Development to create new applications using the Amoco-developed corporate software as an application platform. For example, there was a SENDFILE EXEC Z that made it very easy to PUNCH files and a RDRLIST EXEC Z program to help manage the files in your READER. There was also a SUBMIT EXEC Z that allowed us to submit JCL streams to the IBM MVS mainframe datacenters in Chicago and Tulsa. We could also use TDISKGET EXEC Z to temporarily allocate additional virtual disk space for our virtual machines. The TLS EXEC Z software was a Tape Library System that allowed us to attach a virtual tape drive to our virtual machines. We could then dump all of the files on our virtual machine for backup purposes or write large amounts of data to tape. Essentially, Amoco had a SaaS layer running on our Z-disks and PaaS and IaaS layers running on the 31 VM/CMS nodes of the Corporate Timeshare System. Individual business units were charged monthly for their virtual machines, virtual machine processing time, virtual disk space, virtual network usage and virtual tapes.
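A monthly chargeback like this amounts to metering each resource and multiplying it by a rate. What follows is a minimal Python sketch of that arithmetic. The rates, the Usage record and the business unit names are all hypothetical, because the text does not say what Amoco actually charged or how its billing software worked.

```python
from dataclasses import dataclass

# Hypothetical monthly rates; the text does not say what Amoco actually charged.
RATES = {
    "vm": 25.00,          # per virtual machine per month
    "cpu_second": 0.05,   # per second of virtual machine processing time
    "disk_mb": 0.10,      # per MB of virtual disk per month
    "network_kb": 0.001,  # per KB of virtual network traffic
    "tape": 5.00,         # per virtual tape per month
}


@dataclass
class Usage:
    """One owner's metered usage for a month (hypothetical record layout)."""
    business_unit: str
    vms: int
    cpu_seconds: float
    disk_mb: float
    network_kb: float
    tapes: int


def monthly_charge(u, rates=RATES):
    """Multiply each metered resource by its rate and sum, rounded to cents."""
    return round(
        u.vms * rates["vm"]
        + u.cpu_seconds * rates["cpu_second"]
        + u.disk_mb * rates["disk_mb"]
        + u.network_kb * rates["network_kb"]
        + u.tapes * rates["tape"],
        2,
    )


def monthly_bills(usages, rates=RATES):
    """Roll individual usage records up into one monthly bill per business unit."""
    bills = {}
    for u in usages:
        bills[u.business_unit] = round(bills.get(u.business_unit, 0.0) + monthly_charge(u, rates), 2)
    return bills
```

For example, a hypothetical "Exploration" unit with two records, one for two VMs, 1,200 CPU-seconds, 20 MB of disk, 500 KB of traffic and one tape, and one for a single VM with 300 CPU-seconds and 5 MB of disk, would be billed $117.50 + $40.50 = $158.00 under these made-up rates.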

When I was about to install a new application, I would simply call the HelpDesk to have them create one or more service machines for the new application. This usually took less than an hour. For example, the most complicated VM/CMS application that I worked on was called ASNAP - the Amoco Self-Nomination Application. ASNAP allowed managers and supervisors to post job openings within Amoco. All Amoco employees could then look at the job openings around the world and post applications for the jobs. Job interviews could then be scheduled in their PROFS calendars. It was the most popular piece of software that I ever worked on because all of Amoco's 40,000 employees loved being able to find out about other jobs at Amoco and being able to apply for them. Amoco's CEO once even made a joke about using ASNAP to find another job at Amoco during a corporate town hall meeting.

Each of the 31 VM/CMS nodes had an ASNAP service machine running on it. To run the ASNAP software, end-users simply did a SHARE ASNAP and then entered ASNAP to start it up. The end-users would then have access to the ASNAP software on the ASNAP service machine and could create a job posting or job application on their own personal virtual machine. The job posting or job application files were then sent to the "hot reader" of the ASNAP service machine on their VM/CMS node. When files were sent to the "hot reader" of an ASNAP service machine, they were immediately read in and processed. The ASNAP job postings and applications were kept locally in flat files on each of the 31 ASNAP service machines. Whenever an ASNAP data file changed on one ASNAP machine, it was sent to the other 30 ASNAP machines via a SENDFILE, and each receiving machine read it in from its own "hot reader" for processing. Every day, a synchronizing job was sent from the Master ASNAP machine running on CTSVMD to the "hot readers" of the other 30 ASNAP machines. Each ASNAP machine then sent a report file back to the "hot reader" on the Master ASNAP machine on CTSVMD. The synchronization reports were then read in and processed for exceptions, and any missing or old files were sent back to the ASNAP machine that was in trouble. We used the same trick to perform maintenance on ASNAP. To push out a new release of ASNAP, we just ran a job that did a SENDFILE of all the updated software files to the 31 VM/CMS nodes running ASNAP, and the ASNAP virtual machines would read in the updated files and install them. Securing ASNAP data was a little tricky, but we were able to ensure that only the person who posted a job could modify or delete the job posting, and that this person was the only one who could see the applications for the job. Similarly, an end-user could only see, edit or withdraw their own job applications.
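The "hot reader" replication and the daily synchronization job can be sketched in a few lines of Python. This is a simplified model, not the original REXX code. Each node's virtual card reader is modeled as a queue that is drained as soon as a file lands in it. Files carry a hypothetical integer version number. The daily report is a direct function call rather than a file SENDFILEd back to the Master ASNAP machine on CTSVMD. AsnapNode, sendfile_to_all, daily_sync and the node and file names are all illustrative.

```python
from collections import deque


class AsnapNode:
    """One hypothetical ASNAP service machine with flat files and a hot reader."""

    def __init__(self, name):
        self.name = name
        self.files = {}        # filename -> (version, content)
        self.reader = deque()  # the virtual card reader spool

    def receive(self, filename, version, content):
        # A "hot reader" reads in and processes each file as soon as it arrives.
        self.reader.append((filename, version, content))
        while self.reader:
            name, ver, data = self.reader.popleft()
            current = self.files.get(name)
            if current is None or ver > current[0]:
                self.files[name] = (ver, data)

    def report(self):
        # Daily sync report: which files this node holds, and at which version.
        return {name: ver for name, (ver, _) in self.files.items()}


def sendfile_to_all(origin, nodes, filename, version, content):
    """Apply a change locally, then SENDFILE it to every other node's hot reader."""
    origin.receive(filename, version, content)
    for node in nodes:
        if node is not origin:
            node.receive(filename, version, content)


def daily_sync(master, nodes):
    """Master compares each node's report with its own files and resends missing or old ones."""
    repaired = []
    for node in nodes:
        if node is master:
            continue
        report = node.report()
        for filename, (version, content) in master.files.items():
            if report.get(filename, -1) < version:
                node.receive(filename, version, content)
                repaired.append((node.name, filename))
    return repaired
```

If a SENDFILE to one node is lost, the next daily_sync call finds the gap in that node's report and resends only the missing file. The same sendfile_to_all call would also serve to push a new release of the software files themselves.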

The Ease of Working in the Amoco Virtual Cloud Encouraged the Rise of Agile Programming and the Cloud Concept of DevOps

Because writing and maintaining software for the VM/CMS Cloud at Amoco during the 1980s was so much easier and simpler than doing the same thing on the Amoco IBM MVS datacenters in Chicago and Tulsa, Amoco Applications Development began to split into an MVS shop and a VM/CMS shop. The "real" programmers were writing heavy-duty batch applications or online COBOL/CICS/DB2 applications on the IBM mainframe MVS datacenters with huge budgets and development teams. Because these were all large projects, they used the Waterfall development model of the 1980s. With the Waterfall development model, Systems Analysts would first create a thick User Requirements document for a new project. After approval by the funding business unit, the User Requirements document was returned and the Systems Analysts then created Detail Design Specifications. The Detail Design Specifications were then turned over to a large team of Programmers to code up the necessary COBOL programs and CICS screens. After unit and integration testing on a Development LPAR on the mainframes and in a Development CICS region, the Programmers would turn the updated code over to the MVS Implementation group. The MVS Implementation group would then schedule the change and perform the installation. MVS Operations would then take over the running of the newly installed software. Please see Agile vs. Waterfall Programming and the Value of Having a Theoretical Framework for more on the Waterfall development model.

But since each of the 40,000 virtual machines running on the Corporate Timeshare System was totally independent and could not cause problems for any of the other virtual machines, we did not have such a formal process for VM/CMS. For VM/CMS applications, Programmers simply copied new or updated software over to the service machines for the application. For ASNAP, we even automated the process because ASNAP was installed on 31 VM/CMS nodes. All we had to do was SENDFILE the updated ASNAP software files from the ASNAPDEV virtual machine on CTSVMD to the "hot readers" of the ASNAP service machines, and they would read in the updated software files and install them. Because developing and running applications on VM/CMS was so easy, Amoco developed the "whole person" concept for VM/CMS Programmers, which is very similar to the concept of Agile programming and DevOps in Cloud Computing. With the "whole person" concept, VM/CMS Programmers were expected to do the whole thing. VM/CMS Programmers would sit with end-users to try to figure out what they needed without the aid of a Systems Analyst. The VM/CMS Programmers would then code up a prototype application in an Agile manner on a Development virtual machine for the end-users to try out. When the end-users were happy with the results, we would simply copy the software from a Development virtual service machine to a Production virtual service machine. Because the virtual hardware of the VM/CMS CTS Cloud was so stable, whenever a problem did occur, it meant that there must be something wrong with the application software. Thus, Applications Development programmers on VM/CMS were also responsible for operating applications. In a dozen years of working on the Amoco VM/CMS Cloud, I never once had to work with the VM/CMS Operations group regarding an outage. The only time I ever worked with VM/CMS Operations was when they were performing an upgrade to the VM/CMS operating system on the VM/CMS Cloud.

The Agile programming model and DevOps just naturally grew from the ease of programming and supporting software in a virtual environment. It was the easygoing style of Agile programming and DevOps on the virtual hardware of the Amoco Corporate Timeshare System that ultimately led me to conclude that IT needed to stop "writing" software. Instead, IT needed to "grow" software from an "embryo" in an Agile manner with end-users. I then began the development of BSDE, the Bionic Systems Development Environment, on VM/CMS. For more on that, please see Agile vs. Waterfall Programming and the Value of Having a Theoretical Framework.

The Amoco VM/CMS Cloud Evaporates With the Arrival of the Distributed Computing Revolution of the Early 1990s

All during the 1980s, PCs kept getting cheaper and more powerful, and commercial PC software improved along with the PC hardware. The end-users were using WordPerfect for word processing and VisiCalc for spreadsheets running under Microsoft DOS on cheap PC-compatible personal computers. The Apple Mac arrived in 1984 with a GUI (Graphical User Interface) operating system, but Apple Macs were way too expensive, so all the Amoco business units continued to run Microsoft DOS on cheap PC-compatible machines. All of this PC activity was done outside of the control of the Amoco IT Department. In most corporations, end-users treat the IT Department with disdain, and being able to run PCs with commercial software gave the end-users a feeling of independence. But like IBM, Amoco's IT Department did not see the PCs as much of a risk. To understand why PCs were not at first seen as a threat, take a look at this YouTube video that describes the original IBM PC that appeared in 1981:

The Original IBM PC 5150 - the story of the world's most influential computer

https://www.youtube.com/watch?v=0PceJO3CAGI

and this YouTube video that shows somebody installing and using Windows 1.04 on a 1985 IBM PC/XT clone:

Windows1 (1985) PC XT Hercules

https://www.youtube.com/watch?v=xnudvJbAgI0

But then the end-user business units began to hook their cheap PCs up into LANs (Local Area Networks) that allowed end-users to share files and printers. That really scared Amoco's IT Department, so in 1986 all members of the IT Department were given PCs to replace their IBM 3278 terminals. But Amoco's IT Department mainly just used these PCs to run IBM 3278 emulation software to continue to connect to the IBM mainframes running MVS and VM/CMS. We did start to use WordPerfect and LAN printers for user requirements and design documents, but not much else.

Then Microsoft released Windows 3.0 in 1990. Suddenly, we were able to run a GUI desktop on top of Microsoft DOS on the same cheap PCs that had been running Microsoft DOS applications! To end-users, Windows 3.0 looked just like the expensive Macs that they were not supposed to use. This greatly expanded the number of Amoco end-users with cheap PCs running Windows 3.0. To further complicate the situation, some die-hard Apple II end-users bought expensive Apple Macs too! And because the Amoco IT Department had been running IBM mainframes for many decades, some Amoco IT end-users started running the IBM OS/2 GUI operating system on cheap PC-compatible machines on a trial basis. Now instead of running the heavy-duty commercial applications on IBM MVS and lightweight interactive applications on the Amoco IBM VM/CMS Corporate Timeshare System Cloud, we had people trying to replace all of that with software running on personal computers under Microsoft DOS, Windows 3.0, the Mac and OS/2. What a mess!

Just when you would think that it could not get worse, this all dramatically changed in 1992 when the Distributed Computing mass extinction hit IT. The end-user business units began to buy their own Unix servers and hired young computer science graduates to write two-tier C and C++ client-server applications running on Unix servers.

Figure 18 – The Distributed Computing Revolution hit IT in the early 1990s. Suddenly, people started writing two-tier client-server applications on Unix servers. The Unix servers ran RDBMS database software, like Oracle, and the end-users' PCs ran "fat" client software.

The Distributed Computing Revolution Proves Disappointing

I got reluctantly dragged into this Distributed Computing Revolution when it was decided that a client-server version of ASNAP was needed as part of the "VM Elimination Project" of one of Amoco's subsidiaries. The intent of the VM Elimination Project was to move all of the VM/CMS applications used by the subsidiary to a two-tier client-server architecture. Since this was a very powerful Amoco subsidiary, the Amoco IT Department decided that getting rid of the Corporate Timeshare System Cloud of 31 VM/CMS nodes was a good idea. The Amoco IT Department quickly decided that it would be better for the Amoco IT Department to take over the Distributed Computing Revolution by hosting Unix server farms for two-tier client-server applications, rather than let the end-user business units start to build their own Unix server farms. You see, Amoco's IT Department had originally been formed in the 1960s to prevent the end-user business units from going out and buying their own IBM mainframes.

IT was changing fast, and I could sense that there was danger in the air for VM/CMS Programmers. Even IBM was caught flat-footed and nearly went bankrupt in the early 1990s because nobody wanted their IBM mainframes any longer. Everybody wanted C/C++ Programmers working on Unix servers. So I had to teach myself Unix, C and C++ to survive. In order to do that, I bought my very first home PC, an 80-386 machine running Windows 3.0 with 5 MB of memory and a 100 MB hard disk, for $1500. I also bought the Microsoft C7 C/C++ compiler for something like $300. And that was in 1992 dollars! One reason for the added expense was that there were no Internet downloads in those days because there were no high-speed ISPs. PCs did not typically have CD-ROM drives either, so the software came on 33 diskettes, each with a 1.44 MB capacity, that had to be loaded one diskette at a time in sequence. The software also came with about a foot of manuals, printed on very thin paper, describing the C++ class libraries. Indeed, suddenly finding yourself to be obsolete is not a pleasant thing and calls for drastic action.

Unix was a big shock for me. The Unix operating system seemed like a very primitive 1970s-type operating system to me compared to the power of thousands of virtual machines running under VM/CMS. On the VM/CMS nodes, I was using REXX as the command-line interface to VM/CMS. REXX was a very powerful interpreted procedural language that was much like the IBM PL/I language that was developed by IBM to replace Fortran and COBOL back in the 1960s. However, I learned that by combining the Unix KornShell with grep and awk, one could write similar Unix scripts, with some difficulty. The Unix vi editor was certainly not a match for IBM's ISPF editor either.

Unfortunately, the two-tier client-server Distributed Computing Revolution of the early 1990s proved to be a real nightmare for programmers. The "fat" client software running on the end-users' Windows 3.0 PCs required many home-grown Windows .dll files, and it was found that installing one application's version of a shared .dll file could overwrite the version that another application depended upon and break it. In order to deal with this ".dll Hell" problem, Amoco created an IT Change Management department. I vividly remember my very first Amoco Change Management meeting for the two-tier distributed computing version of ASNAP. The two-tier version of ASNAP was being written by an outside development company, so I was not familiar with their code for the Distributed Computing version of ASNAP. At the Change Management meeting, I learned that Applications Development could no longer just slap in a new release of ASNAP like we had been doing for many years. Instead, we had to turn over the two-tier code to an implementation group for testing before it could be incorporated into the next Distributed Computing Package. Distributed Computing Packages were pushed to the end-users' PCs once per month! So instead of being able to send out an update to the ASNAP programs on 31 VM/CMS nodes in less than 10 minutes whenever we decided, we now sometimes had to wait a whole month to do so!
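The ".dll Hell" problem, and the reason a central group had to test every application together before each monthly package, can be shown with a toy model. This is a sketch under stated assumptions, not how Windows 3.0 actually resolved .dll files. It assumes a single shared directory in which each installer copies its own .dll versions over whatever is already there, and it assumes each application works with exactly one version of each .dll. SharedSystemDir, App, conflicts and the .dll names are hypothetical.

```python
class SharedSystemDir:
    """Hypothetical model of one shared directory of .dll files on a Windows 3.0 PC."""

    def __init__(self):
        self.dlls = {}  # .dll name -> version currently on disk

    def install(self, app):
        # Each installer simply copies its own .dll versions over whatever is there.
        for name, version in app.needs.items():
            self.dlls[name] = version


class App:
    def __init__(self, name, needs):
        self.name = name
        # .dll name -> the one version this app works with (a simplifying assumption)
        self.needs = needs

    def runs_on(self, pc):
        return all(pc.dlls.get(n) == v for n, v in self.needs.items())


def conflicts(apps):
    """A pre-release check: .dll names that two or more apps want at different versions."""
    wanted = {}
    for app in apps:
        for name, version in app.needs.items():
            wanted.setdefault(name, set()).add(version)
    return sorted(name for name, versions in wanted.items() if len(versions) > 1)
```

Installing a hypothetical "Other client" that ships GRIDCTL.DLL version 2 silently breaks an ASNAP client that was built against version 1. A check like conflicts() only helps if someone runs it across every application going into the package, which is exactly the kind of centralized testing that slowed releases down to once a month.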

The Distributed Computing Platform Becomes Unsustainable

Fortunately, in 1995 the Internet Revolution hit. At first, it was just passive corporate websites that could only display static information, but soon people were building interactive corporate websites too. The "fat" client software on the end-users' PCs was then reduced to "thin" client software in the form of a browser on their PC communicating with backend Unix webservers delivering the interactive content on the fly. But to allow heavy-duty corporate websites to scale with increasing loads, a third layer was soon added to the Distributed Computing Platform to form a 3-tier Client-Server model (Figure 18). The third layer ran Middleware software containing the business logic for applications and was positioned between the backend RDBMS database layer and the webservers dishing out dynamic HTML to the end-user browsers. The three-tier Distributed Computing Model finally put an end to the ".dll Hell" of the two-tier Distributed Computing Model. But the three-tier Distributed Computing Model introduced a new problem. In order to scale, the three-tier Distributed Computing Model required an ever-increasing number of "bare metal" servers in the Middleware layer. For example, in 2012 in my posting The Limitations of Darwinian Systems, I explained that it was getting nearly impossible for Middleware Operations at Discover to maintain the Middleware software on the Middleware layer of its three-tier Distributed Computing Platform because there were just too many Unix servers running in the Middleware layer. By the end of 2016, things were much worse. For a discussion of this complexity, see Software Embryogenesis. Fortunately, when I left Discover in 2016, they were heading for the virtual servers of the Cloud.
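The division of labor in the three-tier model can be sketched as three small layers. The browser only receives HTML. The webserver only builds that HTML. The business logic lives in a pool of Middleware servers that sit in front of the database. The following is a minimal Python sketch assuming a dict in place of the RDBMS, a made-up business rule and naive round-robin load balancing. MiddlewareServer, WebServer and the data are all hypothetical.

```python
import itertools

# Data tier: a dict standing in for the backend RDBMS (hypothetical data).
DATABASE = {"cust42": {"name": "Pat", "miles": 12000}}


class MiddlewareServer:
    """Middleware tier: holds the business logic; scaling means adding more of these."""

    def __init__(self, name):
        self.name = name
        self.handled = 0

    def customer_summary(self, cust_id):
        self.handled += 1
        row = DATABASE[cust_id]
        # Hypothetical business rule, kept out of both the browser and the database.
        status = "Gold" if row["miles"] >= 10000 else "Basic"
        return {"name": row["name"], "status": status}


class WebServer:
    """Web tier: turns Middleware results into dynamic HTML for a thin browser client."""

    def __init__(self, middleware_pool):
        # Naive round-robin across the Middleware servers.
        self._next = itertools.cycle(middleware_pool)

    def handle(self, cust_id):
        data = next(self._next).customer_summary(cust_id)
        return f"<p>{data['name']}: {data['status']}</p>"
```

With three Middleware servers in the pool, six requests are spread two per server. To handle more load, you add more MiddlewareServer entries. In a 2016 datacenter, each of those entries was a "bare metal" Unix server that someone had to patch and maintain.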

The Power of Cloud Microservices

Before concluding, I would like to relay some of my experiences with the power of Cloud Microservices. The use of Microservices is another emerging technology in Cloud Computing that extends our experiences with SOA. SOA (Service Oriented Architecture) came into wide use around 2005. With SOA, people started to introduce common services in the Middleware layer of the three-tier Distributed Computing Model. SOA allowed other Middleware application components to call a set of common SOA services for data. That eliminated the need for each application to reinvent the wheel for many common application data needs. Cloud Microservices take this one step further. Instead of SOA services running on bare-metal Unix servers, Cloud Microservices run in Cloud Containers, and each Microservice provides a very primitive function. By using a large number of Cloud Microservices running in Cloud Containers, it is now possible to quickly throw together a new application and push it into Production.

I left Amoco in 1999 when BP bought Amoco and terminated most of Amoco's IT Department. For more on that, see Hierarchiology and the Phenomenon of Self-Organizing Organizational Collapse. I then joined the IT Department of United Airlines, working on the CIDB - Customer Interaction Data Base. The CIDB initially consisted of 10 C++ Tuxedo services running in a Tuxedo Domain on Unix servers. Tuxedo (Transactions for Unix, Extended for Distributed Operations) was an early form of Middleware software developed in the 1980s to create a TPM (Transaction Processing Monitor) running under Unix that could perform the same kind of secured transaction processing that IBM's CICS (1968) provided on IBM MVS mainframes. The original 10 Tuxedo services allowed United's business applications and the www.united.com website to access the data stored in the CIDB Oracle database. We soon found that Tuxedo was very durable and robust. You could literally throw Tuxedo down the stairs without a dent! A Tuxedo Domain was very much like a Cloud Container. When you booted up a Tuxedo Domain, a number of virtual Tuxedo servers were brought up. We had each virtual Tuxedo server run just one primitive service. The Tuxedo Domain had a configuration file that allowed us to define each of the Tuxedo servers and the service that ran in it. For example, we could configure the Tuxedo Domain so that a minimum of 1 and a maximum of 10 instances of Tuxedo Server-A were brought up. So initially, only a single instance of Tuxedo Server-A would come up to receive traffic. Incoming transactions were fed to the Tuxedo Domain through a Tuxedo queue. If the first instance of Tuxedo Server-A was found to be busy, a second instance of Tuxedo Server-A would be automatically cranked up. The number of Tuxedo Server-A instances would then change dynamically as the Tuxedo load varied. Like much object-oriented code, the C++ code for our Tuxedo services had memory leaks, but that was not a problem for us. 
When one of the instances of Tuxedo Server-A ran out of memory, it would simply die, and Tuxedo would crank up another instance of Tuxedo Server-A. We could even change the maximum number of running Tuxedo Server-A instances on the fly without having to reboot the Tuxedo Domain.
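The min/max behavior described above can be simulated in plain Python to make the mechanics concrete. This is a toy model, not Tuxedo's API or its configuration-file syntax. ServerPool, Instance, the per-transaction "leak" and the memory limit are hypothetical. The model also assumes that time advances in ticks, that each running instance handles at most one queued transaction per tick, and that a leaking instance finishes its current transaction before it dies.

```python
from collections import deque


class Instance:
    """One running copy of a server, like the text's Tuxedo Server-A."""

    def __init__(self, ident, memory_limit):
        self.ident = ident
        self.memory_used = 0
        self.memory_limit = memory_limit

    def handle(self, txn, leak):
        # Every transaction leaks a little memory, like the C++ services in the text.
        self.memory_used += leak
        return f"{txn} by #{self.ident}"

    def out_of_memory(self):
        return self.memory_used > self.memory_limit


class ServerPool:
    """Toy min/max pool of identical instances fed from one transaction queue."""

    def __init__(self, min_instances, max_instances, memory_limit=100, leak=10):
        self.min, self.max = min_instances, max_instances
        self.memory_limit, self.leak = memory_limit, leak
        self.queue = deque()
        self.instances = []
        self.started = 0
        self.deaths = 0
        self._top_up()

    def _start(self):
        self.started += 1
        self.instances.append(Instance(self.started, self.memory_limit))

    def _top_up(self):
        # Never run fewer than the configured minimum.
        while len(self.instances) < self.min:
            self._start()

    def set_max(self, new_max):
        # The ceiling can be changed while the pool keeps running.
        self.max = new_max

    def tick(self):
        """One time step: each running instance takes at most one queued transaction."""
        # Scale up while the backlog exceeds the running instances, up to the maximum.
        while len(self.queue) > len(self.instances) and len(self.instances) < self.max:
            self._start()
        done = []
        for inst in list(self.instances):
            if not self.queue:
                break
            done.append(inst.handle(self.queue.popleft(), self.leak))
            if inst.out_of_memory():
                # A leaking instance simply dies...
                self.instances.remove(inst)
                self.deaths += 1
        # ...and a fresh one is started if the pool dropped below its minimum.
        self._top_up()
        # Scale back down to the minimum once the queue has drained.
        if not self.queue:
            del self.instances[self.min:]
        return done
```

With ServerPool(1, 10) and 25 queued transactions, the first tick cranks the pool up to 10 instances, and the pool shrinks back to 1 once the queue drains. Calling set_max(20) between ticks raises the ceiling without rebuilding the pool. With the default settings, an instance dies on its 11th transaction and is replaced by a fresh one.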

United Airlines found the CIDB Tuxedo Domain to be so useful that we began to write large numbers of Tuxedo services. For example, we wrote many Tuxedo services that interacted with United's famous Apollo reservation system, which first appeared in 1971, and with many other United applications and databases. Soon United began to develop new applications that simply called many of our Tuxedo Microservices. We tried to keep our Tuxedo Microservices very atomic and simple. Rather than provide our client applications with an entire engine, we provided them with the parts for an engine, like engine blocks, pistons, crankshafts, water pumps, distributors, induction coils, intake manifolds, carburetors and alternators.

One day in 2002 this came in very handy. My boss called me into his office at 9:00 AM one morning and explained that United Marketing had come up with a new promotional campaign called "Fly Three - Fly Free". The "Fly Three - Fly Free" campaign worked like this. If a United customer flew three flights in one month, they would get an additional future flight for free. All the customer had to do was to register for the program on the www.united.com website. In fact, United Marketing had actually begun running ads in all of the major newspapers about the program that very day. The problem was that nobody in Marketing had told IT about the program, and the www.united.com website did not have the software needed to register customers for the program. I was then sent to an emergency meeting of the Application Development team that supported the www.united.com website. According to the ads running in the newspapers, the "Fly Three - Fly Free" program was supposed to start at midnight, so we had less than 15 hours to design, develop, test and implement the necessary software for the www.united.com website! Amazingly, we were able to do this by having the www.united.com website call a number of our existing primitive Tuxedo Microservices, including ones that interacted with the Apollo reservation system.
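Building "Fly Three - Fly Free" out of existing parts can be sketched as a thin layer of new application logic over a handful of one-job services. All of the service names, the in-memory data and the promotion code below are hypothetical. This is not the actual CIDB or Apollo interface, which the text does not describe. Plain dicts and sets stand in for data that the real services reached through Tuxedo.

```python
# Hypothetical in-memory stand-ins for data reached through existing primitive services.
CUSTOMERS = {"MP1001": {"name": "J. Smith"}}
FLOWN = {("MP1001", 2002, 5): ["UA100", "UA101", "UA202"]}  # flights per customer-month
REGISTRATIONS = set()
CREDITS = {}

PROMO = "FLY3FLYFREE"  # hypothetical promotion code


# --- existing primitive services: each one does one small thing ---
def customer_exists(cust_id):
    return cust_id in CUSTOMERS


def flights_flown(cust_id, year, month):
    return FLOWN.get((cust_id, year, month), [])


def add_registration(cust_id, promo):
    REGISTRATIONS.add((cust_id, promo))


def is_registered(cust_id, promo):
    return (cust_id, promo) in REGISTRATIONS


def add_free_flight_credit(cust_id):
    CREDITS[cust_id] = CREDITS.get(cust_id, 0) + 1
    return CREDITS[cust_id]


# --- new application logic, composed from the primitives ---
def register_for_promo(cust_id):
    """What a new registration page on the website might call."""
    if not customer_exists(cust_id):
        return "unknown customer"
    add_registration(cust_id, PROMO)
    return "registered"


def award_month(cust_ids, year, month):
    """Month-end job: one free flight for each registered customer with three or more flights."""
    awarded = []
    for cust_id in cust_ids:
        if is_registered(cust_id, PROMO) and len(flights_flown(cust_id, year, month)) >= 3:
            add_free_flight_credit(cust_id)
            awarded.append(cust_id)
    return awarded
```

The point of the design is that only register_for_promo and award_month are new code. Everything they call already existed and had already been tested, which is what made a same-day turnaround plausible.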

Carbon-based life on this planet also makes extensive use of many primitive Microservices. In Facilitated Variation and the Utilization of Reusable Code by Carbon-Based Life, I showcased the theory of facilitated variation by Marc W. Kirschner and John C. Gerhart. In The Plausibility of Life (2005), Marc W. Kirschner and John C. Gerhart present their theory of facilitated variation. The theory of facilitated variation maintains that, although the concepts and mechanisms of Darwin's natural selection are well understood, the mechanisms that brought forth viable biological innovations in the past are a bit wanting in classical Darwinian thought. In classical Darwinian thought, it is proposed that random genetic changes, brought on by random mutations to DNA sequences, can very infrequently cause small incremental enhancements to the survivability of the individual, and thus provide natural selection with something of value to promote in the general gene pool of a species. But, as is frequently noted, most random genetic mutations are either totally inconsequential or totally fatal, and consequently are either irrelevant to the gene pool of a species or are quickly removed from it. The theory of facilitated variation, like classical Darwinian thought, maintains that the phenotype of an individual is key, and not so much its genotype, since natural selection can only operate upon phenotypes. The theory explains that the phenotype of an individual is determined by a number of 'constrained' and 'deconstrained' elements. The constrained elements are the "conserved core processes" of living things, which have remained essentially unchanged for billions of years and are used by all living things to sustain the fundamental functions of carbon-based life, like the generation of proteins from the information stored in DNA sequences using mRNA, tRNA and ribosomes, or the metabolism of carbohydrates via the Krebs cycle. 
The deconstrained elements are weakly-linked regulatory processes that can change the amount, location and timing of gene expression within a body, and which, therefore, can easily control which conserved core processes a cell runs and when it runs them. The theory of facilitated variation maintains that most favorable biological innovations arise from minor mutations to the deconstrained, weakly-linked regulatory processes that control the conserved core processes of life, rather than from random mutations of the genotype in general that would change the phenotype of an individual in a purely random direction. That is because the most likely change to the phenotype of an individual undergoing a random mutation to its genotype is death.
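In software terms, the split between conserved core processes and deconstrained regulation looks like a small set of shared, rarely changed functions driven by an easily edited table. The following is a loose analogy sketched in Python, not a biological model. The function names, cell types and gene lists are illustrative only. The point is that a "regulatory mutation" edits only the table and leaves the core code untouched.

```python
# Conserved core processes: shared routines that every cell type reuses unchanged.
def transcribe(gene):
    return f"mRNA({gene})"


def translate(mrna):
    return f"protein<{mrna}>"


def express(genes):
    """Run the same core pipeline on whichever genes regulation switches on."""
    return [translate(transcribe(g)) for g in genes]


# Deconstrained regulation: a small table of which genes each cell type turns on.
REGULATION = {
    "muscle": ["actin", "myosin"],
    "red_blood": ["hemoglobin"],
}


def build_cell(cell_type, regulation):
    return {"type": cell_type, "proteins": express(regulation[cell_type])}


def regulatory_mutation(regulation, cell_type, gene):
    """Change only the table: switch an existing gene on in another cell type."""
    mutated = {k: list(v) for k, v in regulation.items()}
    mutated[cell_type].append(gene)
    return mutated
```

A change made this way can only recombine machinery that already works, which is why, in the theory's terms, it is far less likely to be fatal than a random change to the core routines themselves.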

Marc W. Kirschner and John C. Gerhart begin by presenting the fact that simple prokaryotic bacteria, like E. coli, require a full 4,600 genes just to sustain the most rudimentary form of bacterial life, while much more complex multicellular organisms, like human beings, consisting of tens of trillions of cells differentiated into hundreds of differing cell types in the numerous complex organs of a body, require a mere 22,500 genes to construct. The baffling question is, how is it possible to construct a human being with just under five times the number of genes of a simple single-celled E. coli bacterium? The authors contend that it is only possible for carbon-based life to do so by heavily relying upon reusable code in the genome of complex forms of carbon-based life.

Figure 19 – A simple single-celled E. coli bacterium is constructed using a full 4,600 genes.

Figure 20 – However, a human being, consisting of tens of trillions of cells that are differentiated into the hundreds of differing cell types used to form the organs of the human body, uses a mere 22,500 genes to construct a very complex body, which is just slightly under five times the number of genes used by simple E. coli bacteria to construct a single cell. How is it possible to explain this huge dynamic range of carbon-based life? Marc W. Kirschner and John C. Gerhart maintain that, like complex software, carbon-based life must heavily rely on the microservices of reusable code.

Conclusion

Clearly, IT has now moved back to the warm, comforting, virtual seas of Cloud Computing that allow developers to spend less time struggling with the complexities of the Distributed Computing Platform that first arose in the 1990s. It appears that building new applications from Microservices running in containers in the Cloud will be the wave of the future for IT. If you are an IT professional and have not yet moved to the Cloud, now would be a good time to start. It is always hard to start over, but at least you should find that moving to Cloud development makes your life much easier.

Comments are welcome at scj333@sbcglobal.net

To see all posts on softwarephysics in reverse order go to:

https://softwarephysics.blogspot.com/

Regards,

Steve Johnston