In this post, I would like to discuss a possible new physical law of the Universe that should interest everyone who has been following this blog on softwarephysics, because it generalizes the concept of Universal Darwinism into a law that would apply to all evolving systems. It is called the Law of Increasing Functional Information and is outlined in the paper below:

On the roles of function and selection in evolving systems

https://www.pnas.org/doi/epdf/10.1073/pnas.2310223120

and is also described in the YouTube video below:

Robert M. Hazen, PhD - The Missing Law: Is There a Universal Law of Evolving Systems?

https://youtu.be/TrNf62IGqM8?t=2114

The paper explains that four branches of classical physics, all in place by the end of the 19th century, explain nearly all of the phenomena of our everyday lives:

1. Newton's laws of motion

2. Newton's law of gravitation

3. The classical electrodynamics of Maxwell and many others

4. The first and second laws of thermodynamics of James Prescott Joule and Rudolf Clausius

The authors explain that all of the above laws were discovered by the empirical recognition of the "conceptual equivalences" of several seemingly unrelated physical phenomena. For example, Newton's laws of motion arose from the recognition that the uniform motion of a body along a straight line and at a constant speed was conceptually equivalent to the accelerated motion of a body that is changing speed or direction if the concepts of mass, force and acceleration were related by three physical laws of motion. Similarly, an apple falling from a tree and the Moon constantly falling to the Earth in an elliptical orbit were also made to be conceptually equivalent by Newton's law of gravitation. Later, the many disparate phenomena of electricity and magnetism were made conceptually equivalent by means of Maxwell's equations. Finally, the many phenomena of kinetic, potential and heat energy were made conceptually equivalent by means of the first and second laws of thermodynamics.

The authors then go on to wonder whether there is a similar conceptual equivalence for the many seemingly disparate systems that evolve over time, such as stars, atomic nuclei, minerals and living things. As a softwarephysicist, I would add software to that list as well. Is it possible that we have overlooked a fundamental physical law of the Universe that could explain the nature of all evolving systems? Or do evolving systems simply arise as emergent phenomena from the four branches of classical physics outlined above? The authors point out that the Universe, starting from its very low-entropy state immediately after the Big Bang, could have taken a direct path to a very high-entropy, patternless state without producing any complexity at all, while still meticulously following all of the above laws of classical physics. But that is not what happened to our Universe. Something got in the way of the smooth dissipation of free energy from low to high entropy, like the disruption caused by large rocks in a mountain stream, allowing complex evolving systems far from thermodynamic equilibrium to form and persist.

Some have tried to group all such evolving systems under the banner of Universal Darwinism. In this view, the Darwinian processes of inheritance, innovation and natural selection explain it all in terms of the current laws of classical physics. But is that true? Are we missing something? The authors propose that we are, because all evolving systems seem to be conceptually equivalent in three important ways, which suggests that a new underlying physical law might be guiding them all.

1. Each system is formed from numerous interacting units (e.g., nuclear particles, chemical elements, organic molecules, or cells) that result in combinatorially large numbers of possible configurations.

2. In each of these systems, ongoing processes generate large numbers of different configurations.

3. Some configurations, by virtue of their stability or other “competitive” advantage, are more likely to persist owing to selection for function.

The above is certainly true of software source code. Software source code consists of a huge number of interacting symbols that can be combined into a very large number of possible configurations to produce programs.

Figure 1 – Source code for a C program that calculates an average of several numbers entered at the keyboard.

There are also millions of programmers, and now LLMs (Large Language Models) like Google Bard and OpenAI GPT-4, generating these configurations. Programmers have been at it for the past 82 years, or 2.6 billion seconds, ever since Konrad Zuse first cranked up his Z3 computer in May of 1941. As programmers work on these very large configurations, they constantly discard "buggy" configurations that do not work. But even software that "works" just fine can easily become extinct when better configurations of the software evolve. Take, for example, the extinction of VisiCalc by Lotus 1-2-3, and the later displacement of Lotus 1-2-3 itself by Microsoft Excel.

As the authors put it:

These three characteristics - component diversity, configurational exploration, and selection - which we conjecture represent conceptual equivalences for all evolving natural systems, may be sufficient to articulate a qualitative law-like statement that is not implicit in the classical laws of physics. In all instances, evolution is a process by which configurations with a greater degree of function are preferentially selected, while nonfunctional configurations are winnowed out. We conclude:

Systems of many interacting agents display an increase in diversity, distribution, and/or patterned behavior when numerous configurations of the system are subject to selective pressure.

However, is there a universal basis for selection? And is there a more quantitative formalism underlying this conjectured conceptual equivalence - a formalism rooted in the transfer of information? We elaborate on these questions here and argue that the answer to both questions is yes.

The authors then go on to propose their new physical law:

The Law of Increasing Functional Information:

The Functional Information of a system will increase (i.e., the system will evolve) if many different configurations of the system are subjected to selection for one or more functions.

In their view, all evolving systems are conceptually equivalent because they are all driven by a universal tendency for Functional Information to increase, as captured by the Law of Increasing Functional Information. In many previous softwarephysics posts, I have covered the pivotal role that self-replicating information has played in the history of our Universe and also in the evolution of software. For more on that, see A Brief History of Self-Replicating Information. But under the more general Law of Increasing Functional Information, self-replicating information becomes just a subcategory of the grander concept of Functional Information.
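To make the Law a little more concrete, here is a minimal Python sketch of a toy evolving system. Everything in it is a hypothetical illustration, not the paper's method: configurations are 20-bit strings, the "function" is simply the number of 1 bits, and selection keeps the best 50 of the parents plus their mutated offspring. Functional Information is computed the way it is defined later in this post, as -log2 of the fraction of all 2^20 configurations that perform at least as well as the best one found so far.

```python
import math
import random

LENGTH = 20        # bits per configuration (hypothetical toy system)
POP_SIZE = 50      # configurations kept after each round of selection
GENERATIONS = 200

def function_score(config):
    """Hypothetical 'function': how many bits are set to 1."""
    return sum(config)

def fi_above(threshold, length=LENGTH):
    """-log2 of the fraction of all 2**length configurations scoring >= threshold."""
    count = sum(math.comb(length, k) for k in range(threshold, length + 1))
    return math.log2(2**length) - math.log2(count)

def mutate(config, rng):
    """Copy a configuration and flip one randomly chosen bit."""
    child = list(config)
    child[rng.randrange(len(child))] ^= 1
    return child

def evolve(seed=1):
    """Return the Functional Information of the best configuration, per generation."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(LENGTH)] for _ in range(POP_SIZE)]
    history = []
    for _ in range(GENERATIONS):
        best = max(function_score(c) for c in population)
        history.append(fi_above(best))
        offspring = [mutate(c, rng) for c in population]
        # Selection for function: parents and offspring compete; the top POP_SIZE survive.
        pool = population + offspring
        pool.sort(key=function_score, reverse=True)
        population = pool[:POP_SIZE]
    return history

if __name__ == "__main__":
    history = evolve()
    print(f"FI of best configuration: {history[0]:.2f} bits at start, "
          f"{history[-1]:.2f} bits at the end")
```

Because parents compete alongside their offspring, the best score can never drop, so in this sketch the Functional Information can only stay level or climb as selection proceeds.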

Figure 2 – Imagine a pile of DNA, RNA or protein molecules of all possible sequences, sorted by activity with the most active at the top. A horizontal plane through the pile indicates a given level of activity; as this rises, fewer sequences remain above it. The Functional Information required to specify that activity is -log2 of the fraction of sequences above the plane. Expressing this fraction in terms of information provides a straightforward, quantitative measure of the difficulty of a task.

But what is Functional Information? Basically, Functional Information is information that can do things. It has agency. The concept of Functional Information was first introduced in a one-page paper:

Functional Information: Molecular messages

https://www.nature.com/articles/423689a

It is essentially Leon Brillouin's 1953 concept of information as a form of negative entropy, which he called "negentropy", but with a slight twist. The above paper introduces the concept of Functional Information as follows:

By analogy with classical information, Functional Information is simply -log2 of the probability that a random sequence will encode a molecule with greater than any given degree of function. For RNA sequences of length n, that fraction could vary from 4⁻ⁿ if only a single sequence is active, to 1 if all sequences are active. The corresponding functional information content would vary from 2n (the amount needed to specify a given random RNA sequence) to 0 bits. As an example, the probability that a random RNA sequence of 70 nucleotides will bind ATP with micromolar affinity has been experimentally determined to be about 10⁻¹¹. This corresponds to a functional information content of about 37 bits, compared with 140 bits to specify a unique 70-mer sequence. If there are multiple sequences with a given activity, then the corresponding Functional Information will always be less than the amount of information required to specify any particular sequence. It is important to note that Functional Information is not a property of any one molecule, but of the ensemble of all possible sequences, ranked by activity.
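The numbers in that passage are easy to check. Here is a minimal Python sketch; the helper name functional_information_bits is hypothetical, and the 10⁻¹¹ fraction is the experimental estimate quoted above:

```python
import math

def functional_information_bits(fraction_functional):
    """Functional Information in bits: -log2 of the fraction of all sequences
    whose activity meets or exceeds the chosen threshold."""
    return -math.log2(fraction_functional)

n = 70                                              # RNA length in nucleotides
total_sequences = 4**n                              # every possible 70-mer
bits_for_one_sequence = math.log2(total_sequences)  # 2n = 140 bits
atp_binding_bits = functional_information_bits(1e-11)

print(f"{total_sequences:.3e} possible 70-mers")
print(f"specifying one particular 70-mer: {bits_for_one_sequence:.0f} bits")
print(f"binding ATP with micromolar affinity: {atp_binding_bits:.1f} bits")
```

The last line prints 36.5 bits, which the quoted paper rounds to "about 37 bits".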

Imagine a pile of DNA, RNA or protein molecules of all possible sequences, sorted by activity with the most active at the top. A horizontal plane through the pile indicates a given level of activity; as this rises, fewer sequences remain above it. The Functional Information required to specify that activity is -log2 of the fraction of sequences above the plane. Expressing this fraction in terms of information provides a straightforward, quantitative measure of the difficulty of a task. More information is required to specify molecules that carry out difficult tasks, such as high-affinity binding or the rapid catalysis of chemical reactions with high energy barriers, than is needed to specify weak binders or slow catalysts. But precisely how much more Functional Information is required to specify a given increase in activity is unknown. If the mechanisms involved in improving activity are similar over a wide range of activities, then power-law behaviour might be expected. Alternatively, if it becomes progressively harder to improve activity as activity increases, then exponential behaviour may be seen. An interesting question is whether the relationship between Functional Information and activity will be similar in many different systems, suggesting that common principles are at work, or whether each case will be unique.
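The "pile" can be built exhaustively for a tiny case. In the hypothetical Python sketch below, the pile is every RNA sequence of length 6, and "activity" is simply the number of positions that match an arbitrary target sequence, GGAUCC, invented for illustration. Raising the horizontal plane (the activity threshold) leaves fewer sequences above it, so the Functional Information climbs from 0 bits to 2n = 12 bits:

```python
import itertools
import math

BASES = "ACGU"
TARGET = "GGAUCC"   # hypothetical 'ideal' sequence; activity = matching positions

def activity(seq):
    """Toy activity score: the number of positions that match TARGET."""
    return sum(a == b for a, b in zip(seq, TARGET))

# The pile: every possible sequence of length n, tagged with its activity.
pile = [activity(s) for s in itertools.product(BASES, repeat=len(TARGET))]
total = len(pile)   # 4**6 = 4096 sequences

def fi_at_threshold(threshold):
    """-log2 of the fraction of the pile lying at or above the plane `threshold`."""
    above = sum(1 for a in pile if a >= threshold)
    return math.log2(total) - math.log2(above)

for threshold in range(len(TARGET) + 1):
    print(f"activity >= {threshold}: {fi_at_threshold(threshold):5.2f} bits")
```

Whether real molecules show the power-law or exponential behaviour the quoted passage speculates about cannot be read off a toy like this; it only shows the bookkeeping.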

Indeed, any programmer could also imagine a similar pile of programs consisting of all possible sequences of source code with the buggiest versions at the bottom. When you reach the level of the intersecting plane, you finally reach those versions of source code that produce a program that actually provides the desired function. However, many of those programs that actually worked might be very inefficient or hard to maintain because of a sloppy coding style. As you move higher in the pile, the number of versions decreases but these versions produce the desired function more efficiently or are composed of cleaner code. As outlined above, the Functional Information required to specify such a software activity is -log2 of the fraction of source code programs above the plane.
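Here is a hypothetical Python sketch of such a pile of programs, small enough to enumerate completely. The "programming language" is invented for illustration: five symbols (x, 1, 2, + and *), every program is exactly three symbols long, and the desired function is doubling the input. Each candidate is compiled and tested on a few inputs; anything that fails to compile, raises an error or returns the wrong answer falls below the plane:

```python
import itertools
import math
import warnings

SYMBOLS = "x12+*"          # hypothetical tiny programming language
TEST_INPUTS = range(5)     # the desired function is f(x) = 2 * x

def performs_function(source):
    """True if `source` is a valid expression that computes 2*x on every test input."""
    try:
        with warnings.catch_warnings():
            warnings.simplefilter("ignore")    # silence SyntaxWarnings on junk code
            code = compile(source, "<candidate>", "eval")
        return all(eval(code, {"__builtins__": {}}, {"x": x}) == 2 * x
                   for x in TEST_INPUTS)
    except Exception:                          # syntax errors, unknown names, etc.
        return False

programs = ["".join(p) for p in itertools.product(SYMBOLS, repeat=3)]
working = [p for p in programs if performs_function(p)]
fi_bits = math.log2(len(programs)) - math.log2(len(working))

print(f"{len(working)} of {len(programs)} programs work: {working}")
print(f"Functional Information: {fi_bits:.2f} bits")
```

Only x+x, x*2 and 2*x survive out of 125 candidates, giving about 5.38 bits. Ranking the survivors further by efficiency or style, as described above, would raise the plane and the Functional Information further.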

The Softwarephysics of it All

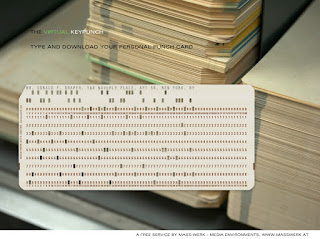

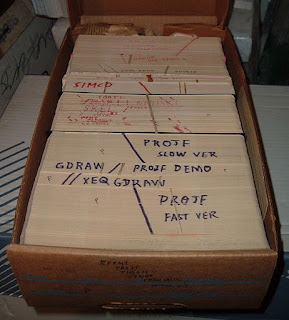

Before going on to explain how the Law of Increasing Functional Information has affected the evolution of software over the past 2.6 billion seconds, let me tell you a bit about the origin of softwarephysics. I started programming in 1972 and finished up my B.S. in Physics at the University of Illinois at Urbana in 1973. I then headed up north to complete an M.S. in Geophysics at the University of Wisconsin at Madison. From 1975 to 1979, I was an exploration geophysicist exploring for oil, first with Shell, and then with Amoco. Then in 1979, I made a career change to become an IT professional. One very scary Monday morning, I was escorted to my new office cubicle in Amoco's IT department, and I immediately found myself surrounded by a large number of very strange IT people, all scurrying about in a near state of panic, like the characters in Alice in Wonderland. Suddenly, it seemed like I was trapped in a frantic computer simulation, buried in punch card decks and fan-fold listings. After nearly 40 years in the IT departments of several major corporations, I can now state with confidence that most corporate IT departments can best be described as "frantic" in nature. This new IT job was a totally alien experience for me, and I immediately suspected that I had made a dreadful mistake. I soon learned that being an IT professional was a lot harder than being an exploration geophysicist.

Figure 3 - As depicted back in 1962, George Jetson was a computer engineer in the year 2062, who had a full-time job working 3 hours a day, 3 days a week, pushing the same buttons that I pushed for 40 years as an IT professional.

But it was not supposed to be that way. As a teenager growing up in the 1960s, I was led to believe that in the 21st century, I would be leading the life of George Jetson, a computer engineer in the year 2062, who had a full-time job working 3 hours a day, 3 days a week, pushing buttons. But as a newly minted IT professional, I quickly learned that all you had to do was push the right buttons, in the right sequence, at the right time, and with zero errors. How hard could that be? Well, it turned out to be very difficult indeed!

To try to get myself out of this mess, I figured that if you could apply physics to geology, why not apply physics to software? So, like the exploration team at Amoco that I had just left, which consisted of geologists, geophysicists, paleontologists, geochemists, and petrophysicists, I decided to take all the physics, chemistry, biology, and geology that I could muster and throw it at the problem of software. The basic idea was that many concepts in physics, chemistry, biology, and geology suggested to me that the IT community had accidentally created a pretty decent computer simulation of the physical Universe on a grand scale, a Software Universe so to speak, and that I could use this fantastic simulation in reverse, to better understand the behavior of commercial software by comparing software to how things behaved in the physical Universe. Softwarephysics depicts software as a virtual substance and relies on our understanding of the current theories in physics, chemistry, biology, and geology to help us model the nature of software behavior. So in physics, we use software to simulate the behavior of the Universe, while in softwarephysics we use the Universe to simulate the behavior of software.

After a few months on the job, I began to suspect that the second law of thermodynamics, viewed from the perspective of statistical mechanics, was largely to blame. I was always searching for the small population of programs that could perform a given function out of a nearly infinite population of programs that could not. It reminded me very much of Boltzmann's concept of entropy in statistical mechanics. The relatively few functional programs that I was searching for had a very low entropy relative to the vast population of buggy programs that did not work. Worse yet, it seemed as though the second law of thermodynamics was constantly trying to destroy my programs whenever I did maintenance on them, by inserting new bugs whenever I changed my code. There were nearly an infinite number of ways to do it wrong and only a very few ways to do it right. But I am getting ahead of myself. To better understand all of this, consider the following thought experiment.
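That asymmetry between the many ways to do it wrong and the few ways to do it right can be sketched in a few lines of Python. This is a hypothetical illustration, not a measurement of real maintenance work: take a tiny working function, replace one random character with a different printable character, and count how many of the mutants still pass a simple test. The mutants are run with exec, which is only reasonable here because they are one-character variations of a known, harmless snippet.

```python
import random
import string

WORKING_SOURCE = "def avg(xs):\n    return sum(xs) / len(xs)\n"

def passes_test(source):
    """True if `source` defines avg() and avg([1, 2, 3]) returns 2."""
    namespace = {}
    try:
        exec(compile(source, "<mutant>", "exec"), namespace)
        return namespace["avg"]([1, 2, 3]) == 2
    except Exception:        # syntax errors, missing names, wrong types...
        return False

def mutate_one_character(source, rng):
    """Replace one randomly chosen character with a different printable one."""
    i = rng.randrange(len(source))
    choices = [c for c in string.printable if c != source[i]]
    return source[:i] + rng.choice(choices) + source[i + 1:]

def surviving_fraction(trials=2000, seed=42):
    """Fraction of random single-character edits that leave the program working."""
    rng = random.Random(seed)
    survivors = sum(passes_test(mutate_one_character(WORKING_SOURCE, rng))
                    for _ in range(trials))
    return survivors / trials

if __name__ == "__main__":
    print(f"{surviving_fraction():.1%} of single-character edits still work")
```

Only a small sliver of edits, such as swapping a space for a tab, survive; nearly every other change breaks the program.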

Figure 4 - We begin with a left compartment containing cold slow-moving nitrogen molecules (white circles) and a right compartment with hot fast-moving nitrogen molecules (black circles).

Figure 5 - Next, we perforate the divider between the compartments and allow the hot and cold nitrogen molecules to bounce off each other and exchange energies.

Figure 6 - After a period of time the two compartments will equilibrate to the same average temperature, but we will always find some nitrogen molecules bouncing around faster (black dots) and some nitrogen molecules bouncing around slower (white dots) than the average.

Recall that in 1738 Daniel Bernoulli proposed that gases were really composed of a very large number of molecules bouncing around in all directions. Gas pressure in a cylinder was simply the result of a huge number of molecular impacts from individual gas molecules striking the walls of a cylinder, and heat was just a measure of the kinetic energy of the molecules bouncing around in the cylinder. In 1859, physicist James Clerk Maxwell took Bernoulli's idea one step further. He combined Bernoulli's idea of a gas being composed of a large number of molecules with the new mathematics of statistics. Maxwell reasoned that the molecules in a gas would not all have the same velocities. Instead, there would be a distribution of velocities; some molecules would move very quickly while others would move more slowly, with most molecules having a velocity around some average velocity. Now imagine that the two compartments in Figure 4 are filled with nitrogen gas, but that the left compartment is filled with cold slow-moving nitrogen molecules (white dots), while the right compartment is filled with hot fast-moving nitrogen molecules (black dots). If we perforate the partition between compartments, as in Figure 5 above, we will observe that the fast-moving hot molecules on the right will mix and collide with the slow-moving cold molecules on the left and will give up kinetic energy to the slow-moving molecules. Eventually, both compartments will be found to be at the same temperature as shown in Figure 6, but we will always find some molecules moving faster than the average (black dots), and some molecules moving slower than the average (white dots), just as Maxwell had determined. This is called a state of thermal equilibrium and demonstrates a thermal entropy increase. We never observe a gas in thermal equilibrium suddenly dividing itself into hot and cold compartments all by itself. The gas can go from Figure 5 to Figure 6 but never the reverse, because such a process would violate the second law of thermodynamics.
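The same story can be told with a toy model. In the hypothetical Python sketch below, which is a common teaching simplification rather than real molecular dynamics, each "collision" picks two random molecules and splits their combined kinetic energy between them at a random point. Total energy is conserved, the originally cold and originally hot molecules end up with about the same average energy, and yet individual energies remain spread out above and below that average:

```python
import random
import statistics

def equilibrate(n_each=2000, cold=1.0, hot=9.0, collisions=100_000, seed=7):
    """Toy kinetic-exchange model of a cold gas mixing with a hot gas.

    Molecules 0..n_each-1 start cold and the rest start hot. Each collision
    picks two molecules at random and redistributes their total energy
    between them by a uniformly random split, so energy is conserved.
    """
    rng = random.Random(seed)
    energy = [cold] * n_each + [hot] * n_each
    for _ in range(collisions):
        i, j = rng.sample(range(len(energy)), 2)
        pooled = energy[i] + energy[j]
        share = rng.random()
        energy[i], energy[j] = share * pooled, (1 - share) * pooled
    return energy[:n_each], energy[n_each:]

if __name__ == "__main__":
    was_cold, was_hot = equilibrate()
    print(f"mean energy, originally cold molecules: {statistics.mean(was_cold):.2f}")
    print(f"mean energy, originally hot molecules:  {statistics.mean(was_hot):.2f}")
    everyone = was_cold + was_hot
    print(f"fastest vs slowest molecule: {max(everyone):.2f} vs {min(everyone):.4f}")
```

Running the model longer never separates the two groups again, which is the statistical face of the second law described above.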

In 1867, Maxwell proposed a paradox along these lines known as Maxwell’s Demon. Imagine that we place a small demon at the opening between the two compartments and install a small trap door at this location. We instruct the demon to open the trap door whenever he sees a fast-moving molecule in the left compartment approach the opening to allow the fast-moving molecule to enter the right compartment. Similarly, when he sees a slow-moving molecule from the right compartment approach the opening, he opens the trap door to allow the low-temperature molecule to enter the left compartment. After some period of time, we will find that all of the fast-moving high-temperature molecules are in the right compartment and all of the slow-moving low-temperature molecules are in the left compartment. Thus the left compartment will become colder and the right compartment will become hotter in violation of the second law of thermodynamics (the gas would go from Figure 6 to Figure 5 above). With the aid of such a demon, we could run a heat engine between the two compartments to extract mechanical energy from the right compartment containing the hot gas as we dumped heat into the colder left compartment. This really bothered Maxwell, and he never found a satisfactory solution to his paradox. This paradox also did not help 19th-century physicists become more comfortable with the idea of atoms and molecules.
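Purely as an illustration, here is a hypothetical Python sketch of the Demon's rule. Both compartments start at the same temperature (molecular kinetic energies drawn from the same distribution), every molecule approaches the trap door once, and the Demon compares each molecule's energy with the overall average. The sorting leaves the left compartment colder and the right one hotter, which is exactly the outcome the second law forbids unless the Demon's knowledge carries some hidden cost:

```python
import random
import statistics

def demon_sort(n_per_side=2000, seed=3):
    """Toy Maxwell's Demon sorting molecules by kinetic energy at a trap door.

    Both compartments start with the same energy distribution (same temperature).
    The Demon lets fast molecules pass left -> right and slow ones right -> left.
    """
    rng = random.Random(seed)
    left = [rng.expovariate(1.0) for _ in range(n_per_side)]   # mean energy 1.0
    right = [rng.expovariate(1.0) for _ in range(n_per_side)]
    cutoff = statistics.mean(left + right)    # the Demon's idea of "fast"

    new_left, new_right = [], []
    for e in left:    # fast molecules on the left are let through to the right
        (new_right if e > cutoff else new_left).append(e)
    for e in right:   # slow molecules on the right are let through to the left
        (new_left if e <= cutoff else new_right).append(e)
    return left, right, new_left, new_right, cutoff

if __name__ == "__main__":
    left, right, new_left, new_right, _ = demon_sort()
    print(f"before: left {statistics.mean(left):.2f}, right {statistics.mean(right):.2f}")
    print(f"after:  left {statistics.mean(new_left):.2f}, right {statistics.mean(new_right):.2f}")
```

The sketch quietly hands the Demon every molecule's energy for free; that free knowledge is precisely what Szilárd and Brillouin would later charge for.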

While an instructor and researcher at the University of Berlin, Leo Szilárd published a paper in 1929, On the Decrease of Entropy in a Thermodynamic System by the Intervention of Intelligent Beings.

Figure 7 – In 1929, Szilárd published a paper explaining that the process of the Demon knowing which side of a cylinder a molecule was in must produce some additional entropy to preserve the second law of thermodynamics.

In his 1929 paper, Szilárd showed that, with the help of Maxwell's Demon, you could apparently build a 100% efficient steam engine in conflict with the second law of thermodynamics. Imagine a cylinder with just one water molecule bouncing around in it as in Figure 7(a). First, the Demon figures out whether the water molecule is in the left half or the right half of the cylinder. If he sees the water molecule in the right half of the cylinder as in Figure 7(b), he quickly installs a piston connected to a weight via a cord and pulley. As the water molecule bounces off the piston in Figure 7(c) and moves the piston to the left, it slowly raises the weight and does some useful work on it. In the process of moving the piston to the left, the water molecule must lose kinetic energy in keeping with the first law of thermodynamics and slow down to a lower velocity and temperature than the atoms in the surrounding walls of the cylinder. When the piston has finally reached the far left end of the cylinder, it is removed from the cylinder in preparation for the next cycle of the engine. The single water molecule then bounces around off the walls of the cylinder as in Figure 7(a), and in the process picks up additional kinetic energy from the jiggling atoms in the walls of the cylinder as they kick the water molecule back into the cylinder each time it bounces off the cylinder walls. Eventually, the single water molecule will once again be in thermal equilibrium with the jiggling atoms in the walls of the cylinder and will be traveling, on average, at the same velocity it originally had before it pushed the piston to the left. So this proposed engine takes the ambient high-entropy thermal energy of the cylinder's surroundings and converts it into the useful low-entropy potential energy of a lifted weight. Notice that the first law of thermodynamics is preserved. The engine does not create energy; it simply converts the high-entropy thermal energy of the random motions of the atoms in the cylinder walls into useful low-entropy potential energy, and that does violate the second law of thermodynamics. Szilárd's solution to this paradox was simple. He proposed that the process of the Demon figuring out whether the water molecule was in the left-hand side or the right-hand side of the cylinder must cause the entropy of the Universe to increase. So "knowing" which side of the cylinder the water molecule was in must come with a price.
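For a sense of scale, the textbook idealization of Szilárd's engine treats the push on the piston as a slow, isothermal expansion of a one-molecule gas from half the cylinder to the whole cylinder, which yields at most W = kT ln 2 of work per cycle. The corresponding entropy, W/T = k ln 2, matches the lower limit for an observation given in Brillouin's abstract quoted below. A minimal Python sketch, assuming a room temperature of 300 K (an assumption, not a figure from the text):

```python
import math

BOLTZMANN_K = 1.380649e-23   # J/K (exact value in the 2019 SI)

def szilard_work(temperature_kelvin):
    """Maximum work per cycle of an idealized one-molecule Szilard engine:
    isothermal expansion from V/2 to V gives W = k T ln(V / (V/2)) = k T ln 2."""
    return BOLTZMANN_K * temperature_kelvin * math.log(2)

T = 300.0                       # assumed room temperature, K
work = szilard_work(T)
entropy_drop = work / T         # entropy drawn from the cylinder walls, J/K

print(f"work per cycle at {T:.0f} K: {work:.3e} J")
print(f"entropy the Demon's measurement must pay back: {entropy_drop:.3e} J/K (k ln 2)")
```

About 2.87 × 10⁻²¹ joules per cycle: tiny, but any nonzero free lunch would be enough to break the second law.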

Finally, in 1953 Leon Brillouin published a paper with a thought experiment explaining that Maxwell's Demon required some Information to tell whether a molecule was moving slowly or quickly. Brillouin defined this Information as negentropy, or negative entropy, and found that the Demon could only obtain Information about the velocities of the oncoming molecules by bouncing photons off them. Bouncing photons off the molecules increased the total entropy of the entire system whenever the Demon determined whether a molecule was moving slowly or quickly. So Maxwell's Demon was really not a paradox after all, since even the Demon could not violate the second law of thermodynamics. Leon Brillouin's 1953 paper is available for purchase at:

Brillouin, L. (1953) The Negentropy Principle of Information. Journal of Applied Physics, 24, 1152-1163

https://doi.org/10.1063/1.1721463

But for the frugal folk, here is the abstract for Leon Brillouin's famous 1953 paper:

The Negentropy Principle of Information

Abstract

The statistical definition of Information is compared with Boltzmann's formula for entropy. The immediate result is that Information I corresponds to a negative term in the total entropy S of a system.

S = S0 - I

A generalized second principle states that S must always increase. If an experiment yields an increase ΔI of the Information concerning a physical system, it must be paid for by a larger increase ΔS0 in the entropy of the system and its surrounding laboratory. The efficiency ε of the experiment is defined as ε = ΔI/ΔS0 ≤ 1. Moreover, there is a lower limit k ln2 (k, Boltzmann's constant) for the ΔS0 required in an observation. Some specific examples are discussed: length or distance measurements, time measurements, observations under a microscope. In all cases it is found that higher accuracy always means lower efficiency. The Information ΔI increases as the logarithm of the accuracy, while ΔS0 goes up faster than the accuracy itself. Exceptional circumstances arise when extremely small distances (of the order of nuclear dimensions) have to be measured, in which case the efficiency drops to exceedingly low values. This stupendous increase in the cost of observation is a new factor that should probably be included in the quantum theory.

In the equation above, Brillouin proposed that Information is a negative form of entropy: when an experiment yields some Information about a system, the total amount of entropy in the Universe must increase. Information is then essentially the elimination of microstates in which the system might be found. From the above analysis, a change in Information ΔI is the difference between the initial and final entropies of a system after a determination about the system has been made.

ΔI = Si - Sf

Si = initial entropy

Sf = final entropy

where both entropies are calculated using the definition of entropy from the statistical mechanics of Ludwig Boltzmann. So we need to back up in time a bit and take a look at that next.

In 1866, Ludwig Boltzmann began work to extend Maxwell's statistical approach. Boltzmann's goal was to be able to explain all the macroscopic thermodynamic properties of bulk matter in terms of the statistical analysis of microstates. Boltzmann proposed that the molecules in a gas occupied a very large number of possible energy states called microstates, and for any particular energy level of a gas, there were a huge number of possible microstates producing the same macroscopic energy. The probability that the gas was in any one particular microstate was assumed to be the same for all microstates. In 1877, Boltzmann was able to relate the thermodynamic concept of entropy to the number of these microstates with the formula:

S = k ln(N)

S = entropy

N = number of microstates

k = Boltzmann’s constant

These ideas laid the foundations of statistical mechanics and its explanation of thermodynamics in terms of the statistics of the interactions of many tiny things.
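One reason Boltzmann's formula uses a logarithm is that entropy should add when two independent systems are combined, while their numbers of microstates multiply. A small Python sketch that checks this with Boltzmann's constant in SI units; the microstate counts are arbitrary values chosen only for illustration:

```python
import math

BOLTZMANN_K = 1.380649e-23   # J/K

def boltzmann_entropy(n_microstates, k=BOLTZMANN_K):
    """S = k ln(N) for a macrostate realized by N equally likely microstates."""
    return k * math.log(n_microstates)

n_a, n_b = 10**20, 10**25                   # arbitrary microstate counts
s_a, s_b = boltzmann_entropy(n_a), boltzmann_entropy(n_b)
s_combined = boltzmann_entropy(n_a * n_b)   # independent systems: counts multiply

print(f"S_A + S_B    = {s_a + s_b:.6e} J/K")
print(f"S(N_A * N_B) = {s_combined:.6e} J/K")
```

Note also that a macrostate with a single microstate (N = 1) has zero entropy.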

The Physics of Poker

Boltzmann’s logic might be a little hard to follow, so let’s use an example to provide some insight by delving into the physics of poker. For this example, we will bend the formal rules of poker a bit. In this version of poker, you are dealt 5 cards as usual. The normal rank of the poker hands still holds and is listed below. However, in this version of poker, all hands of a similar rank are considered to be equal. Thus a full house consisting of a Q-Q-Q-9-9 is considered to be equal to a full house consisting of a 6-6-6-2-2 and both hands beat any flush. We will think of the rank of a poker hand as a macrostate. For example, we might be dealt 5 cards, J-J-J-3-6, and end up with the macrostate of three of a kind. The particular J-J-J-3-6 that we hold, including the suit of each card, would be considered a microstate. Thus for any particular rank of hand or macrostate, such as three of a kind, we would find a number of microstates. For example, for the macrostate of three of a kind, there are 54,912 possible microstates or hands that constitute the macrostate of three of a kind.

Rank of Poker Hands

Royal Flush - A-K-Q-J-10 all the same suit

Straight Flush - All five cards are of the same suit and in sequence

Four of a Kind - Such as 7-7-7-7

Full House - Three cards of one rank and two cards of another such as K-K-K-4-4

Flush - Five cards of the same suit, but not in sequence

Straight - Five cards in sequence, but not the same suit

Three of a Kind - Such as 5-5-5-7-3

Two Pair - Such as Q-Q-7-7-4

One Pair - Such as Q-Q-3-J-10

High Card - None of the above, such as A-J-8-4-2

Next, we create a table using Boltzmann’s equation to calculate the entropy of each hand. For this example, we set Boltzmann’s constant k = 1, since k is just a “fudge factor” used to get the units of entropy using Boltzmann’s equation to come out to those used by the thermodynamic formulas of entropy.

Thus for three of a kind where N = 54,912 possible microstates or hands:

S = ln(N)

S = ln(54,912) = 10.9134872

| Hand | Number of Microstates N | Probability | Entropy = LN(N) | Information Change = Initial Entropy - Final Entropy |

| Royal Flush | 4 | 1.54 x 10-06 | 1.3862944 | 13.3843291 |

| Straight Flush | 40 | 1.50 x 10-05 | 3.6888795 | 11.0817440 |

| Four of a Kind | 624 | 2.40 x 10-04 | 6.4361504 | 8.3344731 |

| Full House | 3,744 | 1.44 x 10-03 | 8.2279098 | 6.5427136 |

| Flush | 5,108 | 2.00 x 10-03 | 8.5385632 | 6.2320602 |

| Straight | 10,200 | 3.90x 10-03 | 9.2301430 | 5.5404805 |

| Three of a Kind | 54,912 | 2.11 x 10-02 | 10.9134872 | 3.8571363 |

| Two Pairs | 123,552 | 4.75 x 10-02 | 11.7244174 | 3.0462061 |

| Pair | 1,098,240 | 4.23 x 10-01 | 13.9092195 | 0.8614040 |

| High Card | 1,302,540 | 5.01 x 10-01 | 14.0798268 | 0.6907967 |

| Total Hands | 2,598,964 | 1.00 | 14.7706235 | 0.0000000 |

Figure 8 – In the table above, each poker hand is a macrostate that has a number of microstates that all define the same macrostate. Given N, the number of microstates for each macrostate, we can then calculate its entropy using Boltzmann's definition of entropy S = ln(N) and its Information content using Leon Brillouin’s concept of Information ΔI = Si - Sf. The above table is available as an Excel spreadsheet on my Microsoft One Drive at Entropy .

Examine the above table. Note that higher-ranked hands have more order, less entropy, and are less probable than the lower-ranked hands. For example, a straight flush with all cards the same color, same suit, and in numerical order has an entropy = 3.6889, while a pair with two cards of the same value has an entropy = 13.909. A hand that is a straight flush appears more orderly than a hand that contains only a pair and is certainly less probable. A pair is more probable than a straight flush because more microstates produce the macrostate of a pair (1,098,240) than there are microstates that produce the macrostate of a straight flush (40). In general, probable things have lots of entropy and disorder, while improbable things, like perfectly bug-free software, have little entropy or disorder. In thermodynamics, entropy is a measure of the depreciation of a macroscopic system like how well mixed two gases are, while in statistical mechanics entropy is a measure of the microscopic disorder of a system, like the microscopic mixing of gas molecules. A pure container of oxygen gas will mix with a pure container of nitrogen gas because there are more arrangements or microstates for the mixture of the oxygen and nitrogen molecules than there are arrangements or microstates for one container of pure oxygen and the other of pure nitrogen molecules. In statistical mechanics, a neat room tends to degenerate into a messy room and increase in entropy because there are more ways to mess up a room than there are ways to tidy it up. In statistical mechanics, the second law of thermodynamics results because systems with lots of entropy and disorder are more probable than systems with little entropy or disorder, so entropy naturally tends to increase with time.

Getting back to Leon Brillouin’s concept of Information as a form of negative entropy, let’s compute the amount of Information you convey when you tell your opponent what hand you hold. When you tell your opponent that you have a straight flush, you eliminate more microstates than when you tell him that you have a pair, so telling him that you have a straight flush conveys more Information than telling him you hold a pair. For example, there are a total of 2,598,964 possible poker hands or microstates for a 5 card hand, but only 40 hands or microstates constitute the macrostate of a straight flush.

Straight Flush ΔI = Si – Sf = ln(2,598,960) – ln(40) = 11.082

For a pair we get:

Pair ΔI = Si – Sf = ln(2,598,960) – ln(1,098,240) = 0.8614

When you tell your opponent that you have a straight flush, you deliver 11.082 units of Information, while when you tell him that you have a pair, you only deliver 0.8614 units of Information. Clearly, when your opponent knows that you have a straight flush, he knows more about your hand than if you tell him that you have a pair.
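Brillouin's ΔI for the two hands can be checked the same way. This is a minimal sketch assuming ΔI = ln(Ntotal) - ln(Nhand) with natural logarithms, as in the calculations above; the function name brillouin_information is illustrative.

```python
from math import comb, log

TOTAL_HANDS = comb(52, 5)  # all possible 5-card hands
HAND_COUNTS = {"straight flush": 40, "one pair": 1_098_240}

def brillouin_information(n_initial: int, n_final: int) -> float:
    """Delta I = S_i - S_f = ln(N_i) - ln(N_f), in natural-log units."""
    return log(n_initial) - log(n_final)

for hand, n in HAND_COUNTS.items():
    print(f"{hand}: Delta I = {brillouin_information(TOTAL_HANDS, n):.4f}")
```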

Comparing Leon Brillouin’s Concept of Information to the Concept of Functional Information

From the above, we see that Leon Brillouin’s concept of Information dealt with determining how rare the results of a particular measurement were by determining how far the measurement was from the normal situation. This would essentially be the height of the intersecting plane in Figure 2. On the other hand, Functional Information is a measurement of the volume of the cone above the intersecting plane in Figure 2.

But before comparing the two, let's do a few mathematical operations on the definition of Functional Information. Recall that Functional Information is defined in terms of the fraction of RNA strands or programs that can perform a given function, namely the fraction of things above the intersecting plane in Figure 2:

Functional Information = - log2 ( Na / Nt )

where Na = number of RNA strands or programs above the intersecting plane of Figure 2

where Nt = total number of RNA strands or programs in Figure 2

Now using the magic of logarithms:

Functional Information = - log2 ( Na / Nt ) = - ( log2 ( Na) - log2 ( Nt ) ) = log2 ( Nt ) - log2 ( Na )

Now there really is nothing special about using the natural base-e logarithm ln(x) or the base-2 logarithm log2(x). Today, people sometimes like to use the base-2 logarithm log2(x) because we have computers that use base-2 arithmetic. But Boltzmann did not have a computer back in the 19th century, so he used the common base-e natural logarithm ln(x) of the day. The mathematical constant e was first discovered in 1683 by Jacob Bernoulli while he was studying compound interest. He wondered what would happen if interest were compounded continuously, meaning an infinite number of times per year. The limit of this process led to the value of e, approximately 2.71828.

Now since ln(x) = 0.6931471806 log2(x), it follows that log2(x) = ln(x) / 0.6931471806 = 1.442695041 ln(x), and we can rewrite the equation as:

Functional Information = 1.442695041 ( ln ( Nt ) - ln ( Na ) )

Since the 1.442695041 is just a constant scale factor that converts natural-log units into bits, we can just set it to "1" to obtain:

Functional Information = ln ( Nt ) - ln ( Na )
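A quick numerical check shows that the base-2 and natural-log versions of Functional Information differ only by a constant factor of 1/ln(2) ≈ 1.4427. The counts of 1,000 functional programs out of 2^20 candidates below are hypothetical numbers chosen purely for illustration, as are the function names.

```python
from math import log, log2

def functional_information_bits(n_functional: int, n_total: int) -> float:
    """FI = -log2(Na / Nt), measured in bits."""
    return -log2(n_functional / n_total)

def functional_information_nats(n_functional: int, n_total: int) -> float:
    """The same quantity with natural logs: ln(Nt) - ln(Na)."""
    return log(n_total) - log(n_functional)

# Hypothetical example: 1,000 working programs out of 2**20 candidate programs.
na, nt = 1_000, 2**20
bits = functional_information_bits(na, nt)
nats = functional_information_nats(na, nt)
print(f"{bits:.4f} bits = {nats:.4f} nats; ratio = {bits / nats:.10f}")  # ratio is 1/ln(2)
```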

Now we can see that Functional Information is very similar to Brillouin's Information for poker:

Brillouin Information = ln ( Ntotal hands ) - ln ( Nyour hand )

Functional Information essentially compares the number of poker hands that are equal to or greater than your particular poker hand relative to all other possible poker hands, while Brillouin Information just compares your particular poker hand to all possible poker hands. The good news is that Functional Information does not get tangled up with the ideas of entropy and information used by network people.
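To make this distinction concrete, here is a minimal Python sketch that computes both quantities for every hand category. The hand-category counts are the standard five-card poker counts, supplied here for illustration rather than taken from the post's table; both quantities use natural logarithms, matching the ln form above; and the function names are hypothetical.

```python
from math import comb, log

TOTAL_HANDS = comb(52, 5)

# Standard counts of 5-card hand categories, best first
# (the straight flush count of 40 includes the 4 royal flushes).
CATEGORY_COUNTS = [
    ("straight flush", 40),
    ("four of a kind", 624),
    ("full house", 3_744),
    ("flush", 5_108),
    ("straight", 10_200),
    ("three of a kind", 54_912),
    ("two pair", 123_552),
    ("one pair", 1_098_240),
    ("high card", 1_302_540),
]
assert sum(n for _, n in CATEGORY_COUNTS) == TOTAL_HANDS

def brillouin_information(hand: str) -> float:
    """ln(N_total) - ln(N_hand): compares only your own hand category to all hands."""
    n_hand = dict(CATEGORY_COUNTS)[hand]
    return log(TOTAL_HANDS) - log(n_hand)

def functional_information(hand: str) -> float:
    """ln(N_total) - ln(N_at_least): counts every hand equal to or better than yours."""
    n_at_least = 0
    for name, n in CATEGORY_COUNTS:
        n_at_least += n
        if name == hand:
            break
    return log(TOTAL_HANDS) - log(n_at_least)

for hand, _ in CATEGORY_COUNTS:
    print(f"{hand:15s} Brillouin = {brillouin_information(hand):7.4f}"
          f"  FI = {functional_information(hand):7.4f}")
```

For the best hand the two measures agree, since no hand beats a straight flush, while for weaker hands Functional Information is smaller because many more hands qualify as "equal to or better".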

The Very Sordid History of Entropy and Information in the Information Theory Used by Telecommunications

Claude Shannon went to work at Bell Labs in 1941 where he worked on cryptography and secret communications for the war effort. Claude Shannon was a true genius and is credited as being the father of Information Theory. But Claude Shannon was really trying to be the father of digital Communication Theory. In 1948, Claude Shannon published a very famous paper that got it all started.

A Mathematical Theory of Communication

https://people.math.harvard.edu/~ctm/home/text/others/shannon/entropy/entropy.pdf

Here is the very first paragraph from that famous paper:

Introduction

The recent development of various methods of modulation such as PCM and PPM which exchange bandwidth for signal-to-noise ratio has intensified the interest in a general theory of communication. A basis for such a theory is contained in the important papers of Nyquist and Hartley on this subject. In the present paper we will extend the theory to include a number of new factors, in particular the effect of noise in the channel, and the savings possible due to the statistical structure of the original message and due to the nature of the final destination of the information. The fundamental problem of communication is that of reproducing at one point either exactly or approximately a message selected at another point. Frequently the messages have meaning; that is they refer to or are correlated according to some system with certain physical or conceptual entities. These semantic aspects of communication are irrelevant to the engineering problem. The significant aspect is that the actual message is one selected from a set of possible messages. The system must be designed to operate for each possible selection, not just the one which will actually be chosen since this is unknown at the time of design. If the number of messages in the set is finite then this number or any monotonic function of this number can be regarded as a measure of the information produced when one message is chosen from the set, all choices being equally likely.

Figure 9 – Above is the very first figure in Claude Shannon's very famous 1948 paper A Mathematical Theory of Communication.

Notice that the title of the paper is A Mathematical Theory of Communication and the very first diagram in the paper describes the engineering problem he was trying to solve. Claude Shannon was trying to figure out a way to send digital messages containing electrical bursts of 1s and 0s over a noisy transmission line. As Shannon explicitly states in the quote above, "These semantic aspects of communication are irrelevant to the engineering problem." Claude Shannon did not care at all about the Information in the message. The message could be the Gettysburg Address or pure gibberish. It did not matter. What mattered was being able to manipulate the noisy message of 1s and 0s so that the received message exactly matched the transmitted message. You see, at the time, AT&T was essentially only transmitting analog telephone conversations. A little noise on an analog telephone line is just like listening to an old scratchy vinyl record. It might be a little bothersome, but still understandable. However, error correction is very important when transmitting digital messages consisting of binary 1s and 0s. For example, both of the messages down below are encoded with a total of 16 1s and 0s:

0000100000000000

1001110010100101

However, the first message consists mainly of 0s, so it seems that it should be easier to apply some kind of error detection and correction scheme to the first message, compared to the second message, because the 1s are so rare in the first message. Doing the same thing for the second message should be much harder because the second message is composed of eight 0s and eight 1s. For example, simply transmitting the 16-bit message 5 times over and over should easily do the trick for the first message. But for the second message, you might have to repeat the 16 bits 10 times to make sure you could figure out the 16 bits in the presence of noise that could sometimes flip a 1 to a 0 or a 0 to a 1. This led Shannon to conclude that the second message must contain more Information than the first message. He also concluded that the 1s in the first message must contain more Information than the 0s because the 1s were much less probable than the 0s, and consequently, the arrival of a 1 had much more significance than the arrival of a 0 in the message. Using this line of reasoning, Shannon proposed that if the probability of receiving a 0 in a message was p and the probability of receiving a 1 in a message was q, then the Information H in the arrival of a single 1 or 0 must not simply be one bit of Information. Instead, it must depend upon the probabilities p and q of the arriving 1s and 0s:

H(p) = - p log2(p) - q log2(q)

Since in this case the message is only composed of 1s and 0s, it follows that:

q = 1 - p

Figure 10 shows a plot of the Information H(p) of the arrival of a 1 or 0 as a function of p the probability of a 0 arriving in a message when the message is only composed of 1s and 0s:

Figure 10 - A plot of Shannon’s Information Entropy equation H(p) versus the probability p of finding a 0 in a message composed solely of 1s and 0s

Notice that the graph peaks at a value of 1.0 when p = 0.50 and has a value of zero when p = 0.0 or p = 1.0. Now if p = 0.50, that means that q = 0.50 too because:

q = 1 - p

Substituting p = 0.50 and q = 0.50 into the above equation yields the Information content of an arriving 0 or 1 in a message, and we find that it is equal to one full bit of Information:

H(0.50) = -(0.50) log2(0.50) - (0.50) log2(0.50) = -log2(0.50) = 1

And we see that the value of H(0.50) on the graph in Figure 10 is indeed 1 bit.

Now suppose the arriving message consists only of 0s. In that case, p = 1.0 and q = 0.0, and the Information content of an incoming 0 or 1 is H(1.0), which calculates out to a value of 0.0 in our equation (using the standard convention that 0 log2(0) = 0) and also in the plot of H(p) in Figure 10. This simply states that a message consisting simply of arriving 0s contains no Information at all. Similarly, a message consisting only of 1s would have a p = 0.0 and a q = 1.0, and our equation and plot calculate a value of H(0.0) = 0.0 too, meaning that a message simply consisting of 1s conveys no Information at all as well. What we see here is that seemingly a “messy” message consisting of many 1s and 0s conveys lots of Information, while a “neat” message consisting solely of 1s or 0s conveys no Information at all. When the probability of receiving a 1 or 0 in a message is 0.50 – 0.50, each arriving bit contains one full bit of Information, but for any other mix of probabilities, like 0.80 – 0.20, each arriving bit contains less than a full bit of Information. From the graph in Figure 10, we see that when a message has a probability mix of 0.80 – 0.20, each arriving 1 or 0 only contains about 0.72 bits of Information. The graph also shows that it does not matter whether the 1s or the 0s are the more numerous bits because the graph is symmetric about the point p = 0.50, so a 0.20 – 0.80 mix of 1s and 0s also only delivers 0.72 bits of Information for each arriving 1 or 0.
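Shannon's H(p) is easy to evaluate directly. In this minimal Python sketch, binary_entropy is a hypothetical helper name, and it applies the standard convention that 0 × log2(0) = 0, which is what makes H(0.0) and H(1.0) come out to zero.

```python
from math import log2

def binary_entropy(p: float) -> float:
    """Shannon's H(p) = -p log2(p) - q log2(q) with q = 1 - p, in bits per symbol.

    Terms with a zero probability are skipped, i.e. 0 * log2(0) is taken as 0.
    """
    q = 1.0 - p
    return sum(-x * log2(x) for x in (p, q) if x > 0)

for p in (0.0, 0.2, 0.5, 0.8, 1.0):
    print(f"H({p:.2f}) = {binary_entropy(p):.4f} bits")
```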

Claude Shannon went on to generalize his formula for H(p) to include cases where there were more than two symbols used to encode a message:

H(p) = - Σ p(x) log2 p(x)

The above formula says that if you use 2, 3, 4, 5 … different symbols to encode Information, you multiply the probability of each symbol in the message by the log2 of that probability, add up the results for all of the symbols, and then change the sign. For example, suppose we choose the symbols 00, 01, 10, and 11 to send messages and that the probabilities of sending a 1 or a 0 are both 0.50. That means the probability p for each symbol 00, 01, 10 and 11 is 0.25 because each symbol is equally likely. So how much Information does each of these two-digit symbols now contain? If we substitute the values into Shannon’s equation, we get an answer of 2 full bits of Information:

H(0.25, 0.25, 0.25, 0.25) = - 0.25 log2(0.25) - 0.25 log2(0.25) - 0.25 log2(0.25) - 0.25 log2(0.25) = - log2(0.25) = 2

which makes sense because each symbol is composed of two one-bit symbols. In general, if all the symbols we use are N bits long and all of them are equally likely, each symbol will contain N bits of Information. For example, in biology, genes are encoded in DNA using four bases A, C, T and G. A codon consists of 3 bases, and each codon codes for a particular amino acid or is an end-of-file Stop codon. On average, prokaryotic bacterial genes code for about 400 amino acids using 1200 base pairs. If we assume that all four bases, A, C, T and G, are equally likely at every position in a gene, namely with a probability of 0.25 each, then we can use our analysis above to conclude that each base contains 2 bits of Information because we are using 4 symbols to encode the Information. That means a 3-base codon contains 6 bits of Information, and the 1200 bases of a gene coding for a protein of 400 amino acids contain 2400 bits of Information, or 300 bytes of Information in IT speak.
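The general formula and the gene arithmetic above can be sketched as follows. This assumes, as the text does, that the four bases are equally likely and independent, which real genomes only approximate; the Stop codon is ignored, and the helper name shannon_entropy is illustrative.

```python
from math import log2

def shannon_entropy(probabilities: list[float]) -> float:
    """H = -sum(p(x) * log2 p(x)) over all symbols, in bits per symbol."""
    return sum(-p * log2(p) for p in probabilities if p > 0)

# Four equally likely two-bit symbols: 00, 01, 10, 11.
two_bit_symbols = shannon_entropy([0.25] * 4)

# Four equally likely DNA bases A, C, G, T (an idealized assumption).
bits_per_base = shannon_entropy([0.25] * 4)
bases_per_codon = 3
amino_acids = 400  # a typical bacterial protein, per the text

bits_per_codon = bits_per_base * bases_per_codon
gene_bits = bits_per_codon * amino_acids
print(two_bit_symbols, bits_per_codon, gene_bits, gene_bits / 8)
```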

Entropy and Information Confusion

Now here is where the confusion comes in about the nature of Information. The story goes that Claude Shannon was not quite sure what to call his formula for H(p). Then one day in 1949 he happened to visit the mathematician and early computer pioneer John von Neumann, and that is when Information and entropy got mixed together in communications theory:

“My greatest concern was what to call it. I thought of calling it ‘information’, but the word was overly used, so I decided to call it ‘uncertainty’. When I discussed it with John von Neumann, he had a better idea. Von Neumann told me, ‘You should call it entropy, for two reasons. In the first place, your uncertainty function has been used in statistical mechanics under that name, so it already has a name. In the second place, and more important, nobody knows what entropy really is, so in a debate you will always have the advantage.’”

Unfortunately, with that piece of advice, we ended up equating Information with entropy in communications theory.

So in Claude Shannon's Information Theory people calculate the entropy, or Information content of a message, by mathematically determining how much “surprise” there is in a message. For example, in Claude Shannon's Information Theory, if I transmit a binary message consisting only of 1s or only of 0s, I transmit no useful Information because the person on the receiving end only sees a string of 1s or a string of 0s, and there is no “surprise” in the message. For example, the messages “1111111111” or “0000000000” are both equally boring and predictable, with no real “surprise” or Information content at all. Consequently, the entropy, or Information content, of each bit in these messages is zero, and the total Information of all the transmitted bits in the messages is also zero because they are both totally predictable and contain no “surprise”. On the other hand, if I transmit a signal containing an equal number of 1s and 0s, there can be lots of “surprise” in the message because nobody can really tell in advance what the next bit will bring, and each bit in the message then has an entropy, or Information content, of one full bit of Information.
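One rough way to see the "surprise" idea in action is to estimate the entropy per bit of a single message from how often each symbol appears in it. This is a simplified plug-in estimate, assumed here for illustration rather than Shannon's full treatment of a message source, and the function name is hypothetical.

```python
from collections import Counter
from math import log2

def empirical_entropy_per_symbol(message: str) -> float:
    """Estimate Shannon entropy (bits per symbol) from symbol frequencies in one message.

    Each symbol is treated as independent, with its observed frequency used as
    its probability.
    """
    counts = Counter(message)
    n = len(message)
    return sum(-(c / n) * log2(c / n) for c in counts.values())

for msg in ("1111111111", "0000000000", "1010011100"):
    h = empirical_entropy_per_symbol(msg)
    print(f"{msg}: {h:.3f} bits/symbol, {h * len(msg):.1f} bits total")
```

Because this estimate only looks at symbol frequencies, a perfectly predictable alternating message like "1010101010" would also score 1 bit per symbol; Shannon's theory handles such patterns through the statistics of the message source rather than single-symbol counts.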

This concept of entropy and Information content is very useful for people who work with transmission networks and on error detection and correction algorithms for those networks, but it is not very useful for IT professionals. For example, suppose you had a 10-bit software configuration file and the only “correct” configuration for your particular installation consisted of 10 1s in a row like this “1111111111”. In Claude Shannon's Information Theory, that configuration file contains no Information because it contains no “surprise”. However, in Leon Brillouin’s formulation of Information, there would be a total of N = 2^10 = 1,024 possible microstates or configuration files for the 10-bit configuration file, and since the only “correct” version of the configuration file for your installation is “1111111111”, there is only N = 1 microstate that meets that condition.

Using the formulas above we can now calculate the entropy of our single “correct” 10-bit configuration file and the entropy of all possible 10-bit configuration files:

Boltzmann's Definition of Entropy

S = ln(N)

N = Number of microstates

Leon Brillouin’s Definition of Information

∆Information = Si - Sf

Si = initial entropy

Sf = final entropy

For our configuration file, these give:

Sf = ln(1) = 0

Si = ln(2^10) = ln(1024) = 6.93147

So using Leon Brillouin’s formulation for the concept of Information the Information content of a single “correct” 10-bit configuration file is:

Si - Sf = 6.93147 – 0 = 6.93147

which, if you look at the table in Figure 8, contains a little more Information than drawing a full house in poker without drawing any additional cards and would be even less likely for you to stumble upon by accident than drawing a full house.
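The configuration-file calculation can be written out in a few lines. In this minimal sketch, the 10-bit file and its single working configuration come from the example above, while the full house count of 3,744 hands is the standard five-card figure, included here only for comparison.

```python
from math import comb, log

N_BITS = 10
n_initial = 2 ** N_BITS  # every possible 10-bit configuration file: 1,024 microstates
n_final = 1              # only "1111111111" works for this hypothetical installation

s_initial = log(n_initial)  # S = ln(N), with Boltzmann's constant k dropped
s_final = log(n_final)      # ln(1) = 0
delta_information = s_initial - s_final

# For comparison: being dealt a full house (3,744 of the C(52, 5) possible hands).
full_house_information = log(comb(52, 5)) - log(3_744)

print(f"Si = {s_initial:.5f}, Sf = {s_final:.5f}, Delta I = {delta_information:.5f}")
print(f"full house Delta I = {full_house_information:.5f}")
```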

So in Claude Shannon's Information Theory, a very “buggy” 10 MB executable program file would contain just as much Information and would require just as many network resources to transmit as transmitting a bug-free 10 MB executable program file. Clearly, Claude Shannon's Information Theory formulations for the concepts of Information and entropy are less useful for IT professionals than are Leon Brillouin’s formulations for the concepts of Information and entropy.

What John von Neumann was trying to tell Claude Shannon was that his formula for H(p) looked very much like Boltzmann’s equation for entropy:

S = k ln(N)

The main difference was that Shannon was using a base-2 logarithm, log2, in his formula, while Boltzmann used a base-e natural logarithm, ln or loge, in his formula for entropy. But given the nature of logarithms, that really does not matter much.
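For N equally likely messages, each has p = 1/N, and Shannon's formula reduces to H = log2(N), which differs from Boltzmann's ln(N) only by the constant factor 1/ln(2). Here is a minimal sketch of that check, with an illustrative shannon_entropy helper:

```python
from math import log, log2

def shannon_entropy(probabilities):
    """H = -sum(p * log2(p)) over all possible messages, in bits."""
    return sum(-p * log2(p) for p in probabilities if p > 0)

for n in (2, 4, 1024):
    uniform = [1.0 / n] * n  # N equally likely messages
    h_bits = shannon_entropy(uniform)
    # H equals log2(N), which is just ln(N) rescaled by 1/ln(2).
    print(n, round(h_bits, 10), round(log2(n), 10), round(log(n) / log(2), 10))
```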

The main point of confusion arises because in communications theory the concepts of Information and entropy pertain to encoding and transmitting Information, while in IT and many other disciplines, like biology, we are more interested in the amounts of useful and useless Information in a message. For example, in communications theory, the code for a buggy 300,000-byte program contains just as much Information as a totally bug-free 300,000-byte version of the same program and would take just as much bandwidth and network resources to transmit accurately over a noisy channel as transmitting the bug-free version of the program. Similarly, in communications theory, a poker hand consisting of four Aces and a 2 of clubs contains just as much Information and is just as “valuable” as any other 5-card poker hand because the odds of being dealt any particular card are 1/52 for all the cards in a deck, and therefore, all messages consisting of 5 cards contain exactly the same amount of Information. Similarly, all genes that code for a protein consisting of 400 amino acids contain exactly the same amount of Information, no matter what those proteins might be capable of doing. However, in both biology and IT we know that just one incorrect amino acid in a protein or one incorrect character in a line of code can have disastrous effects, so in those disciplines, the quantity of useful Information is much more important than the number of bits of data to be transmitted accurately over a communications channel.

Of course, the concepts of useful and useless Information lie in the eye of the beholder to some extent. Brillouin’s formula attempts to quantify this difference, but his formula relies upon Boltzmann’s equation for entropy, and Boltzmann’s equation has always had the problem of how to define a macrostate. There really is no absolute way of defining one. For example, suppose I invented a new version of poker in which I defined the highest-ranking hand to be an Ace of spades, 2 of clubs, 7 of hearts, 10 of diamonds and an 8 of spades. The odds of being dealt such a hand are 1 in 2,598,960 because there are 2,598,960 possible poker hands, and using Boltzmann’s equation, that hand would have a very low entropy of exactly 0.0 because N = 1 and ln(1) = 0.0. Necessarily, the definition of a macrostate has to be rather arbitrary and tailored to the problem at hand. But in both biology and IT we can easily differentiate between macrostates that work as opposed to macrostates that do not work, like comparing a faulty protein or a buggy program with a functional protein or program.

My hope is that by now I have totally confused you about the true nature of entropy and Information with my explanations of both! If I have been truly successful, it now means that you have joined the intellectual elite who worry about such things. For most people

Information = Something you know

and that says it all.

For more on the above see Entropy - the Bane of Programmers, The Demon of Software, Some More Information About Information and The Application of the Second Law of Information Dynamics to Software and Bioinformatics.

Like Most Complex Systems - Software Displays Nonlinear Behavior

With a firm understanding of how Information behaves in our Universe, my next challenge as a softwarephysicist was to try to explain why the stack of software in Figure 2 was shaped like a cone. Why did the Universe demand near perfection in order for software to work? Why didn't the Universe at least offer some partial credit on my programs as my old physics professors did in college? When I made a little typo on a final exam, my professors usually did not tear up the whole exam and then give me an "F" for the entire course. But as a budding IT professional, I soon learned that computer compilers were not so kind. If I had one little typo in 100,000 lines of code, the compiler would happily abend my entire compile! Worse yet, when I did get my software to finally compile and link into an executable file that a computer could run, I always found that my software contained all sorts of little bugs that made it not run properly. Usually, my software would immediately crash and burn, but sometimes it would seem to run just fine for many weeks in Production and then suddenly crash and burn later for no apparent reason. This led me to realize that software generally exhibited nonlinear behavior, but with careful testing (selection), software could be made to operate in a linear manner.

Linear systems are defined by linear differential equations that can be solved using calculus. Linear systems are generally well-behaved, meaning that a slight change to a linear system produces a well-behaved response. Nonlinear systems are defined by nonlinear differential equations that, with few exceptions, cannot be solved exactly using calculus and usually must be solved numerically by computers. Nonlinear systems are generally not well-behaved. A small change to a nonlinear system can easily produce disastrous results. This is true of both software and carbon-based life running on DNA. The mutation of a single character in 100,000 lines of code can easily produce disastrous results and so too can the mutation of a single base pair in the three billion base pairs that define a human being. The Law of Increasing Functional Information explains that evolving systems overcome this problem by generating large numbers of similar configurations that are later honed by selection processes that remove defective configurations.

Now it turns out that for simple systems, like a single planet orbiting the Sun, the fundamental classical laws of physics listed above yield well-behaved and predictable solutions. So you would think that this should not be a problem. And before we had computers that could solve nonlinear differential equations, that is what everybody thought. But then in the 1950s, we started building computers that could solve nonlinear differential equations, and that is when the trouble started. We slowly learned that nonlinear systems did not behave at all like their well-behaved linear cousins. With the aid of computer simulations, we learned that when large numbers of components were assembled and began to interact, they exhibited nonlinear behaviors. True, each little component in the assemblage would faithfully follow the fundamental classical laws of physics, but when large numbers of components came together and began to interact, that well-behaved predictability went out the window. The result was the arrival of Chaos Theory in the 1970s. For more on that see Software Chaos.

Figure 11 – The orbit of the Earth about the Sun is an example of a well-behaved system that is periodic and predictable. It is governed by Newton's laws of motion and by his equation for the gravitational force.

Nonlinear systems are deterministic, meaning that once you set them off in a particular direction they always follow exactly the same path or trajectory, but they are not predictable because slight changes to initial conditions or slight perturbations can cause nonlinear systems to dramatically diverge to a new trajectory that leads to a completely different destination. Even when nonlinear systems are left to themselves and not perturbed in any way, they can appear to spontaneously jump from one type of behavior to another.
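The combination of determinism and unpredictability can be seen directly in Ed Lorenz's equations. This minimal Python sketch assumes Lorenz's classic parameter values (σ = 10, ρ = 28, β = 8/3) and uses a fixed-step fourth-order Runge-Kutta integrator chosen here for simplicity; the starting point, step size and helper names are all illustrative. Two runs from the identical starting point stay identical, while a run whose starting z differs by one part in a billion drifts far away from the first.

```python
def lorenz(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Right-hand side of the Lorenz equations: (dx/dt, dy/dt, dz/dt)."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)

def rk4_step(state, dt):
    """Advance the state by one fourth-order Runge-Kutta step of size dt."""
    def add(a, b, scale):
        return tuple(ai + scale * bi for ai, bi in zip(a, b))
    k1 = lorenz(state)
    k2 = lorenz(add(state, k1, dt / 2))
    k3 = lorenz(add(state, k2, dt / 2))
    k4 = lorenz(add(state, k3, dt))
    return tuple(s + dt / 6 * (a + 2 * b + 2 * c + d)
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4))

def trajectory(start, dt=0.01, steps=4000):
    """Integrate the Lorenz system from `start` and return every visited state."""
    states = [start]
    for _ in range(steps):
        states.append(rk4_step(states[-1], dt))
    return states

def distance(a, b):
    """Euclidean distance between two 3-D states."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b)) ** 0.5

run_a = trajectory((1.0, 1.0, 1.0))
run_a_again = trajectory((1.0, 1.0, 1.0))   # same start: deterministic, same path
run_b = trajectory((1.0, 1.0, 1.0 + 1e-9))  # start perturbed by one part in a billion

print("same start, same path:", run_a == run_a_again)
for step in (0, 1000, 2000, 3000, 4000):
    print(f"t = {step * 0.01:5.1f}  separation = {distance(run_a[step], run_b[step]):.3e}")
```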

Figure 12 – Above is a very famous plot of the solution to three nonlinear differential equations developed by Ed Lorenz. Notice that like the orbit of the Earth about the Sun, points on the solution curve follow somewhat periodic paths, here around the two lobes of a strange attractor. Each lobe acts like an attractor basin because points orbit it like marbles in a bowl.

Figure 13 – But unlike the Earth orbiting the Sun, points in the attractor basins can suddenly jump from one attractor basin to another. High-volume corporate websites normally operate in a normal operations attractor basin but sometimes can spontaneously jump to an outage attractor basin even when they are only perturbed by a small processing load disturbance.

Figure 14 – The top-heavy SUVs of yore also had two basins of attraction and one of them was upside down.

For more on the above see Software Chaos.

The Fundamental Problem of Software

From the above analysis, I came to the realization in the early 1980s that my fundamental problem was that the second law of thermodynamics was constantly trying to destroy the useful information in my programs with small bugs, and because our Universe is largely nonlinear in nature, these small bugs could produce disastrous results when software was in Production. Now I would say that the second law of thermodynamics was constantly trying to destroy the Functional Information in my programs.

But the idea of destroying information causes some real problems for physicists, and as we shall see, the solution to that problem is that we need to make a distinction between useful information and useless information. Here is the problem that physicists have with destroying information. Recall that a reversible process is a process that can be run backwards in time to return the Universe back to the state that it had before the process even began, as if the process had never even happened in the first place. For example, the collision between two molecules at low energy is a reversible process that can be run backwards in time to return the Universe to its original state because Newton’s laws of motion are reversible. Knowing the position of each molecule at any given time and also its momentum, a combination of its speed, direction, and mass, we can predict where each molecule will go after a collision between the two, and also where each molecule came from before the collision using Newton’s laws of motion. For a reversible process such as this, the information required to return a system back to its initial state cannot be destroyed, no matter how many collisions might occur, in order for it to be classified as a reversible process that is operating under reversible physical laws.

Figure 15 – The collision between two molecules at low energy is a reversible process because Newton’s laws of motion are reversible

Currently, all of the effective theories of physics, what many people mistakenly now call the “laws” of the Universe, are indeed reversible, except for the second law of thermodynamics, but that is because, as we saw above, the second law is really not a fundamental “law” of the Universe at all. The second law of thermodynamics just emerges from the statistics of a large number of interacting particles. Now in order for a law of the Universe to be reversible, it must conserve information. That means that two different initial microstates cannot evolve into the same microstate at a later time. For example, in the collision between the blue and pink molecules in Figure 15, the blue and pink molecules both begin with some particular position and momentum one second before the collision and end up with different positions and momenta at one second after the collision. In order for the process to be reversible and Newton’s laws of motion to be reversible too, this has to be unique. A different set of identical blue and pink molecules starting out with different positions and momenta one second before the collision could not end up with the same positions and momenta one second after the collision as the first set of blue and pink molecules. If that were to happen, then one second after the collision, we would not be able to tell what the original positions and momenta of the two molecules were one second before the collision since there would now be two possible alternatives, and we would not be able to uniquely reverse the collision. We would not know which set of positions and momenta the blue and pink molecules originally had one second before the collision, and the information required to reverse the collision would be destroyed. And because all of the current effective theories of physics are time-reversible in nature, information cannot be destroyed.
So if someday information were indeed found to be destroyed in an experiment, the very foundations of physics would collapse, and consequently, all of science would collapse as well.
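The reversibility of a collision can be illustrated with a simplified one-dimensional, perfectly elastic collision standing in for the molecules of Figure 15. In this minimal sketch, the masses and velocities are hypothetical, the function name elastic_collision is illustrative, and exact Fraction arithmetic is used so that rounding errors cannot blur the reversal. Flipping the outgoing velocities and applying the same law again returns exactly the flipped incoming velocities.

```python
from fractions import Fraction

def elastic_collision(m1, v1, m2, v2):
    """Velocities after a 1-D perfectly elastic collision (momentum and kinetic energy conserved)."""
    total = m1 + m2
    v1_after = ((m1 - m2) * v1 + 2 * m2 * v2) / total
    v2_after = ((m2 - m1) * v2 + 2 * m1 * v1) / total
    return v1_after, v2_after

# Hypothetical masses and incoming velocities for the "blue" and "pink" molecules.
m_blue, m_pink = Fraction(2), Fraction(3)
v_blue, v_pink = Fraction(5), Fraction(-1)

after = elastic_collision(m_blue, v_blue, m_pink, v_pink)

# Time reversal: flip the outgoing velocities and run the same law again.
reversed_after = elastic_collision(m_blue, -after[0], m_pink, -after[1])

print("after collision:", after)
print("after running it backwards:", reversed_after)
```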

So if information cannot be destroyed, but Leon Brillouin’s reformulation of the second law of thermodynamics does imply that the total amount of information in the Universe must decrease (dS/dt > 0 implies that dI/dt < 0), what is going on? The solution to this problem is that we need to make a distinction between useful information and useless information. Recall that the first law of thermodynamics maintains that energy, like information, can be neither created nor destroyed by any process. Any process can only convert energy from one form into another. For example, when you drive to work, you convert all of the low-entropy chemical energy in gasoline into an equal amount of useless waste heat energy by the time you hit the parking lot of your place of employment, but during the entire process of driving to work, none of the energy in the gasoline is destroyed; it is only converted into an equal amount of waste heat that simply diffuses away into the environment as your car cools down to be in thermal equilibrium with the environment. So why can't I simply drive home later in the day using the ambient energy found around my parking spot? The reason you cannot do that is that pesky old second law of thermodynamics. You simply cannot turn the useless high-entropy waste heat of the molecules bouncing around near your parked car into useful low-entropy energy to power your car home at night. And the same goes for information. Indeed, the time reversibility of all the current effective theories of physics may maintain that you cannot destroy information, but that does not mean that you cannot change useful information into useless information.

But for all practical purposes from an IT perspective, turning useful information into useless information is essentially the same as destroying information. For example, suppose you take the source code file for a bug-free program and scramble its contents. Theoretically, the scrambling process does not destroy any information because theoretically it can be reversed. But in practical terms, you will be turning a low-entropy file into a useless high-entropy file that only contains useless information. So effectively you will have destroyed all of the useful information in the bug-free source code file. Here is another example. Suppose you are dealt a full house, K-K-K-4-4, but at the last moment a misdeal is declared and your K-K-K-4-4 gets shuffled back into the deck! Now the K-K-K-4-4 still exists as scrambled hidden information in the entropy of the entire deck, and so long as the shuffling process can be reversed, the K-K-K-4-4 can be recovered, and no information is lost, but that does not do much for your winnings. Since all the current laws of physics are reversible, including quantum mechanics, we should never see information being destroyed. In other words, because entropy must always increase and never decrease, the hidden information of entropy cannot be destroyed.
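The scrambled source code file example can be sketched in a few lines. In this minimal Python illustration, the scramble and unscramble helpers and the seed value are hypothetical; the seed plays the role of the hidden information needed to run the shuffle backwards.

```python
import random

def scramble(data: bytes, seed: int) -> bytes:
    """Shuffle the bytes of `data` with a permutation determined by `seed`."""
    order = list(range(len(data)))
    random.Random(seed).shuffle(order)
    return bytes(data[i] for i in order)

def unscramble(scrambled: bytes, seed: int) -> bytes:
    """Invert `scramble` by regenerating the same permutation and undoing it."""
    order = list(range(len(scrambled)))
    random.Random(seed).shuffle(order)
    original = bytearray(len(scrambled))
    for position, source_index in enumerate(order):
        original[source_index] = scrambled[position]
    return bytes(original)

source = b'print("Hello, World!")\n'
mixed = scramble(source, seed=42)
print(mixed)
print(unscramble(mixed, seed=42) == source)
```

Without the seed, the scrambled bytes are effectively useless, which is the practical sense in which useful information is lost even though, in principle, nothing was destroyed.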

The Solution to the Fundamental Problem of Software

Again, in physics, we use software to simulate the behavior of the Universe, while in softwarephysics we use the Universe to simulate the behavior of software. So in the early 1980s, I asked myself, "Are there any very complicated systems in our Universe that seem to deal well with the second law of thermodynamics and nonlinearity?" I knew that living things did a great job of handling both, but at first, I did not know how to harness the power of living things to grow software instead of writing software. Then, through a serendipitous accident, I began to do so by working on some software that I later called the Bionic Systems Development Environment (BSDE) back in 1985. BSDE was an early IDE (Integrated Development Environment) that ran on VM/CMS and grew applications from embryos in a biological manner. For more on BSDE, see the last part of Programming Biology in the Biological Computation Group of Microsoft Research. During the 1980s, BSDE was used by about 30 programmers to put several million lines of code into Production. Here is a 1989 document on my Microsoft OneDrive that was used by the IT Training department of Amoco in their BSDE class:

BSDE – A 1989 document describing how to use BSDE - the Bionic Systems Development Environment - to grow applications from genes and embryos within the maternal BSDE software.

Could the Physical Laws of Our Universe Have Also Arisen From the Law of Increasing Functional Information in Action?

Before embarking on the rather lengthy section below on the evolution of computer software and hardware, I would like to put in a plug for Lee Smolin's cosmological natural selection hypothesis, which proposes that the physical laws of our Universe evolved from an infinitely long chain of previous Universes to produce a Universe that is complex enough to easily form black holes. In Lee Smolin's cosmological natural selection hypothesis, black holes in one Universe produce white holes in new Universes beyond the event horizons of the originating black holes. These new Universes experience these white holes as their own Big Bangs and then go on to produce their own black holes if they can. Thus, Universes with physical laws that are good at making black holes are naturally selected for over Universes that are not, and they soon come to dominate the Multiverse. Lee Smolin's cosmological natural selection hypothesis meets all of the requirements of the Law of Increasing Functional Information for the cosmic evolution of a Multiverse. For more on that, see The Self-Organizing Recursive Cosmos.

The Evolution of Software as a Case Study of the Law of Increasing Functional Information in Action

In this rather long-winded tale, try to keep in mind the three factors that the Law of Increasing Functional Information requires for a system to evolve:

1. Each system is formed from numerous interacting units (e.g., nuclear particles, chemical elements, organic molecules, or cells) that result in combinatorially large numbers of possible configurations.

2. In each of these systems, ongoing processes generate large numbers of different configurations.

3. Some configurations, by virtue of their stability or other “competitive” advantage, are more likely to persist owing to selection for function.
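The three factors can be watched working together in a toy model. In the paper's framework, functional information is defined as I(Ex) = -log2[F(Ex)], where F(Ex) is the fraction of all possible configurations that achieve at least a degree of function Ex. The Python sketch below assumes a hypothetical system of 20-bit configurations whose "function" is simply how many bits match an arbitrary target. The target, mutation rate, population size and generation count are all illustrative choices and do not come from the paper:

```python
import math
import random

# Factor 1: many interacting units (here N bits) give 2**N possible configurations.
N = 20
TARGET = [1] * N  # hypothetical target; "function" = number of matching bits

def degree_of_function(config):
    """Toy degree of function E: how many positions match TARGET."""
    return sum(1 for a, b in zip(config, TARGET) if a == b)

def functional_information(e_x):
    """I(Ex) = -log2 F(Ex), where F(Ex) is the fraction of all 2**N
    configurations with degree of function >= Ex (exact binomial count)."""
    count = sum(math.comb(N, k) for k in range(e_x, N + 1))
    return -math.log2(count / 2 ** N)

def evolve(generations=200, pop_size=50, mutation_rate=0.05, seed=0):
    """Return the functional information of the best configuration per generation."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(N)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        # Factor 2: ongoing processes generate many new configurations (bit-flip mutation).
        offspring = [
            [1 - bit if rng.random() < mutation_rate else bit for bit in parent]
            for parent in population
        ]
        # Factor 3: selection for function keeps the best-performing configurations.
        pool = population + offspring
        pool.sort(key=degree_of_function, reverse=True)
        population = pool[:pop_size]
        history.append(functional_information(degree_of_function(population[0])))
    return history

history = evolve()
print(f"start: {history[0]:.2f} bits, end: {history[-1]:.2f} bits")
```

Because parents compete alongside their offspring, the best degree of function never drops, so the functional information of the population can only climb toward its ceiling of N bits.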

Also, take note of the coevolution of computer hardware and software. It is very similar to the coevolution of the rocks and minerals of the Earth's crust and carbon-based life over the past 4.0 billion years. Please feel free to skim over the details that only IT old-timers may find interesting.

The evolution of software provides a valuable case study for the Law of Increasing Functional Information because software has been evolving about 100 million times faster than carbon-based life did on this planet. This has been going on for the past 82 years, or 2.6 billion seconds, ever since Konrad Zuse first cranked up his Z3 computer in May of 1941. For more on the computational adventures of Konrad Zuse, please see So You Want To Be A Computer Scientist? More importantly, all of this software evolution has occurred within a single human lifetime and is well documented. So during a typical 40-year IT career of 1.26 billion seconds, one should expect to see some great changes take place as software rapidly evolves. In fact, all IT professionals find that they have to constantly retrain themselves to remain economically viable and keep up with the frantic pace of software evolution. Job insecurity due to technical obsolescence has always added to the daily mayhem of life in IT, especially for those supporting "legacy" software for a corporation. So as an IT professional, not only will you gain an appreciation for geological Deep Time, but you will also live through Deep Time as you observe software rapidly evolving during your career. To sample what might yet come, let us take a look at how software and hardware have coevolved over the past 2.6 billion seconds.
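The seconds figures above are easy to check. Here is a small sketch, assuming a Julian year of 365.25 days:

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # Julian year = 31,557,600 s

def years_to_seconds(years):
    """Convert a span of years into seconds using the Julian year."""
    return years * SECONDS_PER_YEAR

since_z3 = years_to_seconds(82)  # May 1941 to 2023
career = years_to_seconds(40)    # a typical IT career
print(f"{since_z3:.3e} s since the Z3, {career:.3e} s in a 40-year career")
# prints "2.588e+09 s since the Z3, 1.262e+09 s in a 40-year career"
```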

SoftwarePaleontology

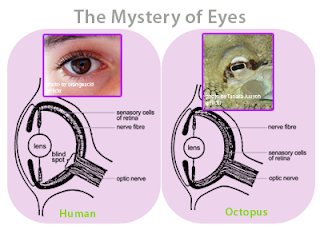

Since the very beginning, the architecture of software has evolved through the Darwinian processes of inheritance, innovation and natural selection, and it has followed a path very similar to the one followed by carbon-based living things on the Earth. I believe this has been due to what evolutionary biologists call convergence. For example, the eye has independently evolved at least 40 different times in the past 600 million years, and there are many examples of “living fossils” showing the evolutionary path. Strikingly, the camera-like structures of the human eye and the eye of an octopus are nearly identical, even though each structure evolved totally independently of the other.

Figure 16 - The eye of a human and the eye of an octopus are nearly identical in structure, but evolved totally independently of each other. As Daniel Dennett pointed out, there are only a certain number of Good Tricks in Design Space and natural selection will drive different lines of descent towards them.

Figure 17 – There are many living fossils that have left behind signposts along the trail to the modern camera-like eye. Notice that the human-like eye on the far right is really that of an octopus.

An excellent treatment of the role that convergence has played in the evolutionary history of life on Earth, and possibly beyond, can be found in Life’s Solution (2003) by Simon Conway Morris. The convergent evolution of eyes in carbon-based life on the Earth was driven by the hardware fact of life that the Earth is awash in solar photons.

Programmers and living things both have to deal with the second law of thermodynamics and nonlinearity, and there are only a few optimal solutions. Programmers try new development techniques, and the successful techniques tend to survive and spread throughout the IT community, while the less successful techniques are slowly discarded. Over time, the population distribution of software techniques changes. As with the evolution of living things on Earth, the evolution of software has been greatly affected by the physical environment, or hardware, upon which it ran. Just as the Earth has not always been as it is today, the same goes for computing hardware. The evolution of software has been primarily affected by two things: CPU speed and memory size. As I mentioned in So You Want To Be A Computer Scientist?, the speed and memory size of computers have both increased by about a factor of a billion since Konrad Zuse built the Z3 in the spring of 1941, and these rapid advances, together with the dramatic drop in their costs, have greatly shaped the evolutionary history of software.
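One way to picture that shifting population distribution of techniques is the discrete-time replicator equation, a standard textbook model of selection that is swapped in here for illustration and is not taken from the original. The Python sketch below assumes that each technique can be given a fixed relative "fitness"; the technique labels, fitness values and starting shares are all hypothetical:

```python
def replicator_step(shares, fitness):
    """One generation of discrete-time replicator dynamics:
    x_i' = x_i * f_i / sum_j(x_j * f_j)."""
    mean_fitness = sum(x * f for x, f in zip(shares, fitness))
    return [x * f / mean_fitness for x, f in zip(shares, fitness)]

techniques = ["technique A", "technique B", "technique C"]  # hypothetical labels
fitness = [1.00, 1.05, 0.95]  # hypothetical relative success of each technique
shares = [0.80, 0.10, 0.10]   # hypothetical starting shares of the IT community

for generation in range(100):
    shares = replicator_step(shares, fitness)

for name, share in zip(techniques, shares):
    print(f"{name}: {share:.3f}")
```

Even a 5% edge compounds: the initially rare technique B ends up holding most of the community, while the less successful technique C dwindles toward zero without ever quite vanishing, much like the surviving mainframe programmers.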

Figure 18 - The Geological Time Scale of the Phanerozoic Eon is divided into the Paleozoic, Mesozoic and Cenozoic Eras by two great mass extinctions.

Figure 19 - Life in the Paleozoic, before the Permian-Triassic mass extinction, was far different than life in the Mesozoic.

Figure 20 - In the Mesozoic the dinosaurs ruled after the Permian-Triassic mass extinction, but small mammals were also present.

Figure 21 - Life in the Cenozoic, following the Cretaceous-Tertiary mass extinction, has so far been dominated by the mammals. This will likely soon change as software becomes the dominant form of self-replicating information on the planet, ushering in a new geological Era that has yet to be named.

Currently, it is thought that these mass extinctions arise from two different sources. One type of mass extinction is caused by the impact of a large comet or asteroid and has become familiar to the general public through the Cretaceous-Tertiary (K-T) mass extinction that wiped out the dinosaurs at the Mesozoic-Cenozoic boundary 65 million years ago. An impact mass extinction is characterized by a rapid extinction of species followed by a correspondingly rapid recovery in a matter of a few million years. It is like turning off a light switch. Up until the day the impactor hits the Earth, everything is fine and the Earth has a rich biosphere. After the impactor hits, the light switch turns off and there is a dramatic loss of species diversity. However, the effects of the incoming comet or asteroid are geologically brief, and the Earth’s environment returns to normal in a few decades or less, so within a few million years, new species rapidly evolve to replace those that were lost.

The other kind of mass extinction is thought to arise from an overabundance of greenhouse gases and a dramatic drop in oxygen levels, and it is typified by the Permian-Triassic (P-T) mass extinction at the Paleozoic-Mesozoic boundary 250 million years ago. Greenhouse extinctions are thought to be caused by periodic flood basalts, like the Siberian Traps flood basalt of the late Permian. A flood basalt begins as a huge plume of magma several hundred miles below the surface of the Earth. The plume slowly rises and eventually breaks the surface of the Earth, forming a huge flood basalt that spills basaltic lava over an area of millions of square miles to a depth of several thousand feet. Huge quantities of carbon dioxide bubble out of the magma over a period of several hundred thousand years and greatly increase the ability of the Earth’s atmosphere to trap heat from the Sun. For example, during the Permian-Triassic mass extinction, carbon dioxide levels may have reached 3,000 ppm, much higher than the current level of about 420 ppm. Most of the Earth warms to tropical levels, with little temperature difference between the equator and the poles. This shuts down the thermohaline conveyor that drives the ocean currents.

The Evolution of Software Over the Past 2.6 Billion Seconds Has Also Been Heavily Influenced by Mass Extinctions

Similarly, IT experienced a devastating mass extinction during the early 1990s, when an environmental change took us from the Age of the Mainframes to the Distributed Computing Platform. Suddenly mainframe Cobol/CICS and Cobol/DB2 programmers were no longer in demand. Instead, everybody wanted C and C++ programmers who worked on cheap Unix servers. This was a very traumatic time for IT professionals. Of course, the mainframe programmers never went entirely extinct, but their numbers were greatly reduced. The number of IT workers in mainframe Operations also dramatically decreased, while at the same time the demand for Operations people familiar with the Unix-based software of the new Distributed Computing Platform skyrocketed. This was around 1992, and at the time I was a mainframe programmer used to working with IBM's MVS and VM/CMS operating systems, writing Cobol, PL-1 and REXX code using DB2 databases. So I had to teach myself Unix, C and C++ to survive. To do that, I bought my very first PC, an 80-386 machine running Windows 3.0 with 5 MB of memory and a 100 MB hard disk, for $1500. I also bought the Microsoft C7 C/C++ compiler for something like $300. And that was in 1992 dollars! One reason for the added expense was that there were no Internet downloads in those days because there were no high-speed ISPs. PCs did not have CD/DVD drives either, so the software came on 33 diskettes, each with a 1.44 MB capacity, that had to be loaded one at a time in sequence. The software also came with about a foot of manuals describing the C++ class library, printed on very thin paper. Indeed, suddenly finding yourself obsolete is not a pleasant thing and calls for drastic action.

Figure 22 – An IBM System/360 mainframe from 1964. The IBM System/360 mainframe caused commercial software to explode within corporations and gave IT professionals the hardware platform that they were waiting for.

Figure 23 – The Distributed Computing Platform replaced a great deal of mainframe computing with a large number of cheap self-contained servers running software that tied the servers together.