In the early 1980s, while holding down a development job in the IT department of Amoco, I strongly advocated taking a biological approach to the development and maintenance of software. At the time, I was actively working on a simulated science that I called softwarephysics for my own software development purposes, and looking back, I must now thank the long-gone IT Management of Amoco for patiently tolerating my efforts. For more on that, please see Some Thoughts on the Origin of Softwarephysics and Its Application Beyond IT and Agile vs. Waterfall Programming and the Value of Having a Theoretical Framework. The relatively new field of computational biology, on the other hand, advocates just the reverse: it uses techniques from computer science to help unravel the mysteries of biology. I just watched a very interesting TED presentation on the computational biology efforts at Microsoft Research by Dr. Sara-Jane Dunn:

The next software revolution: programming biological cells

https://www.ted.com/talks/sara_jane_dunn_the_next_software_revolution_programming_biological_cells?utm_source=newsletter_weekly_2019-11-01&utm_campaign=newsletter_weekly&utm_medium=email&utm_content=top_left_image#t-1

in which she explains how the Biological Computation research group at Microsoft Research is applying standard IT development and maintenance techniques to discover the underlying biological programming code that runs the very complex biological processes of cells and organisms. In her TED talk, Dr. Sara-Jane Dunn maintains that the last half of the 20th century was dominated by the rise of software on electronic hardware, but that the early 21st century will be dominated by being able to first understand, and then later manipulate, the software running on biological hardware through the new science of biological computation. The homepage for the Microsoft Research Biological Computation group, of which Dr. Dunn is a member, is at:

Microsoft Biological Computation

https://www.microsoft.com/en-us/research/group/biological-computation/

The mission statement for the Microsoft Research Biological Computation group is:

Our group is developing theory, methods and software for understanding and programming information processing in biological systems. Our research currently focuses on three main areas: Molecular Programming, Synthetic Biology and Stem Cell Biology. Current projects include designing molecular circuits made of DNA, and programming synthetic biological devices to perform complex functions over time and space. We also aim to understand the computation performed by cells during development, and how the adaptive immune system detects viruses and cancers. We are tackling these questions through the development of computational models and domain-specific computational tools, in close collaboration with leading scientific research groups. The tools we develop are being integrated into a common software environment, which supports simulation and analysis across multiple scales and domains. This environment will serve as the foundation for a biological computation platform.

In her TED Talk, Dr. Sara-Jane Dunn explains that in many ways biologists are like new members of an Applications Development group supporting the biological software that runs on living cells and organisms. Yes, biologists certainly do know a lot about cells and living organisms and also many details about cellular structure. They even have access to the DNA source code of many cells at hand. The problem is that like all new members of an Applications Development group, they just do not know how the DNA source code works. Dr. Sara-Jane Dunn then goes on to explain that by using standard IT systems analysis techniques, it should be possible to slowly piece together the underlying biological software that runs the cells that living things are all made of. Once that underlying software has been pieced together, it should then be possible to perform maintenance on the underlying biological code to fix disease-causing bugs in biological cells and organisms, and also to add functional enhancements to them. For readers not familiar with doing maintenance on large-scale commercial software, let's spend some time describing the daily mayhem of life in IT.

The Joys of Software Maintenance

As I explained in A Lesson for IT Professionals - Documenting the Flowcharts of Carbon-Based Life in the KEGG Online Database, many times IT professionals in Applications Development will experience the anxiety of starting out on a new IT job or moving to a new area within their current employer's IT Department. Normally, the first thing your new Applications Development group will do is to show you a chart similar to Figure 1. Figure 1 shows a simplified flowchart for the NAS system. Of course, being new to the group, you may have at least heard about the NAS system, but in truth, you barely even know what the NAS system does! Nonetheless, the members of your new Applications Development group will assume that you are completely familiar with all of the details of the NAS system. Even though you have barely even heard of the NAS system, they will naturally act as if you are a NAS system expert like themselves. For example, they will expect that you will easily be able to estimate the work needed to perform large-scale maintenance efforts to the NAS system, create detailed project plans for large-scale maintenance efforts to the NAS system, perform the necessary code changes needed for large-scale maintenance efforts to the NAS system and easily put large-scale maintenance efforts for the NAS system into production. In truth, during that very first terrifying meeting with your new Applications Development group, the only thing that you will honestly be thankful for is that your new Applications Development group started you out by showing you a simplified flowchart of the NAS system instead of a detailed flowchart of the NAS system! Worse yet, when later you are tasked with heading up a large-scale maintenance effort for the NAS system, you will, unfortunately, discover that a detailed flowchart of the NAS system does not even exist!

Instead, you will sadly discover that the excruciatingly complex details of the NAS system can only be found within the minds of the current developers in the Applications Development group supporting the NAS system as a largely unwritten oral history. You will sadly learn that this secret, and largely unwritten, oral history of the NAS system can only be slowly learned by patiently attending many meetings with members of your new Applications Development group and by slowly learning parts of the code for the NAS system by working on portions of the NAS system on a trial-and-error basis.

Normally, as a very frightened and very timid new member of the Applications Development group supporting the NAS system, you will soon discover that most of the knowledge about the NAS system that you quickly gain will result from goofing around with the code for the NAS system in an IDE (Integrated Development Environment), like Eclipse or Microsoft's Visual Studio, in the development computing environment that your IT Department has set up for coding and testing software. In such a development environment, you can make many experimental runs on portions of the NAS system and watch the code execute line-by-line.

Figure 1 – Above is a simplified flowchart for the NAS system.

The Maintenance Plight of Biologists

Now over the past 100 years, biologists have also been trying to figure out the processing flowcharts of carbon-based life by induction, deduction and by performing experiments. This flowcharting activity has been greatly enhanced by the rise of bioinformatics. A fine example of this is the KEGG (Kyoto Encyclopedia of Genes and Genomes). The KEGG is an online collection of databases detailing genomes, biochemical pathways, diseases, drugs, and biochemical molecules. KEGG is available at:

https://www.genome.jp/kegg/kegg2.html

A good description of the KEGG is available on Wikipedia at:

https://en.wikipedia.org/wiki/KEGG

Figure 2 – Above is a simplified flowchart of the metabolic pathways used by carbon-based life.

Figure 3 – Above is a high-level flowchart of the metabolic pathways used by carbon-based life as presented in the KEGG online database. You can use the KEGG online database to drill down into the above chart and to dig down into the flowcharts for many other biological processes.

I encourage all IT professionals to try out the KEGG online database to drill down into the documented flowcharts of carbon-based life to appreciate the complexities of carbon-based life and to see an excellent example of the documentation of complex information flows. Softwarephysics has long suggested that a biological approach to software is necessary for software to progress to such levels of complexity. For more on that see Agile vs. Waterfall Programming and the Value of Having a Theoretical Framework. The challenge for biologists is to piece together the underlying software that runs these complicated flowcharts.

Computer Science Comes to the Rescue in the Form of Computational Biology

IT professionals in Applications Development face a similar challenge when undertaking a major refactoring effort of a large heavy-duty commercial software system. In a refactoring effort, ancient software that was written in the deep past using older software technologies and programming techniques is totally rewritten using modern software technologies and programming techniques. In order to do that, the first step is to create flowcharts and Input-Output Diagrams to describe the logical flow of the software to be rewritten. In a sense, the Microsoft Research Biological Computation group is trying to do the very same thing by refactoring the underlying biological software that runs carbon-based life.

In Agile vs. Waterfall Programming and the Value of Having a Theoretical Framework, I described the Waterfall project management development model that was so popular during the 1970s, 1980s and 1990s. In the classic Waterfall project management development model, detailed user requirements and code specification documents were formulated before any coding began. These user requirements and coding specification documents formed the blueprints for the software to be developed. One of the techniques frequently used in preparing user requirements and coding specification documents was to begin by creating a number of high-level flowcharts of the processing flows and also a series of Input-Output Diagrams that described in more detail the high-level flowcharts of the software to be developed.

Figure 4 – Above is the general form of an Input-Output Diagram. Certain Data is Input to a software Process. The software Process then processes the Input Data and may read or write data to storage. When the software Process completes, it passes Output Information to the next software Process as Input.

Figure 5 – Above is a more realistic Input-Output Diagram. In general, a hierarchy of Input-Output Diagrams was generated to break down the proposed software into a number of modules that could be separately coded and unit tested. For example, the above Input-Output Diagram is for Sub-module 2.1 of the proposed software. For coding specifications, the Input-Output Diagram for Sub-module 2.1 would be broken down into more detailed Input-Output Diagrams, such as those for Sub-module 2.1.1, Sub-module 2.1.2, Sub-module 2.1.3 ...
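
For readers who have never seen an Input-Output Diagram, the idea in Figures 4 and 5 can be roughly sketched in a few lines of Python. This is only an illustration under stated assumptions: the process names (validate_orders, price_orders), the storage dictionary and the sample order data are all hypothetical and do not come from any real system. Each function plays the role of one software Process that takes Input Data, may read from storage, and passes its Output to the next Process as Input.

```python
from typing import Any, Callable, Dict, List

# Hypothetical types for illustration only.
Record = Dict[str, Any]
Storage = Dict[str, Dict[str, float]]
Process = Callable[[List[Record], Storage], List[Record]]


def validate_orders(records: List[Record], storage: Storage) -> List[Record]:
    """Hypothetical Sub-module 2.1.1: keep only orders for known products."""
    return [r for r in records if r["product"] in storage["prices"]]


def price_orders(records: List[Record], storage: Storage) -> List[Record]:
    """Hypothetical Sub-module 2.1.2: read prices from storage, add a total."""
    priced = []
    for r in records:
        total = storage["prices"][r["product"]] * r["quantity"]
        priced.append({**r, "total": total})
    return priced


def run_pipeline(
    records: List[Record], storage: Storage, processes: List[Process]
) -> List[Record]:
    """Pass the Output of each Process to the next Process as its Input."""
    for process in processes:
        records = process(records, storage)
    return records


# Made-up storage contents and Input Data.
storage = {"prices": {"widget": 2.5, "gadget": 10.0}}
orders = [
    {"product": "widget", "quantity": 4},
    {"product": "unknown", "quantity": 1},
    {"product": "gadget", "quantity": 2},
]

result = run_pipeline(orders, storage, [validate_orders, price_orders])
for row in result:
    print(row)
```

Because each Process only sees its Input and the shared storage, each one can be coded and unit tested separately, which was the whole point of breaking the diagrams down into sub-modules.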

When undertaking a major software refactoring project, frequently high-level flowcharts and Input-Output Diagrams are produced to capture the functions of the current software to be refactored. When trying to uncover the underlying biological software that runs on carbon-based life, computational biologists need to do the very same thing. The Microsoft Research Biological Computation group is working on a number of large projects in this effort and I would like to highlight two of them.

(RE:IN) - the Reasoning Engine for Interaction Networks

In order to perform a similar software analysis for the functions of living cells and organisms, the Microsoft Research Biological Computation group developed some software called the (RE:IN) - Reasoning Engine for Interaction Networks. The purpose of the (RE:IN) software is to produce a number of possible models that describe the underlying biological software that runs certain biological processes. The (RE:IN) software essentially outputs possible models consisting of flowcharts and Input-Output Diagrams that explain the input data provided to the (RE:IN) software. To use the (RE:IN) software you first enter a number of components. A component can be a gene, a protein or any interacting molecule. The user then enters a number of known interactions between the components. Finally, the user enters a number of experimental results that have been performed on the entire network of components and interactions. The (RE:IN) software then calculates a number of logical models that explain the results of the experimental observations in terms of the components and the interactions amongst the components. I imagine the (RE:IN) software could also be used to analyze commercial software in terms of data components and processing interactions to produce logical models of software behavior for major software refactoring efforts too. The homepage for the (RE:IN) software is at:

https://www.microsoft.com/en-us/research/project/reasoning-engine-for-interaction-networks-rein/

You can download the documentation for the (RE:IN) at:

https://www.microsoft.com/en-us/research/uploads/prod/2016/06/reintutorial.docx

From the (RE:IN) homepage you can follow a link that will start up the (RE:IN) software in your browser, or you can use this link:

https://rein.cloudapp.net/

The (RE:IN) homepage also has a couple of examples that have already been filled in with components, interactions and experimental observations such as this one:

The network governing naive pluripotency in mouse embryonic stem cells:

https://rein.cloudapp.net/?pluripotency

It takes a bit of time to run a Solution to the model, so click on the Options Tab and set the Solutions Limit to "1" instead of "10". Then click on the "Run Analysis" button and wait while the processing wheel turns. Eventually, the processing will complete and display a message box telling you that the process has completed.
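
To give IT professionals a feel for what it means to calculate models that explain experimental observations in terms of components and interactions, here is a toy Python sketch. It is emphatically not how (RE:IN) is implemented. The three components, the definite and possible interactions, the single experiment and the Boolean update rule are all assumptions made up for illustration, and the brute-force enumeration is only a stand-in for whatever reasoning the real software performs.

```python
from itertools import combinations

# Hypothetical components: could be genes, proteins or other molecules.
components = ["A", "B", "C"]

# Interactions are (source, target, sign): +1 activates, -1 represses.
definite = [("A", "B", +1)]                      # known to exist
possible = [("B", "C", +1), ("A", "C", -1), ("C", "B", -1)]  # may exist

# Each experiment: (initial state, number of steps, expected observations).
experiments = [
    ({"A": True, "B": False, "C": False}, 2, {"B": True, "C": True}),
]


def step(state, interactions):
    """Assumed update rule: a component is on in the next step if at least
    one activator is on and no repressor is on. A component with no
    regulators simply holds its current state."""
    nxt = {}
    for c in components:
        regulators = [(s, sign) for (s, t, sign) in interactions if t == c]
        if not regulators:
            nxt[c] = state[c]
            continue
        activated = any(state[s] for s, sign in regulators if sign > 0)
        repressed = any(state[s] for s, sign in regulators if sign < 0)
        nxt[c] = activated and not repressed
    return nxt


def consistent(interactions):
    """True if a candidate network reproduces every experiment."""
    for initial, steps, expected in experiments:
        state = dict(initial)
        for _ in range(steps):
            state = step(state, interactions)
        if any(state[c] != value for c, value in expected.items()):
            return False
    return True


def enumerate_models():
    """Try every subset of the possible interactions and keep the
    subsets that, together with the definite ones, explain the data."""
    models = []
    for k in range(len(possible) + 1):
        for chosen in combinations(possible, k):
            if consistent(definite + list(chosen)):
                models.append(list(chosen))
    return models


models = enumerate_models()
for m in models:
    print(m)
```

In this toy, every surviving model contains the possible interaction from B to C, so the made-up experiment tells us that this interaction must be present, while it says nothing definite about the possible repression of B by C.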

Station B - Microsoft's Integrated Development Environment for Programming Biology

Station B is Microsoft's project for creating an IDE for programming biology that is similar to Microsoft's Visual Studio IDE for computer software. The homepage for Microsoft's Station B is at:

https://www.microsoft.com/en-us/research/project/stationb/

Here is the Overview from the Station B homepage:

Building a platform for programming biology

The ability to program biology could enable fundamental breakthroughs across a broad range of industries, including medicine, agriculture, food, construction, textiles, materials and chemicals. It could also help lay the foundation for a future bioeconomy based on sustainable technology. Despite this tremendous potential, programming biology today is still done largely by trial-and-error. To tackle this challenge, the field of synthetic biology has been working collectively for almost two decades to develop new methods and technology for programming biology. Station B is part of this broader effort, with a focus on developing an integrated platform that enables selected partners to improve productivity within their own organisations, in line with Microsoft’s core mission. The Station B project builds on over a decade of research at Microsoft on understanding and programming information processing in biological systems, in collaboration with several leading universities. The name Station B is directly inspired by Station Q, which launched Microsoft’s efforts in quantum computing, but focuses instead on biological computing.

The Station B platform is being developed at Microsoft Research in Cambridge, UK, which houses Microsoft’s first molecular biology laboratory. The platform aims to improve all phases of the Design-Build-Test-Learn workflow typically used for programming biological systems:

• The Design phase will incorporate biological programming languages that operate at the molecular, genetic and network levels. These languages can in turn be compiled to a hierarchy of biological abstractions, each with their associated analysis methods, where different abstractions can be selected depending on the biological question being addressed. For example, a Continuous Time Markov Chain can be used to determine how random noise affects system function, using stochastic simulation or probabilistic model-checking methods.

• The Build phase will incorporate compilers that translate high-level programs to DNA code, together with a digital encoding of the biological experiments to be performed.

• The Test phase will execute biological experiments using lab robots in collaboration with technology partner Synthace, by using their award-winning Antha software, a powerful software platform built on Azure Internet of Things that gives biologists sophisticated control over lab hardware.

• The Learn phase will incorporate a range of methods for extracting biological knowledge from experimental data, including Bayesian inference, symbolic reasoning and deep learning methods, running at scale on Azure.

These phases will be integrated with a biological knowledge base that stores computational models representing the current understanding of the biological systems under consideration. As new experiments are performed, the knowledge base will be updated via automated learning.
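
The Design phase above mentions using a Continuous Time Markov Chain and stochastic simulation to determine how random noise affects system function. As a rough illustration of that idea, and not of anything in the Station B platform itself, here is a minimal Python sketch of the well-known Gillespie stochastic simulation algorithm applied to a single molecule that is produced at a constant rate and degraded in proportion to its count. The rate constants, the function name and the number of runs are all assumptions chosen for illustration.

```python
import random


def gillespie_birth_death(k_prod, k_deg, n0, t_end, rng):
    """Simulate one trajectory of a birth-death Continuous Time Markov
    Chain: molecules appear at rate k_prod and each existing molecule
    disappears at rate k_deg."""
    t, n = 0.0, n0
    times, counts = [t], [n]
    while True:
        rate_birth = k_prod
        rate_death = k_deg * n
        total = rate_birth + rate_death
        # The waiting time to the next event is exponentially distributed.
        t += rng.expovariate(total)
        if t > t_end:
            break
        # Choose which event fired, in proportion to its rate.
        if rng.random() < rate_birth / total:
            n += 1
        else:
            n -= 1
        times.append(t)
        counts.append(n)
    return times, counts


# Hypothetical parameters, chosen only for illustration.
rng = random.Random(42)
finals = []
for _ in range(200):
    _, counts = gillespie_birth_death(
        k_prod=10.0, k_deg=1.0, n0=0, t_end=10.0, rng=rng
    )
    finals.append(counts[-1])

mean = sum(finals) / len(finals)
variance = sum((x - mean) ** 2 for x in finals) / len(finals)
print(f"mean count = {mean:.2f}, variance = {variance:.2f}")
```

For this simple system the long-run average count is k_prod / k_deg, but each individual run wanders around that value. The spread across runs is the random noise that a deterministic flowchart of the same process would hide.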

For those of you not familiar with Microsoft's Visual Studio IDE, you can read about it and download a free community version of Visual Studio for your own personal use at:

https://visualstudio.microsoft.com/vs/

When you crank up Microsoft's Visual Studio, you will find all of the software tools that you need to develop new software or maintain old software. The Visual Studio IDE allows software developers to perform development and maintenance chores that took days or weeks back in the 1960s, 1970s and 1980s in a matter of minutes.

Figure 6 – Above is a screenshot of Microsoft's Visual Studio IDE. It assists developers with writing new software or maintaining old software by automating many of the labor-intensive and tedious chores of working on software. The intent of Station B is to do the same for biologists.

Before you download a free community version of Visual Studio be sure to watch some of the Visual Studio 2019 Launch videos at the bottom of the Visual Studio 2019 download webpage to get an appreciation for what a modern IDE can do.

Some of My Adventures with Programming Software in the 20th Century

But to really understand the significance of an IDE for biologists like Station B, we need to look back a bit to the history of writing software in the 20th century. Like the biological labwork of today, writing and maintaining software in the 20th century was very inefficient, time-consuming and tedious. For example, when I first learned to write Fortran code at the University of Illinois at Urbana in 1972, we were punching out programs on an IBM 029 keypunch machine, and I discovered that writing code on an IBM 029 keypunch machine was even worse than writing term papers on a manual typewriter. At least when you submitted a term paper with a few typos, your professor was usually kind enough not to abend your term paper right on the spot and give you a grade of zero. Sadly, I learned that such was not the case with Fortran compilers! The first thing you did was to write out your code on a piece of paper as best you could back at the dorm. The back of a large stack of fanfold printer paper output was ideal for such purposes. In fact, as a physics major, I first got hooked by software while digging through the wastebaskets of DCL, the Digital Computing Lab, at the University of Illinois looking for fanfold listings of computer dumps that were about a foot thick. I had found that the backs of thick computer dumps were ideal for working on lengthy problems in my quantum mechanics classes.

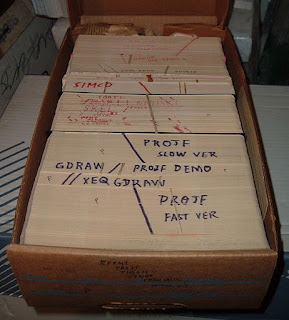

It paid to do a lot of desk-checking of your code back at the dorm before heading out to the DCL. Once you got to the DCL, you had to wait your turn for the next available IBM 029 keypunch machine. This was very much like waiting for the next available washing machine on a crowded Saturday morning at a laundromat. When you finally got to your IBM 029 keypunch machine, you would load it up with a deck of blank punch cards and then start punching out your program. You would first press the feed button to have the machine pull your first card from the deck of blank cards and register the card in the machine. Fortran compilers required code to begin in column 7 of the punch card so the first thing you did was to press the spacebar 6 times to get to column 7 of the card. Then you would try to punch in the first line of your code. If you goofed and hit the wrong key by accident while punching the card, you had to eject the bad card and start all over again with a new card. Structured programming had not been invented yet, so nobody indented code at the time. Besides, trying to remember how many times to press the spacebar for each new card in a block of indented code was just not practical. Pressing the spacebar 6 times for each new card was hard enough! Also, most times we proofread our card decks by flipping through them before we submitted the card deck. Trying to proofread indented code in a card deck would have been rather disorienting, so nobody even thought of indenting code. Punching up lots of comment cards was also a pain, so most people got by with a minimum of comment cards in their program deck.
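
For younger readers who have never seen a punch card, the column rules can be roughly sketched in Python. The sketch assumes the standard fixed-form Fortran card layout: columns 1-5 for an optional statement label, column 6 for a continuation mark, columns 7-72 for the statement itself, and columns 73-80 ignored by the compiler and often used for sequence numbers. The punch_card function and the little sample program are hypothetical and only for illustration.

```python
CARD_WIDTH = 80  # each card held 80 columns


def punch_card(statement, label="", continuation=False, sequence=""):
    """Return the 80-column image of one fixed-form Fortran card."""
    if len(label) > 5:
        raise ValueError("label must fit in columns 1-5")
    if len(statement) > 66:
        raise ValueError("statement must fit in columns 7-72")
    if len(sequence) > 8:
        raise ValueError("sequence must fit in columns 73-80")
    card = (
        label.rjust(5)                     # columns 1-5: statement label
        + ("1" if continuation else " ")   # column 6: continuation mark
        + statement.ljust(66)              # columns 7-72: the code itself
        + sequence.rjust(8)                # columns 73-80: ignored by compiler
    )
    assert len(card) == CARD_WIDTH
    return card


# A hypothetical five-card program deck.
deck = [
    punch_card("X = 2.0"),
    punch_card("Y = X * X"),
    punch_card("WRITE (6, 100) Y"),
    punch_card("FORMAT (F10.4)", label="100"),
    punch_card("STOP"),
    punch_card("END"),
]

for card in deck:
    print("|" + card + "|")
```

The six blanks that the function supplies in front of each unlabeled statement are the six presses of the spacebar described above.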

After you punched up your program on a card deck, you would then punch up your data cards. Disk drives and tape drives did exist in those days, but disk drive storage was incredibly expensive and tapes were only used for huge amounts of data. If you had a huge amount of data, it made sense to put it on a tape because if you had several feet of data on cards, there was a good chance that the operator might drop your data card deck while feeding it into the card reader. But usually, you ended up with a card deck that held the source code for your program and cards for the data to be processed too. You also punched up the JCL (Job Control Language) cards for the IBM mainframe that instructed the IBM mainframe to compile, link and then run your program all in one run. You then dropped your finalized card deck into the input bin so that the mainframe operator could load your card deck into the card reader for the IBM mainframe. After a few hours, you would then return to the output room of the DCL and go to the alphabetically sorted output bins that held all the jobs that had recently run. If you were lucky, in your output bin you found your card deck and the fanfolded computer printout of your last run. Unfortunately, you normally found that something had gone wrong with your job. Most likely you had a typo in your code that had to be fixed. If it was nighttime and the mistake in your code was an obvious typo, you probably still had time for another run, so you would get back in line for an IBM 029 keypunch machine and start all over again. You could then hang around the DCL working on the latest round of problems in your quantum mechanics course. However, machine time was incredibly expensive in those days and you had a very limited budget for machine charges. So if there was some kind of logical error in your code, many times you had to head back to the dorm for some more desk checking of your code before giving it another shot the next day.

Figure 7 - An IBM 029 keypunch machine like the one I first learned to program on at the University of Illinois in 1972.

Figure 8 - Each card could hold a maximum of 80 bytes. Normally, one line of code was punched onto each card.

Figure 9 - The cards for a program were held together into a deck with a rubber band, or for very large programs, the deck was held in a special cardboard box that originally housed blank cards. Many times the data cards for a run followed the cards containing the source code for a program. The program was compiled and linked in two steps of the run and then the generated executable file processed the data cards that followed in the deck.

Figure 10 - To run a job, the cards in a deck were fed into a card reader, as shown on the left above, to be compiled, linked, and executed by a million-dollar mainframe computer with a clock speed of about 750 kHz and about 1 MB of memory.

Figure 11 - The output of a run was printed on fanfolded paper and placed into an output bin along with your input card deck.

I finished up my B.S. in Physics at the University of Illinois at Urbana in 1973 and headed up north to complete an M.S. in Geophysics at the University of Wisconsin at Madison. Then from 1975 to 1979, I was an exploration geophysicist exploring for oil, first with Shell, and then with Amoco. I kept coding Fortran the whole time. In 1979, I made a career change into IT and spent about 20 years in development. For the last 17 years of my career, I was in IT operations, supporting middleware on WebSphere, JBoss, Tomcat, and ColdFusion. In 1979, when I became an IT professional in Amoco's IT department, I noticed that not much had changed with the way software was developed and maintained. Structured programming had arrived, so we were now indenting code and adding comment statements to the code, but I was still programming on cards. We were now using IBM 129 keypunch machines that were a little bit more sophisticated than the old IBM 029 keypunch machines. However, the coding process was still very much the same. I worked on code at my desk and still spent a lot of time desk checking the code. When I was ready for my next run, I would get into an elevator and travel down to the basement of the Amoco Building where the IBM mainframes were located. Then I would punch my cards on one of the many IBM 129 keypunch machines but this time with no waiting for a machine. After I submitted my deck, I would travel up 30 floors to my cubicle to work on something else. After a couple of hours, I would head down to the basement again to collect my job. On a good day, I could manage to get 4 runs in. But machine time was still incredibly expensive. If I had a $100,000 project, $25,000 went for programming time, $25,000 went to IT overhead like management and data management services costs, and a full $50,000 went to machine costs for compiles and test runs!

This may all sound very inefficient and tedious today, but it had once been even worse. When I first changed careers to become an IT professional in 1979, I used to talk to the old-timers about the good old days of IT. They told me that when the operators began their shift on an old-time 1950s vacuum tube computer, the first thing they did was to crank up the voltage on the vacuum tubes to burn out the tubes that were on their last legs. Then they would replace the burned-out tubes to start the day with a fresh machine. They also explained that the machines were so slow that they spent all day processing production jobs. Emergency maintenance work to fix production bugs was allowed at night, but new development was limited to one compile and test run per week! They also told me about programming the plugboards of electromechanical Unit Record Processing machines back in the 1950s by physically rewiring the plugboards. The Unit Record Processing machines would then process hundreds of punch cards per minute by routing the punch cards from machine to machine in processing streams.

Figure 12 – In the 1950s Unit Record Processing machines like this card sorter were programmed by physically rewiring a plugboard.

Figure 13 – The plugboard for a Unit Record Processing machine.

Using Software to Write Software

But all of this was soon to change. In the early 1980s, the IT department of Amoco switched to using TSO running on dumb IBM 3278 terminals to access IBM mainframes. We now used a full-screen editor called ISPF running under TSO on the IBM 3278 terminals to write code and submit jobs, and our development jobs usually ran in less than an hour. The source code for our software files was now on disk in partitioned datasets for easy access and updating. The data had moved to tapes and it was the physical process of mounting and unmounting tapes that now slowed down testing. For more on tape processing see: An IT Perspective on the Origin of Chromatin, Chromosomes and Cancer. Now I could run maybe 10 jobs in one day to test my code! However, machine costs were still incredibly high and still accounted for about 50% of project costs, so we still had to do a lot of desk checking to save on machine costs. At first, the IBM 3278 terminals appeared on the IT floor in "tube rows" like the IBM 029 keypunch machines of yore. But after a few years, each IT professional was given their own IBM 3278 terminal on their own desk. Finally, there was no more waiting in line for an input device!

Figure 14 - The IBM ISPF full-screen editor ran on IBM 3278 terminals connected to IBM mainframes in the late 1970s. ISPF was also a screen-based interface to TSO (Time Sharing Option) that allowed programmers to do things like copy files and submit batch jobs. ISPF and TSO running on IBM mainframes allowed programmers to easily reuse source code by doing copy/paste operations with the screen editor from one source code file to another. By the way, ISPF and TSO are still used today on IBM mainframe computers to support writing and maintaining software.

I found that the use of software to write and maintain software through the use of ISPF dramatically improved software development and maintenance productivity. It was like moving from typing term papers on manual typewriters to writing term papers on word processors. It probably improved productivity by a factor of at least 10. In the early 1980s, I was very impressed by this dramatic increase in productivity that was brought on by using software to write and maintain software. I was working on softwarephysics at the time, and the findings of softwarephysics led me to believe that what programmers really needed was an integrated software tool that would help to automate all of the tedious and repetitious activities of writing and maintaining software. This would allow programmers to overcome the effects of the second law of thermodynamics in a highly nonlinear Universe - for more on that see The Fundamental Problem of Software. But how?

BSDE - An Early Software IDE Founded On Biological Principles

I first began by slowly automating some of my mundane programming activities with ISPF edit macros written in REXX. In SoftwarePhysics I described how this automation activity slowly grew into the development of BSDE - the Bionic Systems Development Environment back in 1985 while in the IT department of Amoco. I am going to spend a bit of time on BSDE because I think it highlights the similarities between writing and maintaining computer software and biological software and the value of having an IDE for each.

BSDE slowly evolved into a full-fledged mainframe-based IDE, like Microsoft's modern Visual Studio, over a number of years at a time when software IDEs did not exist. During the 1980s, BSDE was used to grow several million lines of production code for Amoco by growing applications from embryos. For an introduction to embryology see Software Embryogenesis. The DDL statements used to create the DB2 tables and indexes for an application were stored in a sequential file called the Control File and performed the functions of genes strung out along a chromosome. Applications were grown within BSDE by turning their genes on and off to generate code. BSDE was first used to generate a Control File for an application by allowing the programmer to create an Entity-Relationship diagram using line printer graphics on an old IBM 3278 terminal.

Figure 15 - BSDE was run on IBM 3278 terminals, using line printer graphics, and in a split-screen mode. The embryo under development grew within BSDE on the top half of the screen, while the code generating functions of BSDE were used on the lower half of the screen to insert code into the embryo and to do compiles on the fly while the embryo ran on the upper half of the screen. Programmers could easily flip from one session to the other by pressing a PF key.

After the Entity-Relationship diagram was created, the programmer used a BSDE option to create a skeleton Control File with DDL statements for each table on the Entity-Relationship diagram. Each skeleton table had several sample columns with the syntax for various DB2 datatypes. The programmer then filled in the details for each DB2 table. When the first rendition of the Control File was completed, another BSDE option was used to create the DB2 database for the tables and indexes in the Control File. Another BSDE option was used to load the DB2 tables with test data from sequential files. Each DB2 table in the Control File was considered to be a gene. Next, a BSDE option was run to generate an embryo application. The embryo was a 10,000-line PL/1, COBOL, or REXX application that performed all of the primitive functions of the new application. The programmer then began to grow the embryo inside of BSDE in a split-screen mode. The embryo ran on the upper half of an IBM 3278 terminal and could be viewed in real time, while the code-generating options of BSDE ran on the lower half of the terminal. BSDE was then used to inject new code into the embryo's programs by reading the genes in the embryo's Control File while the embryo was running in the top half of the screen. BSDE had options to compile and link modified code on the fly while the embryo was still executing. This allowed for a tight feedback loop between the programmer and the application under development. In fact, BSDE programmers often sat with end-users and co-developed software on the fly in a very Agile manner. When the embryo had grown to full maturity, BSDE was used to create online documentation for the new application and to automate the install of the new application into production. Once in production, BSDE-generated applications were maintained by adding additional functions to their embryos.
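To make the skeleton Control File step concrete, here is a minimal Python sketch of the idea, not the original BSDE code. The sample column names, the datatype list, and the function name skeleton_control_file are all assumptions made up for illustration. The real BSDE option worked from an Entity-Relationship diagram rather than a plain list of entity names.

```python
# Hypothetical sample columns; the real BSDE skeleton columns are not documented here.
SAMPLE_COLUMNS = [
    ("SAMPLE_ID", "INTEGER NOT NULL"),
    ("SAMPLE_NAME", "VARCHAR(30)"),
    ("SAMPLE_AMOUNT", "DECIMAL(9,2)"),
    ("SAMPLE_DATE", "DATE"),
]


def skeleton_control_file(entities):
    """Return DDL text with one skeleton CREATE TABLE statement per entity."""
    statements = []
    for entity in entities:
        columns = ",\n".join(
            f"    {name} {datatype}" for name, datatype in SAMPLE_COLUMNS
        )
        statements.append(f"CREATE TABLE {entity.upper()} (\n{columns}\n);")
    return "\n\n".join(statements)


# Each CREATE TABLE statement plays the role of one gene that the
# programmer then edits by hand to fill in the real columns.
print(skeleton_control_file(["customer", "invoice"]))
```

The programmer would then replace the sample columns with the real ones, which is the "filling in the details" step described above.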

Since BSDE was written using the same kinds of software that it generated, I was able to use BSDE to generate code for itself. The next generation of BSDE was grown inside of its maternal release. Over a period of seven years, from 1985 to 1992, more than 1,000 generations of BSDE were generated, and BSDE slowly evolved in an Agile manner into a very sophisticated tool through small incremental changes. BSDE dramatically improved programmer efficiency by greatly reducing the number of buttons programmers had to push in order to generate software that worked.

Figure 15 - Embryos were grown within BSDE in a split-screen mode by transcribing and translating the information stored in the genes in the Control File for the embryo. Each embryo started out very much the same but then differentiated into a unique application based upon its unique set of genes.

Figure 16 – BSDE appeared as the cover story of the October 1991 issue of the Enterprise Systems Journal

BSDE had its own online documentation that was generated by BSDE. Amoco's IT department also had a class to teach programmers how to get started with BSDE. As part of the curriculum, Amoco had me prepare a little cookbook on how to build an application using BSDE:

BSDE – A 1989 document describing how to use BSDE - the Bionic Systems Development Environment - to grow applications from genes and embryos within the maternal BSDE software.

I wish that I could claim that I was smart enough to have sat down and thought up all of this stuff from first principles, but that is not what happened. It all just happened through small incremental changes in a very Agile manner over a very long period of time, and most of the design work was done subconsciously, if at all. Even the initial BSDE ISPF edit macros happened through serendipity. When I first started programming DB2 applications, I found myself copying the DDL CREATE TABLE statements from the file I used to create the DB2 database into the program that I was working on. This file, with the CREATE TABLE statements, later became the Control File used by BSDE to store the genes for an application. I would then go through a series of editing steps on the copied-in data to transform it from a CREATE TABLE statement into a DB2 SELECT, INSERT, UPDATE, or DELETE statement. I would do the same thing all over again to declare the host variables for the program. Being a lazy programmer, I realized that there was really no thinking involved in these editing steps and that an ISPF edit macro could do the job equally well, only very quickly and without error, so I went ahead and wrote a couple of ISPF edit macros to automate the process. I still remember the moment when it first hit me. For me, it was very much like the scene in 2001 - A Space Odyssey when the man-ape picks up a wildebeest thighbone and starts to pound the ground with it. My ISPF edit macros were doing the same thing that happens when the information in a DNA gene is transcribed and translated into a protein! A flood of biological ideas poured into my head over the next few days, because at last, I had a solution for my pent-up ideas about nonlinear systems and the second law of thermodynamics that were making my life so difficult as a commercial software developer. We needed to "grow" code - not write code!
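The mechanical nature of those editing steps can be shown with a small Python sketch. This is an illustration only, not the original REXX edit macro. The EMPLOYEE table, the function names, and the simplification of naming each host variable after its column are all assumptions.

```python
import re


def split_top_level(body):
    """Split on commas outside parentheses, so DECIMAL(9,2) stays in one piece."""
    parts, depth, current = [], 0, []
    for ch in body:
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
        if ch == "," and depth == 0:
            parts.append("".join(current).strip())
            current = []
        else:
            current.append(ch)
    parts.append("".join(current).strip())
    return [part for part in parts if part]


def parse_create_table(ddl):
    """Return (table_name, column_names) for one simple CREATE TABLE statement."""
    match = re.search(r"CREATE\s+TABLE\s+(\w+)\s*\((.*)\)", ddl,
                      re.IGNORECASE | re.DOTALL)
    if match is None:
        raise ValueError("no CREATE TABLE statement found")
    columns = [part.split()[0] for part in split_top_level(match.group(2))]
    return match.group(1), columns


def make_select(table, columns):
    """Build a SELECT ... INTO using one host variable per column."""
    hosts = ", ".join(":" + column for column in columns)
    return f"SELECT {', '.join(columns)} INTO {hosts} FROM {table}"


def make_insert(table, columns):
    """Build an INSERT that takes its values from the same host variables."""
    hosts = ", ".join(":" + column for column in columns)
    return f"INSERT INTO {table} ({', '.join(columns)}) VALUES ({hosts})"


# Hypothetical gene used only for this example.
DDL = """
CREATE TABLE EMPLOYEE (
    EMPNO    CHAR(6)      NOT NULL,
    LASTNAME VARCHAR(15)  NOT NULL,
    SALARY   DECIMAL(9,2)
)
"""

table, columns = parse_create_table(DDL)
print(make_select(table, columns))
print(make_insert(table, columns))
```

The sketch ignores table-level constraints, WHERE clauses, and the UPDATE and DELETE variants, but the point carries over: no step requires any thinking once the CREATE TABLE statement is in hand.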

BSDE began as a few simple ISPF edit macros running under ISPF edit. ISPF is the software tool that mainframe programmers still use today to interface with the IBM MVS and VM/CMS mainframe operating systems and contains an editor that can be greatly enhanced through the creation of edit macros written in REXX. I began BSDE by writing a handful of ISPF edit macros that could automate some of the editing tasks that a programmer needed to do when working on a program that used a DB2 database. These edit macros would read a Control File, which contained the DDL statements to create the DB2 tables and indexes. The CREATE TABLE statements in the Control File were the equivalent of genes, and the Control File itself performed the functions of a chromosome. For example, a programmer would retrieve a skeleton COBOL program, with the bare essentials for a COBOL/DB2 program, from a stock of reusable BSDE programs. The programmer would then position their cursor in the code to generate a DB2 SELECT statement and hit a PF key. The REXX edit macro would read the genes in the Control File and would display a screen listing all of the DB2 tables for the application. The programmer would then select the desired tables from the screen, and the REXX edit macro would then copy the selected genes to an array (mRNA). The mRNA array was then sent to a subroutine that inserted lines of code (tRNA) into the COBOL program. The REXX edit macro would also declare all of the SQL host variables in the DATA DIVISION of the COBOL program and would generate code to check the SQLCODE returned from DB2 for errors and take appropriate actions. A similar REXX ISPF edit macro was used to generate screens. These edit macros were also able to handle PL/1 and REXX/SQL programs. They could have been altered to generate the syntax for any programming language such as C, C++, or Java.

As time progressed, BSDE took on more and more functionality via ISPF edit macros. Finally, there came a point where BSDE took over and ISPF began to run under BSDE. This event was very similar to the emergence of the eukaryotic architecture for cellular organisms. BSDE consumed ISPF like the first eukaryotic cells that consumed prokaryotic bacteria and used them as mitochondria and chloroplasts. With continued small incremental changes, BSDE continued to evolve.
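The edit-macro flow described earlier in this section (read the genes, pick tables, copy them to the mRNA array, insert generated lines at the cursor) can be sketched in Python as follows. This is a loose modern analogy, not the original REXX. The two-table Control File, the function names, and the COBOL paragraph name SQL-ERROR-ROUTINE are hypothetical, and the gene parser assumes one statement per line with no commas inside datatypes.

```python
import re

# Hypothetical two-gene Control File used only for this example.
CONTROL_FILE = """\
CREATE TABLE EMPLOYEE (EMPNO CHAR(6) NOT NULL, LASTNAME VARCHAR(15));
CREATE TABLE DEPT (DEPTNO CHAR(3) NOT NULL, DEPTNAME VARCHAR(36));
"""


def read_genes(control_file):
    """Map each table name to its column names (one gene per table)."""
    genes = {}
    for name, body in re.findall(r"CREATE TABLE (\w+) \((.*?)\);", control_file):
        genes[name] = [column.split()[0] for column in body.split(",")]
    return genes


def transcribe(genes, selected_tables):
    """Copy the selected genes into a working list, the 'mRNA' array."""
    return [(table, genes[table]) for table in selected_tables]


def translate(mrna):
    """Turn each mRNA entry into embedded-SQL lines plus an SQLCODE check."""
    lines = []
    for table, columns in mrna:
        hosts = ", ".join(":" + column for column in columns)
        lines += [
            "    EXEC SQL",
            f"        SELECT {', '.join(columns)}",
            f"        INTO {hosts}",
            f"        FROM {table}",
            "    END-EXEC.",
            "    IF SQLCODE NOT = 0",
            "        PERFORM SQL-ERROR-ROUTINE",  # hypothetical paragraph name
            "    END-IF.",
        ]
    return lines


def insert_at_cursor(program, cursor, new_lines):
    """Return a copy of the program with new_lines inserted at the cursor row."""
    return program[:cursor] + new_lines + program[cursor:]


skeleton = ["PROCEDURE DIVISION.", "    GOBACK."]
mrna = transcribe(read_genes(CONTROL_FILE), ["DEPT"])
for line in insert_at_cursor(skeleton, 1, translate(mrna)):
    print(line)
```

Keeping transcription and translation as separate steps mirrors the description above: the same mRNA array could be handed to a different translate function to emit PL/1 or REXX/SQL instead of COBOL.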

I noticed that I kept writing the same kinds of DB2 applications, with the same basic body plan, over and over. At the time I did not know it, but these were primarily applications using the Model-View-Controller (MVC) design pattern. The idea of using design patterns had not yet been invented in computer science and IT, so my failing to take note of this is understandable. For more on the MVC design pattern please see Software Embryogenesis. From embryology, I got the idea of using BSDE to read the Control File for an application and to generate an "embryo" for the application based on its unique set of genes. The embryo would perform all of the things I routinely programmed over and over for a new application. Once the embryo was generated for a new application from its Control File, the programmer would then interactively "grow" code and screens for the application. With time, each embryo differentiated into a unique individual application in an Agile manner until the fully matured application was delivered into production by BSDE. At this point, I realized that I could use BSDE to generate code for itself, and that is when I started using BSDE to generate the next generation of BSDE. This technique really sped up the evolution of BSDE because I had a positive feedback loop going. The more powerful BSDE became, the faster I could add improvements to the next generation of BSDE through the accumulated functionality inherited from previous generations.

Embryos were grown within BSDE using an ISPF split-screen mode. The programmer would start up a BSDE session and run Option 4 – Interactive Systems Development from the BSDE Master Menu. This option would look for an embryo, and if it did not find one, it would offer to generate an embryo for the programmer. Once an embryo was implanted, the option would turn the embryo on and the embryo would run inside of the BSDE session with whatever functionality it currently had. The programmer would then split the screen with PF2 and another BSDE session would appear in the lower half of the terminal. The programmer could easily toggle control back and forth between the upper and lower sessions with PF9. The lower session of BSDE was used to generate code and screens for the embryo on the fly, while the embryo in the upper BSDE session was fully alive and functional. This was possible because BSDE generated applications that used ISPF Dialog Manager for screen navigation, which was an interpreted environment, so compiles were not required for screen changes. If your logic was coded in REXX, you did not have to do compiles for logic changes either, because REXX was an interpreted language. If PL/1 or COBOL were used for logic, BSDE had facilities to easily compile code for individual programs after a coding change, and ISPF Dialog Manager would simply load the new program executable when that part of the embryo was exercised. These techniques provided a tight feedback loop so that programmers and end-users could immediately see the effects of a change as the embryo grew and differentiated.
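The reason a running embryo could pick up new code is late binding: the program to run was looked up at the moment that part of the application was exercised. The toy Python class below illustrates only that principle. The Embryo class and its install and exercise methods are invented for this sketch and do not correspond to any ISPF Dialog Manager API.

```python
class Embryo:
    """Toy host that looks up each program by name at the moment it is called."""

    def __init__(self):
        self.programs = {}

    def install(self, name, handler):
        # Stands in for placing a freshly compiled program where the host finds it.
        self.programs[name] = handler

    def exercise(self, name, *args):
        # Late binding: whichever version is installed right now gets run.
        return self.programs[name](*args)


embryo = Embryo()
embryo.install("list_customers", lambda: "version 1 screen")
print(embryo.exercise("list_customers"))

# Replace the program while the embryo object is still alive.
embryo.install("list_customers", lambda: "version 2 screen")
print(embryo.exercise("list_customers"))
```

Because nothing caches the old handler, the second call sees the replacement without restarting the host, which is the property that gave BSDE its tight feedback loop.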

Unfortunately, the early 1990s saw the downfall of BSDE. The distributed computing model hit with full force, and instead of deploying applications on mainframe computers, we began to distribute applications across a network of servers and client PCs. Since BSDE generated applications for mainframe computers, it could not compete, and BSDE quickly went extinct in the minds of Amoco IT management. I was left with just a theory and no tangible product, and it became much harder to sell softwarephysics at that point. So after a decade of being considered a little "strange", I decided to give it a rest, and I began to teach myself how to write C and C++ software for Unix servers and PCs. I started out with the Microsoft C/C++ C7 compiler, which was a DOS application for writing C/C++ DOS applications, but I converted to Microsoft Visual C++ when it first came out in 1993. Microsoft Visual C++ was Microsoft's first real IDE and the predecessor to Microsoft's modern Visual Studio IDE. Visual C++ was so powerful that I knew that a PC version of BSDE could never compete, so I abandoned all thoughts of producing a PC version of BSDE.

Conclusion

I think that the impact that software development IDEs had on software development and maintenance in the 20th century strongly suggests that Microsoft's Project B, which aims to build an IDE for biological programming, will provide a similarly dramatic leap in biological programming productivity in the 21st century. That leap would allow us to harness the tremendous power that self-replicating biological systems can provide.

Comments are welcome at scj333@sbcglobal.net

To see all posts on softwarephysics in reverse order go to:

https://softwarephysics.blogspot.com/

Regards,

Steve Johnston