In 1979, I made a career change from exploration geophysicist, exploring for oil with Amoco, to IT professional in Amoco’s IT department. At the time, I figured that if you could apply physics to geology, why not apply physics to software? That is when I first started working on softwarephysics to help me cope with the daily mayhem of life in IT. I had taken only one computer science class in college, back in 1972, so in that sense I was starting from scratch in this new career. However, because I had been continuously programming geophysical models for my thesis and for oil companies since then, I did have about seven years of programming experience to start with. When I made this career change into IT, I quickly realized that all IT jobs essentially boiled down to simply pushing buttons. All you had to do was push the right buttons, in the right sequence, at the right time, and with nearly zero defects. How hard could that be? Well, as we all know, that is indeed a very difficult thing to do.

When I first started programming in 1972, as a physics major at the University of Illinois in Urbana, I was pushing the buttons on an IBM 029 keypunch machine to feed cards into a million-dollar mainframe computer with a single CPU running at a clock speed of about 750 kHz and with about 1 MB of memory.

Figure 1 - An IBM 029 keypunch machine like the one I first learned to program on at the University of Illinois in 1972.

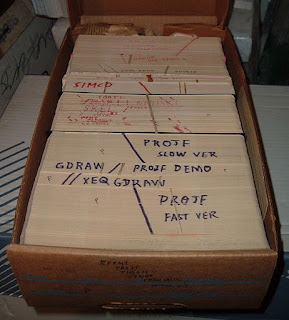

Figure 2 - Each card could hold a maximum of 80 bytes. Normally, one line of code or one 80-byte data record was punched onto each card.

Figure 3 - The cards for a program were held together into a deck with a rubber band, or for very large programs, the deck was held in a special cardboard box that originally housed blank cards. Many times the data cards for a run followed the cards containing the source code for a program. The program was compiled and linked in two steps of the run and then the generated executable file processed the data cards that followed in the deck.

Figure 4 - To run a job, the cards in a deck were fed into a card reader, as shown on the left above, to be compiled, linked, and executed by a million-dollar mainframe computer with a clock speed of about 750 kHz and about 1 MB of memory.

Now I push these very same buttons for a living on a $500 laptop with 2 CPUs running at a clock speed of 2.5 GHz and with 8 GB of memory. So hardware has improved by a factor of over 10 million since 1972.
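The post does not spell out how the "factor of over 10 million" was computed, so the short Python sketch below shows one plausible reading of the quoted figures. It assumes that raw compute can be approximated as clock speed times number of CPUs, which ignores instructions per cycle, caches, and everything else that makes a modern CPU faster per clock tick. On that assumption, compute alone improves by about 6,700 times and memory by 8,192 times, while compute per dollar improves by roughly 13 million times.

```python
# Hardware figures quoted in the text: 1972 mainframe vs. a $500 laptop.
MAINFRAME = {"clock_hz": 750e3, "cpus": 1, "memory_bytes": 1 * 2**20, "price_usd": 1_000_000}
LAPTOP = {"clock_hz": 2.5e9, "cpus": 2, "memory_bytes": 8 * 2**30, "price_usd": 500}


def improvement(old, new):
    """Ratios of new to old hardware for compute, memory, and compute per dollar.

    Assumption: compute ~ clock speed x number of CPUs.
    """
    old_compute = old["clock_hz"] * old["cpus"]
    new_compute = new["clock_hz"] * new["cpus"]
    return {
        "compute": new_compute / old_compute,
        "memory": new["memory_bytes"] / old["memory_bytes"],
        "compute_per_dollar": (new_compute / new["price_usd"]) / (old_compute / old["price_usd"]),
    }


ratios = improvement(MAINFRAME, LAPTOP)
for name, value in ratios.items():
    print(f"{name:>18}: {value:,.0f}x")
```

Which single number best summarizes "hardware improvement" is a judgment call; the per-dollar ratio is simply the one of these three that lands above 10 million.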

Figure 5 - Now I push the very same buttons for a living that I once pushed on IBM 029 keypunch machines. The only difference is that I now push them on a $500 machine with 2 CPUs running at a clock speed of 2.5 GHz and with 8 GB of memory.

Now, how much progress have we seen in our ability to develop, maintain, and support software over this very same period of time? I would estimate that our ability to develop, maintain, and support software has increased by only a factor of about 10 – 100 since 1972, and I think that I am being very generous here. In truth, it is probably much closer to a factor of 10 than to a factor of 100. Here is a simple thought experiment. Imagine assigning two very good programmers the same task of developing some software to automate a very simple business function. Imagine that one programmer is a 1972 COBOL programmer using an IBM 029 keypunch machine, while the other is a 2013 Java programmer using the latest Java IDE (Integrated Development Environment software) to write and debug a Java program. Now set them both to work. Hopefully, the Java programmer would win the race, but by how much? I think that the 2013 Java programmer, armed with all of the latest tools of IT, would be quite pleased if she could finish the project 10 times faster than the 1972 programmer cutting cards on an IBM 029 keypunch machine. This would indeed be a very revealing finding. Why has the advancement of hardware outpaced the advancement of software by nearly a factor of a million since 1972? How can we possibly account for a difference of about six orders of magnitude between the advancement of hardware and the advancement of software over a span of 40 years? Clearly, two very different types of processes must be at work to account for such a dramatic disparity.
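The "six orders of magnitude" follows from simple division. Here is a minimal sketch that takes the hardware factor of 10 million from the text and tries both ends of the estimated 10 – 100 range for software; the function name is just an illustrative choice.

```python
import math

HARDWARE_FACTOR = 10_000_000   # "a factor of over 10 million" (from the text)


def gap_in_orders_of_magnitude(hardware_factor, software_factor):
    """How many powers of ten separate hardware progress from software progress."""
    return math.log10(hardware_factor / software_factor)


for software_factor in (10, 100):   # the text's estimated range for software
    gap = gap_in_orders_of_magnitude(HARDWARE_FACTOR, software_factor)
    print(f"software improved {software_factor}x -> hardware ahead by about {gap:.0f} orders of magnitude")
```

A factor of 10 for software leaves a gap of a million (six orders of magnitude); even the generous factor of 100 still leaves five.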

My suggestion would be that hardware advanced so quickly because it was designed using science, while software was left to evolve more or less on its own. You see, nobody really sat back and designed the very complex worldwide software architecture that we see today; it just sort of evolved on its own through small incremental changes brought on by many millions of independently acting programmers, through a process of trial and error. In this view, software itself can be thought of as a form of self-replicating information, trying to survive by replicating before it disappears into extinction, and evolving over time on its own. Software is certainly not alone in this regard. There are currently three forms of self-replicating information on the planet – the genes, memes, and software, with software rapidly becoming the dominant form of self-replicating information on the Earth (see A Brief History of Self-Replicating Information for more details).

The evolution of all three forms of self-replicating information seems to be driven primarily by two factors: the second law of thermodynamics and nonlinearity. Before diving into the second law of thermodynamics again, let us first review the first law of thermodynamics, which describes the conservation of energy. Energy can be neither created nor destroyed; it can only be transformed from one form into another, and none is created or lost in the process. For example, when you drive to work, you convert the chemical energy in gasoline into heat and motion. That chemical energy originally came from sunlight that was captured by single-celled life forms many millions of years ago. These organisms were later deposited in shallow-sea mud that subsequently turned into shale, as the carbon-rich mud was pushed down, compressed, and heated by the accumulation of additional overlying sediments. The heat and pressure at depth cooked the shale enough to turn the remains of the single-celled organisms into oil, which later migrated into overlying sandstone and limestone reservoir rock. When the gasoline is burned in your car engine, about 85% of the energy is immediately turned into waste heat, leaving about 15% to be turned into the kinetic energy of your moving car. By the end of your trip, this remaining 15% has also been turned into waste heat by wind resistance, rolling friction, and your brake linings. So when all is said and done, the solar energy that was released by the Sun many millions of years ago finally ends up as heat energy with 100% efficiency; none of the energy is lost during any step of the process. It is just as if that ancient sunlight had only now fallen upon the asphalt parking lot where you work and heated its surface (see A Lesson From Steam Engines, Computer Science as a Technological Craft, and Entropy - the Bane of Programmers for more details).
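The bookkeeping in the driving example can be written out as a tiny ledger. This is a minimal sketch using the rough 85% / 15% split quoted above; the 100 MJ starting amount is a hypothetical round number, and exact fractions are used so that no rounding error muddies the point that every joule is accounted for.

```python
from fractions import Fraction


def trip_energy_ledger(chemical_energy):
    """Follow the chemical energy of the gasoline burned on one trip.

    Uses the rough split quoted in the text: about 85% becomes waste heat in
    the engine and about 15% becomes kinetic energy of the moving car, which
    wind resistance, rolling friction, and the brakes later turn into heat.
    """
    engine_heat = chemical_energy * Fraction(85, 100)
    to_motion = chemical_energy * Fraction(15, 100)
    # The car ends the trip parked, so all of its kinetic energy is now heat.
    friction_and_brake_heat = to_motion
    return {
        "engine_heat": engine_heat,
        "kinetic_energy": to_motion,
        "total_heat": engine_heat + friction_and_brake_heat,
    }


ledger = trip_energy_ledger(Fraction(100))   # hypothetical 100 MJ of gasoline energy
for item, amount in ledger.items():
    print(f"{item:>15}: {float(amount):6.1f} MJ")
```

The total heat equals the energy that went in: conservation of energy means the whole 100 MJ ends up as heat, even though only 15 MJ ever did any useful work moving the car.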

There is a very similar effect in place for information. It turns out that information, too, can be neither created nor destroyed; it can only be converted from one form of information into another. It is now thought that information, like energy, is conserved because all of the current theories of physics are both deterministic and reversible. By deterministic, we mean that given the initial state of a system, a deterministic theory guarantees that one, and only one, outcome will result. Similarly, reversible theories describe interactions between objects in terms of reversible processes. A reversible process is one that can be run backwards in time to return the Universe to the state it was in before the process began, as if the process had never happened in the first place. For example, the collision between two perfectly elastic balls at low energy is a reversible process that can be run backwards in time to return the Universe to its original state because Newton’s laws of motion are reversible. Knowing the position and the momentum (mass times velocity, which combines speed and direction) of each ball at any given time, we can use Newton’s laws of motion to predict where each ball will go after a collision between the two, and also where each ball came from before the collision. For a process to qualify as reversible under such deterministic and reversible physical laws, the information required to return the system to its initial state must never be destroyed, no matter how many collisions occur.

Figure 6 - The collision between two perfectly elastic balls at low energy is a reversible process because Newton’s laws of motion are deterministic and reversible.
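Reversibility can be checked directly with a small simulation. The sketch below is an illustration under stated assumptions rather than anything from the original post: two point-like balls on a line with hypothetical masses of 1 and 2, a perfectly elastic collision computed from conservation of momentum and kinetic energy, and exact rational arithmetic so that no information is lost to floating-point rounding. Running the system forward, flipping every velocity, and running it forward again for the same time brings it back to exactly where it started.

```python
from dataclasses import dataclass
from fractions import Fraction

# Hypothetical masses for the two balls (exact rationals avoid rounding error).
M1, M2 = Fraction(1), Fraction(2)


@dataclass(frozen=True)
class State:
    """Positions and velocities of two point-like balls on a line (ball 1 on the left)."""
    x1: Fraction
    v1: Fraction
    x2: Fraction
    v2: Fraction


def collide(v1, v2):
    """Perfectly elastic 1-D collision: conserves both momentum and kinetic energy."""
    total = M1 + M2
    new_v1 = ((M1 - M2) * v1 + 2 * M2 * v2) / total
    new_v2 = ((M2 - M1) * v2 + 2 * M1 * v1) / total
    return new_v1, new_v2


def evolve(s, duration):
    """Advance the system by `duration`, handling at most one collision.

    After an elastic collision the balls move apart, so two balls on a line
    can collide at most once in free space.
    """
    remaining = duration
    if s.v1 > s.v2:  # ball 1 is catching up with ball 2
        t_hit = (s.x2 - s.x1) / (s.v1 - s.v2)
        if t_hit <= remaining:
            v1, v2 = collide(s.v1, s.v2)
            s = State(s.x1 + s.v1 * t_hit, v1, s.x2 + s.v2 * t_hit, v2)
            remaining -= t_hit
    return State(s.x1 + s.v1 * remaining, s.v1, s.x2 + s.v2 * remaining, s.v2)


def reverse(s):
    """Time reversal: keep the positions, flip every velocity."""
    return State(s.x1, -s.v1, s.x2, -s.v2)


start = State(Fraction(0), Fraction(2), Fraction(10), Fraction(-3))
after = evolve(start, Fraction(5))                          # run forward through the collision
recovered = reverse(evolve(reverse(after), Fraction(5)))   # run the movie backwards
print(after)
print("initial state recovered:", recovered == start)
```

The final state still carries all the information needed to reconstruct the initial one, which is exactly what makes the process reversible.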

The fact that all of the current theories of physics, including quantum mechanics, are both deterministic and reversible is a heavy blow to philosophy, because it means that once a universe such as ours buds off from the multiverse, all the drama is already over: all that will happen is already foreordained to happen, so there is no free will to worry about. Luckily, we do have the illusion that free will exists, because our Universe is largely composed of nonlinear systems, and chaos theory has shown that even though nonlinear systems behave in a deterministic manner, they are not predictable, because very small changes to the initial conditions of a nonlinear system can produce huge changes in its final outcome. This chaotic behavior makes the Universe appear random to us and gives us a false sense of security that all is not already foreordained (see Software Chaos for more on this). Also, remember that all of the current theories of physics are only effective theories. An effective theory is an approximation of reality that holds true only over a certain restricted range of conditions and provides only a certain depth of understanding of the problem at hand. Effective theories are not the fundamental “laws” of the Universe, but they make exceedingly good predictions of the behavior of physical systems over the limited ranges in which they apply, and we keep coming up with better ones all the time. For example, if the above collision between two perfectly elastic balls were conducted at a very high energy, meaning that the balls were traveling close to the speed of light, Newton’s laws of motion would no longer work, and we would need to use another effective theory, the special theory of relativity, to perform the calculations. However, we know that the special theory of relativity is also just an approximation because it cannot explain the behavior of very small objects like the electrons in atoms, for which we use another effective theory called quantum mechanics. So perhaps one day we will come up with a more complete effective theory of physics that is not deterministic and reversible. It’s just that all of the ones we have come up with so far are deterministic and reversible.
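Deterministic but unpredictable behavior is easy to demonstrate. The sketch below swaps in the logistic map x → r·x·(1 − x) at r = 4, a standard textbook example of a chaotic system that the original post does not itself use. The same starting value always produces exactly the same trajectory, yet nudging the starting value by one part in ten billion produces a completely different trajectory within a few dozen steps.

```python
def logistic_trajectory(x0, steps, r=4.0):
    """Iterate the logistic map x -> r * x * (1 - x): fully deterministic, yet chaotic at r = 4."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


def first_divergence(a, b, threshold=0.1):
    """Index of the first step where two trajectories differ by more than `threshold`."""
    return next((i for i, (x, y) in enumerate(zip(a, b)) if abs(x - y) > threshold), None)


base = logistic_trajectory(0.2, 100)
nudged = logistic_trajectory(0.2 + 1e-10, 100)   # change the initial condition by 1e-10
print("same input, same output:", base == logistic_trajectory(0.2, 100))
print("trajectories differ by more than 0.1 after step", first_divergence(base, nudged))
```

Because any real measurement of an initial condition has finite precision, a system like this is predictable only over a short horizon, even though nothing random happens anywhere in the code.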

In Entropy - the Bane of Programmers, we went on to describe the second law of thermodynamics as the propensity of isolated macroscopic systems to run down or depreciate with time, as first proposed by Rudolf Clausius in 1850. Clausius observed that the Universe is constantly smoothing out differences. For example, his second law of thermodynamics proposed that spontaneous changes tend to smooth out differences in temperature, pressure, and density. Hot objects tend to cool off, tires under pressure leak air, and the cream in your coffee will stir itself if you are patient enough. Clausius defined the term entropy to measure this amount of smoothing-out or depreciation of a macroscopic system, and, with the second law of thermodynamics, proposed that the entropy of an isolated system always increases whenever a spontaneous change is made. In The Demon of Software, we drilled down deeper still and explored Ludwig Boltzmann’s statistical mechanics, developed in 1872, in which he viewed entropy from the perspective of the microstates that a large number of molecules could exist in. For any given macrostate of a gas in a cylinder, Boltzmann defined the entropy of the system in terms of the number N of microstates that could produce the observed macrostate as:

S = k ln(N)

where k is Boltzmann’s constant.

For example, air is about 78% nitrogen, 21% oxygen, and 1% other gases. The macrostate of finding all the oxygen molecules on one side of a container and all of the nitrogen molecules on the other side has a much lower number of microstates N than the macrostate of finding the nitrogen and oxygen thoroughly mixed together, so the entropy of a uniform mixture is much greater than the entropy of finding the oxygen and nitrogen separated. We used poker to clarify these concepts with the hope that you would come to the conclusion that the macrostate of going broke in Las Vegas had many more microstates than the macrostate of breaking the bank at one of the casinos.
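Counting microstates makes the comparison concrete. This is a minimal sketch of a toy model, not real air: a container with 10 cells on each side, filled with 10 oxygen and 10 nitrogen molecules (a 50/50 mix chosen for simplicity), where molecules of the same gas are treated as indistinguishable. Entropy is reported in units of k, so S/k = ln(N).

```python
import math

CELLS_PER_SIDE = 10   # toy container: 10 cells on the left, 10 on the right
N_OXYGEN = 10         # 10 O2 and 10 N2 molecules fill the 20 cells exactly


def microstates(oxygen_on_left):
    """Number of microstates for the macrostate "this many oxygen molecules on the left".

    Molecules of the same gas are treated as indistinguishable, so a microstate
    is just a choice of which cells hold oxygen; all other cells hold nitrogen.
    """
    oxygen_on_right = N_OXYGEN - oxygen_on_left
    return math.comb(CELLS_PER_SIDE, oxygen_on_left) * math.comb(CELLS_PER_SIDE, oxygen_on_right)


def entropy_over_k(n):
    """Boltzmann entropy in units of k: S / k = ln(N)."""
    return math.log(n)


for o_left, label in [(10, "separated (all O left, all N right)"), (5, "mixed (5 O on each side)")]:
    n = microstates(o_left)
    print(f"{label}: N = {n:,}, S/k = {entropy_over_k(n):.2f}")
```

Even with only 20 molecules, the evenly mixed macrostate has 63,504 microstates against a single one for the fully separated macrostate; with the roughly 10^22 molecules in a liter of air, the imbalance becomes overwhelming.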

We also discussed the apparent paradox of Maxwell’s Demon and how Leon Brillouin solved the mystery with his formulation of information as the difference between the initial and final entropies of a system after a determination of the state of the system had been made.

∆I = Si - Sf

where Si is the initial entropy and Sf is the final entropy.
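Combining Brillouin's definition with Boltzmann's S = k ln(N) gives ∆I = k ln(Ni / Nf) for a measurement that narrows Ni possible microstates down to Nf. Here is a minimal sketch with two hypothetical measurements; the helper names are illustrative choices, and k is the exact SI value of Boltzmann's constant.

```python
import math

K_B = 1.380649e-23   # Boltzmann's constant in J/K (exact in the 2019 SI)


def boltzmann_entropy(n_microstates):
    """S = k ln(N)"""
    return K_B * math.log(n_microstates)


def information_gained(n_initial, n_final):
    """Brillouin's definition: delta I = Si - Sf, with each entropy given by S = k ln(N)."""
    return boltzmann_entropy(n_initial) - boltzmann_entropy(n_final)


# Hypothetical measurements that narrow N possible microstates down to one.
print(f"which half of a box a molecule is in:    {information_gained(2, 1):.3e} J/K")
print(f"which card is on top of a shuffled deck: {information_gained(52, 1):.3e} J/K")
```

Learning which of two equally likely states a system is in is worth k ln 2, about 9.57e-24 J/K, which is the entropy equivalent of one bit of information.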

Since the second law of thermodynamics demands that the entropy of the Universe must constantly increase, it also implies that the total amount of useful information in the Universe must constantly decrease. A low-entropy macrostate, with very few contributing microstates, will always be much less likely to turn up than a high-entropy macrostate with a large number of microstates. That is why full houses in poker and bug-free programs are rare, while single pairs and slightly buggy programs are much more common. So low-entropy forms of energy and information will always be much rarer in the Universe than high-entropy forms. What this means is that the second law of thermodynamics demands that whenever we do something, like push a button, the total amount of useful energy and useful information in the Universe must decrease! Of course, the local amount of useful energy and information in a system can always be increased with a little work. For example, you can charge up your cell phone and increase the amount of useful energy in it, but in doing so, you will also create a great deal of waste heat when the coal that generated the electricity is burned, because not all of the energy in the coal can be converted into electricity. Similarly, if you rifle through a deck of cards, you can always manage to deal yourself a full house, but in doing so, you will still decrease the total amount of useful information in the Universe. If you later shuffle your full house back into the deck, your full house still exists, but in a disordered, shuffled state with increased entropy. The information necessary to reverse the shuffling process cannot be destroyed, so your full house could always be reclaimed by exactly reversing the shuffle. It is just not a very practical thing to do, and that is why it appears that the full house has been destroyed by the shuffle.
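The poker comparison can be made exact by counting hands. In this sketch, each specific 5-card hand plays the role of a microstate and a hand category such as "full house" plays the role of a macrostate; the counts are standard combinatorics, not figures taken from the original post.

```python
from math import comb

TOTAL_HANDS = comb(52, 5)   # every possible 5-card hand is one microstate


def full_house_hands():
    # Rank and 3 of 4 suits for the three of a kind, then a different
    # rank and 2 of 4 suits for the pair.
    return 13 * comb(4, 3) * 12 * comb(4, 2)


def one_pair_hands():
    # Rank and 2 of 4 suits for the pair, then 3 other distinct ranks
    # (so no trips, two pair, or full house), each in any of 4 suits.
    return 13 * comb(4, 2) * comb(12, 3) * 4 ** 3


for name, count in [("full house", full_house_hands()), ("one pair", one_pair_hands())]:
    print(f"{name:>10}: {count:>9,} of {TOTAL_HANDS:,} hands ({count / TOTAL_HANDS:.4%})")
```

A single pair has about 293 times as many microstates as a full house, which is why a random deal almost always lands in the higher-entropy macrostate.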

The actions of the second law naturally lead to both the mutation and the natural selection of self-replicating information. This is because the second law guarantees that errors, or mutations, will always occur in all copying processes, and it also limits the supply of the low-entropy resources, like a useful source of energy, that all forms of self-replicating information require to replicate. A limited resource base naturally leads to the selection pressures of natural selection because there simply are not enough resources to go around for all of its consumers. Any form of self-replicating information that is better adapted to its environment will have a better chance of obtaining the resources it needs to replicate and will, therefore, have a greater chance of passing that trait on to its offspring. Since all forms of self-replicating information are just one generation away from extinction, natural selection plays a very significant role in their evolution.
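Copying errors plus a limited resource base are enough to drive adaptation, as this toy model sketches. Everything in it is a hypothetical simplification, not a model from the original post: genomes are 20-bit strings, "better adapted" simply means having more 1-bits, every replicator makes two imperfect copies of itself each generation, and only the 50 best-adapted replicators (parents and offspring together) find the resources to persist.

```python
import random

GENOME_LENGTH = 20      # hypothetical genome size, in bits
CAPACITY = 50           # limited resource base: only 50 replicators can persist
MUTATION_RATE = 0.01    # hypothetical per-bit copying-error probability


def fitness(genome):
    """Toy assumption: each 1-bit is a small advantage in the environment."""
    return sum(genome)


def copy_with_errors(genome, rng):
    """Imperfect copying: every bit has a small chance of being flipped."""
    return [bit ^ 1 if rng.random() < MUTATION_RATE else bit for bit in genome]


def run(generations, seed=0):
    """Return the best fitness in the population after each generation."""
    rng = random.Random(seed)
    population = [[0] * GENOME_LENGTH for _ in range(CAPACITY)]
    best_by_generation = []
    for _ in range(generations):
        # Every replicator makes two imperfect copies of itself...
        offspring = [copy_with_errors(g, rng) for g in population for _copy in range(2)]
        # ...but the resource base only supports CAPACITY of them, so the
        # best adapted of parents and offspring survive (truncation selection).
        pool = sorted(population + offspring, key=fitness, reverse=True)
        population = pool[:CAPACITY]
        best_by_generation.append(fitness(population[0]))
    return best_by_generation


history = run(200)
print("best fitness after first and last generation:", history[0], history[-1])
```

Most copying errors are harmful or neutral, but selection discards the harmful ones and keeps the rare improvements, so adaptation accumulates even though no individual copy is "designed".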

The fact that the Universe is largely nonlinear in nature, meaning that very small changes to the initial state of a system can cause very large changes to its final state as it moves through time, also means that small copying errors usually lead to disastrous, and often lethal, consequences for all forms of self-replicating information (see Software Chaos for more details). The idea that the second law of thermodynamics and nonlinearity are the fundamental problems facing all forms of self-replicating information, and are therefore the driving forces behind evolution, is covered in greater detail in The Fundamental Problem of Software.

In addition to the second law of thermodynamics and nonlinearity, the evolution of software over the past 70 years has revealed several additional driving forces that operate one level above these two fundamental ones. Since software is the only form of self-replicating information for which we have a good historical record, I think that the evolutionary history of software provides a wonderful model for researchers studying the origin and early evolution of life on Earth, and also for those searching for life elsewhere in the field of astrobiology. So let us outline those forces below for their benefit.

The Additional Driving Forces of Evolution

1. Darwin’s concept of evolution by means of inheritance and innovation honed by natural selection has certainly played the greatest role in the evolution of software over the past 70 years (2.2 billion seconds). All software evolves by means of these processes. In softwarephysics, we extend the concept of natural selection to include all selection processes that are not supernatural in nature. So a programmer making a selection decision after testing his latest iteration of code is considered to be a part of nature, and is, therefore, a form of natural selection. Actually, the selection process for code is really performed by a number of memes residing within the mind of a programmer. Software is the most recent form of self-replicating information on the planet, and it is currently exploiting the memes of various meme-complexes on the planet to survive. Like all of its predecessors, software first emerged as a pure parasite, in May of 1941 on Konrad Zuse’s Z3 computer. Initially, software could not transmit memes; it could only perform calculations, like a very fast adding machine, so it was a pure parasite. But then the business and military meme-complexes discovered that software could be used to store and transmit memes, and software quickly entered into a parasitic/symbiotic relationship with the memes. Today, software has formed strong parasitic/symbiotic relationships with just about every meme-complex on the planet. Nowadays, the only way memes can spread from mind to mind without the aid of software is when you speak directly to another person next to you. Even if you write a letter by hand, the moment you drop it into a mailbox, it will immediately fall under the control of software. The poor memes in our heads have become Facebook and Twitter addicts (see How Software Evolves for more details).

2. Stuart Kauffman’s concept of "order for free", such as the emergent order found within a phospholipid bilayer that is simply seeking to minimize its free energy, which forms the foundation upon which all biological membranes are built, or the formation of a crystalline lattice out of a melt. The latter kind of order led to Alexander Graham Cairns-Smith’s theory, first proposed in 1966, that there was a clay microcrystal precursor to RNA (see The Origin of Software the Origin of Life and Programming Clay for more details).

3. Lynn Margulis’s discovery that the formation of parasitic/symbiotic relationships between organisms is a very important driving force of evolution (see Software Symbiogenesis for more details).

4. Stephen Jay Gould’s concept of exaptation – the co-opting of a structure that originally evolved for one purpose to serve another. We do that all the time with the reuse of computer code (see When Toasters Fly for more details).

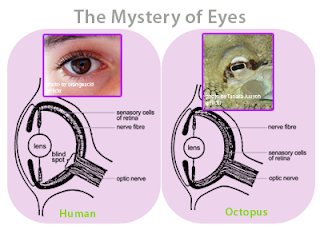

5. Simon Conway Morris’s contention that convergence is a major driving force in evolution, where organisms in different evolutionary lines of descent evolve similar solutions to solve the same problems, as outlined in his book Life’s Solution (2003). We have seen this throughout the evolutionary history of software architecture, as software has repeatedly recapitulated the architectural design history of living things on Earth. The very lengthy period of unstructured code (1941 – 1972) was similar to the very lengthy dominance of the prokaryotic architecture of early life on Earth. This was followed by the dominance of structured programming (1972 – 1992), which was very similar to the rise of eukaryotic single-celled life. Object-oriented programming took off next, primarily with the arrival of Java in 1995. Object-oriented programming is the implementation of multicellular organization in software. Finally, we are currently going through a Cambrian explosion in IT with the SOA (Service Oriented Architecture) revolution, where consumer objects (somatic cells) make HTTP SOAP calls on service objects (organ cells) residing within organ service JVMs to provide webservices (see the SoftwarePaleontology section of SoftwareBiology for more details).

Figure 7 - The eye of a human and the eye of an octopus are nearly identical in structure, but evolved totally independently of each other. As Daniel Dennett pointed out, there are only a certain number of Good Tricks in Design Space and natural selection will drive different lines of descent towards them.

Figure 8 – Computer simulations reveal how a camera-like eye can easily evolve from a simple light-sensitive spot on the skin.

Figure 9 – We can actually see this evolutionary history unfold in the evolution of the camera-like eye by examining modern-day mollusks such as the octopus.

6. Peter Ward’s observation that mass extinctions are key to clearing out ecological niches through dramatic environmental changes, which also open up other niches for exploitation. We have seen this throughout the evolutionary history of software as well. The distributed computing revolution of the early 1990s was a good example, when people started hooking up cheap PCs into LANs and WANs and moved to a client/server architecture that threatened the existence of the old mainframe software. The Internet explosion of 1995 also opened a whole new environmental niche for web-based software, and today we are going through a wireless mobile computing revolution that is opening entirely new environmental niches for software and that might threaten the old stationary PC software we use today (see How to Use Your IT Skills to Save the World and Is Self-Replicating Information Inherently Self-Destructive? for more details).

7. The dynamite effect, where a new software architectural element spontaneously arises out of nothing, but its significance is not recognized at the time, and then it just languishes for many hundreds of millions of seconds, hiding in the daily background noise of IT. And then just as suddenly, after perhaps 400 – 900 million seconds, the idea finally catches fire and springs into life and becomes ubiquitous. What seems to happen with most new technologies, like eyeballs or new forms of software architecture, is that the very early precursors do not provide that much bang for the buck so they are like a lonely stick of dynamite with an ungrounded blasting cap stuck into it, waiting for a stray voltage to finally come along and set it off (see An IT Perspective of the Cambrian Explosion for more details).
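The list above measures software history in seconds rather than years. Here is a minimal conversion sketch, assuming a Julian year of 365.25 days; it confirms that 70 years is about 2.2 billion seconds and shows that the 400 – 900 million second incubation period of the dynamite effect works out to roughly 12.7 – 28.5 years.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60   # Julian year: 31,557,600 s


def years_to_seconds(years):
    return years * SECONDS_PER_YEAR


def seconds_to_years(seconds):
    return seconds / SECONDS_PER_YEAR


print(f"70 years = {years_to_seconds(70) / 1e9:.1f} billion seconds")
for s in (400e6, 900e6):
    print(f"{s / 1e6:.0f} million seconds = {seconds_to_years(s):.1f} years")
```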

Currently, researchers working on the origin of life and astrobiology are trying to produce computer simulations to help investigate how life could originate and evolve at its earliest stages. As you can see, trying to incorporate all of the above elements into a computer simulation would be a very daunting task indeed. The good news is that over the past 70 years the IT community has spent over $10 trillion building this computer simulation for them, and has already run it for over 2.2 billion seconds. It has been hiding there in plain sight the whole time for anybody with a little bit of daring and flair to explore.

Comments are welcome at scj333@sbcglobal.net

To see all posts on softwarephysics in reverse order go to:

https://softwarephysics.blogspot.com/

Regards,

Steve Johnston
