Earlier this year the Middleware Operations group of my current employer was reorganized, and all of the onshore members, including myself, were transferred to another related middleware group. This other middleware group puts together the designs for middleware components that Middleware Operations then installs. So I had a lot to learn from the old-timers in this new middleware group. Now the way we do IT training these days is for the trainer to share their laptop desktop with the trainee, using some instant messaging software. That way the trainee can see what the trainer is doing, while the trainer explains what he is doing over some voice over IP telephony software. This was a very enlightening experience for me, because not only was it an opportunity to learn some new IT skills, but it also provided me with some first-hand observations of how other IT professionals did their day-to-day work for the first time in many years, and it also brought back some long-forgotten memories as well. The one thing that really stuck out for me was that the IT professionals in my new middleware group were pushing way too many buttons to get their work done, and I knew that was a very bad thing. Now personally, I consider the most significant finding of softwarephysics to be an understanding of the impact that self-replicating information has had upon the surface of the Earth over the past 4.0 billion years and also of the possibilities of its ongoing impact for the entire Universe - see the Introduction to Softwarephysics for more on that. But from a purely practical IT perspective, the most important finding of softwarephysics is certainly that pushing lots of buttons to get IT work done is a very dangerous thing. Now, why is pushing buttons so bad? To understand why we need to go back a few years.

In 1979 I made a career change from being an exploration geophysicist, exploring for oil with Amoco, to become an IT professional in Amoco's IT department. As I explained in the Introduction to Softwarephysics, this was quite a shock. The first thing I noticed was that, unlike other scientific and engineering professionals, IT professionals seemed to be always working in a frantic controlled state of panic, reacting to very unrealistic expectations from an IT Management structure that seemed to constantly change direction from one week to the next. And everything was always a "Top Priority" so that all work done by IT professionals seemed to consist of juggling a large number of equally important "Top Priority" activities all at the same time. My hope through the ensuing years was always that IT would one day mature with time, but that never seemed to have happened. In fact, I think things have actually gotten worse over the past 36 years, and some of these bad habits have even seemed to have diffused outside of IT into the general public, as software began to take over the world. This observation is based upon working in a number of corporate IT departments throughout the years and under a large number of different CIOs. The second thing I noticed back in 1979 was that, unlike all of the other scientific and engineering disciplines, IT seemingly had no theoretical framework to work from. I think this second observation may help to explain the first observation.

So why is it important to have a theoretical framework? To understand the importance, let's compare the progress that the hardware guys have made since I started programming back in 1972 with the progress that the software guys have made during this very same period of time. When I first started programming back in 1972, I was working on a million-dollar mainframe computer with about 1 MB of memory and a clock speed of 750 kHz. Last spring I bought a couple of low-end Toshiba laptops for my wife and myself, each with two Intel Celeron 2.16 GHz CPUs, 4 GB of memory, a 500 GB disk drive and a 15.6 inch HD LED screen for $224 each. I call these low-end laptops because they were the cheapest available online at Best Buy, yet they were both many thousands of times more powerful than the computers that were used to design the current U.S. nuclear arsenal. Now if you work out the numbers, you will find that the hardware has improved by at least a factor of 10 million since 1972. Now how much progress have we seen in our ability to develop, maintain and support software over this very same period of time? I would estimate that our ability to develop, maintain and support software has only increased by a factor of about 10 to 100 since 1972, and I think that I am being very generous here. In truth, it is probably much closer to a factor of 10 than to a factor of 100. Here is a simple thought experiment. Imagine assigning two very good programmers the same task of developing some software to automate a very simple business function. Imagine that one programmer is a 1972 COBOL programmer using an IBM 029 keypunch machine, while the other programmer is a 2015 Java programmer using the latest Java IDE (Integrated Development Environment) like Eclipse to write and debug a Java program. Now set them both to work. Hopefully, the Java programmer would win the race, but by how much? 
I think that the 2015 Java programmer, armed with all of the latest tools of IT, would be quite pleased if she could finish the project 10 times faster than the 1972 programmer cutting cards on an IBM 029 keypunch machine. This would indeed be a very revealing finding. Why has the advancement of hardware outpaced the advancement of software by nearly a factor of a million since 1972? How can we possibly account for this vast difference between the advancement of hardware and the advancement of software over a span of 43 years that is on the order of 6 orders of magnitude? Clearly, two very different types of processes must be at work to account for such a dramatic disparity.

Figure 1 - An IBM 029 keypunch machine like the one I first learned to program on at the University of Illinois in 1972.

Figure 2 - Each card could hold a maximum of 80 bytes. Normally, one line of code was punched onto each card.

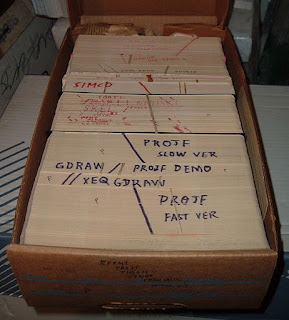

Figure 3 - The cards for a program were held together into a deck with a rubber band, or for very large programs, the deck was held in a special cardboard box that originally housed blank cards. Many times the data cards for a run followed the cards containing the source code for a program. The program was compiled and linked in two steps of the run and then the generated executable file processed the data cards that followed in the deck.

Figure 4 - To run a job, the cards in a deck were fed into a card reader, as shown on the left above, to be compiled, linked, and executed by a million-dollar mainframe computer with a clock speed of about 750 kHz and about 1 MB of memory.

Figure 5 - Now I push the very same buttons that I pushed on IBM 029 keypunch machines. The only difference is that I now push them on a $224 machine with 2 CPUs running at a clock speed of 2.16 GHz and 4 GB of memory.

My suggestion would be that the hardware advanced so quickly because it was designed using a theoretical framework based upon physics. Most of this advancement was based upon our understanding of the behavior of electrons. Recently, we have also started using photons in fiber optic devices too, but photons are created by manipulating electrons, so it all goes back to understanding how electrons behave. Now here is the strange thing. Even though we have improved hardware by a factor of 10 million since 1972 by tricking electrons into doing things, we really do not know what electrons are. Electrons are certainly not little round balls with a negative electrical charge. However, we do have a very good effective theory called QED (Quantum Electrodynamics) that explains all that we need to know to build very good hardware. QED (1948) is an effective theory formed by combining two other very good effective theories - quantum mechanics (1926) and the special theory of relativity (1905). For more about quantum field theories like QED see The Foundations of Quantum Computing. QED has predicted the gyromagnetic ratio of the electron, a measure of its intrinsic magnetic field, to 11 decimal places. This prediction has been validated by rigorous experimental data. As Richard Feynman has pointed out, this is like predicting the exact distance between New York and Los Angeles to within the width of a human hair! Recall that an effective theory is an approximation of reality that only holds true over a certain restricted range of conditions and only provides for a certain depth of understanding of the problem at hand. For example, QED cannot explain how electrons behave in very strong gravitational fields, like those close to the center of a black hole. For that we would need a quantum theory of gravity, and after nearly 90 years of work we still do not have one. 
So although QED is enormously successful and makes extraordinarily accurate predictions of the behavior of electrons and photons in normal gravitational fields, it is fundamentally flawed and therefore can only be an approximation of reality because it cannot deal with gravity. This is an incredibly important idea to keep in mind because it means that we are all indeed lost in space and time because all of the current theories of physics, such as Newtonian mechanics, Newtonian gravity, classical electrodynamics, thermodynamics, statistical mechanics, the special and general theories of relativity, quantum mechanics, and quantum field theories like QED are all just effective theories that are based upon models of reality, and all of these models are approximations - all of these models are fundamentally "wrong", but at the same time, these effective theories make exceedingly good predictions of the behavior of physical systems over the limited ranges in which they apply. Think of it like this. For most everyday things in life you can get by just fine by using a model of the Earth that says that the Earth is flat and at the center of the Universe. After all, this model worked just great for most of human history. For example, you certainly can build a house assuming that the Earth is flat. If you use a plumb-bob on the left and right sides of your home to ensure that you are laying bricks vertically, you will indeed find that to a very good approximation the left and right sides of your home will end up being parallel and not skewed, and that is similar to the current state of physics as we know it. It is all just a collection of very useful approximations of reality, and that is what softwarephysics is all about. Softwarephysics is just a useful collection of effective theories and models that try to explain how software seems to behave for the benefit of IT professionals.

Applying Physics to Software

In The Fundamental Problem of Software I explained that the fundamental problem IT professionals faced when dealing with software was that the second law of thermodynamics caused small bugs in software when we pushed buttons while working on software, and that because the Universe is largely nonlinear in nature, these small bugs oftentimes caused severe and sometimes lethal outcomes when the software was run. I also proposed that to overcome these effects IT should adopt a biological approach to software, using the techniques developed by the biosphere to overcome the adverse effects of the second law of thermodynamics in a nonlinear Universe. This all stems from the realization that all IT jobs essentially boil down to simply pushing buttons. All you have to do is push the right buttons, in the right sequence, at the right time, and with zero errors. How hard can that be? Well, the second law makes it very difficult to push the right buttons in the right sequence and at the right time because there are so many erroneous combinations of button pushes. Writing and maintaining software is like looking for a needle in a huge utility phase space. There are just too many ways of pushing the buttons “wrong”. For example, my laptop has 86 buttons on it that I can push in many different combinations. Now suppose I need the following 10-character line of code:

i = j*k-1;

I can push 10 buttons on my laptop to get that line of code. The problem is that there are $86^{10} \approx 2.213 \times 10^{19}$ different ways to push 10 buttons on my 86-button keyboard, and only one out of that huge number will do the job. Physicists use the concepts of information and entropy to describe this situation.
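The arithmetic behind that count can be checked with a few lines of Python. This is only a sketch under a simplifying assumption that is mine and not part of the argument above: every one of the 86 keys is treated as equally likely on each press, and Shift combinations are ignored. The function names are made up for illustration.

```python
import math

KEYS = 86             # buttons on the keyboard, as stated in the text
LINE = "i = j*k-1;"   # the 10-character line of code from the text


def count_sequences(keys: int, length: int) -> int:
    """Number of distinct ways to press `length` keys on a `keys`-button keyboard."""
    return keys ** length


def bits_of_information(keys: int, length: int) -> float:
    """Bits needed to single out one sequence among all equally likely ones."""
    return length * math.log2(keys)


if __name__ == "__main__":
    total = count_sequences(KEYS, len(LINE))
    print(f"{total:.3e} possible sequences of {len(LINE)} key presses")
    print(f"chance that a random sequence is the right one: {1 / total:.3e}")
    print(f"information in the right sequence: "
          f"{bits_of_information(KEYS, len(LINE)):.1f} bits")
```

The count comes out at roughly 2.2 x 10^19, so picking out the one working line corresponds to a little over 64 bits of information under these assumptions.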

In The Demon of Software we saw that entropy is a measure of the amount of disorder, or unknown information, that a system contains. Systems with lots of entropy are disordered and also contain lots of useless unknown information, while systems with little entropy are orderly in nature and also contain large amounts of useful information. The laws of thermodynamics go on to explain that information is very much like energy. The first law of thermodynamics states that energy cannot be created nor destroyed. Energy can only be transformed from one form of energy into another form of energy. Similarly, information cannot be destroyed because all of the current effective theories of physics are both deterministic and reversible, meaning that they work just as well moving backwards in time as they do moving forwards in time, and if it were possible to destroy the information necessary to return a system to its initial state, then the Universe would not be reversible in nature as the current theories of physics predict, and the current theories of physics would then collapse. However, as IT professionals we all know that it is indeed very easy to destroy information, like destroying the code for a functional program by simply including a few typos, so what is going on? Well, the second law of thermodynamics states that although you cannot destroy energy, it is possible to transform useful energy, like the useful chemical energy in gasoline, into useless waste heat energy at room temperature that cannot be used to do useful work. The same thing goes for information. Even though you cannot destroy information, you can certainly turn useful information into useless information. In softwarephysics we exclusively use Leon Brillouin’s formulation for the concept of information as negative entropy or negentropy to explain why. 
In Brillouin’s formulation of information it is very easy to turn useful low-entropy information into useless high-entropy information by simply scrambling the useful information into useless information. For example, if you take the source code file for an apparently bug-free program and scramble it with some random additions and deletions, the odds are that you will most likely end up with a low-information high-entropy mess. That is because the odds of creating an even better version of the program by means of inserting random additions and deletions are quite low, while turning it into a mess are quite high. There are simply too many ways of messing up the program to win at that game. As we have seen this is simply the second law of thermodynamics in action. The second law of thermodynamics is constantly degrading low-entropy useful information into high-entropy useless information.

What this means is that the second law of thermodynamics demands that whenever we do something, like pushing a button, the total amount of useful energy and useful information in the Universe must decrease! Now, of course, the local amount of useful energy and information of a system can always be increased with a little work. For example, you can charge up your cell phone and increase the amount of useful energy in it, but in doing so, you will also create a great deal of waste heat when the coal that generated the electricity is burned back at the power plant because not all of the energy in the coal can be converted into electricity. Similarly, if you rifle through a deck of cards, you can always manage to deal yourself a full house, but in doing so, you will still decrease the total amount of useful information in the Universe. If you later shuffle your full house back into the deck of cards, your full house still exists in a disordered shuffled state with increased entropy, but the information necessary to reverse the shuffling process cannot be destroyed, so your full house could always be reclaimed by exactly reversing the shuffling process. That is just not a very practical thing to do, and that is why it appears that the full house has been destroyed by the shuffle.
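The point about the shuffled full house can be made concrete with a small Python sketch. It assumes that we keep a record of the permutation that the shuffle applied, which is exactly the information that is impractical to track for a real deck of cards. The card labels, the seed, and the function names are all illustrative.

```python
import random

RANKS = "2 3 4 5 6 7 8 9 10 J Q K A".split()
SUITS = "SHDC"
FULL_HOUSE = ["KS", "KH", "KD", "7C", "7S"]   # three kings and two sevens


def ordered_deck():
    """A 52-card deck with the full house sitting on top."""
    rest = [r + s for r in RANKS for s in SUITS if r + s not in FULL_HOUSE]
    return FULL_HOUSE + rest


def shuffle_with_record(deck, seed):
    """Shuffle a copy of the deck and also return the permutation that was used."""
    rng = random.Random(seed)
    order = list(range(len(deck)))
    rng.shuffle(order)
    return [deck[i] for i in order], order


def unshuffle(shuffled, order):
    """Exactly reverse the shuffle using the recorded permutation."""
    restored = [None] * len(shuffled)
    for new_pos, old_pos in enumerate(order):
        restored[old_pos] = shuffled[new_pos]
    return restored


if __name__ == "__main__":
    deck = ordered_deck()
    shuffled, order = shuffle_with_record(deck, seed=42)
    print("top of shuffled deck:", shuffled[:5])
    print("top after reversal:  ", unshuffle(shuffled, order)[:5])
```

The shuffled deck still contains every card, and the recorded permutation is enough to deal the full house back off the top. Throw the record away and the full house is still in there, but finding it again takes work.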

Now since all of the current effective theories of physics are both deterministic and reversible in time, the second law of thermodynamics is the only effective theory in physics which differentiates between the past and the future. The future will always contain more entropy and less useful information than the past. Thus physicists claim that the second law of thermodynamics defines the arrow of time, and so the second law essentially defines the river of time that we experience in our day-to-day lives. Now whenever you start pushing lots of buttons to do your IT job, you are essentially trying to swim upstream against the river of time. You are trying to transform useless information into useful information, like pulling a full house out of a shuffled deck of cards, while the Universe is constantly trying to do the opposite by turning useful information into useless information. The other problem we have is that we are working in a very nonlinear utility phase space, meaning that pushing just one button incorrectly usually brings everything crashing down. For more about nonlinear systems see Software Chaos.

Living things have to deal with this very same set of problems. Living things have to arrange atoms of the 92 naturally occurring elements into complex molecules that perform the functions of life, and the slightest error can lead to dramatic and oftentimes lethal effects as well. So I always try to pretend that pushing the correct buttons in the correct sequence is like trying to string the correct atoms into the correct sequence to make a molecule in a biochemical reaction that can do things. Living things are experts at this, and seem to overcome the second law of thermodynamics by dumping entropy into heat as they build low-entropy complex molecules from high-entropy simple molecules and atoms. I always try to do the same thing by having computers dump entropy into heat as they degrade low-entropy electrical energy into high-entropy heat energy. I do this by writing software that generates as much as possible of the software that I need to do my job. That dramatically reduces the number of buttons I need to push to accomplish a task and reduces the chance of making an error too. I also think of each line of code that I write as a step in a biochemical pathway. The variables are like organic molecules composed of characters or “atoms” and the operators are like chemical reactions between the molecules in the line of code. The logic in several lines of code is the same thing as the logic found in several steps of a biochemical pathway, and a complete function is the equivalent of a full-fledged biochemical pathway in itself.

So how do you apply all this physics to software? In How to Think Like a Softwarephysicist I provided a general framework on how to do so. But I also have some very good case studies of how I have done so during my IT career. For example, in SoftwarePhysics I described how I started working on BSDE - the Bionic Systems Development Environment back in 1985 while in the IT department of Amoco. BSDE was an early mainframe-based IDE at a time when there were no IDEs. During the 1980s BSDE was used to grow several million lines of production code for Amoco by growing applications from embryos. For an introduction to embryology see Software Embryogenesis. The DDL statements used to create the DB2 tables and indexes for an application were stored in a sequential file called the Control File and performed the functions of genes strung out along a chromosome. Applications were grown within BSDE by turning their genes on and off to generate code. BSDE was first used to generate a Control File for an application by allowing the programmer to create an Entity-Relationship diagram using line printer graphics on an old IBM 3278 terminal.

Figure 6 - BSDE was run on IBM 3278 terminals, using line printer graphics, and in a split-screen mode. The embryo under development grew within BSDE on the top half of the screen, while the code generating functions of BSDE were used on the lower half of the screen to insert code into the embryo and to do compiles on the fly while the embryo ran on the upper half of the screen. Programmers could easily flip from one session to the other by pressing a PF key.

After the Entity-Relationship diagram was created, the programmer used a BSDE option to create a skeleton Control File with DDL statements for each table on the Entity-Relationship diagram, and each skeleton table had several sample columns with the syntax for various DB2 datatypes. The programmer then filled in the details for each DB2 table. When the first rendition of the Control File was completed, another BSDE option was used to create the DB2 database for the tables and indexes in the Control File. Another BSDE option was used to load up the DB2 tables with test data from sequential files. Each DB2 table in the Control File was considered to be a gene. Next a BSDE option was run to generate an embryo for the application. The embryo was a 10,000-line PL/I, COBOL or REXX application that performed all of the primitive functions of the new application. The programmer then began to grow the embryo inside of BSDE in a split-screen mode. The embryo ran on the upper half of an IBM 3278 terminal and could be viewed in real-time, while the code generating options of BSDE ran on the lower half of the IBM 3278 terminal. BSDE was then used to inject new code into the embryo's programs by reading the genes in the Control File for the embryo in a real-time manner while the embryo was running in the top half of the IBM 3278 screen. BSDE had options to compile and link modified code on the fly while the embryo was still executing. This allowed for a tight feedback loop between the programmer and the application under development. In fact, BSDE programmers sometimes sat with end-users and co-developed software together on the fly. When the embryo had grown to full maturity, BSDE was used to create online documentation for the new application and was also used to automate the install of the new application into production. Once in production, BSDE-generated applications were maintained by adding additional functions to their embryos. 
Since BSDE was written using the same kinds of software that it generated, I was able to use BSDE to generate code for itself. The next generation of BSDE was grown inside of its maternal release. Over a period of seven years, from 1985 – 1992, more than 1,000 generations of BSDE were generated, and BSDE slowly evolved into a very sophisticated tool through small incremental changes. BSDE dramatically improved programmer efficiency by greatly reducing the number of buttons programmers had to push in order to generate software that worked.
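To give a feel for what generating code from the genes in a Control File means, here is a minimal Python sketch. It is not BSDE's actual code, which ran on a mainframe and produced PL/I, COBOL or REXX. The table names, column names, regular expression, and function names are all hypothetical, and the generated output is just a couple of SQL statements per table.

```python
import re

# Hypothetical Control File: one DDL statement ("gene") per table.
CONTROL_FILE = """
CREATE TABLE CUSTOMER (CUST_ID INTEGER NOT NULL, NAME VARCHAR(40), CITY VARCHAR(30));
CREATE TABLE ORDERS (ORDER_ID INTEGER NOT NULL, CUST_ID INTEGER, AMOUNT DECIMAL(9,2));
"""

TABLE_RE = re.compile(r"CREATE\s+TABLE\s+(\w+)\s*\((.*?)\)\s*;",
                      re.IGNORECASE | re.DOTALL)


def split_columns(body):
    """Split a column list on commas that are not inside parentheses."""
    parts, depth, current = [], 0, []
    for ch in body:
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
        if ch == "," and depth == 0:
            parts.append("".join(current).strip())
            current = []
        else:
            current.append(ch)
    parts.append("".join(current).strip())
    return parts


def read_genes(control_file):
    """Map each table name to its list of column names."""
    genes = {}
    for name, body in TABLE_RE.findall(control_file):
        genes[name] = [part.split()[0] for part in split_columns(body)]
    return genes


def generate_embryo(genes):
    """Generate skeleton SQL for every gene, so nobody has to type it by hand."""
    lines = []
    for table, columns in genes.items():
        col_list = ", ".join(columns)
        markers = ", ".join("?" for _ in columns)
        lines.append(f"-- generated for gene {table}")
        lines.append(f"SELECT {col_list} FROM {table};")
        lines.append(f"INSERT INTO {table} ({col_list}) VALUES ({markers});")
    return "\n".join(lines)


if __name__ == "__main__":
    print(generate_embryo(read_genes(CONTROL_FILE)))
```

Adding a column to a gene and rerunning the generator updates every statement that mentions the table, which is the button-saving effect described above, only in miniature.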

Figure 7 - Embryos were grown within BSDE in a split-screen mode by transcribing and translating the information stored in the genes in the Control File for the embryo. Each embryo started out very much the same but then differentiated into a unique application based upon its unique set of genes.

Figure 8 – BSDE appeared as the cover story of the October 1991 issue of the Enterprise Systems Journal

BSDE had its own online documentation that was generated by BSDE. Amoco's IT department also had a class to teach programmers how to get started with BSDE. As part of the curriculum Amoco had me prepare a little cookbook on how to build an application using BSDE:

BSDE – A 1989 document describing how to use BSDE - the Bionic Systems Development Environment - to grow applications from genes and embryos within the maternal BSDE software.

Similarly, in MISE in the Attic I described how softwarephysics could also be used in an operations support setting. MISE (Middleware Integrated Support Environment) is a toolkit of 1,688 commands, implemented as Unix aliases that call Unix Korn shell scripts, that I wrote to allow Middleware IT professionals to quickly do their jobs without pushing lots of buttons. Working in the high-pressure setting of Middleware Operations, it is also very important to be able to find information in a matter of a few seconds or less, so MISE also has a couple of commands that let IT professionals quickly search for MISE commands using keyword searches. Under less stressful conditions, MISE was also designed to be an interactive toolkit of commands that assists in the work that Middleware IT professionals need to perform all day long. Whenever I find myself pushing lots of buttons to complete an IT task, I simply spend a few minutes to create a new MISE command that automates the button pushing process. Now that I have a rather large library of MISE commands, I can usually steal some old MISE code and exapt it into performing another function in about 30 minutes or so. Then I can use a few MISE commands to push out the new MISE command to the 550 servers I support. These MISE commands can push out the new code in about 5 minutes and can be run in parallel to push out several files at the same time. I use MISE all day long, and the MISE commands greatly reduce the number of buttons I have to push each day.
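The keyword search idea is simple enough to sketch in a few lines. MISE itself is written in Korn shell, so the Python below is only an illustration of the technique, and every command name and description in the catalog is invented for the example.

```python
# Hypothetical catalog: command name -> one-line description.
CATALOG = {
    "lsapps": "list applications installed in each websphere appserver",
    "lsnodes": "list nodes and appservers in a websphere cell",
    "chklogs": "search appserver logs for recent errors",
    "pushfile": "push a file out to a list of servers in parallel",
}


def find_commands(catalog, *keywords):
    """Return the names of commands whose name or description contains every keyword."""
    wanted = [k.lower() for k in keywords]
    return sorted(
        name
        for name, desc in catalog.items()
        if all(k in f"{name} {desc}".lower() for k in wanted)
    )


if __name__ == "__main__":
    print(find_commands(CATALOG, "websphere"))
    print(find_commands(CATALOG, "appserver", "logs"))
```

With a catalog of well over a thousand commands, a search like this is what keeps the toolkit usable under pressure: a couple of keywords replaces remembering the exact command name.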

Another way that MISE eliminates button pushing is by relying heavily on copy/paste operations. Many of the MISE commands will print out valuable information, like all of the Websphere Appservers in each node of a Websphere Cell and all of the Applications installed in each Appserver. MISE users can then highlight the printed text with their mouse to get the printed strings into their Windows clipboard. The information can then be pasted into other MISE commands or into other software tools or documents that the MISE user is working on. This greatly reduces the number of buttons to be pushed to accomplish a task, which improves productivity but mainly reduces errors. Living things rely heavily on copy/paste operations too in order to reduce the impact of the second law of thermodynamics in a nonlinear Universe. For example, all of the operations involved with the transcription and the translation of the information in DNA into proteins is accomplished with a series of copy/paste operations. This begins with the transcription of the information in DNA to a strand of mRNA in a copy/paste operation performed by an enzyme called RNA polymerase. Living things use templates to copy/paste information. They do not push buttons.

Figure 9 - The information in DNA is transcribed to mRNA via a copy/paste operation that reduces errors.

But why create a toolkit? Well, living things really love toolkits. For example, prokaryotic bacteria and archaea evolved huge toolkits of biochemical pathways during the first billion years of life on the Earth, and these biochemical toolkits were later used by all of the higher forms of eukaryotic life. Similarly, in the 1980s biologists discovered that all forms of complex multicellular organisms use an identical set of Hox genes to control their head-to-tail development. These Hox genes must have appeared some time prior to the Cambrian explosion 541 million years ago to perform some other functions at the time. During the Cambrian explosion these Hox genes were then exapted into building complex multicellular organisms and have done so ever since. Similarly, toolkits are also very useful for IT professionals. For example, in my new middleware group I am still using MISE all day long to dramatically reduce the number of buttons I need to push each day. Since moving to this new middleware group I have also been adding new functions to MISE that help me in this new position, and I have also been trying to get the other members of my new middleware group to try using MISE. Getting IT professionals to think like a softwarephysicist is always difficult. What I did with BSDE and MISE was to simply use softwarephysics to create software tools for my own use. Then I made these software tools available to all the members of my IT group and anybody else who might be interested. It's like walking around the office in 1985 with a modern smartphone. People begin to take notice and soon want one of their own, even if they are not at first interested in how the smartphone works. With time people begin to notice that their smartphones do not work very well in basements or in forests far from cities, and they want to know why. 
So I then slowly begin to slip in a little softwarephysics to explain to them how smartphones work, and that knowing how they work makes it easier to use them.

How to Work with IT Management in a Positive Manner While Innovating

If any of you ever do become inspired to become a softwarephysicist, with the intent of spreading softwarephysics throughout your IT department, be sure to move forward cautiously. Don't expect IT Management to greet such efforts with open arms. Always remember that IT Management is charged with preserving the meme-complex that is your IT department and that meme-complexes are very wary of new memes contaminating the meme-complex. See How to Use Softwarephysics to Revive Memetics in Academia for more about memes. For the past 36 years my experience has been that IT Management always tries to suppress innovation. I don't fault IT Management for this because I think this is just the way all human hierarchies work, even scientific hierarchies like physics - see Lee Smolin's The Trouble with Physics (2006) for an interesting discussion of the problems he has encountered due to the overwhelming dominance of string theory in physics today. Hierarchies do not foster innovation, hierarchies always try to suppress innovation. That is why innovation usually comes from entrepreneurs and not from large hierarchical organizations. The way I get around this challenge is to first build some software that is based upon softwarephysics, but I don't tell people why the software works so well. Once people get hooked into using the software, I then slowly begin to explain the theory behind why the software makes their IT job so much easier. So I slip in the softwarephysics very slowly. I also follow a bacterial approach when writing the software tools that are based upon softwarephysics, meaning that I write the software tools using the most primitive of software components available because those are the software components that IT Management cannot easily take away. For example, MISE is written entirely using Unix Korn shell scripts. My current IT Management cannot easily shut down Unix Korn shell scripts because our whole IT infrastructure runs on them. 
Unix Korn shell scripts are essential to day-to-day operations, and like many bacteria, are simply too primitive to stamp out without killing the whole IT infrastructure. Now I could have written much of MISE using Jython wsadmin programs to talk to Websphere directly. But I knew that someday somebody would write a Jython wsadmin program that would take down a whole production Websphere Cell, and sure enough, that day came to pass. In response, our IT Management immediately placed a ban on running Jython wsadmin programs during the day, and we now can only run them at night using an approved change ticket. Getting an approved change ticket takes about a week. That would have killed MISE because MISE users would no longer be able to run MISE commands all day long to do their jobs. But because MISE just harmlessly reads deployed Websphere .xml files on servers, the ban had no effect on MISE. I also wrote MISE so that it primarily just reads things and provides valuable information. MISE will also help you do things like restart Websphere Appservers, but it does so in a very passive manner. MISE will make sure you are using the proper production ID for the restart, and will put you into the proper directory to do the restart, and will also print out the command to do the restart, but the MISE user must copy/paste the printed command into their Unix session and hit the Enter key to pull the trigger. So MISE is designed to never do anything that IT Management might consider as dangerous. On the contrary, MISE dramatically reduces the chance of an IT professional making a mistake that could cause a production outage.
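The passive style described above, where the tool checks, prepares and prints but never pulls the trigger, can be sketched as follows. This is a Python illustration and not MISE's Korn shell code. The user ID, directory and appserver name are hypothetical, and although stopServer.sh and startServer.sh are the usual WebSphere scripts for this job, I am only assuming here that a restart would be printed in this form.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RestartPlan:
    directory: str     # where the operator should run the commands
    commands: tuple    # command lines to copy/paste, in order


def plan_restart(current_user, required_user, profile_bin, appserver):
    """Check the ID and build the command text. Nothing is executed here."""
    if current_user != required_user:
        raise PermissionError(
            f"log in as {required_user} before restarting {appserver}"
        )
    return RestartPlan(
        directory=profile_bin,
        commands=(f"./stopServer.sh {appserver}", f"./startServer.sh {appserver}"),
    )


def show(plan):
    """Render the plan as text for the operator to copy/paste."""
    lines = [f"cd {plan.directory}"]
    lines.extend(plan.commands)
    return "\n".join(lines)


if __name__ == "__main__":
    plan = plan_restart("wasprod", "wasprod",
                        "/opt/was/profiles/AppSrv01/bin", "server1")
    print(show(plan))
```

Because the sketch never calls a subprocess, the worst it can do is print the wrong text. The decision to hit the Enter key stays with the person at the keyboard.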

Conclusion

So whenever you find yourself pushing lots of buttons to get an IT task accomplished, think back to what softwarephysics has to say on the subject. There must be an easier way to get the job done with fewer buttons. Try reading some biology books for tips.

Comments are welcome at scj333@sbcglobal.net

To see all posts on softwarephysics in reverse order go to:

https://softwarephysics.blogspot.com/

Regards,

Steve Johnston
