I started this blog on softwarephysics about 10 years ago in the hope of helping the IT community better deal with the daily mayhem of life in IT, after my less-than-stunning success in doing so back in the 1980s, when I first began developing softwarephysics for my own use. My original purpose for softwarephysics was to formulate a theoretical framework that was independent of both time and technology, and which therefore could be used by IT professionals to make decisions in both tranquil times and in times of turmoil. A theoretical framework is especially valuable in turbulent times because it helps to make sense of things when dramatic changes are happening. Geologists have plate tectonics, biologists have Darwinian thought and chemists have the atomic theory to fall back on when the going gets tough, and a firm theoretical framework provides the comfort and support needed to get through such times. With the advent of Cloud Computing, we once again seem to be heading into turbulent times within the IT industry, times that will have lasting effects on the careers of many IT professionals, and that will be the subject of this posting.

One of the fundamental tenets of softwarephysics is that software and living things are both forms of self-replicating information and that both of them have converged upon very similar solutions to common data processing problems. This convergence means that they are also both subject to common experiences. Consequently, there is much that IT professionals can learn by studying living things, and also much that biologists can learn by studying the evolution of software over the past 75 years, or 2.4 billion seconds, ever since Konrad Zuse first cranked up his Z3 computer in May of 1941. For example, the success of both software and living things is very dependent upon environmental factors, and when the environment dramatically changes, it can lead to mass extinctions of both software and living things. In fact, the geological timescale during the Phanerozoic, when complex multicellular life first came to be, is divided by two great mass extinctions into three segments - the Paleozoic or "old life", the Mesozoic or "middle life", and the Cenozoic or "new life". The Paleozoic-Mesozoic boundary is defined by the Permian-Triassic greenhouse gas mass extinction 252 million years ago, while the Mesozoic-Cenozoic boundary is defined by the Cretaceous-Tertiary mass extinction, caused by the impact of a small asteroid about 6 miles in diameter with the Earth 65 million years ago. During both of these major mass extinctions, a large percentage of the extant species went extinct, and the look and feel of the entire biosphere dramatically changed.

By far, the Permian-Triassic greenhouse gas mass extinction 252 million years ago was the worst. A massive flood basalt known as the Siberian Traps covered an area about the size of the continental United States with several thousand feet of basaltic lava, in eruptions that lasted for about one million years. Flood basalts like the Siberian Traps are thought to arise when large plumes of hotter-than-normal mantle material rise from near the core-mantle boundary of the Earth and break through to the surface. This opens a huge number of fissures over a very large area, which then disgorge massive amounts of basaltic lava over a very large region. After the eruptions of basaltic lava began, it took about 100,000 years for the carbon dioxide that bubbled out of the lava to raise the level of carbon dioxide in the Earth's atmosphere enough to initiate the greenhouse gas mass extinction. This led to an Earth with a daily high of 140 °F and purple oceans choked with hydrogen-sulfide-producing bacteria, which produced a dingy green sky over an atmosphere tainted with toxic levels of hydrogen sulfide gas and an oxygen level of only about 12%. The Permian-Triassic greenhouse gas mass extinction killed off about 95% of marine species and 70% of land-based species, and dramatically reduced the diversity of the biosphere for about 10 million years. It took a full 100 million years to recover from it.

Figure 1 - The geological timescale of the Phanerozoic Eon is divided into the Paleozoic, Mesozoic and Cenozoic Eras by two great mass extinctions - click to enlarge.

Figure 2 - Life in the Paleozoic, before the Permian-Triassic mass extinction, was far different from life in the Mesozoic.

Figure 3 - In the Mesozoic the dinosaurs ruled after the Permian-Triassic mass extinction, but small mammals were also present.

Figure 4 - Life in the Cenozoic, following the Cretaceous-Tertiary mass extinction, has so far been dominated by the mammals. This will likely soon change as software becomes the dominant form of self-replicating information on the planet, ushering in a new geological Era that has yet to be named.

IT experienced a similarly devastating mass extinction about 25 years ago, when an environmental change took us from the Age of the Mainframes to the Distributed Computing Platform. Suddenly, mainframe Cobol/CICS and Cobol/DB2 programmers were no longer in demand. Instead, everybody wanted C and C++ programmers who worked on cheap Unix servers. This was a very traumatic time for IT professionals. Of course, the mainframe programmers never went entirely extinct, but their numbers were greatly reduced. The number of IT workers in mainframe Operations also dramatically decreased, while at the same time the demand for Operations people familiar with the Unix-based software of the new Distributed Computing Platform skyrocketed. This was around 1992, and at the time I was a mainframe programmer used to working with IBM's MVS and VM/CMS operating systems, writing Cobol, PL/I and REXX code against DB2 databases. So I had to teach myself Unix, C and C++ to survive. In order to do that, I bought my very first PC, an 80386 machine running Windows 3.0 with 5 MB of memory and a 100 MB hard disk, for $1500. I also bought the Microsoft C7 C/C++ compiler for something like $300. And that was in 1992 dollars! One reason for the added expense was that there were no Internet downloads in those days because there were no high-speed ISPs. Most PCs did not yet have CD-ROM drives either, so the software came on 33 diskettes, each with a 1.44 MB capacity, that had to be loaded one at a time in sequence. The software also came with about a foot of manuals, printed on very thin paper, describing the C++ class library. Indeed, suddenly finding yourself obsolete is not a pleasant thing, and it calls for drastic action.

Figure 5 – An IBM System/360 mainframe from 1964.

Figure 6 – The Distributed Computing Platform replaced a great deal of mainframe computing with a large number of cheap self-contained servers running software that tied the servers together.

The problem with the Distributed Computing Platform was that although the server hardware was cheaper than mainframe hardware, the granular nature of the platform created a very labor-intensive infrastructure that was difficult to operate and support, and as the level of Internet traffic dramatically expanded over the past 20 years, the Distributed Computing Platform became nearly impossible to support. For example, I have been in Middleware Operations with my current employer for the past 14 years, and during that time our Distributed Computing Platform infrastructure has grown by a factor of at least a hundred. It is now so complex and convoluted that we can barely keep it all running, and we do not even have enough change window time to properly apply maintenance to it, as I described in The Limitations of Darwinian Systems. Clearly, the Distributed Computing Platform was not sustainable, and an alternative was desperately needed. The underlying problem is that the Distributed Computing Platform was IT's first shot at running software on a multicellular architecture, as I described in Software Embryogenesis. But it simply had too many moving parts, each operating independently on its own, to fully embrace the advantages of a multicellular organization. In many ways, the Distributed Computing Platform was much like the ancient stromatolites, which tried to reap the advantages of a multicellular organism by simply tying together the diverse interests of multiple layers of prokaryotic cyanobacteria into a "multicellular organism" that seemingly benefited the interests of all.

Figure 7 – Stromatolites are still found today in Shark Bay, Australia. They consist of mounds of alternating layers of prokaryotic bacteria.

Figure 8 – The cross-section of an ancient stromatolite displays the multiple layers of prokaryotic cyanobacteria that came together for their own mutual self-survival to form a primitive "multicellular" organism that seemingly benefited the interests of all. The servers and software of the Distributed Computing Platform were very much like the primitive stromatolites.

The Rise of Cloud Computing

Now I must admit that I have no experience with Cloud Computing whatsoever because I am an old "legacy" mainframe programmer who has now become an old "legacy" Distributed Computing Platform person. But this is where softwarephysics comes in handy because it provides an unchanging theoretical framework. As I explained in The Fundamental Problem of Software, the second law of thermodynamics is not going away, and the fact that software must operate in a nonlinear Universe is not going away either, so although I may not be an expert in Cloud Computing, I do know enough softwarephysics to sense that a dramatic environmental change is taking place, and I do know from firsthand experience what comes next. So let me offer some advice to younger IT professionals on that basis.

The alternative to the Distributed Computing Platform is the Cloud Computing Platform, which is usually displayed as a series of services stacked into levels. The highest level, SaaS (Software as a Service), runs common third-party office software like Microsoft Office 365 and email. The second level, PaaS (Platform as a Service), is where the custom business software resides, and the lowest level, IaaS (Infrastructure as a Service), provides an abstract tier of virtual servers and other resources that automatically scale with varying load levels. From an Applications Development standpoint, the PaaS level is the most interesting because that is where developers will be installing the custom application software used to run the business, and also to run the high-volume corporate websites that the company's customers use. Currently, that custom application software is installed into the middleware running on the Unix servers of the Distributed Computing Platform. The PaaS level will be replacing the middleware software that currently does that, such as the Apache webservers and J2EE Application Servers like WebSphere, WebLogic and JBoss. For Operations, the IaaS level, and to a large extent the PaaS level too, are of most interest because those levels will be replacing the middleware and other support software running on hundreds or thousands of individual self-contained servers. The Cloud architecture can be run on a company's own hardware, or it can be run on a timesharing basis on the hardware of Amazon, Microsoft, IBM or other Cloud providers, using the Cloud software that those providers market.

Figure 9 – Cloud Computing returns us to the timesharing days of the 1960s and 1970s by viewing everything as a service.

Basically, the Cloud Computing Platform is based on two defining characteristics:

1. Returning to the timesharing days of the 1960s and 1970s when many organizations could not afford to support a mainframe infrastructure of their own.

2. Taking the multicellular architecture of the Distributed Computing Platform to the next level by using Cloud Platform software to produce a full-blown multicellular organism, and even higher, by introducing the self-organizing behaviors of the social insects like ants and bees.

Let's begin with the timesharing concept first because that should be the most familiar to IT professionals. This characteristic of Cloud Computing is actually not so new. For example, in 1968 my high school ran a line to the Illinois Institute of Technology to connect a local card reader and printer to the Institute's mainframe computer. We had our own keypunch machine to punch up the cards for Fortran programs that we could submit to the distant mainframe which then printed back the results of our program runs. The Illinois Institute of Technology also did the same for several other high schools in my area. This allowed high school students in the area to gain some experience with computers even though their high schools could clearly not afford to maintain a mainframe infrastructure.

Figure 10 - An IBM 029 keypunch machine like the one installed in my high school in 1968.

Figure 11 - Each card could hold a maximum of 80 bytes. Normally, one line of code was punched onto each card.

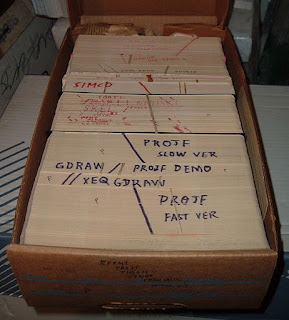

Figure 12 - The cards for a program were held together into a deck with a rubber band, or for very large programs, the deck was held in a special cardboard box that originally housed blank cards. Many times the data cards for a run followed the cards containing the source code for a program. The program was compiled and linked in two steps of the run and then the generated executable file processed the data cards that followed in the deck.

Figure 13 - To run a job, the cards in a deck were fed into a card reader, as shown on the left above, to be compiled, linked, and executed by a million-dollar mainframe computer back at the Illinois Institute of Technology. In the above figure, the mainframe is located directly behind the card reader.

Figure 14 - The output of Fortran programs run at the Illinois Institute of Technology was printed locally at my high school on a line printer.

Similarly, when I first joined the IT department of Amoco in 1979, we were using GE Timeshare for all of our interactive programming needs. GE Timeshare was running VM/CMS on mainframes, and we would connect to the mainframe using a 300 baud acoustic coupler with a standard telephone. You would first dial GE Timeshare with your phone, and when you heard the strange "BOING - BOING - SHHHHH" sounds from the mainframe, you would jam the telephone receiver into the acoustic coupler so that you could log in to the mainframe from a "glass teletype" dumb terminal.

Figure 15 – To timeshare, we connected to GE Timeshare over a 300 baud acoustic coupler modem.

Figure 16 – Then we did interactive computing on VM/CMS using a dumb terminal.

But timesharing largely went out of style in the 1970s, when many organizations began to create their own datacenters and run their own mainframes within them. They did so because with timesharing you paid the timesharing provider by the CPU-second and by the byte of disk space, and that became expensive as timesharing consumption rose. Timesharing also limited flexibility because businesses were bound by the constraints of their timesharing providers. For example, during the early 1980s, Amoco created 31 VM/CMS datacenters around the world and tied them all together with communications lines to support the interactive programming needs of the business. This network of 31 VM/CMS datacenters was called the Corporate Timeshare System, and it allowed end-users around the world to communicate with each other using an office system that Amoco developed in the late 1970s called PROFS (Professional Office System). PROFS had email, calendaring and document sharing software that IBM later sold as a product called OfficeVision. Essentially, this provided Amoco with a SaaS layer in the early 1980s.
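The economics behind that move can be sketched with a toy cost model. In the Python sketch below, the rates, the disk size and the monthly cost of running an in-house datacenter are all hypothetical numbers chosen purely for illustration, since the text gives no actual prices. What it shows is that a metered timesharing bill grows linearly with consumption while an owned datacenter is roughly a fixed cost, so beyond some break-even level of CPU usage, owning becomes cheaper.

```python
from dataclasses import dataclass


@dataclass
class TimeshareRates:
    """Hypothetical metered prices; the post does not give actual timesharing rates."""
    per_cpu_second: float  # dollars charged per CPU-second
    per_mb_month: float    # dollars charged per megabyte of disk per month


def timeshare_cost(rates: TimeshareRates, cpu_seconds: float, disk_mb: float) -> float:
    """Monthly timesharing bill when paying by the CPU-second and by the byte."""
    return cpu_seconds * rates.per_cpu_second + disk_mb * rates.per_mb_month


def break_even_cpu_seconds(rates: TimeshareRates, disk_mb: float,
                           own_datacenter_monthly: float) -> float:
    """CPU-seconds per month above which a fixed-cost in-house datacenter is cheaper."""
    # Subtract the disk charges; what is left of the fixed cost is the CPU-time budget.
    cpu_budget = own_datacenter_monthly - disk_mb * rates.per_mb_month
    return max(0.0, cpu_budget / rates.per_cpu_second)


if __name__ == "__main__":
    rates = TimeshareRates(per_cpu_second=0.50, per_mb_month=2.00)  # hypothetical rates
    threshold = break_even_cpu_seconds(rates, disk_mb=1000, own_datacenter_monthly=50_000)
    print(f"Owning is cheaper above {threshold:,.0f} CPU-seconds per month")
    for usage in (20_000, 96_000, 200_000):
        print(usage, timeshare_cost(rates, usage, 1000))
```

The same linear-versus-fixed reasoning sits behind the question of whether corporations will stay with Cloud providers, although real Cloud pricing has many more dimensions than this two-rate model.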

So for IT professionals in Operations, the main question is whether corporations will initially move to a Cloud Computing Platform hosted by Cloud providers, then become dissatisfied with those providers, as they did with the timesharing providers of the 1970s, and go on to create their own private corporate Cloud platforms. Or will corporations keep using the Cloud Computing Platforms of Cloud providers on a timesharing basis? It could also go the other way. Corporations might start up their own Cloud platforms within their existing datacenters, but then decide that it would be cheaper to move to a Cloud platform hosted by a Cloud provider. Right now, it is too early to know how Cloud Computing will unfold.

The second characteristic of Cloud Computing is more exciting. All of the major software players are currently investing heavily in the PaaS and IaaS levels of Cloud Computing, which promise to reduce the complexity and difficulty we had in running software with a multicellular organization on the Distributed Computing Platform (see Software Embryogenesis for more on this). The PaaS software makes the underlying IaaS hardware look much like a huge integrated mainframe, and the IaaS level can spawn new virtual servers on the fly as the processing load increases, much like new worker ants can be spawned as needed within an ant colony. The PaaS level will still consist of billions of objects (cells) running in a multicellular manner, but the objects will be running in a much more orderly fashion, using a PaaS architecture that is more like the architecture of a true multicellular organism, or more accurately, a colony of social multicellular organisms.

Figure 17 – The IaaS level of Cloud Computing Platform can spawn new virtual servers on the fly, much like new worker ants can be spawned as needed.
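The idea of the IaaS level spawning virtual servers as the load rises, much like an ant colony spawning new workers, can be illustrated with a minimal scaling rule. The Python sketch below assumes a simple target-tracking policy: each virtual server is assumed to comfortably handle a fixed number of requests per second, and the desired server count is the load divided by that capacity, rounded up and clamped between a floor and a ceiling. The class and parameter names are hypothetical and do not correspond to any particular Cloud provider's API; real autoscalers also add cooldown periods and smoothing so that they do not thrash on short load spikes.

```python
import math
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    """Hypothetical target-tracking policy: size the server pool to the current load."""
    capacity_per_server: float  # requests/second one virtual server handles comfortably
    min_servers: int = 1        # floor, so there is always some capacity available
    max_servers: int = 100      # ceiling, so a runaway spike cannot spawn servers without limit

    def desired_servers(self, load: float) -> int:
        # Like spawning worker ants: enough servers to carry the current load.
        needed = math.ceil(load / self.capacity_per_server) if load > 0 else 0
        # Clamp the result to the configured floor and ceiling.
        return max(self.min_servers, min(self.max_servers, needed))


def simulate(policy: ScalingPolicy, loads: list[float]) -> list[int]:
    """Return the server count the policy would choose for each observed load."""
    return [policy.desired_servers(load) for load in loads]


if __name__ == "__main__":
    policy = ScalingPolicy(capacity_per_server=250.0, min_servers=2, max_servers=20)
    hourly_load = [100, 400, 1200, 4800, 9000, 700]  # hypothetical requests/second
    print(simulate(policy, hourly_load))
```

With a capacity of 250 requests per second per server and limits of 2 to 20 servers, loads of 100, 1200 and 9000 requests per second map to 2, 5 and 20 servers respectively, and the pool shrinks again as the load falls off.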

How to Survive a Software Mass Extinction

Softwarephysics suggests always looking to the biosphere for solutions to IT problems. To survive a mass extinction, the best things to do are:

1. Avoid those environments that experience the greatest change during a mass extinction event.

2. Be preadapted to the new environmental factors arising from the mass extinction.

For example, during the Permian-Triassic mass extinction, 95% of marine species went extinct, while only 70% of land-based species did, so there was a distinct advantage in being a land-dweller. This was probably because the high levels of carbon dioxide in the Earth's atmosphere made the oceans more acidic than usual, and most marine life simply could not escape the adverse effects of the increased acidity. And if you were a land-dweller, being preadapted to the low level of oxygen in the Earth's atmosphere was necessary to survive. For example, after the Permian-Triassic mass extinction, about 95% of land-based vertebrates were members of the genus Lystrosaurus. Such dominance has never happened before or since. It is thought that Lystrosaurus was a barrel-chested creature, about the size of a pig, that lived in deep burrows underground. This allowed Lystrosaurus to escape the extreme heat of the day, and it was also preadapted to living in the low oxygen levels found in deep burrows filled with stale air.

Figure 18 – After the Permian-Triassic mass extinction, Lystrosaurus accounted for 95% of all land-based vertebrates.

The above strategies worked well for me during the Mainframe → Distributed Computing Platform mass extinction 25 years ago. By exiting the mainframe environment as quickly as possible and preadapting myself to writing C and C++ code on Unix servers, I was able to survive it. So what is the best strategy for surviving the Distributed Computing Platform → Cloud Computing Platform mass extinction? The first step is to determine which IT environments will be most affected. Obviously, IT professionals in Operations supporting the current Distributed Computing Platform will be impacted most severely. People in Applications Development will probably feel the least strain. In fact, the new Cloud Computing Platform will most likely just make their lives easier, because most of the friction in moving code to Production will be greatly reduced when code is deployed to the PaaS level. Similarly, mainframe developers and Operations people should pull through okay, because whatever allowed them to survive the Mainframe → Distributed Computing Platform mass extinction 25 years ago should still be in play. So the real hit will be for "legacy" Operations people supporting the Distributed Computing Platform as it disappears. For those folks, the best thing would be to head back to Applications Development if possible. Otherwise, preadapt yourselves to the new Cloud Computing Platform as quickly as possible. The trouble is that there are many players producing software for the Cloud Computing Platform, so it is difficult to choose which product to pursue. If your company is building its own Cloud Computing Platform, try to be part of that effort. The odds are that your company will not forsake its own Cloud for an external Cloud provider. But don't forget that the Cloud Computing Platform will need far fewer Operations staff than the Distributed Computing Platform, even for a company running its own Cloud.

Those Operations people who first adapt to the new Cloud Computing Platform will most likely be the Lystrosaurus of the new Cloud Computing Platform. So hang on tight for the next few years, and good luck to all!

Comments are welcome at scj333@sbcglobal.net

To see all posts on softwarephysics in reverse order go to:

https://softwarephysics.blogspot.com/

Regards,

Steve Johnston
