If you think of yourself as a very ordinary IT professional who simply struggles with the complexities of software every day, you most likely do not see yourself as part of a grand mass movement of IT professionals saving the world from climate change.

Figure 1 - Above is a typical office full of clerks in the 1950s. Just imagine how much time and energy were required in a world without software to simply process all of the bank transactions, insurance premiums and claims, stock purchases and sales and all of the other business transactions in a single day.

But nearly all forms of software make things more efficient and that helps to curb climate change. Still, at times you might feel a bit disheartened with your IT career because it does not seem that it is making much of a difference at all. If so, as I pointed out in How To Cope With the Daily Mayhem of Life in IT, you really need to take some advice from Jayme Edwards.

Jayme Edwards' The Healthy Software Developer YouTube Home Page:

https://www.youtube.com/c/JaymeEdwardsMedia/featured

Jayme Edwards' The Healthy Software Developer YouTube videos:

https://www.youtube.com/c/JaymeEdwardsMedia/videos

The point is that being an underappreciated IT professional can sometimes become overwhelming. Nobody seems to understand just how difficult your job is and nobody seems to recognize the value to society that you deliver on a daily basis. I can fully appreciate such feelings because, if you have been following this blog on softwarephysics, you know that I am a 70-year-old former geophysicist and now a retired IT professional with more than 50 years of programming experience. At this point in my life, I also have many misgivings, but since I am nearing the end, I mainly just want to know if we are going to make it as the very first carbon-based form of Intelligence in this galaxy to finally bring forth a machine-based form of Intelligence to explore and make our Milky Way galaxy a safer place for Intelligences to thrive in for trillions of years into the future. For more on that see How Advanced AI Software Could Come to Dominate the Entire Galaxy Using Light-Powered Stellar Photon Sails and SETS - The Search For Extraterrestrial Software.

As you all know, I am obsessed with the fact that we see no signs of Intelligence in our Milky Way galaxy after more than 10 billion years of chemical evolution that should have brought forth a carbon-based or machine-based Intelligence to dominate the galaxy. All of the science that we now have in our possession would seem to indicate that we should see the effects of Intelligence in all directions but none are to be seen. Such thoughts naturally lead to Fermi's Paradox first proposed by Enrico Fermi over lunch one day in 1950:

Fermi’s Paradox - If the universe is just chock full of intelligent beings, why do we not see any evidence of their existence?

I have covered many explanations in other postings such as: The Deadly Dangerous Dance of Carbon-Based Intelligence, A Further Comment on Fermi's Paradox and the Galactic Scarcity of Software, Some Additional Thoughts on the Galactic Scarcity of Software, SETS - The Search For Extraterrestrial Software, The Sounds of Silence the Unsettling Mystery of the Great Cosmic Stillness, Last Call for Carbon-Based Intelligence on Planet Earth and Swarm Software and Killer Robots.

But in this posting, I would like to explain how the development and operation of sophisticated modeling software at the startup First Light Fusion may be on the verge of saving our planet and, ultimately, of making it possible for machine-based Intelligence to finally leave this planet and begin to navigate throughout our galaxy.

The Impact of Simulation Software on the Sciences, Engineering and Our Current Way of Life

In the modern world, it is difficult to fully appreciate the impact that simulation software has made on the way we now conduct scientific research and then apply the fruits of that research to engineer a new way of life. Simulation software now lets us explore things that we have not yet built, and even optimize them for performance and longevity before we have begun to build them. In this posting, we will see how simulation software at First Light Fusion seems to be rapidly making it possible to build practical fusion reactors for the generation of energy within only about 10 years.

But such has not always been the case. Having been born in 1951, a few months after the United States government installed its very first commercial computer, a UNIVAC I, for the Census Bureau on June 14, 1951, I can still remember a time from my early childhood when there essentially was no software in the world at all. The UNIVAC I was 25 feet by 50 feet in size and contained 5,600 vacuum tubes, 18,000 crystal diodes and 300 relays with a total memory of 12 KB. From 1951 to 1958, a total of 46 UNIVAC I computers were built and installed.

Figure 2 – The UNIVAC I was very impressive on the outside.

Figure 3 – But the UNIVAC I was a little less impressive on the inside.

Back in the 1950s, scientists and engineers first began to use computers to analyze experimental data and perform calculations, essentially using computers as souped-up slide rules to do data reduction calculations.

Figure 4 – Back in 1973, I obtained a B.S. in Physics from the University of Illinois in Urbana solely with the aid of my trusty slide rule. I used to grease up my slide rule with vaseline just before physics exams to speed up my calculating abilities during the test.

But by the late 1960s, computers had advanced to the point where scientists and engineers began to use them to perform simulated experiments on things that previously had to be physically constructed in a lab. This dramatically helped to speed up research because it was much easier to create a software simulation of a physical system, and perform simulated experiments on it, than to actually build the physical system itself in the lab. This revolution in the way science was done personally affected me. I finished up my B.S. in physics at the University of Illinois in Urbana, Illinois, in 1973 with the sole support of my trusty slide rule, but fortunately, I did take a class in FORTRAN programming my senior year. I then immediately began work on an M.S. degree in geophysics at the University of Wisconsin at Madison. For my thesis, I worked with a group of graduate students who were shooting electromagnetic waves into the ground to model the conductivity structure of the Earth’s upper crust. We were using the Wisconsin Test Facility (WTF) of Project Sanguine to send very low-frequency electromagnetic waves, with a bandwidth of about 1 – 100 Hz, into the ground, and then we measured the reflected electromagnetic waves in cow pastures up to 60 miles away. All this information has been declassified and can be downloaded from the Internet at: http://www.fas.org/nuke/guide/usa/c3i/fs_clam_lake_elf2003.pdf. Project Sanguine built an ELF (Extremely Low-Frequency) transmitter in northern Wisconsin and another transmitter in northern Michigan in the 1970s and 1980s. The purpose of these ELF transmitters was to send messages to the U.S. nuclear submarine fleet at a frequency of 76 Hz. These very low-frequency electromagnetic waves can penetrate the highly conductive seawater of the oceans to a depth of several hundred feet, allowing the submarines to remain at depth, rather than coming close to the surface for radio communications. 
You see, normal radio waves in the Very Low-Frequency (VLF) band, at frequencies of about 20,000 Hz, only penetrate seawater to a depth of 10 – 20 feet. This ELF communications system became fully operational on October 1, 1989, when the two transmitter sites began synchronized transmissions of ELF broadcasts to the U.S. nuclear submarine fleet.
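The contrast between the 76 Hz ELF signal and the 20,000 Hz VLF signal follows from the electromagnetic skin depth, the depth at which a wave's amplitude in a good conductor falls to 1/e of its surface value, δ = sqrt(2/(ωμσ)). Here is a minimal Python sketch, assuming a typical seawater conductivity of about 4 S/m (an assumed round value, not a figure taken from the Project Sanguine documents):

```python
import math

MU0 = 4e-7 * math.pi      # permeability of free space (H/m)
SIGMA_SEAWATER = 4.0      # assumed typical seawater conductivity (S/m)
FEET_PER_METRE = 3.28084

def skin_depth_m(freq_hz, sigma=SIGMA_SEAWATER):
    """Depth (m) at which a plane EM wave's amplitude falls to 1/e in a good conductor."""
    omega = 2 * math.pi * freq_hz
    return math.sqrt(2.0 / (omega * MU0 * sigma))

for label, f in [("ELF, 76 Hz", 76.0), ("VLF, 20 kHz", 20_000.0)]:
    d = skin_depth_m(f)
    print(f"{label}: skin depth ~ {d:.1f} m ({d * FEET_PER_METRE:.0f} ft)")
```

This gives a skin depth of roughly 29 m (about 95 feet) at 76 Hz and under 2 m (about 6 feet) at 20,000 Hz. Each skin depth cuts the signal amplitude by a factor of e, so a receiver can work a few skin depths down, depending on transmitter power and receiver sensitivity, which is consistent with several hundred feet for ELF versus 10 – 20 feet for VLF.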

Anyway, back in the summers of 1973 and 1974, our team was collecting electromagnetic data from the WTF using a DEC PDP-8/e minicomputer. The machine cost $30,000 in 1973 (about $224,000 in 2022 dollars) and was about the size of a large side-by-side refrigerator, with 32 KB of magnetic core memory. We actually hauled this machine through the lumber trails of the Chequamegon National Forest in Wisconsin and powered it with an old diesel generator to digitally record the reflected electromagnetic data in the field. For my thesis, I then created models of the Earth’s upper conductivity structure down to a depth of about 20 km, using programs written in BASIC. The beautiful thing about the DEC PDP-8/e was that the computer time was free, so I could play around with different models until I got a good fit for what we recorded in the field. This made me realize that one could truly use computers to do simulated experiments that uncover real knowledge: take the fundamental laws of the Universe, really the handful of effective theories that we currently have, like Maxwell's equations, simulate those equations in computer code, let them unfold in time, and actually see the emerging behaviors of complex systems arise in a simulated Universe. All the sciences now do this routinely, but back in 1974, it was quite a surprise for me.
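To give a flavor of that "try a model, compare it with the field data, try another" loop, here is a hedged Python sketch. It is not the BASIC code from my thesis, and it does not model the controlled ELF source we actually used; as a simplified stand-in, it uses the standard recursion for the plane-wave (magnetotelluric) response of a one-dimensional layered Earth. The layer resistivities, thicknesses and frequencies are hypothetical, and the "observed" data are synthetic values generated from a made-up true model:

```python
import cmath
import math

MU0 = 4e-7 * math.pi  # permeability of free space (H/m)

def apparent_resistivity(resistivities, thicknesses, freq):
    """Plane-wave apparent resistivity (ohm-m) over a 1D layered Earth.

    resistivities: ohm-m for each layer, top to bottom; the last value is the
    half-space beneath. thicknesses: metres for every layer except the last.
    """
    w = 2 * math.pi * freq
    # Impedance at the top of the bottom half-space.
    z = cmath.sqrt(1j * w * MU0 * resistivities[-1])
    # Carry the impedance upward through each overlying layer.
    for rho, h in zip(reversed(resistivities[:-1]), reversed(thicknesses)):
        k = cmath.sqrt(1j * w * MU0 / rho)    # propagation constant in the layer
        zi = k * rho                           # intrinsic impedance of the layer
        r = (zi - z) / (zi + z) * cmath.exp(-2 * k * h)
        z = zi * (1 - r) / (1 + r)
    return abs(z) ** 2 / (w * MU0)

def misfit(model, freqs, observed):
    """Sum of squared log10 differences between modelled and observed data."""
    rhos, hs = model
    return sum((math.log10(apparent_resistivity(rhos, hs, f)) - math.log10(obs)) ** 2
               for f, obs in zip(freqs, observed))

freqs = [1, 3, 10, 30, 100]                 # roughly the 1 - 100 Hz band
true_model = ([1000, 100], [5000])          # hypothetical 5 km resistive layer
observed = [apparent_resistivity(*true_model, f) for f in freqs]  # synthetic data

# Trial and error: try every candidate model and keep the best fit.
candidates = [([r1, r2], [h])
              for r1 in (300, 1000, 3000)
              for r2 in (30, 100, 300)
              for h in (2000, 5000, 10000)]
best = min(candidates, key=lambda m: misfit(m, freqs, observed))
print("best-fitting model:", best)
```

Because the "observed" values here are synthetic and noise-free, the search recovers the hypothetical true model exactly; real field data never fit this cleanly, and a modern code would use an automated inversion rather than a small grid of guesses.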

Figure 5 – Some graduate students huddled around a DEC PDP-8/e minicomputer. Notice the teletype machines in the foreground on the left that were used to input code and data into the machine and to print out results as well.

Why Do We Need Fusion Energy If Wind and Solar Are Now So Cheap?

Nick Hawker, the co-founder of First Light Fusion, explains that successfully fighting climate change is not a matter of finding the cheapest source of energy. We have already done that with the advances that we have made with solar panels and with using wind turbines and chemical batteries to back up solar farms when the Sun is not shining. The problem is that we cannot make solar panels, wind turbines and batteries fast enough to keep up with the current rise in energy demand. Solar photons only have about 2 eV of energy, and chemical batteries can only store about 2 eV of energy per atom, so it would take a huge number of solar panels, wind turbines and batteries to make the world carbon neutral today, and we could not possibly keep up with the exponential demand for more energy. To do that, we need a concentrated form of energy, and that can only come from nuclear energy. For example, when you fission a uranium-235 atom, or a thorium atom that has been converted to uranium-233, you get 200 million eV of energy. Similarly, when you fuse a tritium atom of hydrogen with a deuterium atom of hydrogen, you get 17.6 million eV of energy, or 8.8 million eV per atom of fuel. Compare that to the 2 eV of energy per atom that you get from chemically burning coal or oil! Nuclear fuels have an energy density that is millions of times greater than that of chemical fuels, and that is what we need to fix climate change on the Earth.
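The "millions of times" figure can be checked per kilogram of fuel. This is a rough Python sketch; the 4.08 eV per carbon atom comes from the standard heat of combustion of carbon (about 393.5 kJ/mol), counts only the carbon and not the oxygen it burns with, and stands in for coal in general:

```python
EV_TO_J = 1.602176634e-19       # joules per electron volt
AMU_TO_KG = 1.66053906660e-27   # kilograms per atomic mass unit

# energy released per event (eV), fuel mass consumed per event (atomic mass units)
FUELS = {
    "carbon (burned)": (4.08, 12.011),   # oxygen mass not counted
    "U-235 fission": (200e6, 235.04),
    "D-T fusion": (17.6e6, 2.014 + 3.016),
}

def joules_per_kg(ev_per_event, amu_per_event):
    """Energy released per kilogram of fuel consumed."""
    return ev_per_event * EV_TO_J / (amu_per_event * AMU_TO_KG)

density = {name: joules_per_kg(*vals) for name, vals in FUELS.items()}
for name, j_per_kg in density.items():
    ratio = j_per_kg / density["carbon (burned)"]
    print(f"{name:16s} {j_per_kg:9.2e} J/kg  ({ratio:,.0f} times carbon)")
```

Per kilogram, fission comes out roughly 2.5 million times ahead of burning carbon, and D-T fusion roughly 10 million times. The per-atom advantage is larger because a uranium atom is about 20 times as heavy as a carbon atom.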

Now, as I pointed out in The Deadly Dangerous Dance of Carbon-Based Intelligence and Last Call for Carbon-Based Intelligence on Planet Earth, we already have the technology to proceed with mass-producing molten salt nuclear reactors that can fission uranium, plutonium and thorium atoms to release 200 million eV of energy per atom, as opposed to the 2 eV of energy per atom that we obtain from burning fossil fuels. That is a per-atom advantage of 100 million! However, the downside of molten salt nuclear reactors is that they generate about two golf balls' worth of radioactive waste to power an American lifestyle for 100 years, and that nuclear waste needs to be safely stored for about 300 years before it is no more radioactive than coal ash. But we already know how to safely store books, buildings and paintings for more than 300 years, so I really do not see that as much of a big deal. Many people do, though, and that seems to have dramatically hampered the development of nuclear energy. The world could have gone 100% nuclear with molten salt nuclear reactors in the 1980s and avoided the climate change disaster we are now facing and all of the wars that were fought over oil and natural gas for the past 40 years. The main reason we need fusion power is that we need a form of nuclear energy that does not produce long-lived nuclear waste, and that is what fusion energy provides. We have also run out of time and cannot possibly fix climate change fast enough with 2 eV technologies because they simply require too much stuff to be quickly built. We are also going to need huge amounts of energy to geoengineer the Earth back to an atmosphere with 350 ppm of carbon dioxide, and 2 eV technologies cannot do that. That is because the Arctic is already rapidly defrosting with our current atmosphere of 415 ppm of carbon dioxide.
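Where does a figure like 300 years come from? Two of the most troublesome fission products, strontium-90 and cesium-137, have half-lives of roughly 29 and 30 years, so 300 years is about ten half-lives. A minimal Python sketch of that decay arithmetic (it only tracks these two isotopes and makes no attempt to model the full waste inventory or the comparison with coal ash):

```python
# Approximate half-lives (years) of two major medium-lived fission products.
HALF_LIVES = {"strontium-90": 28.8, "cesium-137": 30.1}

def fraction_remaining(years, half_life):
    """Fraction of a radioactive isotope left after the given number of years."""
    return 0.5 ** (years / half_life)

remaining = {iso: fraction_remaining(300, t) for iso, t in HALF_LIVES.items()}
for iso, frac in remaining.items():
    print(f"{iso}: {frac:.4f} of its original activity remains after 300 years")
```

After 300 years, only about one thousandth of each isotope's original activity is left.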

The Danger of Defrosting the Arctic

We have already tripped several climate tipping points by generating an atmosphere with 415 ppm of carbon dioxide and we are still increasing that level by 2.3 ppm each year no matter what we pretend to do about climate change. For more about tripping nonlinear climate tipping points see Using Monitoring Data From Website Outages to Model Climate Change Tipping Point Cascades in the Earth's Climate.

Figure 6 - The permafrost of the Arctic is melting and releasing methane and carbon dioxide from massive amounts of ancient carbon that were deposited over the past 2.5 million years during the Ice Ages of the frigid Pleistocene.

But the worst problem, by far, with the Arctic defrosting, is methane gas. Methane gas is a powerful greenhouse gas. Eventually, methane degrades into carbon dioxide and water molecules, but over a 20-year period, methane traps 84 times as much heat in the atmosphere as carbon dioxide. About 25% of current global warming is due to methane gas. Natural gas is primarily methane gas with a little ethane mixed in, and it comes from decaying carbon-based lifeforms. Now here is the problem. For the past 2.5 million years, during the frigid Pleistocene, the Earth has been building up a gigantic methane bomb in the Arctic. Every summer, the Earth has been adding another layer of dead carbon-based lifeforms to the permafrost areas in the Arctic. That summer layer does not entirely decompose but gets frozen into the growing stockpile of carbon in the permafrost.

Figure 7 – Melting huge amounts of methane hydrate ice could release massive amounts of methane gas into the atmosphere.

The Earth has also been freezing huge amounts of methane gas into a solid called methane hydrate on the floor of the Arctic Ocean. Methane hydrate is an ice-like solid composed of water molecules that trap methane molecules within their frozen lattice. As the Arctic warms, this methane hydrate melts, and the released methane gas bubbles up to the surface.

This is very disturbing because in Could the Galactic Scarcity of Software Simply be a Matter of Bad Luck? we covered Professor Toby Tyrrell's computer-simulated research that suggests that our Earth may be a very rare "hole in one" planet that was able to maintain a habitable surface for 4 billion years by sheer luck. Toby Tyrrell's computer simulations indicate that the odds of the Earth turning into another Venus or Mars are quite high given the right set of perturbations. Toby Tyrrell created a computer simulation of 100,000 Earth-like planets to see if planets in the habitable zone of a star system could maintain a surface temperature that could keep water in a liquid form for 3 billion years. The computer-simulated 100,000 Earth-like planets were created with random positive and negative feedback loops that controlled the temperature of the planet's surface. Each planet also had a long-term forcing parameter acting on its surface temperature. For example, our Sun is a star on the main sequence that is getting 1% brighter every 100 million years as the amount of helium in the Sun's core increases. Helium is four times denser than hydrogen and as the Sun's core turns hydrogen into helium its density and gravitational pull increase so its fusion rate has to increase to produce a hotter core that can resist the increased gravitational pull of its core. Each planet was also subjected to random perturbations of random strength that could temporarily alter the planet's atmospheric temperature like those from asteroid strikes or periods of enhanced volcanic activity. This study again demonstrates the value of scientific simulation software. The key finding from this study can be summed up by Toby Tyrrell as:

Out of a population of 100,000, ~9% of planets (8,710) were successful at least once, but only 1 planet was successful on all 100 occasions. Success rates of individual planets were not limited to 0% or 100% but instead spanned the whole spectrum. Some planets were successful only 1 time in 100, others 2 times, and so on. All degrees of planet success are seen to occur in the simulation. It can be seen, as found in a previous study, that climate stabilisation can arise occasionally out of randomness - a proportion of the planets generated by the random assembly procedure had some propensity for climate regulation.

Toby Tyrrell's computer simulation of 100,000 Earth-like planets found that when 100,000 Earth-like planets were each run through 100 iterations with random, but realistic, values for the model parameters, about 9% of them maintained a habitable temperature for 3 billion years for at least 1 of the 100 runs. Some models had 1 successful run and others had 2 or more successful runs. The astounding finding was that only 1 of the 100,000 models was successful for all 100 runs! This study would suggest that the Earth may not be rare because of its current habitable conditions. Rather, the Earth may be rare because it was able to maintain a habitable surface temperature for about 4 billion years and become a Rare Earth with complex carbon-based life having Intelligence.
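Tyrrell's actual model is not reproduced here. But to make the idea of "randomly assembled planets, each run many times" concrete, here is a deliberately tiny Python toy loosely inspired by the description above. Everything in it is a hypothetical assumption: the 0 to 100 "habitable" temperature band, the piecewise-linear random feedback curve, the slowly growing forcing term and the random shocks. It will not reproduce Tyrrell's numbers; it only shows how the same planet can survive some runs and fail others:

```python
import random

T_MIN, T_MAX = 0.0, 100.0          # toy habitable range (arbitrary units)
N_NODES = 11                       # feedback strength defined every 10 units
NODE_STEP = (T_MAX - T_MIN) / (N_NODES - 1)
N_RUNS = 20                        # histories simulated per planet

def make_planet(rng):
    """A random feedback curve (dT/dt at each node) plus a slow forcing trend."""
    feedback = [rng.uniform(-1.0, 1.0) for _ in range(N_NODES)]
    forcing_rate = rng.uniform(-0.005, 0.005)   # e.g. a slowly brightening star
    return feedback, forcing_rate

def feedback_at(feedback, T):
    """Linearly interpolate the planet's feedback curve at temperature T."""
    i = min(int((T - T_MIN) / NODE_STEP), N_NODES - 2)
    frac = (T - T_MIN - i * NODE_STEP) / NODE_STEP
    return feedback[i] + frac * (feedback[i + 1] - feedback[i])

def run_once(planet, rng, steps=200, p_kick=0.05, kick_sd=10.0):
    """One history: True if T never leaves the habitable range."""
    feedback, forcing_rate = planet
    T = 50.0
    for step in range(steps):
        T += feedback_at(feedback, T) + forcing_rate * step
        if rng.random() < p_kick:            # random shock: impact, volcanism
            T += rng.gauss(0.0, kick_sd)
        if not T_MIN <= T <= T_MAX:
            return False
    return True

def simulate(n_planets=200, n_runs=N_RUNS, seed=1):
    """Number of successful runs (out of n_runs) for each random planet."""
    rng = random.Random(seed)
    results = []
    for _ in range(n_planets):
        planet = make_planet(rng)
        results.append(sum(run_once(planet, rng) for _ in range(n_runs)))
    return results

counts = simulate()
print("successful at least once:", sum(c > 0 for c in counts))
print("successful every time:   ", sum(c == N_RUNS for c in counts))
```

The structure mirrors the experiment described above (many random planets, many histories each, count successes per planet), but the numbers it prints depend entirely on the made-up parameters.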

The end result is that if we keep doing what we are doing, there is the possibility of the Earth ending up with a climate similar to the Permian-Triassic greenhouse gas mass extinction 252 million years ago that nearly killed off all complex carbon-based life on the planet. A massive flood basalt known as the Siberian Traps covered an area about the size of the continental United States with several thousand feet of basaltic lava, with eruptions that lasted for about one million years. Flood basalts, like the Siberian Traps, are thought to arise when large plumes of hotter than normal mantle material rise from near the mantle-core boundary of the Earth and break to the surface. This causes a huge number of fissures to open over a very large area that then begin to disgorge massive amounts of basaltic lava over a very large region. After the eruptions of basaltic lava began, it took about 100,000 years for the carbon dioxide that bubbled out of the basaltic lava to dramatically raise the level of carbon dioxide in the Earth's atmosphere and initiate the greenhouse gas mass extinction. This led to an Earth with a daily high of 140 °F and purple oceans choked with hydrogen-sulfide-producing bacteria, producing a dingy green sky over an atmosphere tainted with toxic levels of hydrogen sulfide gas and an oxygen level of only about 12%. The Permian-Triassic greenhouse gas mass extinction killed off about 95% of marine species and 70% of land-based species, and dramatically reduced the diversity of the biosphere for about 10 million years. It took a full 100 million years to recover from it.

Figure 8 - Above is a map showing the extent of the Siberian Traps flood basalt. The above area was covered by flows of basaltic lava to a depth of several thousand feet.

Figure 9 - Here is an outcrop of the Siberian Traps formation. Notice the sequence of layers. Each new layer represents a massive outflow of basaltic lava that brought greenhouse gases to the surface.

Dr. Susan Natali is the Arctic Program Director at the Woodwell Climate Research Center and is leading a program to actually measure the methane and carbon dioxide emissions arising from the defrosting Arctic permafrost so that those emissions can be accounted for in society's total emissions of greenhouse gases. For more on the dangers of defrosting Arctic carbon releasing methane and carbon dioxide gases, see her TED talk at:

How Ancient Arctic Carbon Threatens Everyone on the Planet | Sue Natali

https://www.youtube.com/watch?v=r9lDDetKMi4

Susan Natali's webpage at the Woodwell Climate Research Center is:

https://www.woodwellclimate.org/staff/susan-natali/

The point is that we will need huge amounts of energy to geoengineer the Earth back to a level of 350 ppm of carbon dioxide from the current level of 415 ppm. Thanks to the second law of thermodynamics, we will probably need more energy to fix the planet than we obtained from the burning of the huge amounts of carbon that we burned since the Industrial Revolution began. Yes, renewable energy from wind and solar can be used to help stop dumping more carbon dioxide into the atmosphere, but removing thousands of gigatons of carbon dioxide from the planet's atmosphere and oceans will require huge amounts of energy, way beyond the 2 eV of solar photons or the 2 eV of energy per atom stored in chemical batteries. We will need the vast energies of nuclear energy to do that. I have already discussed the benefits of releasing the nuclear energy in uranium and thorium atoms in previous posts. So for the remainder of this post, let me focus on the vast amounts of nuclear energy that could be released by fusing the deuterium atoms of hydrogen that are to be found in the waters of the Earth's oceans.
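To put a rough number on just the atmospheric part of that job: a commonly used conversion is that 1 ppm of atmospheric CO2 corresponds to about 2.12 gigatons of carbon, or about 7.8 gigatons of CO2. A minimal Python sketch using the 415 ppm, 350 ppm and 2.3 ppm per year figures from the text:

```python
GTC_PER_PPM = 2.124           # gigatons of carbon per ppm of atmospheric CO2
CO2_PER_C = 44.01 / 12.011    # mass of CO2 per unit mass of carbon

def gt_co2_for_ppm(delta_ppm):
    """Gigatons of CO2 corresponding to a change in atmospheric concentration."""
    return delta_ppm * GTC_PER_PPM * CO2_PER_C

current_ppm, target_ppm, rise_per_year = 415.0, 350.0, 2.3   # figures from the text
print(f"CO2 to remove from the air alone: ~{gt_co2_for_ppm(current_ppm - target_ppm):.0f} Gt")
print(f"CO2 still being added each year:  ~{gt_co2_for_ppm(rise_per_year):.0f} Gt")
```

The atmosphere alone holds roughly 500 gigatons of CO2 above the 350 ppm target, and it is still gaining about 18 gigatons a year. As atmospheric CO2 is drawn down, the oceans and land would release some of the CO2 they have absorbed, so the total that would have to be removed is considerably larger.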

The Prospects of First Light Fusion Mass-Producing Practical Fusion Power Plants in 10 Years

So let us now explore how the development and operation of some sophisticated modeling software at First Light Fusion is helping to save the world by likely making nuclear fusion a practical reality in just a few short years. Here is their corporate website:

First Light Fusion - A New Approach to Fusion

https://firstlightfusion.com/

But before we do that, we need to understand how nuclear fusion can produce vast amounts of energy. Fortunately, we already learned how to make nuclear fusion do that 70 years ago back in 1952 when we detonated the very first hydrogen bomb.

Figure 10 – We have been able to fuse deuterium hydrogen atoms with tritium hydrogen atoms for 70 years, ever since the first hydrogen bomb was tested in 1952. Above we see the test of a hydrogen bomb. But hydrogen bombs are not a very good source of energy because they release the energy of many millions of tons of TNT all at once. The trick is to make very tiny hydrogen bombs that explode every few seconds to power an electrical generation plant. People have been trying to do that for the past 70 years, and they have spent many billions of dollars doing so.

Figure 11 – Our Sun uses the proton-proton chain reaction to turn four hydrogen protons into one helium nucleus composed of two protons and two neutrons, but this reaction requires very high temperatures and pressures to achieve. It also does not produce a very high energy output level in our Sun. Yes, the Sun produces lots of energy in its core but that is just because the Sun's core is so large. The proton-proton reaction also produces some gamma rays that would bother the people who are so worried about radiation at any level of intensity.

Doing fusion is really hard even for stars. The problem is that hydrogen protons have a repelling positive electrical charge and do not like to get close enough together for the strong nuclear force to pull them together. The repulsive electrical force falls off with the square of the distance between the protons, but the strong attractive nuclear force between the protons only works over very short distances. So the problem is how to get hydrogen protons close enough for the attractive strong nuclear force between them to overcome the repulsive electromagnetic force between them. For example, the core of our Sun uses the proton-proton chain reaction, depicted in Figure 11 above, to turn four hydrogen protons into a helium nucleus composed of two protons and two neutrons. The core of the Sun has a density of 150 grams/cubic centimeter, which is 150 times the density of water. So the hydrogen protons of the Sun's core are squeezed very tightly together by the weight of the Sun above. The protons are also bumping around at a very high velocity at a temperature of 15 million K (27 million °F). Yet the very center of the Sun only generates 276.5 watts per cubic meter, and that rapidly drops off to about 6.9 watts per cubic meter at a distance of 19% of a solar radius away from the center. Using a value of 276.5 watts per cubic meter means that you need a little more than four cubic meters of the Sun's core to produce the heat from the little 1200-watt space heater in your bathroom! The resting human body produces about 80 watts of heat, and the volume of a human body is about 0.062 cubic meters. That means a resting human body generates 1,290 watts per cubic meter, or about 4.67 times as much heat per cubic meter as the very center of our Sun! To boost the output of the proton-proton chain reaction to a level useful for the generation of electricity, we would have to compress hydrogen protons to much higher densities, pressures and temperatures. 
The proton-proton chain reaction also produces four gamma rays for each helium nucleus produced, and we all know how the fear of radiation scuttled nuclear fission reactors in the past. So reproducing the Sun will not do. We need to replicate the much easier deuterium-tritium reaction of the hydrogen bomb at a much smaller scale and then explode the very tiny hydrogen bombs every few seconds in a fusion reactor to produce a useful source of energy.
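The arithmetic above is easy to check, and a few more lines show why the Sun's core is so sluggish: the electrical repulsion between two protons at nuclear distances dwarfs their typical thermal energy. In this Python sketch, the separation of about 2 femtometers is a rough assumption for where the strong force takes over, not a precise value:

```python
K_B_EV = 8.617333262e-5        # Boltzmann constant (eV/K)
COULOMB_EV_M = 1.44e-9         # e^2 / (4 pi eps0), about 1.44 eV*nm

core_w_per_m3 = 276.5          # power density at the Sun's centre (from the text)
heater_m3 = 1200.0 / core_w_per_m3
body_w_per_m3 = 80.0 / 0.062   # resting human body (from the text)
print(f"Solar core needed for a 1200 W heater: {heater_m3:.2f} m^3")
print(f"Human body: {body_w_per_m3:.0f} W/m^3 = "
      f"{body_w_per_m3 / core_w_per_m3:.2f} x the Sun's centre")

# Repulsive barrier between two protons brought to within ~2 fm (an assumed
# rough range for the strong force), compared with typical thermal energy kT.
barrier_ev = COULOMB_EV_M / 2e-15
thermal_ev = K_B_EV * 15e6
print(f"Coulomb barrier ~{barrier_ev / 1e3:.0f} keV vs kT ~{thermal_ev / 1e3:.2f} keV")
```

The barrier comes out several hundred times larger than the typical thermal energy at 15 million K, so only rare protons in the high-energy tail, helped by quantum tunneling, ever get close enough to fuse, which is part of why the core's power density is so low.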

How To Build a Hydrogen Bomb

Hydrogen bombs fuse deuterium hydrogen nuclei with tritium hydrogen nuclei. Normal hydrogen has a nucleus composed of a single proton. Deuterium is an isotope of hydrogen and has a nucleus with one proton and one neutron. Tritium is another isotope of hydrogen that is slightly radioactive and has a nucleus composed of one proton and two neutrons. Deuterium can be chemically extracted from ordinary water that has a deuterium concentration of 150 ppm or 1 atom of deuterium per 6400 atoms of regular hydrogen. In other words, each quart (or liter) of water contains only a few drops of deuterium. Still, deuterium can be easily chemically extracted from ordinary water and only costs about $4,000 per kilogram and that price would dramatically drop if it were mass-produced for fusion reactors. The deuterium is a leftover from the Big Bang, but all of the tritium made during the Big Bang is long gone because tritium is radioactive with a half-life of only 12.32 years. Thus, we need to make some fresh tritium for our hydrogen bomb and it costs about $30,000,000 per kilogram to do that! So we need a cheap way of making tritium to build a hydrogen bomb or to run a nuclear fusion reactor that generates electricity.
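As a quick check on the "few drops per liter" figure, and on how much energy that deuterium represents, here is a back-of-the-envelope Python sketch. It assumes that 1 liter of water weighs 1,000 grams and, hypothetically, that every deuterium nucleus is fused with a tritium nucleus at 17.6 million eV per fusion:

```python
AVOGADRO = 6.02214076e23
EV_TO_J = 1.602176634e-19

water_g = 1000.0                      # one liter of water
mol_h = 2 * water_g / 18.015          # two hydrogen atoms per water molecule
mol_d = mol_h / 6400                  # 1 deuterium per 6400 hydrogen atoms (from the text)
grams_d = mol_d * 2.014               # molar mass of deuterium (g/mol)

# Hypothetical: every deuterium nucleus fuses with a tritium nucleus.
energy_j = mol_d * AVOGADRO * 17.6e6 * EV_TO_J
print(f"Deuterium per liter: {grams_d:.3f} g; D-T fusion energy: {energy_j / 1e9:.0f} GJ")
```

This gives about 0.035 grams of deuterium per liter of water and roughly 29 GJ of fusion energy, ignoring all real-world losses and the cost of supplying the tritium.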

Figure 12 – Here is the deuterium-tritium reaction used by the hydrogen bomb. It is much easier to achieve, yields 17.6 million eV of kinetic energy and does not produce the gamma rays of the proton-proton chain reaction, although it does release a high-energy neutron. The deuterium and tritium nuclei each contain a single proton that causes the two nuclei to be repelled by the electromagnetic force, but there are now 5 protons and neutrons colliding, and each of them is drawn to the others by the much stronger, short-ranged strong nuclear force. This makes it much easier to squeeze them together to fuse.

So to build a large hydrogen bomb for a warhead or a very tiny hydrogen bomb for a fusion reactor, all you have to do is squeeze some deuterium and tritium together at a very high temperature and pressure and then quickly get out of the way while they fuse together. But that is not so easy to do. Yes, you can afford to buy some deuterium, but tritium is just way too expensive, and getting them to a very high pressure and temperature so that they fuse together is not very easy either. Now there is a cheap way to make tritium on the fly by bombarding lithium-6 atoms with high-energy neutrons.

Figure 13 – When a high-energy neutron hits a nucleus of lithium-6, it makes a helium nucleus and a nucleus of tritium.

Natural lithium, like the lithium in lithium-ion batteries, has two isotopes. About 7.59% of natural lithium is lithium-6 and 92.41% is lithium-7. To make a hydrogen bomb, all we need to do is to take some pure lithium-6 and then bombard it with energetic neutrons to make tritium on the fly. This would have to be done at a very high temperature and pressure in the presence of some deuterium that we had previously extracted from natural water. So all we need is something that produces high temperatures, high pressures and lots of high-energy neutrons. Now, what could that be? An atomic bomb of course!
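Since each lithium-6 nucleus that captures a neutron yields one tritium nucleus, we can estimate how much lithium a kilogram of tritium represents. This Python sketch is idealized: it assumes every lithium-6 nucleus captures a neutron, which no real bomb or reactor blanket achieves:

```python
LI6_ATOM_FRACTION = 0.0759                        # lithium-6 share of natural lithium (from the text)
M_LI6, M_LI_NATURAL, M_T = 6.015, 6.941, 3.016    # molar masses (g/mol)

def lithium_per_kg_tritium(kg_tritium=1.0):
    """Idealized: one tritium nucleus per lithium-6 nucleus (n + Li-6 -> He-4 + T)."""
    mol_t = kg_tritium * 1000.0 / M_T
    kg_li6 = mol_t * M_LI6 / 1000.0
    # Natural lithium needed to contain that many lithium-6 atoms.
    kg_li_natural = mol_t / LI6_ATOM_FRACTION * M_LI_NATURAL / 1000.0
    return kg_li6, kg_li_natural

li6_kg, natural_kg = lithium_per_kg_tritium()
print(f"lithium-6: {li6_kg:.2f} kg, natural lithium: {natural_kg:.1f} kg per kg of tritium")
```

It comes out to about 2 kg of lithium-6, or about 30 kg of natural lithium, per kilogram of tritium.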

Figure 14 – Above is the basic design for a hydrogen bomb.

The fission fuel core of the fission primary atomic bomb is composed of uranium-235 or plutonium-239. When the hydrogen bomb is detonated, the fission fuel core of the primary is squeezed tightly together by a spherical shell of chemical explosive that causes the fission core to implode and become momentarily tightly compressed to a critical mass. This initiates a chain reaction in the fission core as shown in Figure 15.

Figure 15 - When a neutron hits a uranium-235 nucleus it can split it into two lighter nuclei like Ba-144 and Kr-89 that fly apart at about 40% of the speed of light and two or three additional neutrons. This releases about 200 million eV of energy. The nuclei that fly apart are called fission products that are very radioactive with half-lives of less than 30 years and need to be stored for about 300 years. The additional neutrons can then strike other uranium-235 nuclei, causing them to split as well. Some neutrons can also hit uranium-238 nuclei and turn them into plutonium-239 and plutonium-240 that can also fission when hit by a neutron.

The fission atomic bomb primary produces a great deal of heat energy and high-energy neutrons. The very high temperatures that are generated also produce large amounts of x-rays that travel at the speed of light ahead of the expanding fission bomb explosion. The generated x-rays then vaporize the polystyrene foam housing that holds the fusion secondary in place, and the resulting pressure rapidly compresses the fusion secondary. The fusion fuel in the fusion secondary is a solid material called lithium-6 deuteride, which is composed of lithium-6 and deuterium atoms chemically bonded together. Running down the very center of the fusion secondary is a cylindrical "spark plug" rod composed of uranium-235 or plutonium-239 that gets crushed to criticality by the imploding lithium-6 deuteride. This initiates a fission chain reaction in the central "spark plug" that also releases 200 million eV of energy per fission and lots of high-energy neutrons too. These high-energy neutrons travel out from the "spark plug" rod and bombard the lithium-6 nuclei in the lithium-6 deuteride, forming tritium nuclei at a very high temperature and pressure. The newly formed tritium then fuses with the deuterium in the lithium-6 deuteride, producing 17.6 million eV of energy per fusion and also a very high-energy neutron. Often the whole hydrogen bomb is encased in very cheap natural uranium metal that costs about $50 per kilogram. Natural uranium metal is 99.3% uranium-238 and 0.7% uranium-235. When the outgoing high-energy neutrons from the fusing deuterium and tritium hit the uranium-235 atoms, they immediately fission and release 200 million eV of energy each. These fast neutrons are also energetic enough to directly fission the uranium-238 atoms in the cheap natural uranium, and each of those fissions releases about 200 million eV too. Note that most of the radioactive fallout from a hydrogen bomb comes from the fission products released when the uranium and plutonium atoms fission.
The fusing deuterium and tritium nuclei themselves only produce helium and neutrons, not radioactive fission products, and that is why a nuclear fusion reactor can safely produce energy without producing long-lived nuclear waste. For more details see the Wikipedia article below:

Thermonuclear weapon

https://en.wikipedia.org/wiki/Thermonuclear_weapon
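The 17.6 million eV released per deuterium-tritium fusion comes from the small amount of mass that disappears in the reaction (E = mc²). The Python sketch below recomputes it from standard tabulated atomic masses, which are not figures from the text, along with the energy released when a neutron turns lithium-6 into tritium and helium-4. Atomic masses can be used directly because the electrons balance on both sides of each reaction.

```python
# Energy released (Q-value) by a nuclear reaction, computed from the mass
# defect using standard atomic masses in unified atomic mass units (u).
MEV_PER_U = 931.494  # energy equivalent of 1 u of mass, in MeV

ATOMIC_MASS_U = {
    "n": 1.008664916,    # neutron
    "D": 2.014101778,    # deuterium
    "T": 3.016049281,    # tritium
    "He4": 4.002603254,  # helium-4
    "Li6": 6.015122887,  # lithium-6
}


def q_value_mev(reactants: list[str], products: list[str]) -> float:
    """Return (mass before - mass after) converted to MeV."""
    mass_before = sum(ATOMIC_MASS_U[name] for name in reactants)
    mass_after = sum(ATOMIC_MASS_U[name] for name in products)
    return (mass_before - mass_after) * MEV_PER_U


# D + T -> He-4 + n: the fusion reaction in the secondary
dt_fusion = q_value_mev(["D", "T"], ["He4", "n"])
# n + Li-6 -> T + He-4: how neutrons breed tritium from lithium-6 deuteride
tritium_breeding = q_value_mev(["n", "Li6"], ["T", "He4"])
print(f"D + T fusion:  {dt_fusion:.2f} MeV")
print(f"n + Li-6:      {tritium_breeding:.2f} MeV")
```

This gives about 17.59 MeV for deuterium-tritium fusion and about 4.78 MeV for the lithium-6 reaction.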

As you can see, making deuterium and tritium fuse together is not easy. Everything has to happen at just the right time, and that takes a huge number of calculations. That is why the very first hydrogen bomb was designed with the aid of a computer called the Mathematical Analyzer Numerical Integrator and Automatic Computer, or MANIAC. The need to design advanced nuclear weapons during the 1950s and 1960s was a major driving force behind the advancement of computer hardware and software during that formative period.

First Light Fusion's Projectile Approach for Building Practical Fusion Reactors

With the information above, let us now explore how researchers at First Light Fusion have been attempting to fuse deuterium and tritium together. But first, we need to understand how their competitors are trying to do the same thing.

Figure 16 – The international ITER reactor, under construction in France, uses magnetic confinement to hold and heat a torus containing 840 cubic meters of deuterium and tritium plasma. The plasma is heated to 150 - 300 million K so that the nuclei collide fast enough to fuse into helium and release a high-energy neutron. ITER weighs 23,000 tons, about three times the weight of the Eiffel Tower, and is designed to produce 500 MW of heat. A thick blanket of metal containing cooling water absorbs the high-energy neutrons and turns their kinetic energy into heat energy. The inner wall of the blanket is made of beryllium, and the remainder is made of copper and stainless steel. The blanket will have to be periodically replaced because, over time, the high-energy neutrons damage the crystalline structure of the blanket metals and weaken them.
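As a rough check on the scale involved, the sketch below estimates how many deuterium-tritium fusions per second it takes to produce ITER's 500 MW, and how much fuel that burns. It assumes 17.6 million eV per fusion and rounded masses of 2.014 u for deuterium and 3.016 u for tritium; it is a back-of-the-envelope estimate, not an ITER design figure.

```python
# Back-of-the-envelope fusion rate and fuel burn for a given fusion power,
# assuming 17.6 MeV per D-T fusion.
EV_TO_JOULES = 1.602176634e-19
GRAMS_PER_U = 1.66053906660e-24
SECONDS_PER_DAY = 86_400
MEV_PER_FUSION = 17.6
DT_MASS_U = 2.014 + 3.016  # one deuteron plus one triton per fusion


def fusions_per_second(power_watts: float) -> float:
    """Number of D-T fusions per second needed to produce power_watts."""
    joules_per_fusion = MEV_PER_FUSION * 1e6 * EV_TO_JOULES
    return power_watts / joules_per_fusion


def fuel_grams_per_day(power_watts: float) -> float:
    """Grams of deuterium plus tritium consumed per day at that power."""
    return fusions_per_second(power_watts) * DT_MASS_U * GRAMS_PER_U * SECONDS_PER_DAY


print(f"{fusions_per_second(500e6):.2e} fusions per second")
print(f"{fuel_grams_per_day(500e6):.0f} grams of D-T fuel per day")
```

That comes to roughly 1.8 x 10^20 fusions per second, but only about 130 grams of fuel per day.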

Figure 17 – The other approach to fusion energy is to blast a tiny capsule of deuterium and tritium with a huge number of very powerful lasers to compress and heat the fuel to the point of fusion.

First Light Fusion is taking a completely different approach that they call Projectile Based Inertial Fusion.

Figure 18 – The Projectile Based Inertial Fusion process periodically fires a very high-velocity projectile at a target containing deuterium and tritium.

Figure 19 – The target is constructed with several cavities containing the deuterium-tritium mixture in such a way as to focus and dramatically amplify the projectile shockwave.

Figure 20 – Above is a sample experimental target containing a number of spherical cavities.

Figure 21 – Above we see the very end of a computer simulation for a target containing three cavities. There is a very large cavity on the left, a very large cavity on the right and a much smaller central cavity between the two.

You can watch this computer simulation unfold in the YouTube video below. The target contains a large cavity on the left and a large cavity on the right. The cavities are a little difficult to see because they are outlined with a very thin dark blue line, but they can be seen if you look closely. Between the two large cavities is a much smaller central cavity. In the video, the impacting projectile strikes the target from above and sets up a downward-traveling shockwave. When the shockwave reaches the left and right cavities, it is focused and dramatically compresses the cavities to nearly zero volume. This causes two jets of high-temperature, high-pressure deuterium-tritium ions to converge into the central cavity just as the central cavity is also compressed by the arriving shockwave. This amplification process concentrates the kinetic energy of the projectile into a tiny region of the target with nearly zero volume. That is how First Light Fusion attains the high temperature and density of deuterium-tritium ions that are necessary for them to fuse.

Cavity Collapse in a Three Cavity Target

https://youtu.be/aTMPigL7FB8&t=0s

The projectile must be fired at the target at a very high velocity around 25 km/sec. First Light Fusion is working on an electromagnetic launcher called M3 to do that.

Figure 22 – Above is First Light Fusion's M3 electromagnetic launcher. The large blue boxes surrounding the central electromagnetic launcher are huge capacitors that are used to store the electrical energy needed to launch a test projectile.

The M3 electromagnetic launcher operates in a manner similar to a railgun.

Homemade Railgun | Magnetic Games

https://www.youtube.com/watch?v=9myr32FgCWQ&t=1s
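For readers who want the physics behind a railgun, the idealized textbook result is that the magnetic force pushing the armature along the rails is F = ½ L′ I², where L′ is the inductance gradient of the rails and I is the current. The Python sketch below uses that formula to estimate a muzzle velocity. All of the numbers (current, inductance gradient, rail length and projectile mass) are hypothetical illustrative values, not parameters of First Light Fusion's M3 launcher, and the model ignores friction, resistive heating and the fact that the current changes during a real shot.

```python
import math


def railgun_force(inductance_gradient_h_per_m: float, current_a: float) -> float:
    """Idealized railgun force in newtons: F = 0.5 * L' * I**2."""
    return 0.5 * inductance_gradient_h_per_m * current_a ** 2


def muzzle_velocity(force_n: float, rail_length_m: float, mass_kg: float) -> float:
    """Speed after a constant force acts over the full rail length.

    The work done (force * distance) becomes kinetic energy (0.5 * m * v**2);
    friction and electrical losses are ignored.
    """
    return math.sqrt(2.0 * force_n * rail_length_m / mass_kg)


# Hypothetical illustrative values, not First Light Fusion's M3 parameters.
L_PRIME_H_PER_M = 0.5e-6   # assumed rail inductance gradient
CURRENT_A = 1.0e6          # assumed one million amperes
force = railgun_force(L_PRIME_H_PER_M, CURRENT_A)
speed = muzzle_velocity(force, rail_length_m=2.0, mass_kg=0.010)
print(f"force: {force:.3g} N, muzzle velocity: {speed / 1000:.1f} km/sec")
```

With these made-up numbers, a 10-gram projectile leaves 2 meters of rail at about 10 km/sec. Because the force grows with the square of the current, doubling the current quadruples the force and doubles the muzzle velocity.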

Figure 23 – In 2021, the United States Navy cancelled its advanced railgun program that was supposed to fire artillery shells from warships instead of using normal gunpowder.

But taking a hint from the United States Navy, in April of 2022, First Light Fusion leapfrogged their M3 electromagnetic launcher research by achieving deuterium-deuterium fusion in one of their advanced targets with a projectile fired from their BFG (Big Friendly Gun), which uses 3 kilograms of gunpowder like a naval artillery gun. The projectile hit the target at 4.5 km/sec and produced high-energy neutrons from the resulting fusion reactions, a result that was validated by the UK Atomic Energy Authority. First Light Fusion achieved this milestone with only $59 million of funding, instead of the billions of dollars spent on the other fusion technologies described above to do the very same thing.
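Kinetic energy grows with the square of speed, so the jump from the 4.5 km/sec of the BFG shot to the roughly 25 km/sec planned for the reactor is much bigger than it looks. The sketch below compares the kinetic energy carried by each kilogram of projectile at those two speeds with the chemical energy in a kilogram of TNT (4.184 million joules, from the 4.184 gigajoules per metric ton used later on). It says nothing about First Light Fusion's actual projectile masses, which are not given here.

```python
# Kinetic energy per kilogram of projectile at a given speed,
# compared with the chemical energy stored in a kilogram of TNT.
TNT_JOULES_PER_KG = 4.184e6  # 4.184 GJ per metric ton of TNT


def kinetic_energy_per_kg(speed_m_per_s: float) -> float:
    """Kinetic energy in joules per kilogram: 0.5 * v**2."""
    return 0.5 * speed_m_per_s ** 2


for label, speed in [("BFG test shot", 4.5e3), ("reactor design", 25e3)]:
    energy = kinetic_energy_per_kg(speed)
    print(f"{label:15s} {speed / 1e3:5.1f} km/sec -> {energy / 1e6:6.1f} MJ/kg "
          f"({energy / TNT_JOULES_PER_KG:.1f} times TNT)")

ratio = kinetic_energy_per_kg(25e3) / kinetic_energy_per_kg(4.5e3)
print(f"25 km/sec carries {ratio:.1f} times the energy per kg of 4.5 km/sec")
```

At 4.5 km/sec, a kilogram of projectile carries about 10 million joules, roughly 2.4 times the energy of a kilogram of TNT. At 25 km/sec it carries about 312 million joules, nearly 75 times as much as TNT and about 31 times as much as at 4.5 km/sec.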

Figure 24 – Above is First Light Fusion CEO Nick Hawker standing in front of the BFG (Big Friendly Gun) that used 3 kilograms of gunpowder to fire a projectile into a target containing deuterium and successfully achieve deuterium-deuterium nuclear fusion.

But how does First Light Fusion plan to put this success to work to produce vast amounts of energy?

Figure 25 – Above is a depiction of First Light Fusion's proposed 150 MWe fusion reactor that would generate 150 MW of electrical power. In order to do that, the fusion reactor must generate about 450 MW of heat because of energy losses brought on by the second law of thermodynamics. The proposed reactor would drop a new deuterium-tritium-bearing target every 30 seconds.

Below is a YouTube video depicting a First Light Fusion target falling into the fusion reactor core. The fusion-initiating projectile is then fired at 25 km/sec behind the falling target which is essentially standing still relative to the high-speed projectile. When the projectile hits the target, it causes the deuterium-tritium mixture to fuse and release 17.6 million eV of kinetic energy per fusion event. That kinetic energy is then absorbed by a one-meter thick curtain of molten lithium metal that is released from above at the same time. The thick curtain of molten lithium metal turns the 17.6 million eV of energy from each fusion event into 17.6 million eV of heat energy. The molten lithium metal does not sustain any damage from the high-speed neutrons because it is a liquid and not a solid. Only the crystalline structure of solids can be damaged by high-speed neutrons.

A falling target is hit by a projectile at 25 km/sec and fuses its deuterium-tritium mixture.

https://youtu.be/JN7lyxC11n0
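How is the 17.6 million eV shared between the two fusion products? If the deuterium and tritium are nearly at rest when they fuse, the helium-4 nucleus and the neutron fly apart with equal and opposite momenta, so the lighter neutron carries most of the kinetic energy. The sketch below applies that nonrelativistic two-body rule; the small initial motion of the fuel is ignored as a simplifying assumption.

```python
# Share of the D-T fusion energy carried by each product, assuming the
# reactants start essentially at rest (equal and opposite product momenta).
NEUTRON_MASS_U = 1.008664916
HELIUM4_MASS_U = 4.002603254
FUSION_ENERGY_MEV = 17.6


def two_body_split(q_mev: float, mass_a: float, mass_b: float) -> tuple[float, float]:
    """Split q_mev between two products with equal momenta.

    Since E = p**2 / (2 m) and both products share the same p, each one's
    energy is inversely proportional to its mass.
    """
    energy_a = q_mev * mass_b / (mass_a + mass_b)
    return energy_a, q_mev - energy_a


neutron_mev, helium_mev = two_body_split(FUSION_ENERGY_MEV, NEUTRON_MASS_U, HELIUM4_MASS_U)
print(f"neutron: {neutron_mev:.1f} MeV, helium-4: {helium_mev:.1f} MeV")
```

The neutron ends up with about 14.1 MeV, roughly 80% of the total, and the helium-4 nucleus with about 3.5 MeV. It is this fast, uncharged neutron that must be slowed down and stopped by a thick absorber such as the molten lithium curtain.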

Below is a YouTube depiction of how First Light Fusion's 150 MWe fusion reactor would operate.

First Light Fusion's Proposed 150 MWe Fusion Reactor Cycle

https://youtu.be/aW4eufacf-8

There are some additional benefits to using molten lithium metal to absorb the energy from the fast-moving fusion neutrons. Remember, when a high-energy neutron collides with lithium-6, it produces tritium, and we need tritium for the fusion reactor fuel. Recall that about 7.59% of natural lithium is lithium-6, and that is plenty of lithium-6 to create the tritium needed for the fusion reactor fuel. Because the tritium would be created in molten liquid lithium, it would just bubble out of the molten lithium and would not get trapped in the crystalline structure of a solid form of lithium metal.
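To put the tritium bookkeeping into numbers, the sketch below estimates the daily fuel budget for a plant producing the 450 MW of heat mentioned above. It assumes that all of that heat comes from deuterium-tritium fusion at 17.6 million eV per reaction (ignoring the extra energy from the lithium-6 reaction itself), that each tritium nucleus burned is replaced by exactly one neutron absorbed in lithium-6, and that lithium-7 plays no part.

```python
# Daily tritium burn and lithium-6 needed to replace it, under the
# simplifying assumptions stated above.
AVOGADRO = 6.02214076e23
EV_TO_JOULES = 1.602176634e-19
SECONDS_PER_DAY = 86_400
TRITIUM_G_PER_MOLE = 3.016
LITHIUM6_G_PER_MOLE = 6.015
NATURAL_LITHIUM_G_PER_MOLE = 6.94
LITHIUM6_ATOM_FRACTION = 0.0759  # 7.59% of natural lithium atoms


def daily_fuel_budget(heat_watts: float, mev_per_fusion: float = 17.6) -> dict[str, float]:
    """Grams per day of tritium burned and lithium consumed to breed it back."""
    joules_per_fusion = mev_per_fusion * 1e6 * EV_TO_JOULES
    fusions_per_day = heat_watts / joules_per_fusion * SECONDS_PER_DAY
    moles = fusions_per_day / AVOGADRO  # moles of tritium burned = Li-6 used
    return {
        "tritium burned": moles * TRITIUM_G_PER_MOLE,
        "lithium-6 used": moles * LITHIUM6_G_PER_MOLE,
        # mass of natural lithium that contains that much lithium-6
        "natural lithium": moles / LITHIUM6_ATOM_FRACTION * NATURAL_LITHIUM_G_PER_MOLE,
    }


for item, grams in daily_fuel_budget(450e6).items():
    print(f"{item:16s} {grams:8.1f} grams per day")
```

The result is only about 69 grams of tritium and about 140 grams of lithium-6 per day, the lithium-6 content of roughly 2 kilograms of natural lithium.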

Also, the melting point of lithium is 180.5 °C and the boiling point of lithium is 1,342 °C. That means the First Light Fusion reactor could be run at a much higher temperature than the cooling water used in a standard pressurized water fission reactor, which is normally run at about 300 °C and a pressure of about 150 atmospheres. The First Light Fusion reactor could run at 700 °C or more. Such a high-temperature reactor could be used for industrial process heat or to run more efficient turbines to generate electricity.
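The reason higher temperatures allow more efficient turbines is the Carnot limit, the maximum fraction of heat that any heat engine can turn into work: 1 - T_cold / T_hot, with temperatures in kelvins. The sketch below compares 300 °C and 700 °C, assuming waste heat is rejected at 30 °C. Real turbines fall well short of these limits, but the limit itself rises with the hot-side temperature.

```python
# Carnot limit on heat-engine efficiency for a given hot-side temperature,
# assuming waste heat is rejected at 30 degrees C.
KELVIN_OFFSET = 273.15


def carnot_efficiency(hot_c: float, cold_c: float = 30.0) -> float:
    """Maximum possible efficiency: 1 - T_cold / T_hot (absolute temperatures)."""
    return 1.0 - (cold_c + KELVIN_OFFSET) / (hot_c + KELVIN_OFFSET)


for hot_c in (300.0, 700.0):
    print(f"{hot_c:.0f} C reactor -> Carnot limit {carnot_efficiency(hot_c):.0%}")
```

The limit rises from about 47% at 300 °C to about 69% at 700 °C.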

Figure 26 – Supercritical CO2 Brayton turbines can be about 8,000 times smaller than traditional Rankine steam turbines. They are also much more efficient.

Since the First Light Fusion reactor could run at 700 °C, instead of 300 °C, we could use Brayton supercritical carbon dioxide turbines instead of Rankine steam turbines. Supercritical CO2 Brayton turbines are about 8,000 times smaller than Rankine steam turbines because the supercritical CO2 working fluid has nearly the density of water. And because First Light Fusion reactors do not need a huge and expensive containment structure, they can be made into small factory-built modular units that can be mass-produced. This allows utilities and industrial plants to easily string together any required capacity. They would also be ideal for ocean-going container ships. Supercritical CO2 Brayton turbines can also reach an efficiency of 47% compared to the 33% efficiency of Rankine steam turbines. The discharge temperature of the supercritical CO2 turbines is also high enough to be used to desalinate seawater, and if a body of water is not available for cooling, the discharge heat of a First Light Fusion reactor could be directly radiated into the air. To watch some supercritical CO2 in action, see:

Thermodynamics - Explaining the Critical Point

https://www.youtube.com/watch?v=RmaJVxafesU#t-1.
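The efficiency figures above translate directly into how much heat a reactor must produce. The sketch below uses the 150 MWe output and the 33% and 47% efficiencies quoted in the text; the 33% case reproduces the roughly 450 MW of heat mentioned for First Light Fusion's proposed plant.

```python
# Heat a reactor must supply, and heat it must reject, to deliver a given
# electrical output at a given turbine efficiency.
def heat_required_mw(electric_mw: float, efficiency: float) -> float:
    """Thermal power in MW needed for electric_mw at the given efficiency."""
    return electric_mw / efficiency


ELECTRIC_MW = 150.0
for turbine, efficiency in [("Rankine steam", 0.33), ("supercritical CO2 Brayton", 0.47)]:
    heat = heat_required_mw(ELECTRIC_MW, efficiency)
    print(f"{turbine:26s} heat {heat:6.1f} MW, waste heat {heat - ELECTRIC_MW:6.1f} MW")
```

At 33%, the plant needs about 455 MW of heat and must dump about 305 MW of waste heat; at 47%, it needs only about 319 MW and dumps about 169 MW.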

Here is an explanation of how this all works from Nick Hawker himself:

Nick's Blog

https://nickhawker.com/

Here is another interesting interview with Nick Hawker about fusion energy in which he explains how it all began for him at the University of Oxford.

Fusion technology will save the world... and soon! | Energy Live News

https://www.youtube.com/watch?v=GSSzlrRonD4

Here is an interesting YouTube video that contrasts nuclear fusion with our current pressurized water fission reactors, which fission uranium-235 and plutonium-239 nuclei. Note that molten salt nuclear reactors can overcome many of the obstacles facing our current fleet of pressurized water fission reactors. For more on that see The Deadly Dangerous Dance of Carbon-Based Intelligence and Last Call for Carbon-Based Intelligence on Planet Earth.

IT HAPPENED! Nuclear Fusion FINALLY Hit The Market!

https://www.youtube.com/watch?v=WiOJSW4rmxM

Finally, Helen Czerski takes us on a tour of First Light Fusion and interviews CEO Nick Hawker:

First Light Fusion: The Future of Electricity Generation and a Clean Base Load? | Fully Charged

https://www.youtube.com/watch?v=M1RsHQCMRTw&t=1s

But is it Safe to Use Little Hydrogen Bombs to Generate Power?

Generating 450 MW of heat means generating 450 million joules of heat energy per second, or 0.450 gigajoules per second. Now if there is a shot every 30 seconds, that means that each target must yield 13.5 gigajoules of energy. Detonating a metric ton of TNT (about 2,200 pounds of TNT) generates 4.184 gigajoules of energy, so each target must yield the energy of 3.23 metric tons of TNT, and that energy must be absorbed by the meter-thick curtain of molten lithium metal. But 3.23 metric tons of TNT is actually a very tiny little hydrogen bomb. When I was a child in the 1950s and 1960s, hydrogen bombs were carried by strategic bombers and were in the range of 10 - 20 megatons of TNT! Now the nuclear warheads on today's strategic missiles are more in the range of about 0.450 megatons. However, the strategic missiles now carry several individually targeted warheads that can pepper an entire metropolitan area all at once.
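The per-shot arithmetic above can be written out as a short Python sketch. In addition to the TNT equivalent, it estimates how much deuterium-tritium fuel each target must actually fuse, assuming that all 13.5 gigajoules come from fusion at 17.6 million eV per reaction (the extra energy from neutrons reacting with the lithium is ignored).

```python
# Energy per shot for a plant producing 450 MW of heat with one shot every
# 30 seconds, its TNT equivalent, and the D-T fuel mass that must fuse.
EV_TO_JOULES = 1.602176634e-19
GRAMS_PER_U = 1.66053906660e-24
TNT_JOULES_PER_METRIC_TON = 4.184e9
DT_MASS_U = 2.014 + 3.016  # one deuteron plus one triton per fusion


def per_shot(heat_watts: float = 450e6, seconds_between_shots: float = 30.0,
             mev_per_fusion: float = 17.6) -> tuple[float, float, float]:
    """Return (joules per shot, metric tons of TNT, milligrams of D-T fused)."""
    joules = heat_watts * seconds_between_shots
    tnt_tons = joules / TNT_JOULES_PER_METRIC_TON
    fusions = joules / (mev_per_fusion * 1e6 * EV_TO_JOULES)
    fuel_mg = fusions * DT_MASS_U * GRAMS_PER_U * 1000.0
    return joules, tnt_tons, fuel_mg


joules, tnt_tons, fuel_mg = per_shot()
print(f"{joules / 1e9:.1f} GJ per shot = {tnt_tons:.2f} metric tons of TNT")
print(f"about {fuel_mg:.0f} mg of deuterium-tritium must fuse per shot")
```

Each shot works out to 13.5 GJ, or about 3.23 metric tons of TNT, released by fusing only about 40 milligrams of deuterium-tritium fuel.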

Now all during the 1950s and early 1960s, great attention was paid in the United States to the matter of civil defense against a possible nuclear strike by the Russians. During those times, the government of the United States essentially admitted that it could not defend the citizens of the United States from a Soviet bomber attack with nuclear weapons, and so it was up to the individual citizens of the United States to prepare for such a nuclear attack.

Figure 27 - During the 1950s, as a very young child, with the beginning of each new school year, I was given a pamphlet by my teacher describing how my father could build an inexpensive fallout shelter in our basement out of cinderblocks and 2x4s.

Figure 28 - But to me, these cheap cinderblock fallout shelters always seemed a bit small for a family of 5, and my parents never bothered to build one because we lived only 25 miles from downtown Chicago.

Figure 29 - For the more affluent, more luxurious accommodations could be constructed for a price.

Figure 30 - But no matter what your socioeconomic level was at the time, all students in the 1950s participated in "duck and cover" drills for a possible Soviet nuclear attack.

Figure 31 - And if you were lucky enough to survive the initial flash and blast of a Russian nuclear weapon with your "duck and cover" maneuver, your school, and all other public buildings, also had a fallout shelter in the basement to help you get through the next two weeks, while the extremely radioactive fission products from the Russian nuclear weapons rapidly decayed away.

Unfortunately, living just 25 miles from downtown Chicago, the second largest city in the United States at the time, meant that the whole Chicagoland area was destined to be targeted by a multitude of overlapping 10 and 20 megaton bombs by the Soviet bomber force, meaning that I would be killed multiple times as my atoms were repeatedly vaporized and carried away in the winds of the Windy City. So as a child of the 1950s and 1960s, I patiently spent my early years just standing by for the directions in this official 1961 CONELRAD Nuclear Attack Message.

Official 1961 Nuclear Attack Message

https://www.youtube.com/watch?v=7iaQMbfazQk

Unfortunately, with Russia's recent invasion of Ukraine, both sides of the New Cold War are now rather cavalierly talking about having a global thermonuclear war, something that nobody ever dared to even speak of back in the 1950s and 1960s. I have not seen things this bad since the Cuban Missile Crisis of October 1962, when I was 11 years old.

But the little hydrogen bombs used by the First Light Fusion reactor are very small indeed and do not fission any uranium-235 or plutonium-239. The radioactive fallout from hydrogen bombs comes from the highly radioactive fission products that are produced when the uranium and plutonium nuclei in the fission primary, the bomb's "spark plug" and the cheap natural uranium casing of the weapon split apart. Most of those fission products rapidly decay and allow people to safely emerge from their fallout shelters after about two weeks. Even so, the survivors would be living with residual radiation levels that would put most antinuclear folks into shock.

The First Light Fusion reactor does none of that. The only radioactive product is a small amount of tritium gas, which is harvested from the molten lithium to be used for the reactor fuel. Tritium decays into helium-3, and when it does, it releases an electron, or beta particle, with an average energy of about 5,700 eV. The picture tubes of old television sets used electrons accelerated to about 25,000 eV to form an image on the screen. The very low-energy beta particles from the decay of tritium can only travel through about 6.0 mm of air and cannot pass through the dead outermost layer of human skin, so they are not of much concern. In the worst-case scenario, the very light tritium gas would simply float away to the upper atmosphere because it is much less dense than air, although it is somewhat denser than the helium gas used for weather balloons and the Goodyear Blimp.
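Because tritium decays, any tritium bred for fuel slowly disappears while it is stored. The sketch below applies the exponential decay law with tritium's half-life of about 12.32 years, a standard tabulated value that is not given in the text.

```python
# Fraction of a stored tritium inventory remaining after a given time,
# using the exponential decay law N/N0 = 2**(-t / half_life).
TRITIUM_HALF_LIFE_YEARS = 12.32


def fraction_remaining(years: float, half_life_years: float = TRITIUM_HALF_LIFE_YEARS) -> float:
    """Fraction of the original tritium left after the given number of years."""
    return 2.0 ** (-years / half_life_years)


print(f"lost in one year:     {1.0 - fraction_remaining(1.0):.1%}")
print(f"left after 10 years:  {fraction_remaining(10.0):.1%}")
```

About 5.5% of a tritium inventory decays away each year, and about 57% is left after 10 years.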

You Cannot Do Such Things Without Hardware and Software

By now you should be able to appreciate the difficulties of trying to build such things using a slide rule. Such things really cannot be done without the benefits of some heavy-duty software running on some heavy-duty hardware. For example, below is the webpage describing the hardware and software that First Light Fusion is using to numerically model what happens to their specially designed targets. As Nick Hawker explained, the real secret to successful projectile fusion is designing and controlling the amplification processes that take place in their proprietary target designs when several cavities containing the deuterium-tritium fuel mixture collapse at very high temperatures and pressures. All of that must be modeled in software first and then physically tested.

First Light Fusion's Numerical Physics

https://firstlightfusion.com/technology/simulations

This brings to mind something I once read about Richard Feynman when he was working on the very first atomic bomb at Los Alamos from 1943 to 1945. He led a group that figured out that they could run several differently colored card decks through a string of IBM unit record processing machines to perform different complex mathematical calculations simultaneously on the same hardware in a multithreaded manner. For more on Richard Feynman see Hierarchiology and the Phenomenon of Self-Organizing Organizational Collapse. Below is the pertinent section extracted from a lecture given by Richard Feynman:

Los Alamos From Below: Reminiscences 1943-1945, by Richard Feynman

http://calteches.library.caltech.edu/34/3/FeynmanLosAlamos.htm

In the extract below, notice the Agile group dynamics at play in the very early days of the Information Revolution.

Well, another kind of problem I worked on was this. We had to do lots of calculations, and we did them on Marchant calculating machines. By the way, just to give you an idea of what Los Alamos was like: We had these Marchant computers - hand calculators with numbers. You push them, and they multiply, divide, add and so on, but not easy like they do now. They were mechanical gadgets, failing often, and they had to be sent back to the factory to be repaired. Pretty soon you were running out of machines. So a few of us started to take the covers off. (We weren't supposed to. The rules read: "You take the covers off, we cannot be responsible...") So we took the covers off and we got a nice series of lessons on how to fix them, and we got better and better at it as we got more and more elaborate repairs. When we got something too complicated, we sent it back to the factory, but we'd do the easy ones and kept the things going. I ended up doing all the computers and there was a guy in the machine shop who took care of typewriters.

Anyway, we decided that the big problem - which was to figure out exactly what happened during the bomb's explosion, so you can figure out exactly how much energy was released and so on - required much more calculating than we were capable of. A rather clever fellow by the name of Stanley Frankel realized that it could possibly be done on IBM machines. The IBM company had machines for business purposes, adding machines called tabulators for listing sums, and a multiplier that you put cards in and it would take two numbers from a card and multiply them. There were also collators and sorters and so on.

Figure 32 - Richard Feynman is describing the IBM Unit Record Processing machines from the 1940s and 1950s. The numerical data to be processed was first punched onto IBM punch cards with something like this IBM 029 keypunch machine from the 1960s.

Figure 33 - Each card could hold a maximum of 80 characters.

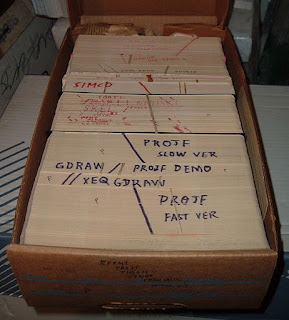

Figure 34 - The cards with numerical data were then bundled into card decks for processing.

The Unit Record Processing machines would then process hundreds of punch cards per minute by routing the punch cards from machine to machine in processing streams.

Figure 35 – The Unit Record Processing machines like this card sorter were programmed by physically rewiring a plugboard.

Figure 36 – The plugboard for a Unit Record Processing machine.

So Frankel figured out a nice program. If we got enough of these machines in a room, we could take the cards and put them through a cycle. Everybody who does numerical calculations now knows exactly what I'm talking about, but this was kind of a new thing then - mass production with machines. We had done things like this on adding machines. Usually you go one step across, doing everything yourself. But this was different - where you go first to the adder, then to the multiplier, then to the adder, and so on. So Frankel designed this system and ordered the machines from the IBM company, because we realized it was a good way of solving our problems.

We needed a man to repair the machines, to keep them going and everything. And the Army was always going to send this fellow they had, but he was always delayed. Now, we always were in a hurry. Everything we did, we tried to do as quickly as possible. In this particular case, we worked out all the numerical steps that the machines were supposed to do - multiply this, and then do this, and subtract that. Then we worked out the program, but we didn't have any machine to test it on. So we set up this room with girls in it. Each one had a Marchant. But she was the multiplier, and she was the adder, and this one cubed, and we had index cards, and all she did was cube this number and send it to the next one.

We went through our cycle this way until we got all the bugs out. Well, it turned out that the speed at which we were able to do it was a hell of a lot faster than the other way, where every single person did all the steps. We got speed with this system that was the predicted speed for the IBM machine. The only difference is that the IBM machines didn't get tired and could work three shifts. But the girls got tired after a while.

Anyway, we got the bugs out during this process, and finally the machines arrived, but not the repairman. These were some of the most complicated machines of the technology of those days, big things that came partially disassembled, with lots of wires and blueprints of what to do. We went down and we put them together, Stan Frankel and I and another fellow, and we had our troubles. Most of the trouble was the big shots coming in all the time and saying, "You're going to break something! "

We put them together, and sometimes they would work, and sometimes they were put together wrong and they didn't work. Finally I was working on some multiplier and I saw a bent part inside, but I was afraid to straighten it because it might snap off - and they were always telling us we were going to bust something irreversibly. When the repairman finally got there, he fixed the machines we hadn't got ready, and everything was going. But he had trouble with the one that I had had trouble with. So after three days he was still working on that one last machine.

I went down, I said, "Oh, I noticed that was bent."

He said, "Oh, of course. That's all there is to it!" Bend! It was all right. So that was it.

Well, Mr. Frankel, who started this program, began to suffer from the computer disease that anybody who works with computers now knows about. It's a very serious disease and it interferes completely with the work. The trouble with computers is you play with them. They are so wonderful. You have these switches - if it's an even number you do this, if it's an odd number you do that - and pretty soon you can do more and more elaborate things if you are clever enough, on one machine.

And so after a while the whole system broke down. Frankel wasn't paying any attention; he wasn't supervising anybody. The system was going very, very slowly - while he was sitting in a room figuring out how to make one tabulator automatically print arctangent X, and then it would start and it would print columns and then bitsi, bitsi, bitsi, and calculate the arc-tangent automatically by integrating as it went along and make a whole table in one operation.

Absolutely useless. We had tables of arc-tangents. But if you've ever worked with computers, you understand the disease -- the delight in being able to see how much you can do. But he got the disease for the first time, the poor fellow who invented the thing.

And so I was asked to stop working on the stuff I was doing in my group and go down and take over the IBM group, and I tried to avoid the disease. And, although they had done only three problems in nine months, I had a very good group.

The real trouble was that no one had ever told these fellows anything. The Army had selected them from all over the country for a thing called Special Engineer Detachment - clever boys from high school who had engineering ability. They sent them up to Los Alamos. They put them in barracks. And they would tell them nothing.

Then they came to work, and what they had to do was work on IBM machines - punching holes, numbers that they didn't understand. Nobody told them what it was. The thing was going very slowly. I said that the first thing there has to be is that these technical guys know what we're doing. Oppenheimer went and talked to the security and got special permission so I could give a nice lecture about what we were doing, and they were all excited: "We're fighting a war! We see what it is!" They knew what the numbers meant. If the pressure came out higher, that meant there was more energy released, and so on and so on. They knew what they were doing.

Complete transformation! They began to invent ways of doing it better. They improved the scheme. They worked at night. They didn't need supervising in the night; they didn't need anything. They understood everything; they invented several of the programs that we used - and so forth.

So my boys really came through, and all that had to be done was to tell them what it was, that's all. As a result, although it took them nine months to do three problems before, we did nine problems in three months, which is nearly ten times as fast.

But one of the secret ways we did our problems was this: The problems consisted of a bunch of cards that had to go through a cycle. First add, then multiply and so it went through the cycle of machines in this room, slowly, as it went around and around. So we figured a way to put a different colored set of cards through a cycle too, but out of phase. We'd do two or three problems at a time.

But this got us into another problem. Near the end of the war for instance, just before we had to make a test in Albuquerque, the question was: How much would be released? We had been calculating the release from various designs, but we hadn't computed for the specific design that was ultimately used. So Bob Christie came down and said, "We would like the results for how this thing is going to work in one month" - or some very short time, like three weeks.

I said, "It's impossible."

He said, "Look, you're putting out nearly two problems a month. It takes only two weeks per problem, or three weeks per problem."

I said, "I know. It really takes much longer to do the problem, but we're doing them in parallel. As they go through, it takes a long time and there's no way to make it go around faster."

So he went out, and I began to think. Is there a way to make it go around faster? What if we did nothing else on the machine, so there was nothing else interfering? I put a challenge to the boys on the blackboard - CAN WE DO IT? They all start yelling, "Yes, we'll work double shifts, we'll work overtime," - all this kind of thing. "We'll try it. We'll try it!"

And so the rule was: All other problems out. Only one problem and just concentrate on this one. So they started to work.

My wife died in Albuquerque, and I had to go down. I borrowed Fuchs' car. He was a friend of mine in the dormitory. He had an automobile. He was using the automobile to take the secrets away, you know, down to Santa Fe. He was the spy. I didn't know that. I borrowed his car to go to Albuquerque. The damn thing got three flat tires on the way. I came back from there, and I went into the room, because I was supposed to be supervising everything, but I couldn't do it for three days.

It was in this mess. There's white cards, there's blue cards, there's yellow cards, and I start to say, "You're not supposed to do more than one problem - only one problem!" They said, "Get out, get out, get out. Wait -- and we'll explain everything."

So I waited, and what happened was this. As the cards went through, sometimes the machine made a mistake, or they put a wrong number in. What we used to have to do when that happened was to go back and do it over again. But they noticed that a mistake made at some point in one cycle only affects the nearby numbers, the next cycle affects the nearby numbers, and so on. It works its way through the pack of cards. If you have 50 cards and you make a mistake at card number 39, it affects 37, 38, and 39. The next, card 36, 37, 38, 39, and 40. The next time it spreads like a disease.

So they found an error back a way, and they got an idea. They would only compute a small deck of 10 cards around the error. And because 10 cards could be put through the machine faster than the deck of 50 cards, they would go rapidly through with this other deck while they continued with the 50 cards with the disease spreading. But the other thing was computing faster, and they would seal it all up and correct it. OK? Very clever.

That was the way those guys worked, really hard, very clever, to get speed. There was no other way. If they had to stop to try to fix it, we'd have lost time. We couldn't have got it. That was what they were doing.

Of course, you know what happened while they were doing that. They found an error in the blue deck. And so they had a yellow deck with a little fewer cards; it was going around faster than the blue deck. Just when they are going crazy - because after they get this straightened out, they have to fix the white deck - the boss comes walking in.

"Leave us alone," they say. So I left them alone and everything came out. We solved the problem in time and that's the way it was.

The above should sound very familiar to most 21st century IT professionals.
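In today's terms, Feynman's colored decks were a form of pipelining: each problem still took just as long to get around the cycle of machines, but several problems could be in flight at once, which is exactly the latency-versus-throughput point he tried to explain to Bob Christie. The Python sketch below is a toy model of that idea, not a reconstruction of the actual Los Alamos setup; the number of machines, the number of cycles and the one-time-step-per-machine rule are all illustrative assumptions.

```python
def run_decks(num_decks: int, num_machines: int, num_cycles: int) -> list[int]:
    """Toy model of card decks cycling through a ring of machines.

    Each deck must visit machines 0, 1, ..., num_machines - 1 in order,
    num_cycles times. Each visit takes one time step, and a machine can work
    on only one deck per step. Deck d starts d steps after deck 0, so the
    decks run out of phase. Returns the step at which each deck finishes.
    """
    if num_decks < 1 or num_machines < 1 or num_cycles < 1:
        raise ValueError("need at least one deck, machine and cycle")
    visits_needed = num_machines * num_cycles
    visits_done = [0] * num_decks
    finished_at = [0] * num_decks  # 0 means "not finished yet"
    step = 0
    while 0 in finished_at:
        busy = set()
        for deck in range(num_decks):
            if finished_at[deck] or step < deck:
                continue  # deck already done, or not started yet
            machine = visits_done[deck] % num_machines
            if machine in busy:
                continue  # machine occupied this step, so the deck waits
            busy.add(machine)
            visits_done[deck] += 1
            if visits_done[deck] == visits_needed:
                finished_at[deck] = step + 1
        step += 1
    return finished_at


# Four machines in the cycle, three trips around the cycle per problem.
print("one problem:   ", run_decks(1, num_machines=4, num_cycles=3))
print("three problems:", run_decks(3, num_machines=4, num_cycles=3))
```

In this model a single problem takes 12 steps. Three problems run out of phase finish at steps 12, 13 and 14, instead of at step 36 if they were run one after another, yet each one individually still needs 12 steps to get around the cycle, just as Feynman told Christie.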

Now Richard Feynman was quite a character, well known among 20th-century physicists for his much-celebrated antics. I have read all of his books, some of them several times. So I was quite surprised when reading:

Surely You're Joking, Mr. Feynman!

by Richard P. Feynman

https://sistemas.fciencias.unam.mx/~compcuantica/RICHARD%20P.%20FEYNMAN-SURELY%20YOU'RE%20JOKING%20MR.%20FEYNMAN.PDF

to find that one of the chapters began with:

"I learned to pick locks from a guy named Leo Lavatelli"

You see, I had Professor Leo Lavatelli for Physics 107 back in 1970, and later Physics 341, at the University of Illinois in Urbana. Both classes were on classical electrodynamics. Professor Leo Lavatelli was a very cool guy but he never once mentioned that he had worked on the very first atomic bomb.

Figure 37 - Professor Leo Lavatelli.

Leo S. Lavatelli (1917-1998)

https://physics.illinois.edu/people/memorials/lavatelli

During World War II, my Dad was a sailor on a tanker filled with 40,000 barrels of 110-octane aviation fuel. He helped to deliver the fuel for the B-29 Enola Gay on Tinian Island. The USS Indianapolis delivered the components of Little Boy, the first atomic bomb used in warfare, to Tinian Island in July of 1945, but shortly afterward, on July 30, 1945, she was torpedoed and sunk by a Japanese submarine. The USS Indianapolis sank in 12 minutes, and of the 1,195 crewmen, only 316 survived, making the sinking of the Indianapolis the greatest loss of life at sea from a single ship in the history of the US Navy. Fortunately, my Dad made it back okay, so I am here to tell the tale. We all owe a lot to the cool guys and gals of the 1940s who made possible a world order based on the rule of law and the fruits of the 17th-century Scientific Revolution and the 18th-century Enlightenment. My only hope is that we can manage to keep it.

Okay, we can all admit that the history of nuclear energy has been a bit sullied by the "real world" of human affairs, especially when it comes to using nuclear energy for the purposes of warfare. But that is not the fault of nuclear energy. That is the fault of ourselves. The important point to keep in mind is that nuclear energy is the only way we can fix the damage that we have all caused to this planet through the disaster of climate change. If it took our foolish obsession with building nuclear weapons of mass destruction to bring forth the technology necessary to save our planet from becoming another Venus, so be it.

Comments are welcome at

scj333@sbcglobal.net

To see all posts on softwarephysics in reverse order go to:

https://softwarephysics.blogspot.com/

Regards,

Steve Johnston
